A Brief Review of Estimation Theory

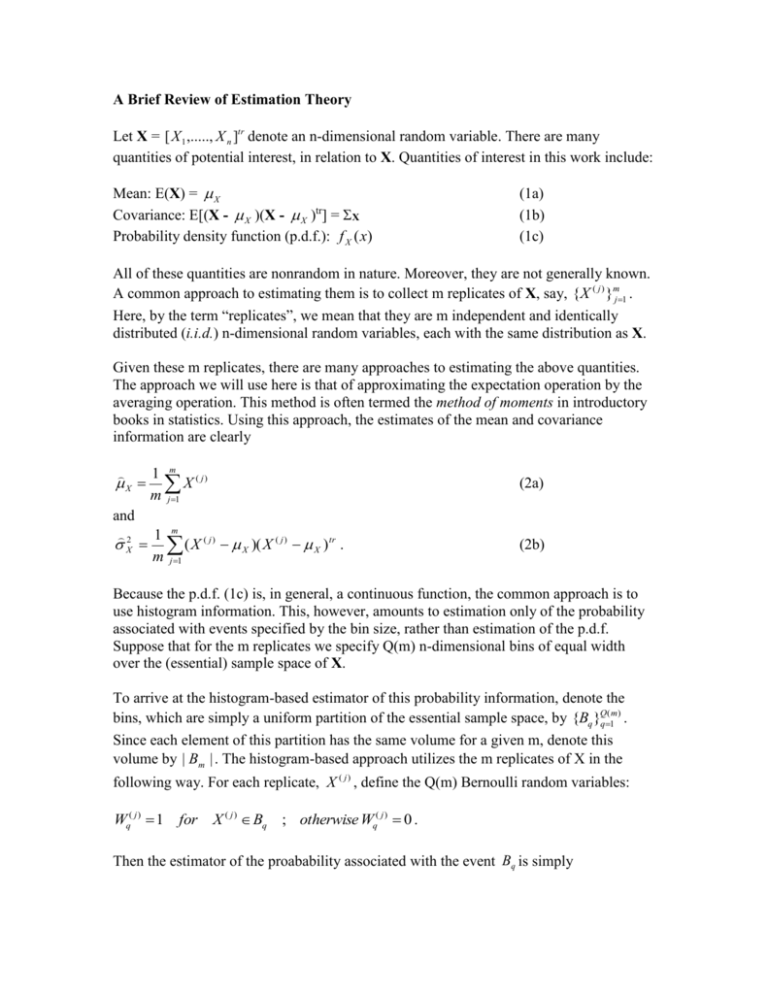

Let $X = [X_1, \ldots, X_n]^{tr}$ denote an n-dimensional random variable. There are many quantities of potential interest in relation to $X$. Quantities of interest in this work include:

Mean:

$$E(X) = \mu_X \tag{1a}$$

Covariance:

$$E[(X - \mu_X)(X - \mu_X)^{tr}] = \Sigma_X \tag{1b}$$

Probability density function (p.d.f.):

$$f_X(x) \tag{1c}$$

All of these quantities are nonrandom in nature. Moreover, they are not generally known. A common approach to estimating them is to collect m replicates of X, say, $\{X^{(j)}\}_{j=1}^{m}$. Here, by the term “replicates”, we mean that they are m independent and identically distributed (i.i.d.) n-dimensional random variables, each with the same distribution as X. Given these m replicates, there are many approaches to estimating the above quantities. The approach we will use here is that of approximating the expectation operation by the averaging operation. This method is often termed the method of moments in introductory books in statistics. Using this approach, the estimates of the mean and covariance information are clearly

$$\hat{\mu}_X = \frac{1}{m}\sum_{j=1}^{m} X^{(j)} \tag{2a}$$

and

$$\hat{\sigma}_X^2 = \frac{1}{m}\sum_{j=1}^{m} (X^{(j)} - \hat{\mu}_X)(X^{(j)} - \hat{\mu}_X)^{tr}. \tag{2b}$$
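As a minimal sketch of the averaging in (2a) and (2b), assuming the replicates are stored as a list of length-n lists, the two estimates could be computed in plain Python as follows. The function names `sample_mean` and `sample_covariance` and the simulated Gaussian data are illustrative assumptions, not part of the original text.

```python
import random

def sample_mean(replicates):
    """Estimate (2a): average the m replicates component by component."""
    m = len(replicates)
    n = len(replicates[0])
    return [sum(x[i] for x in replicates) / m for i in range(n)]

def sample_covariance(replicates):
    """Estimate (2b): average the outer products of the centered replicates.

    Divides by m (not m - 1), as in (2b).
    """
    m = len(replicates)
    n = len(replicates[0])
    mu = sample_mean(replicates)
    cov = [[0.0] * n for _ in range(n)]
    for x in replicates:
        d = [x[i] - mu[i] for i in range(n)]
        for r in range(n):
            for c in range(n):
                cov[r][c] += d[r] * d[c] / m
    return cov

# Hypothetical data: m = 5000 replicates of a 2-dimensional X with independent
# components, means (1, -2) and standard deviations (1, 3).
rng = random.Random(0)
data = [[rng.gauss(1.0, 1.0), rng.gauss(-2.0, 3.0)] for _ in range(5000)]
mu_hat = sample_mean(data)
cov_hat = sample_covariance(data)
print(mu_hat)
print(cov_hat)
```

With this simulated data the printed mean should lie near (1, -2) and the diagonal of the covariance estimate near (1, 9), with off-diagonal entries near zero.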

Because the p.d.f. (1c) is, in general, a continuous function, the common approach is to use histogram information. This, however, amounts to estimation only of the probability associated with events specified by the bin size, rather than estimation of the p.d.f. Suppose that for the m replicates we specify Q(m) n-dimensional bins of equal width over the (essential) sample space of X.

To arrive at the histogram-based estimator of this probability information, denote the bins, which are simply a uniform partition of the essential sample space, by $\{B_q\}_{q=1}^{Q(m)}$. Since each element of this partition has the same volume for a given m, denote this volume by $|B_m|$. The histogram-based approach utilizes the m replicates of X in the following way. For each replicate, $X^{(j)}$, define the Q(m) Bernoulli random variables:

$$W_q^{(j)} = 1 \ \text{ for } \ X^{(j)} \in B_q; \ \text{ otherwise } \ W_q^{(j)} = 0.$$

Then the estimator of the probability associated with the event $B_q$ is simply

$$\hat{f}(B_q) = \Big[\frac{1}{m}\sum_{j=1}^{m} W_q^{(j)}\Big] / |B_m| \tag{2c}$$

Because (2c) is a scaled sum of the m independent Bernoulli random variables $W_q^{(j)}$ defined above, each with the same probability, say, $p_q$, of equaling one (a probability that generally differs from bin to bin), it is a scaled Binomial random variable.
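The construction of (2c) can be sketched for the scalar case (n = 1), where each bin is an interval and the bin volume is simply the bin width. The function name `histogram_estimate`, the interval limits, and the uniform test data are assumptions made for illustration only.

```python
import random

def histogram_estimate(samples, lo, hi, num_bins):
    """Estimate (2c) for scalar X on [lo, hi) split into equal-width bins.

    Returns the list of estimates, one per bin, and the bin width.
    """
    m = len(samples)
    width = (hi - lo) / num_bins      # the common bin volume
    counts = [0] * num_bins           # counts[q] is the sum over j of W_q^(j)
    for x in samples:
        if lo <= x < hi:
            # Guard against floating-point rounding at the upper edge.
            q = min(int((x - lo) / width), num_bins - 1)
            counts[q] += 1
    return [c / (m * width) for c in counts], width

# Hypothetical data: m = 20000 replicates of X uniform on [0, 1), Q = 10 bins.
rng = random.Random(0)
samples = [rng.random() for _ in range(20000)]
f_hat, width = histogram_estimate(samples, 0.0, 1.0, 10)
print(f_hat)
```

For this uniform example every bin has probability 0.1 and width 0.1, so each entry of `f_hat` should be close to 1.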

The estimators of (1), given by (2), all rely on the averaging of m i.i.d. random variables. Regardless of the underlying distribution of these random variables, the Central Limit Theorem states that, under very general conditions, these estimators will behave as normal random variables for sufficiently large m. Since a normal random variable is fully characterized by its mean and variance, it is important to identify these quantities for each of the estimators.

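In relation to the expected values, or means, of these estimators, it is straightforward to show that they are all unbiased, at least for large m. (If (2b) is centered on the estimated mean rather than the true mean, its expected value is (m - 1)/m times the true value, so it is unbiased only asymptotically.) This is an important property, since, as m is allowed to approach infinity, one would hope that their variance would approach zero. In that case, if they were biased, then they would zero in on the wrong value.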

In relation to the variance of these estimators, it is a well-known fact from any introductory course that, for independent random variables, the variance of the sum equals the sum of the variances. It is also well known that the variance of a constant times a random variable equals the constant squared times the variance of the random variable. Applying these facts to (2a) gives

$$\mu_{\hat{\mu}_X} = \mu_X \quad \text{and} \quad \sigma^2_{\hat{\mu}_X} = \sigma_X^2 / m. \tag{3a}$$
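A quick Monte Carlo check of the variance in (3a) can be sketched as follows, assuming a scalar standard normal X. The function name `variance_of_sample_mean` and the choices m = 100 and 2000 repeated experiments are illustrative assumptions.

```python
import random
import statistics

def variance_of_sample_mean(m, trials, sigma, rng):
    """Empirical variance of the estimator (2a) over repeated experiments."""
    means = []
    for _ in range(trials):
        replicates = [rng.gauss(0.0, sigma) for _ in range(m)]
        means.append(statistics.fmean(replicates))
    return statistics.pvariance(means)

rng = random.Random(1)
m, sigma = 100, 1.0
empirical = variance_of_sample_mean(m, trials=2000, sigma=sigma, rng=rng)
predicted = sigma ** 2 / m   # right-hand side of (3a)
print(empirical, predicted)
```

The two printed values should agree to within the sampling error of the 2000 experiments.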

To find the variance of (2b), recall that the square of a unit normal random variable is a Chi-squared random variable with mean equal to one and variance equal to 2. It follows that, for a scalar, normally distributed X, the mean and variance of the estimator (2b) are

$$\mu_{\hat{\sigma}_X^2} = \sigma_X^2 \quad \text{and} \quad \sigma^2_{\hat{\sigma}_X^2} = 2\sigma_X^4 / m. \tag{3b}$$
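The step from the Chi-squared fact to (3b) can be spelled out as follows. This is a sketch under assumptions that the text does not state explicitly: X is scalar and normal, and the deviations are taken about the true mean $\mu_X$. The symbol $Z_j$ is introduced here only for the derivation.

```latex
\begin{aligned}
Z_j &= \frac{X^{(j)} - \mu_X}{\sigma_X} \sim N(0,1),
  \qquad E[Z_j^2] = 1, \quad \mathrm{Var}[Z_j^2] = 2, \\
\hat{\sigma}_X^2 &= \frac{1}{m}\sum_{j=1}^{m} \bigl(X^{(j)} - \mu_X\bigr)^2
  = \frac{\sigma_X^2}{m}\sum_{j=1}^{m} Z_j^2, \\
E[\hat{\sigma}_X^2] &= \frac{\sigma_X^2}{m}\cdot m = \sigma_X^2, \\
\mathrm{Var}[\hat{\sigma}_X^2] &= \frac{\sigma_X^4}{m^2}\cdot 2m = \frac{2\sigma_X^4}{m}.
\end{aligned}
```

If the deviations are instead taken about the estimated mean, the exact values for normal X become $(m-1)\sigma_X^2/m$ and $2(m-1)\sigma_X^4/m^2$, which agree with (3b) for large m.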

Since the mean and variance of a Binomial(m,p) random variable are given by mp and mp(1-p), respectively, the mean and variance of (2c) are given by

$$
\begin{aligned}
\mu_{\hat{f}(B_q)} &= E[\hat{f}(B_q)] = \Big[\frac{1}{m}\sum_{j=1}^{m} E\{W_q^{(j)}\}\Big] / |B_m| = p_q / |B_m| \\
\sigma^2_{\hat{f}(B_q)} &= \mathrm{Var}[\hat{f}(B_q)] = \Big[\frac{1}{m^2}\sum_{j=1}^{m} \mathrm{Var}\{W_q^{(j)}\}\Big] / |B_m|^2 = p_q (1 - p_q) / [m\,|B_m|^2].
\end{aligned}
\tag{3c}
$$
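The variance in (3c) can be turned around to size an experiment. The sketch below assumes that a guess for the bin probability is available; the function names and the numerical values are hypothetical and serve only to illustrate the arithmetic.

```python
import math

def histogram_estimator_variance(p_q, bin_volume, m):
    """Variance of the histogram estimator, as given by (3c)."""
    return p_q * (1.0 - p_q) / (m * bin_volume ** 2)

def replicates_needed(p_q, bin_volume, target_std):
    """Approximately the smallest m whose standard deviation from (3c)
    does not exceed target_std (up to floating-point rounding)."""
    return math.ceil(p_q * (1.0 - p_q) / (bin_volume * target_std) ** 2)

# Hypothetical numbers: guessed bin probability 0.1, bin volume 0.1,
# and a target standard deviation of 0.05 for the estimator.
m_needed = replicates_needed(0.1, 0.1, 0.05)
print(m_needed, histogram_estimator_variance(0.1, 0.1, m_needed))
```

Because the bin probability is itself unknown in practice, the result is only as good as the guess supplied for it.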

The variance expressions in (3) allow us to choose a value of m such that the variances of our estimators are suitably small. However, they require information that is often not available. For example, the variance expressions in (3b) and (3c) require the very information we are trying to estimate. There are various options for dealing with this. One is to simply use the measured values of our estimators. Another is to conduct a large number, say n, of simulations (here n counts simulations, not the dimension of X), and use the sample variance associated with the n measurements of our estimators. In the first case, if we obtain an estimate for a chosen value of m, then we are forced to draw a conclusion based on only this single value. For example, suppose we used m = 100 and arrived at an estimated mean value of 0.01. Suppose further that it was known that the variance of X was 1.0. Then, using the variance expression in (3a), the estimator has standard deviation $\sqrt{1.0/100} = 0.1$, and we could say that the true mean had a 3σ range of 0.01 ± 0.3. But it would be a stretch to then claim that the true mean was zero. For this reason, it is more appropriate to recognize that, for any chosen value of m, we cannot draw such a final conclusion. The solution to this is to choose a sequence of m values approaching infinity, and then, from the trend, deduce what the true mean is; that is, utilize the Central Limit Theorem.
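The idea of following the trend over a sequence of m values can be sketched as follows, using (3a) to attach a 3σ half-width to each estimate. The function name `three_sigma_halfwidth`, the standard normal X (true mean 0, variance 1.0), and the particular m values are assumptions made for illustration.

```python
import math
import random

def three_sigma_halfwidth(variance_x, m):
    """Half-width of the 3-sigma range of the sample mean, using (3a)."""
    return 3.0 * math.sqrt(variance_x / m)

# Worked example from the text: variance 1.0 and m = 100.
print(three_sigma_halfwidth(1.0, 100))

# Hypothetical experiment: estimate the mean for an increasing sequence of m.
rng = random.Random(2)
results = []
for m in (100, 1_000, 10_000, 100_000):
    estimate = sum(rng.gauss(0.0, 1.0) for _ in range(m)) / m
    halfwidth = three_sigma_halfwidth(1.0, m)
    results.append((m, estimate, halfwidth))
    print(m, estimate, halfwidth)
```

Each tenfold increase in m shrinks the half-width by a factor of about 3.16, so the estimates should be seen to settle toward the true mean as m grows.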