Probabilities and Expectations

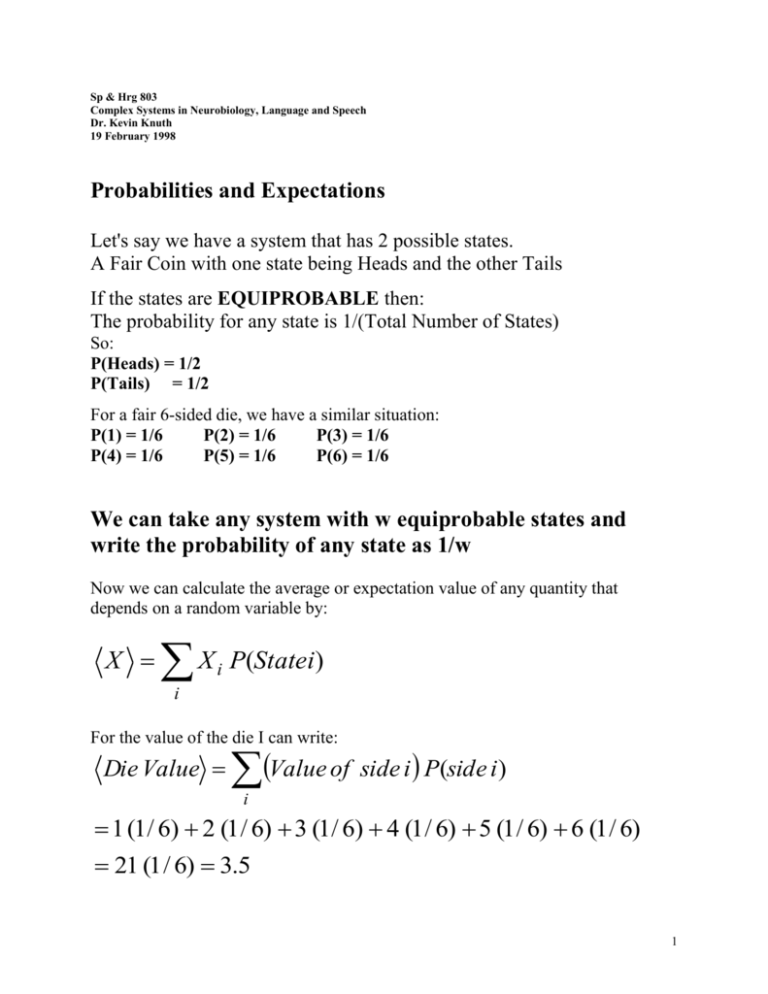

Sp & Hrg 803 Complex Systems in Neurobiology, Language and Speech
Dr. Kevin Knuth
19 February 1998

Probabilities and Expectations

Let's say we have a system that has 2 possible states: a fair coin, with one state being Heads and the other Tails.

If the states are EQUIPROBABLE, then the probability for any state is 1/(Total Number of States). So:

$$P(\text{Heads}) = 1/2, \qquad P(\text{Tails}) = 1/2$$

For a fair 6-sided die, we have a similar situation:

$$P(1) = P(2) = P(3) = P(4) = P(5) = P(6) = 1/6$$

We can take any system with w equiprobable states and write the probability of any state as 1/w.

Now we can calculate the average, or expectation value, of any quantity that depends on a random variable by:

$$\langle X \rangle = \sum_i X_i \, P(\text{State}_i)$$

For the value of the die I can write:

$$
\begin{aligned}
\langle \text{Die Value} \rangle &= \sum_i (\text{Value of side } i) \, P(\text{side } i) \\
&= 1\,(1/6) + 2\,(1/6) + 3\,(1/6) + 4\,(1/6) + 5\,(1/6) + 6\,(1/6) \\
&= 21\,(1/6) = 3.5
\end{aligned}
$$

Entropy and Information

Surprise is expressed as minus the logarithm of the probability of the state. For State i:

$$I(\text{State}_i) = -\log P(\text{State}_i)$$

If a state is very probable, its surprise is small. If a state is not so probable, its surprise is large.

Shannon Information

The Shannon Information, H, is the expectation value of the surprise:

$$H = -\langle \log P_i \rangle = -\sum_{i \,\in\, \text{States}} P_i \log P_i$$

Notice that it is a strange quantity. We are using the probabilities of the states to calculate the average of the logarithm of the probabilities. It is strangely self-referential.

It is also closely related to the Entropy, S, of statistical mechanics:

$$S = k \log w$$

where w is the number of states and k is a constant.

Maximum Entropy and Information

Imagine a system with equiprobable states, 'a' of them to be precise. One can calculate the information in this case. There are 'a' states, so the probability for any state is 1/a:

$$H = -\sum_{i=1}^{a} P_i \log P_i = -\sum_{i=1}^{a} \frac{1}{a} \log \frac{1}{a} = -\log \frac{1}{a} = \log a$$

It can be shown that this is the Maximum Information. It occurs when each state is equiprobable.

We have been talking about abstract states.
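
The quantities defined so far (expectation value, surprise, and Shannon Information) can be checked numerically. What follows is a minimal Python sketch, not part of the original notes: the function names are hypothetical, the natural logarithm is an assumed default since no base has been fixed yet, and the loaded-die probabilities are made up purely for illustration.

```python
import math

def expectation(values, probs):
    """Expectation value: the sum over states of X_i * P(State_i)."""
    return sum(x * p for x, p in zip(values, probs))

def surprise(p, base=math.e):
    """Surprise of a state with probability p: minus the log of p."""
    return -math.log(p, base)

def shannon_information(probs, base=math.e):
    """Expectation value of the surprise: -sum(P_i * log P_i).

    States with P_i == 0 are skipped (assumed convention: 0 * log 0 = 0).
    """
    return sum(p * surprise(p, base) for p in probs if p > 0)

# Fair 6-sided die: w = 6 equiprobable states, each with probability 1/w.
w = 6
die_values = range(1, w + 1)
die_probs = [1 / w] * w

print(expectation(die_values, die_probs))  # close to 3.5
print(shannon_information(die_probs))      # close to log(6)
print(math.log(w))                         # log(w), for comparison

# Illustrative (made-up) loaded die: not equiprobable, so less information.
loaded_probs = [0.5, 0.1, 0.1, 0.1, 0.1, 0.1]
print(shannon_information(loaded_probs) < shannon_information(die_probs))
```

The last comparison illustrates, for one example only, the claim that the equiprobable case gives the maximum information; it is not a proof.
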
We can apply this to real signals if we treat each possible symbol as a state of the system, and describe the message that is sent as the system cycling through its possible states.

The Shannon Information refers not to the information contained within any given message, but to the system's capacity for transmitting messages. It describes the number of POSSIBLE MESSAGES. If the symbols are not equiprobable, the number of possible messages decreases.

Entropy, Information and Guessing Games

We haven't yet specified the base of the logarithm to be used for these calculations. The specific base merely sets the units in which entropy is measured. The most useful base in information theory is Base 2. In this case, the Information is measured in bits.

To convert to a Log Base 2: take the Log Base 10 and divide by 0.30103 (the Log Base 10 of 2). Starting from a natural Log instead, divide by 0.69315 (the natural Log of 2):

$$\log_2 x = \frac{\log_{10} x}{0.30103} = \frac{\ln x}{0.69315}$$

Another way of looking at the information is this: the information measured in bits is the average number of yes-or-no questions that need to be asked to guess the result.

What is the information of a fair coin?

$$
\begin{aligned}
H &= -P(\text{Heads}) \log_2 P(\text{Heads}) - P(\text{Tails}) \log_2 P(\text{Tails}) \\
&= -\log_2 \frac{1}{2} = 1
\end{aligned}
$$

We get one bit of information from a coin. We have to ask 1 yes-or-no question on average to guess the result of the coin toss.

What about for a 6-sided die?
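
The base conversion and the coin calculation can be reproduced in a few lines. This is a hedged Python sketch with hypothetical function names, using only the standard `math` module; it applies the same formula to a fair die, for which the information comes out to Log Base 2 of 6, roughly 2.585 bits.

```python
import math

def log2_from_log10(x):
    """Log base 2 via base 10: divide by log10(2), about 0.30103."""
    return math.log10(x) / math.log10(2)

def log2_from_ln(x):
    """Log base 2 via the natural log: divide by ln(2), about 0.69315."""
    return math.log(x) / math.log(2)

def information_bits(probs):
    """Shannon Information in bits: -sum(P_i * log2 P_i).

    States with P_i == 0 are skipped (assumed convention: 0 * log 0 = 0).
    """
    return -sum(p * math.log2(p) for p in probs if p > 0)

coin = [0.5, 0.5]    # fair coin: 2 equiprobable states
die = [1 / 6] * 6    # fair die: 6 equiprobable states

print(math.log10(2), math.log(2))  # the two conversion constants
print(information_bits(coin))      # 1.0
print(information_bits(die))       # about 2.585, i.e. log2(6)
```

Both conversion helpers agree with `math.log2` up to floating-point rounding, so either constant can be used depending on which logarithm is at hand.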