Mathematical Modeling of Large Data Sets

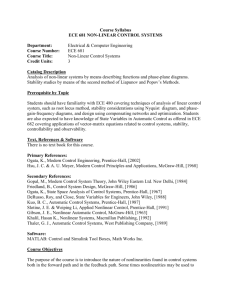

Modified Course Outline

M532 -- Mathematical Modeling of Large Data Sets

Credits: 3

Terms to be offered: Spring

Prerequisites: M369 or M530, and preparedness to program in a standard language.

Course Description

Develop mathematical theory and algorithms for the characterization of structure in large data sets. The notion of the geometry of a data set and representations via subspaces and manifolds. Discussion of linear and nonlinear techniques, global and local transformations, and empirical and analytical methods. Applications include real-world problems such as low-dimensional modeling of physical systems and face recognition.

Instructor

Mathematics faculty.

Possible Texts

1) Geometric Data Analysis, Michael Kirby, Wiley, 2001.
2) Methods of Information Geometry, S. Amari, H. Nagaoka, AMS, 2001.

Additional Class Material

Data sets for applications and additional notes may be provided by the instructor.

Course Objectives

At the end of the course, students will understand the mathematical theory and algorithms useful for representing information in large data sets. They will also understand how to select an appropriate methodology for processing new data, based on a critical understanding of that theory and those algorithms.

Mode of Delivery

M532 will meet twice per week for 1 hour and 15 minutes of traditional lecture.

Methods of Evaluation

The grade in M532 will be based on six problem sets, each consisting of a theoretical part A) and a computing and algorithms part B), and one final capstone project. The problem sets will count for 80% of the grade and the final project for 20%.

Course Topics/Weekly Schedule

The first half of the course will cover linear methods, and the second half will be devoted to nonlinear methods for low-dimensional data representation.

Week 1: Introduction to the notion of geometry in data and the dimensionality reduction problem.
Qualitative description of basic course themes, including:
i) linear and nonlinear mappings
ii) manifolds and subspaces
iii) empirical vs analytical transformations; bilipschitz mappings
iv) local versus global representations; charts and Whitney’s theorem; dimension.

Week 2: The mathematics of the singular value decomposition and its relationship to the construction of projections onto the four fundamental subspaces. Application to data reduction from physical processes, signals and images. Rank and encapsulating dimension.

Week 3: Derivation of optimal projections: entropy, mean-square error, energy. Frobenius and 2-norms. Example applications to face recognition and partial differential equations. Role of symmetry. Optimal Galerkin projections.

Week 4: The geometry of missing data. Low-dimensional reconstruction using the singular value decomposition. Iterative methods and arrays of linear systems.

Week 5: Alternative optimality criteria for low-dimensional projections. Signal Fraction Analysis. Generalized singular value decomposition. Reduction algorithms for pattern classification. Applications to noisy real-world signals such as EEG data.

Week 6: Wavelets I: the continuous wavelet transform in one and two dimensions. Application to digital images, e.g., United States Forest Service data: scale-based quantification of landscape ecology.

Week 7: Wavelets II: the discrete wavelet transform in one and two dimensions. Dyadic grids. Multiresolution analysis. The pyramidal algorithm. Application to signal denoising and data compression.

Week 8: Radial Basis Functions: theory. Interpolation problem. Overdetermined least squares. Micchelli’s theorem.

Week 9: Radial Basis Functions: algorithms and applications. Rank-one updates. Nonlinear optimization. Clustering. Orthogonal least squares.

Week 10: General architectures for nonlinear mappings. Neural networks. Topology-preserving mappings. Nonlinear dimension reduction. Homeomorphisms.
Illustrations with one- and two-dimensional manifolds.

Week 11: Whitney’s theorem. New optimality criteria for projections. Bilipschitz functions as dimension-preserving mappings. Diffeomorphisms.

Week 12: Optimization problems revisited. Constrained optimization. Nonlinear least squares.

Week 13: Local methods. Vector quantization. Empirical charts of an atlas.

Week 14: Local SVD. Scaling laws. Time-delay embedding, dynamical systems methods.

Week 15: Discussion of final projects. Instructor-guided project lab.

Week 16: (Finals week). Student presentations of the final project. The final project challenges students to select techniques learned in the previous 15 weeks to analyze a real-world problem.