
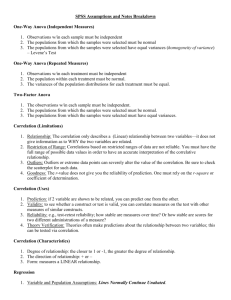

Miscellaneous Regression Notes

1. Variances and Squared Correlations

Squared correlations (of various kinds) always index variance that is shared (or overlapping) between the variables involved in the correlation. Variances of variables, and their overlap, are often illustrated by a Venn diagram. Consider a multiple regression model where Y is the dependent variable (DV), or criterion, and there are two independent variables (IVs), or predictors, denoted by X and Z.

This regression model can be written as follows:

$$Y = b_1X + b_2Z + b_0$$
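A minimal sketch of fitting this two-IV model by ordinary least squares, using only the Python standard library. The function name `fit_two_iv` and the toy data are hypothetical; the closed-form solution simply solves the two normal equations for the centred data.

```python
from statistics import fmean


def fit_two_iv(y, x, z):
    """Ordinary least-squares fit of Y = b1*X + b2*Z + b0; returns (b1, b2, b0)."""
    mx, mz, my = fmean(x), fmean(z), fmean(y)
    # Centred sums of squares and cross-products
    sxx = sum((xi - mx) ** 2 for xi in x)
    szz = sum((zi - mz) ** 2 for zi in z)
    sxz = sum((xi - mx) * (zi - mz) for xi, zi in zip(x, z))
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    szy = sum((zi - mz) * (yi - my) for zi, yi in zip(z, y))
    # Solve the two normal equations by Cramer's rule
    # (det is zero if X and Z are perfectly collinear)
    det = sxx * szz - sxz ** 2
    b1 = (sxy * szz - szy * sxz) / det
    b2 = (szy * sxx - sxy * sxz) / det
    b0 = my - b1 * mx - b2 * mz
    return b1, b2, b0


# Hypothetical noise-free data generated from Y = 2X - 3Z + 1
x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
z = [1.0, 0.0, 2.0, 1.0, 3.0, 2.0]
y = [2 * xi - 3 * zi + 1 for xi, zi in zip(x, z)]
b1, b2, b0 = fit_two_iv(y, x, z)
print(f"b1={b1:.3f}  b2={b2:.3f}  b0={b0:.3f}")  # b1=2.000  b2=-3.000  b0=1.000
```

Because the toy data contain no noise, the fit recovers the generating coefficients exactly (up to floating-point rounding).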

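[Figure: Venn diagram. The square box is the variance of Y; overlapping circles represent X and Z; the regions are labelled A, B, C and D.]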

The total area contained by the square box illustrates the total variance of the DV Y. The areas of the 4 separate area-elements in the figure (labelled A, B, C, and D respectively) therefore reflect parts of the variance of Y. The total variance of Y is thus the sum of the 4 areas, i.e. A+B+C+D. (This can be standardised so that Y has a variance of 1, but we won’t do so here.) The amount of variance in Y that is shared with the IV X is thus B+C, while the amount that is shared with Z is C+D. The amount of variance in Y that is accounted for by the complete regression model is thus B+C+D (we don’t count C twice). A is thus the variance of Y that is not accounted for by the regression model (the bit of the box that is outside both circles). The part of Y’s variance that is uniquely¹ accounted for by X is B, and the bit uniquely accounted for by Z is D. Area C represents Y variance that is accounted for by the regression model, but is variance common to both X and Z. We are now in a position to consider several kinds of correlations in terms of the above figure and the areas A, B, C and D.

¹ “Uniquely” here refers to the context of the specific regression model being considered. So, variance uniquely accounted for by one IV in the model does not overlap with variance accounted for by any of the other IVs in the model. Obviously, other IVs (not included in the model) may overlap with some portion of the variance uniquely accounted for by an IV in the model.

1.1. Simple (Zero-order) Bivariate Correlations. This type of correlation between Y and X can be denoted as $r_{YX}$, where the subscripts denote the variables involved in the correlation. The square of this correlation, $r^2_{YX}$, is equivalent to the variance in Y that overlaps with (is accounted for by) X, expressed as a proportion of the total variance of Y. From the figure, therefore, $r^2_{YX} = (B+C)/(A+B+C+D)$. What is the corresponding ratio of areas for the squared correlation between Y and Z?

1.2. Multiple Correlations from the Model. This correlation is denoted with a capital R. We can denote a set of IVs from a regression model by writing the variables inside a pair of angle brackets, e.g. <XZ>. The multiple R from our model is thus the correlation between Y and <XZ>, and so it might be more informatively written as $R_{Y\langle XZ\rangle}$. (Howell has an alternative notation which I think is likely to lead to confusion, particularly when combined with his notation for partial and semipartial correlations; see below. Howell would write this multiple correlation as $R_{Y.XZ}$.) The squared multiple correlation, SMC or $R^2_{Y\langle XZ\rangle}$, is the proportion of variance in Y that is accounted for by the set of variables <XZ> in the complete regression model. The model F statistic tests $R^2_{Y\langle XZ\rangle}$ to determine whether the model predicts the DV better than would be expected by chance. From the figure, we can see that:

$$R^2_{Y\langle XZ\rangle} = \frac{B+C+D}{A+B+C+D}$$

The proportion of variance in Y that is not explained by the model is called the unexplained (or residual) variance, and this is $1 - R^2_{Y\langle XZ\rangle}$. From the figure, we can see that the proportion of residual Y variance is $A/(A+B+C+D)$. Note the important fact that $R^2_{Y\langle XZ\rangle}$ is NOT equivalent to the sum of the squared pairwise correlations between the DV and each of the IVs. When X and Z overlap, as in the figure (C > 0), it is smaller:

$$R^2_{Y\langle XZ\rangle} < r^2_{YX} + r^2_{YZ}$$

In fact, from the above figure we can see that:

$$R^2_{Y\langle XZ\rangle} = r^2_{YX} + r^2_{YZ} - \frac{C}{A+B+C+D}$$

As already noted, $C/(A+B+C+D)$ is the proportion of Y variance accounted for by the model which is common to both X and Z. This “C” portion of variance is included in the overall model, and thus if it is large the model F statistic may well be significant. However, area C is not included in the t-tests applied to the regression coefficients of the individual IVs in the model. These t-tests assess whether each particular IV uniquely explains an above-chance portion of DV variance. For our model these t-tests address areas B and D respectively (as we will see below). Because of this, the “C” portion can lead to the unusual situation in which the overall model is significantly better than chance, and yet none of the individual IVs uniquely explains any significant amount of DV variance. In this case, it would not be correct to conclude that these IVs explain only a nonsignificant amount of variance in the DV: they may well have a significant zero-order correlation with the DV (and thus explain a significant amount of DV variance).

1.3. Partial and Semipartial Correlations. (Note that SPSS refers to semipartial correlations as part correlations.) From the figure, we can see that $B/(A+B+C+D)$ is the variance in Y that is uniquely explained by X, expressed as a proportion of the total variance of Y. In fact, this proportion of variance is equivalent to the squared semipartial correlation between Y and X after controlling for Z. What ratio of areas in the figure relates to the squared semipartial correlation between Y and Z after controlling for X?

However, we might just as reasonably have decided to express the portion of Y variance uniquely accounted for by X as a proportion of the amount of Y variance that is not accounted for by the rest of the IVs in the model (i.e., Y variance that excludes the portion accounted for by Z). From the figure, this is B/(total Y variance - Y variance explained by Z), that is, $B/([A+B+C+D] - [C+D])$, which simplifies to $B/(A+B)$. This proportion of Y variance is equivalent to the squared partial correlation between Y and X after controlling for Z. What ratio of areas in the figure relates to the squared partial correlation between Y and Z after controlling for X?

It may help to develop a notation for partial and semipartial correlations. We need to be able to write an expression for the variable which results when we partial out, from a variable W, the variance accounted for by another variable V. The resulting variable will be denoted (W.V): the variable to the right of the “dot” is partialled out from the variable to the left of the dot. Thus, the correlation between another variable U and (W.V) will be denoted by $r_{U(W.V)}$. Using this notation, $r_{Y(X.Z)}$ is the semipartial correlation between Y and X after controlling for Z. (Howell uses the identical notation.) In our notation, we might remove from Y the variance accounted for by Z, resulting in the variable (Y.Z). Thus, we could denote a correlation between (Y.Z) and (X.Z) by the term $r_{(Y.Z)(X.Z)}$. This correlation is the partial correlation between Y and X after controlling for Z. (Howell uses a shorter, but potentially ambiguous, notation for partial correlations: he would write $r_{(Y.Z)(X.Z)}$ as $r_{YX.Z}$. I prefer my version.)

There are two final points in this section. First, variables such as (Y.Z) described above can be calculated by finding the residual Y scores left after subtracting the predicted Y scores from the actual Y scores. The predicted Y scores are conventionally denoted by Y with a hat over it ($\hat{Y}$, called “Y hat”). Here $\hat{Y}$ is based on the simple linear regression using Z as the IV (i.e., $\hat{Y} = b_1Z + b_0$), so that $(Y.Z) = Y - \hat{Y}$. Second, imagine we had a multiple regression with 3 IVs: $Y = b_1X + b_2Z + b_3T + b_0$. The partial and semipartial (part) correlations printed out by a stats package such as SPSS would correspond to the correlation between the DV and each IV after partialling out the other 2 IVs (from the IV alone for the semipartial, and from both the DV and the IV for the partial). Thus, for the model above, the semipartial correlation between Y and X would partial out both Z and T. We would write this, using our notation, as $r_{Y(X.\langle ZT\rangle)}$, denoting that the set of variables <ZT> has been collectively partialled out. The equivalent partial correlation would thus be written (in my notation) as $r_{(Y.\langle ZT\rangle)(X.\langle ZT\rangle)}$.