Example 7.2 A pizza shop sells pizzas in four different sizes. The

advertisement

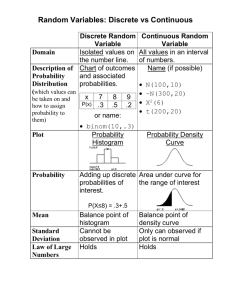

Chapter 7 Population Distributions

7.1 Basic concepts

A population is the entire collection of individuals or objects about which information is desired. In this chapter, we introduce probability models that can be used to describe the distribution of characteristics of individuals in a population.

A variable (random variable) associates a value with each individual or object in a population. A variable may be either categorical or numerical, depending on its possible values. A variable is a categorical variable if each of its values is a category, and a numerical variable if each of its values is a number. A numerical variable is a discrete numerical variable if its possible values are isolated points along the number line, and a continuous numerical variable if its possible values form an interval along the number line.

A population distribution is the distribution of all the values of a numerical variable or of the categories of a categorical variable. A population distribution provides important information about the population.

The population distribution for a categorical variable or a discrete numerical variable can be summarized by a relative frequency histogram or a relative frequency distribution, whereas a density histogram is used to summarize the distribution of a continuous numerical variable.

Example 7.1 Based on past history, a fire station reports that 25% of the calls to the station are false alarms, 60% are for small fires that can be handled by station personnel without outside assistance, and 15% are for major fires that require outside help. The following is a relative frequency histogram that represents the distribution of the variable x = type of call.

[Figure 7.1 Population distribution of x = type of call. Horizontal axis: type of call (false alarms, small fires, major fires); vertical axis: relative frequency.]

Based on the information given, we have P(x = false alarm) = .25.
The mean value of a numerical variable x, denoted by $\mu$, describes where the population distribution of x is centered.

The variance of a numerical variable x, denoted by $\sigma^2$, describes variability in the population distribution. When $\sigma^2$ is close to 0, the values of x in the population tend to be close to the mean value (little variability). When the value of $\sigma^2$ is large, there is more variability in the population of x values.

The standard deviation of a numerical variable x, denoted by $\sigma$, is the positive square root of $\sigma^2$; it also describes variability in the population distribution.

If the population distribution for a variable is known, we can determine the values of $\mu$ and $\sigma$.

For a discrete numerical variable X with distribution

| x | $x_1$ | $x_2$ | … | $x_k$ |
|---|---|---|---|---|
| p(x) | $p(x_1)$ | $p(x_2)$ | … | $p(x_k)$ |

The mean of X: $\mu = x_1 p(x_1) + x_2 p(x_2) + \cdots + x_k p(x_k)$

The variance of X: $\sigma^2 = (x_1 - \mu)^2 p(x_1) + (x_2 - \mu)^2 p(x_2) + \cdots + (x_k - \mu)^2 p(x_k)$

For a continuous numerical variable X with the density function f(x),

The mean of X: $\mu = \int_{-\infty}^{\infty} x f(x)\,dx$.

The variance of X: $\sigma^2 = \int_{-\infty}^{\infty} (x - \mu)^2 f(x)\,dx$.

Example 7.2 A pizza shop sells pizzas in four different sizes. The 1000 most recent orders for a single pizza gave the following proportions for the various sizes.

| Size | 12" | 14" | 16" | 18" |
|---|---|---|---|---|
| Proportion | .20 | .25 | .50 | .05 |

With x denoting the size of a pizza in a single-pizza order, the above table is an approximation to the population distribution of x.

a) Construct a relative frequency histogram to represent the approximate distribution of this variable.
b) Approximate P(x < 16).
c) Approximate P(x ≤ 16).
d) It can be shown that the mean value of x is approximately 14.8". What is the approximate probability that x is within 2" of this mean value?

a) [Figure 7.2 Population distribution of size of a pizza. Horizontal axis: size of pizza in inches (12, 14, 16, 18); vertical axis: relative frequency.]

b) P(x < 16) = P(x = ?) + P(x = ?) = 0.20 + 0.25 = 0.45.

c) P(x ≤ 16) = P(x = ?) + P(x = ?) + P(x = ?) = 0.20 + 0.25 + 0.50 = 0.95.
d) P(x is within 2" of the mean value) = P(14.8 - 2 ≤ x ≤ 14.8 + 2) = P(12.8 ≤ x ≤ 16.8) = P(x = ?) + P(x = ?) = 0.25 + 0.50 = 0.75

Note: The outcome can be described with words or by using a variable name.

7.2 Important discrete distributions (distributions of discrete variables)

(i) Bernoulli distribution

A Bernoulli distribution comes from a Bernoulli trial. A Bernoulli trial is an experiment with two, and only two, possible outcomes. For example, tossing a coin, guessing the answer to a multiple-choice problem, and recording the gender of a person are all Bernoulli trials.

For a Bernoulli trial, it is convenient to label one of the two possible outcomes S (for success) and the other F (for failure). As long as the analysis is consistent with the labeling, it is immaterial which category is assigned the S label. Then we have

| x | S (success) | F (failure) |
|---|---|---|
| Probability (proportion) | $\pi$ | $1 - \pi$ |

Further, we can consider S as 1 and F as 0. Then

| x | 1 | 0 |
|---|---|---|
| Probability (proportion) | $\pi$ | $1 - \pi$ |

Table 7.1 Bernoulli($\pi$) distribution

Mean: $\mu = \pi$
Variance: $\sigma^2 = \pi(1 - \pi)$
Standard deviation: $\sigma = \sqrt{\pi(1 - \pi)}$

(ii) Binomial distribution

Suppose that we repeat a Bernoulli trial with probability of success $\pi$ n times. Let X be the number of successes in the n trials. Then X is called a binomial(n, $\pi$) random variable. Its distribution is called a binomial(n, $\pi$) distribution.

$$P(X = x) = \binom{n}{x} \pi^x (1 - \pi)^{n - x}, \quad x = 0, 1, \ldots, n,$$

where $\binom{n}{x} = \dfrac{n!}{x!\,(n - x)!}$, $m!$ (read "m factorial") $= m(m-1)\cdots 2 \cdot 1$, and $0! = 1$.

Mean: $\mu = n\pi$
Variance: $\sigma^2 = n\pi(1 - \pi)$
Standard deviation: $\sigma = \sqrt{n\pi(1 - \pi)}$

Example 7.3 A student answered 5 multiple-choice problems (each with 4 responses, one of which is correct) by guessing. Let X = the number of correct answers the student got. Find P(X = 2).

Here X has a binomial(?, ?) distribution. Thus

$$P(X = 2) = \frac{5!}{2!\,(5 - 2)!} \left(\frac{1}{4}\right)^2 \left(1 - \frac{1}{4}\right)^{5 - 2} = \;?$$

7.3 Population models for continuous numerical variables

The distribution of a continuous numerical variable can be summarized by a density histogram.
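As a small illustration of how a density histogram is built, the sketch below converts relative frequencies into densities by dividing each one by its interval width. It borrows the 14-interval birth-weight table from Example 7.4 later in this section; the variable names and the helper `prob_between` are illustrative choices for this sketch, not part of the notes.

```python
# Relative frequencies for the 14 half-pound birth-weight intervals
# 3.5-<4.0, 4.0-<4.5, ..., 10.0-<10.5 (taken from Example 7.4).
lower_ends = [3.5 + 0.5 * i for i in range(14)]
rel_freq = [0.004, 0.006, 0.015, 0.025, 0.10, 0.15, 0.17,
            0.20, 0.14, 0.12, 0.02, 0.03, 0.00, 0.02]
width = 0.5

# density = relative frequency / interval width
density = [rf / width for rf in rel_freq]

# Each rectangle's area (density * width) recovers its relative frequency,
# so the rectangle areas add up to 1.
total_area = sum(d * width for d in density)


def prob_between(lo, hi):
    """Add the rectangle areas of the intervals that lie inside [lo, hi)."""
    return sum(d * width
               for start, d in zip(lower_ends, density)
               if lo <= start and start + width <= hi)


print(round(total_area, 6))               # 1.0
print(round(prob_between(6.0, 7.0), 6))   # 0.32
```

The helper only handles ranges whose end points coincide with interval boundaries; a range that cuts through an interval would need the proportional approximation used in Example 7.4.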
Note that in a density histogram,

Area of a rectangle = (height)(interval width)
= (density)(interval width)
= (relative frequency / interval width)(interval width)
= relative frequency.

Thus, the area of the rectangle above each interval is equal to the relative frequency of values that fall in the interval.

Since the area of a rectangle in the density histogram specifies the proportion of the population values that fall in the corresponding interval, it can be interpreted as the probability that a value in the interval would occur if an individual were randomly selected from the population.

The probability of observing a value in an interval other than those used to construct the density histogram can be approximated, and the approximation can be improved by increasing the number of intervals on which the density histogram is based.

Two important ideas

(1) When summarizing a continuous population distribution with a density histogram, the area of any rectangle in the histogram can be interpreted as the probability of observing a variable value in the corresponding interval when an individual is selected at random from the population.

(2) When a density histogram based on a small number of intervals is used to summarize a population distribution for a continuous numerical variable, the histogram can be quite jagged. However, when the number of intervals is increased, the resulting histograms become much smoother in appearance.

Example 7.4 The following relative frequency distribution summarizes birth weight (in pounds) for all full-term babies born during 1995 in a semi-rural county.
| Class interval | Relative frequency | Density |
|---|---|---|
| 3.5 - < 4.5 | 0.01 | 0.01 |
| 4.5 - < 5.5 | 0.04 | 0.04 |
| 5.5 - < 6.5 | 0.25 | 0.25 |
| 6.5 - < 7.5 | 0.37 | 0.37 |
| 7.5 - < 8.5 | 0.26 | 0.26 |
| 8.5 - < 9.5 | 0.05 | 0.05 |
| 9.5 - < 10.5 | 0.02 | 0.02 |

[Figure 7.3 Density histogram for birth weight with 7 intervals. Horizontal axis: birth weight; vertical axis: density.]

Based on the distribution, we can find

P(5.5 ≤ x < 6.5) = area of rectangle above the interval [5.5, 6.5) = (density)(width) = ? × ? = 0.25

We can also approximate the probability of observing a birth weight between 6 and 7 pounds:

P(6 ≤ x < 7) ≈ (½)(area of rectangle for 5.5 - 6.5) + (½)(area of rectangle for 6.5 - 7.5) = (½)(.25)(1) + (½)(.37)(1) = .31

| Class interval | Relative frequency | Density |
|---|---|---|
| 3.5 - < 4.0 | 0.004 | 0.008 |
| 4.0 - < 4.5 | 0.006 | 0.012 |
| 4.5 - < 5.0 | 0.015 | 0.03 |
| 5.0 - < 5.5 | 0.025 | 0.05 |
| 5.5 - < 6.0 | 0.10 | 0.20 |
| 6.0 - < 6.5 | 0.15 | 0.30 |
| 6.5 - < 7.0 | 0.17 | 0.34 |
| 7.0 - < 7.5 | 0.20 | 0.40 |
| 7.5 - < 8.0 | 0.14 | 0.28 |
| 8.0 - < 8.5 | 0.12 | 0.24 |
| 8.5 - < 9.0 | 0.02 | 0.04 |
| 9.0 - < 9.5 | 0.03 | 0.06 |
| 9.5 - < 10.0 | 0.00 | 0.00 |
| 10.0 - < 10.5 | 0.02 | 0.04 |

[Figure 7.4 Density histogram for birth weight with 14 intervals. Horizontal axis: birth weight; vertical axis: density.]

Now

P(6 ≤ x < 7) = area of rectangle for 6.0 - 6.5 + area of rectangle for 6.5 - 7.0 = (?)(?) + (.34)(0.5) = .32

It is often useful to represent a population distribution for a continuous variable by a simple smooth curve that approximates the actual population distribution. Such a curve is called a continuous probability distribution. Since the total area of the rectangles in a density histogram is equal to 1, the total area under the curve must be equal to 1.

A continuous probability distribution is a smooth curve (called a density curve) that serves as a model for the population distribution of a continuous variable.

Properties of continuous probability distributions

1. The total area under the curve is equal to 1.
2. The area under the curve and above any particular interval is interpreted as the probability of observing a value in the corresponding interval when an individual or object is selected at random from the population.
3. For any constant c, P(x = c) = 0.
4. For continuous numerical variables and any particular numbers a and b,
P(x ≤ a) = P(x < a),
P(x ≥ a) = P(x > a),
P(a < x < b) = P(a ≤ x ≤ b).

Question: Do the above results hold for any variable x?

7.4 Important continuous distributions

1) Uniform distributions

A continuous distribution is called the uniform distribution on [a, b] if its density curve is determined by

$$f(x) = \begin{cases} \dfrac{1}{b - a}, & a \le x \le b \\ 0, & \text{otherwise} \end{cases}$$

Mean: $\mu = (a + b)/2$
Variance: $\sigma^2 = (b - a)^2 / 12$
Standard deviation: $\sigma = (b - a)/\sqrt{12}$

Example 7.5 Suppose that x has the uniform distribution on [5, 10]. Find P(x ≤ 6), P(x > 8), and P(7 < x ≤ 8.5).

P(x ≤ 6) = $\frac{1}{10 - 5}$ (? - ?) = 0.2
P(x > 8) = $\frac{1}{10 - 5}$ (? - ?) = 0.4
P(7 < x ≤ 8.5) = $\frac{1}{10 - 5}$ (8.5 - 7) = 0.3

2) Normal distributions

Normal distributions play a central role in a large body of statistics, since
i) Normal distributions and distributions associated with them are very tractable analytically.
ii) Normal distributions can be used to model many populations.
iii) Based on the Central Limit Theorem, normal distributions can be used to approximate a large variety of distributions in large samples.

Normal distributions are continuous, bell-shaped, and symmetric, with the height of the curve decreasing at a well-defined rate when moving from the top of the bell into either tail. Normal distributions are sometimes referred to as normal curves.

There are many different normal distributions, and they are distinguished from one another by their mean $\mu$ and standard deviation $\sigma$. The value of $\mu$ is the number on the measurement axis lying directly below the top of the bell.

The density curve of a normal distribution with mean $\mu$ and standard deviation $\sigma$ is determined by

$$f(x) = \frac{1}{\sqrt{2\pi\sigma^2}}\, e^{-\frac{1}{2\sigma^2}(x - \mu)^2}, \quad -\infty < x < \infty,$$

where $\pi$ here denotes the constant 3.14159….
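To make the normal density formula concrete, here is a minimal sketch that evaluates $f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-(x-\mu)^2/(2\sigma^2)}$ directly and compares it with `statistics.NormalDist` from the Python standard library. The function name `normal_pdf` and the values μ = 100, σ = 6 are assumptions chosen for illustration; the midpoint sum is only a rough check that the total area under the curve is close to 1.

```python
import math
from statistics import NormalDist


def normal_pdf(x, mu, sigma):
    """Height of the normal density curve with mean mu and standard deviation sigma."""
    coeff = 1.0 / math.sqrt(2.0 * math.pi * sigma ** 2)
    return coeff * math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2))


mu, sigma = 100.0, 6.0  # illustrative values, not prescribed by the notes

# The curve is highest directly above the mean.
peak = normal_pdf(mu, mu, sigma)
library_peak = NormalDist(mu, sigma).pdf(mu)

# Midpoint-rule approximation of the area between mu - 8*sigma and mu + 8*sigma.
n = 9600
step = 16.0 * sigma / n
area = sum(normal_pdf(mu - 8.0 * sigma + (i + 0.5) * step, mu, sigma) * step
           for i in range(n))

print(abs(peak - library_peak) < 1e-12)
print(round(area, 6))
```

Cutting the sum off at eight standard deviations on each side is a convenience; the area left out in the tails is far too small to affect six decimal places.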
We denote by $N(\mu, \sigma^2)$ a normal distribution with mean $\mu$ and variance $\sigma^2$.

As with all continuous probability distributions, the total area under any normal curve is equal to 1.

In working with normal distributions, two general skills are required:
1. We must be able to compute probabilities for a normal distribution, which are areas under a normal curve and above given intervals.
2. We must be able to describe extreme values in a normal distribution.

Finding probabilities

1. The standard normal distribution

The standard normal distribution is the normal distribution with $\mu = 0$ and $\sigma = 1$.

It is customary to use the letter z to represent a variable whose distribution is described by the standard normal curve. The term z curve is often used in place of standard normal curve.

For any number $z^*$ between -3.89 and 3.89 and rounded to two decimal places, Appendix Table 2 on pages 706-707 gives

$P(z < z^*) = P(z \le z^*)$ = area under the z curve to the left of $z^*$.

To find this probability, locate:
1) The row labeled with the sign of $z^*$ and the digit to either side of the decimal point (for example, -1.7 or 0.5)
2) The column identified with the second digit to the right of the decimal point in $z^*$ (for example, .06 if $z^*$ = -1.76)

The number at the intersection of this row and column is the desired probability, $P(z < z^*)$.

We can also use the probabilities tabulated in Appendix Table 2 to calculate other probabilities involving z. For example,

P(z > c) = area under the z curve to the right of c = 1 - P(z ≤ c).
P(a < z < b) = area under the z curve and above the interval from a to b = P(z < b) - P(z < a).

Example 7.6 Find the following probabilities: (a) P(z < -1.96); (b) P(z ≤ 0.58); (c) P(z ≤ -4.10); (d) P(z < 3.92); (e) P(-1.76 < z < 0.58); (f) P(-2.00 < z < 2.00); (g) P(0.75 < z < 2.35); (h) P(z > 1.96); (i) P(z > -1.28).
(a) The probability P(z < -1.96) can be found at the intersection of the -1.9 row and the .06 column of the z table: P(z < -1.96) = 0.0250

(b) P(z ≤ 0.58) = ?

(c) Since P(z < -3.89) = .0000 (that is, zero to four decimal places), it follows that P(z < -4.10) ≈ 0.

(d) Since P(z < 3.92) > P(z < 3.89) = 1.0000 (to four decimal places), we conclude that P(z < 3.92) ≈ ?.

(e) P(-1.76 < z < 0.58) = P(z < 0.58) - P(z < -1.76) = .7190 - .0392 = .6798

(f) P(-2.00 < z < 2.00) = P(z < 2.00) - P(z < -2.00) = ? - ? = ?

(g) P(0.75 < z < 2.35) = P(z < 2.35) - P(z < 0.75) = ? - ? = ?

(h) P(z > 1.96) = 1 - P(z ≤ 1.96) = 1 - .9750 = .0250

(i) P(z > -1.28) = 1 - P(z ≤ -1.28) = 1 - ? = ?

2. Other normal distributions

A property of normal distributions: if x has a normal distribution with mean $\mu$ and standard deviation $\sigma$, then $\dfrac{x - \mu}{\sigma}$ has the standard normal distribution.

Our strategy for obtaining probabilities for any normal distribution will be to find an "equivalent" problem for the standard normal distribution. That is, if x is a variable whose behavior is described by a normal distribution with mean $\mu$ and standard deviation $\sigma$, then

$$P(x < b) = P\left(\frac{x - \mu}{\sigma} < \frac{b - \mu}{\sigma}\right) = P\left(z < \frac{b - \mu}{\sigma}\right)$$

$$P(a < x) = P\left(\frac{a - \mu}{\sigma} < \frac{x - \mu}{\sigma}\right) = P\left(\frac{a - \mu}{\sigma} < z\right)$$

$$P(a < x < b) = P\left(\frac{a - \mu}{\sigma} < \frac{x - \mu}{\sigma} < \frac{b - \mu}{\sigma}\right) = P\left(\frac{a - \mu}{\sigma} < z < \frac{b - \mu}{\sigma}\right)$$

where z is a variable whose distribution is standard normal.

Example 7.7 Suppose that x has a normal distribution with mean 100 and standard deviation 6. Find (a) P(x ≤ 88); (b) P(94 < x < 112); (c) P(x > 110); (d) P(x = 102); (e) P(x is within 9 of the mean value).

(a) P(x ≤ 88) = P(z ≤ (88 - 100)/6) = P(z ≤ -2) = 0.0228

(b) P(94 < x < 112) = P((? - ?)/? < z < (? - ?)/?) = P(? < z < ?) = P(z < ?) - P(z ≤ ?) = ? - ? = ?

(c) P(x > 110) = P(z > (? - ?)/?) = P(z > ?) = 1 - P(z ≤ ?) = 1 - ? = ?

(d) P(x = 102) = ?

(e) P(x is within 9 of the mean value) = P(? < x < ?) = P(? < z < ?) = ? - ? = ?

Identifying extreme values

1. The standard normal distribution

Let's now see how we can identify extreme values in the standard normal distribution.

(1) The smallest extreme values.
Suppose we want to describe the values that make up the smallest 2% of the standard normal distribution. Symbolically, we are trying to find a value (call it $z^*$) such that

$P(z < z^*) = .02$

Question: What percentile is $z^*$?

We look for a cumulative area of .0200 in the body of Appendix Table 2. The closest cumulative area in the table is .0202, in the -2.0 row and .05 column, so we will use $z^*$ = -2.05, the best approximation from the table. The values less than -2.05 make up the smallest 2% of the standard normal distribution.

(2) The largest extreme values.

Suppose that we had been interested in the largest 5% of all z values. We would then be trying to find a value of $z^*$ such that

$P(z > z^*) = .05$.

Since Appendix Table 2 always works with cumulative area (area to the left), the first step is to determine

area to the left of $z^*$ = 1 - .05 = .95

Looking for the cumulative area closest to .95 in Table 2, we find that .95 falls exactly halfway between .9495 (corresponding to a z value of 1.64) and .9505 (corresponding to a z value of 1.65). Since .9500 is exactly halfway between the two areas, we will use a z value that is halfway between 1.64 and 1.65. This gives

$z^*$ = (1.64 + 1.65)/2 = 1.645

The largest 5% are those values greater than 1.645.

(3) The most extreme values.

Sometimes we are interested in identifying the most extreme (unusually large or small) values in a distribution. For example, describe the values that make up the most extreme 5% of the standard normal distribution. We would be trying to find a value of $z^*$ such that

$P(z < -z^* \text{ or } z > z^*) = .05$.

Since the standard normal distribution is symmetric, the most extreme 5% would be equally divided between the high side and the low side of the distribution, resulting in an area of .025 for each of the tails of the z curve.
To find $z^*$, first determine the cumulative area for $z^*$, which is

area to the left of $z^*$ = 1 - (0.05)/2 = .975

The cumulative area .9750 appears in the 1.9 row and .06 column of Table 2, so $z^*$ = 1.96. For the standard normal distribution, 95% of the variable values fall between -1.96 and 1.96; the most extreme 5% are those values that are either greater than 1.96 or less than -1.96.

2. Other normal distributions

To describe the extreme values for a normal distribution with mean $\mu$ and standard deviation $\sigma$, we first solve the corresponding problem for the standard normal distribution and then translate our answer into one for the normal distribution of interest. The general steps are:

(1) Solve the corresponding problem for N(0, 1) to find $z^*$.
(2) Convert $z^*$ back to $x^*$ for $N(\mu, \sigma^2)$ by $x^* = \mu + z^*\sigma$ (equivalently, $(x^* - \mu)/\sigma = z^*$).

Example 7.8 Let x be the amount of oxides of nitrogen emitted by a randomly selected vehicle. Suppose that x has a normal distribution with $\mu$ = 1.6 and $\sigma$ = .4. What emissions levels constitute the worst 10% of the vehicles?

First we find $z^*$ such that $P(z > z^*)$ = 10%. From Table 2, we see that $z^*$ = 1.28. Then

$x^* = \mu + z^*\sigma$ = 1.6 + 1.28(.4) = 2.112

The vehicles with emissions levels greater than 2.112 constitute the worst 10% of the vehicles.

Exercise in class: Suppose that x has a normal distribution with mean 20 and standard deviation 5. Find (a) the smallest 20% of all x values; (b) the largest 20% of all x values; (c) the most extreme 20% of all x values.

3) t distributions

A continuous distribution is called the t distribution if its density curve is determined by

$$f(x) = \frac{\Gamma((d + 1)/2)}{\sqrt{\pi d}\;\Gamma(d/2)} \cdot \frac{1}{(1 + x^2/d)^{(d + 1)/2}}, \quad -\infty < x < \infty,$$

where d is a positive whole number called the number of degrees of freedom (df).

t distributions are distinguished by the number of degrees of freedom. The variable that has the t distribution with d degrees of freedom is denoted by $t_d$.

Mean: $\mu = 0$, d > 1.
Variance: $\sigma^2 = \dfrac{d}{d - 2}$, d > 2.
Standard deviation: $\sigma = \sqrt{\dfrac{d}{d - 2}}$, d > 2.
Important properties of t distributions

1. The t curve corresponding to any fixed number of degrees of freedom is continuous, bell-shaped, symmetric, and centered at zero (just like the standard normal (z) curve).
2. Each t curve is more spread out than the z curve.
3. As the number of degrees of freedom increases, the spread of the corresponding t curve decreases.
4. As the number of degrees of freedom increases, the corresponding sequence of t curves approaches the z curve.

We can find probabilities related to a t distribution by using Appendix Table 4 on pages 709-711.

Note: Appendix Table 4 jumps from 30 df to 35 df, then to 40 df, then to 60 df, then to 120 df, and finally to the column of z probabilities. If a number of degrees of freedom is between those tabulated, we just use the probabilities for the closest df. For df > 120, we use the z probabilities (the last column).

Example 7.9 Find (1) $P(t_8 > 1.6)$; (2) $P(t_{20} \le 0.6)$; (3) $P(t_{10} \le -0.6)$; (4) $P(-0.5 < t_{38} < 1.0)$; (5) $P(t_{121} < -0.8)$.

(1) The probability $P(t_8 > 1.6)$ can be found at the intersection of the 1.6 row and the 8 df column of the t table: $P(t_8 > 1.6)$ = .074

(2) $P(t_{20} \le 0.6) = 1 - P(t_{20} > 0.6)$ = 1 - ? = ?

(3) By symmetry, $P(t_{10} \le -0.6) = P(t_{10} > 0.6)$ = 0.281

(4) The closest tabulated df to 38 is 40, thus
$P(-0.5 < t_{38} < 1.0) \approx P(-0.5 < t_{40} < 1.0) = P(t_{40} < 1.0) - P(t_{40} < -0.5) = [1 - P(t_{40} \ge 1.0)] - P(t_{40} > 0.5)$ = [1 - ?] - ? = ? - ? = ?

(5) Since 121 > 120, we use the last column to find the probability, that is,
$P(t_{121} < -0.8) \approx P(z < -0.8) = P(z > 0.8)$ = ?.
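Table lookups such as those in Example 7.9 can be cross-checked numerically. The sketch below is one possible approach using only the Python standard library: it evaluates the t density with `math.lgamma` and approximates the upper-tail area with Simpson's rule. The function names `t_pdf` and `t_upper_tail` and the number of subintervals are choices made for this illustration. Because Appendix Table 4 is rounded and substitutes the closest tabulated df, values computed this way may differ slightly from the table.

```python
import math


def t_pdf(x, d):
    """Density of the t distribution with d degrees of freedom."""
    log_const = (math.lgamma((d + 1) / 2) - math.lgamma(d / 2)
                 - 0.5 * math.log(math.pi * d))
    return math.exp(log_const) * (1 + x * x / d) ** (-(d + 1) / 2)


def t_upper_tail(t, d, n=2000):
    """Approximate P(t_d > t); n is the (even) number of Simpson subintervals."""
    if t < 0:
        # Symmetry about zero: P(t_d > t) = 1 - P(t_d > -t).
        return 1.0 - t_upper_tail(-t, d, n)
    if t == 0:
        return 0.5
    h = t / n
    total = t_pdf(0.0, d) + t_pdf(t, d)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * t_pdf(i * h, d)
    area_0_to_t = total * h / 3
    # Half of the total area lies to the right of zero.
    return 0.5 - area_0_to_t


print(round(t_upper_tail(1.6, 8), 3))  # 0.074, matching part (1)
```

A lower-tail probability such as $P(t_{10} \le -0.6)$ follows from the same function by symmetry, as `t_upper_tail(0.6, 10)`.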