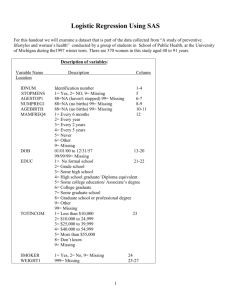

Logistic Regression Model Fitting

advertisement

LOGISTIC REGRESSION, POISSON

REGRESSION AND GENERALIZED

LINEAR MODELS

We have introduced that a continuous response, Y, could depend on continuous or

discrete variables X1, X2,… Xp-1. However, dichotomous (binary) outcome is most

common situation in biology and epidemiology.

Example:

In a longitudinal study of

coronary heart disease as a

function of age, the

response variable Y was

defined to have the two

possible outcomes: person

developed heart diease

during the study, person did

not develop heart disease

during the study. These

outcomes may be coded 1

and 0, respectively.

Logistic regression

Age and signs of coronary heart disease (CD)

Age

CD

Age

CD

Age

CD

22

23

24

27

28

30

30

32

33

35

38

0

0

0

0

0

0

0

0

0

1

0

40

41

46

47

48

49

49

50

51

51

52

0

1

0

0

0

1

0

1

0

1

0

54

55

58

60

60

62

65

67

71

77

81

0

1

1

1

0

1

1

1

1

1

1

Prevalence (%) of signs of CD

according to age group

D iseased

Age group

# in group

#

%

20 -29

5

0

0

30 - 39

6

1

17

40 - 49

7

2

29

50 - 59

7

4

57

60 - 69

5

4

80

70 - 79

2

2

100

80 - 89

1

1

100

100

80

Diseased %

60

40

20

0

0

1

2

3

4

5

6

7

Age (years)

1

The simple linear regression model

Yi=0+1Xi+i

Yi=0,1

Special Problems When Response

Variable Is Binary

The response function

1. Nonnormal Error Terms

E{Yi}=0+1Xi

When Yi=1: i =1-0-1Xi

We view Yi as a random variable with a

Bernoulli distribution with parameter I

When Yi=0: i =-0-1Xi

Yi

Prob(Yi)

1

P(Yi=1)= i

0

P(Yi=0)= 1-i

P(Yi=k)=

ik (1 i )1k

Can we assume i are normally distributed?

2. Nonconstant Error Variance

2{i}= (0+1Xi)(1-0-1Xi)

ordinary least squares is no longer optimal

3. Constraints on Response Function

, k=0,1

0E{Yi}1

E{Yi}=1*i+0*(1-i)= i

The logistic function

What does E{Yi} mean?

E{Yi}=0+1Xi = i

Probability of

disease

1.0

P(y 1)

0.8

E{Yi} is the probability that Yi=1 when

then level of the predictor variable is Xi .

This interpretation applies whether the

response function is a simple linear one,

as shown above, or a complex multiple

regression one.

eβ0β1x

1 eβ0β1x

0.6

0.4

0.2

0.0

x

Both theoretical and empirical results suggest

that when the response variable is binary, the

shape of the response function is either as

a tilted S or as a reverse tilted S.

The logistic function

P(y 1)

e β0 β1x

1 e β0 β1x

i

P( y 1)

ln

ln

1 i

1 P( y 1)

log it function

0 1 x

2

Simple Logistic Regression

1. Model: Yi=E{ Yi }+i

Where, Yi are independent Bernoulli random variables with

i

0 1 x

1 i

exp( X )

E{Yi}=i= 1 exp(0 1 Xi ) ln

0

1 i

2. How to estimate 0 and 1?

log it function

a. Likelihood Function:

Since the Yi observations are independent, their joint

probability function is:

n

g(Y1 ,..., Yn ) iYi (1 i )1Yi

i 1

The logarithm of the joint probability function (log-likelihood

function):

L(β 0 , β1 ) log e g(Y1 ,..., Yn )

n

[Yi log e (

i 1

n

πi

)] log e (1 π i )

1 πi

i 1

n

n

i 1

i 1

Yi (β 0 β1X i ) log e [1 exp(β 0 β1X i )]

3

b. Maximum Likelihood Estimation:

Log-likelihood

Maximum Likelihood Estimation

̂1

̂ 2

The maximum likelihood estimates of 0 and 1 in the simple

logistic regression model are those values of 0 and 1 that

maximize the log-likelihood function. However, no closed-form

solution exists for the values of 0 and 1 that maximize the loglikelihood function. Several Computer-intensive numerical search

procedures are widely used to find the maximum likelihood

estimates b0 and b1. We shall rely on standard statistical software

programs specifically designed for logistic regression to obtain the

maximum likelihood estimates b0 and b1.

3. Fitted Logit Response Function

ˆ

log e ( i ) b 0 b1Xi

1 ˆ i

4

4. Interpretation of b1

ˆ

log e (

) b 0 b1X

1 ˆ

when X=Xj,

when X=Xj+1,

OR

odds 1

ˆ X j

1 ˆ X j

odds 2

e

b 0 b1X j

ˆ X j 1

1 ˆ X j 1

e

b 0 b1 ( X j 1)

odds 1

e b1 log e OR=b1

odds 2

b1=increase in log-odds for a one unite increase in X

Assumption

Pi

Logit

Transform

Predictor

Predictor

5

Example:

Y = 1 if the task was finished

0 if the task wasn’t finished

X = months of programming

experience

Person

i

Months of

Experience

Xi

Task

Success

Yi

Fitted

Value

̂ i

Deviance

Residual

Devi

14

29

6

.

.

.

28

22

8

0

0

0

.

.

.

1

1

1

0.31

0.835

0.110

.

.

.

0.812

0.621

0.146

-.862

-1.899

-.483

.

.

.

.646

.976

1.962

1

2

3

.

.

.

23

24

25

SAS CODE:

SAS OUPUT:

proc logistic data = ch14ta01 ;

model y (event='1') = x ;

The LOGISTIC Procedure

run;

Notice that we can specify which event to

model using the event = option in the

model statement. The other way of

specifying that we want to model 1 as

event instead of 0 is to use the

descending option in the proc logistic

statement.

Analysis of Maximum Likelihood Estimates

Parameter

DF

Estimate

Error

Standard

Chi-Square

Wald

Pr > ChiSq

Intercept

x

1

1

-3.0597

0.1615

1.2594

0.0650

5.9029

6.1760

0.0151

0.0129

Odds Ratio Estimates

Effect

x

Point

Estimate

1.175

95% Wald

Confidence Limits

1.035

1.335

How to use the output to calculate

̂1 ? How to interpret

̂1 =0.31?

Interpretation of Odds Ratio Interpretation of b1

OR=1.175 means that the odds

of completing the task increase by

17.5 percent with each additional

month of experience.

b1=0.1615 means that the log-odds of

completing the task increase

0.1615 with each additional month

of experience.

4. Repeat Observations-Binomial Outcomes

6

In some cases, particularly for designed experiments, a number of repeat

observations are obtained at several levels of the predictor variable X.

For example, in a study of the effectiveness of coupons offering a price

reduction on a given product, 1000 homes were selected at random. The

coupons offered different price reductions (5,10,15,20 and 30 dollars),

and 200 homes werej assigned at random to each of the price reduction

categories.

Level

Price

Reduction

Number of

Households

j

1

2

3

4

5

Xj

5

10

15

20

30

nj

200

200

200

200

200

1

Yij

0

nj

Y. j Yij

i 1

Number of

Coupons

Redeemed

Y..j

30

55

70

100

137

Proportion of

Coupons

Redeemed

pj

.150

.275

.350

.500

.685

ith household redeemed coupons at level X j

̂ j

.1736

.2543

.3562

.4731

.7028

i 1,..., n j ; j 1,2,3,4,5

ith household not redeemed coupons at level X j

pj

MondelBased

Estimate

Y. j

nj

The random variable Y.j has a binomial distributi on given by :

n Y

n Y

f(Y.j ) j j . j (1 j ) j . j

Y. j

The log - likelihood function :

where

n j!

nj

Y. j Y !(n Y )!

.j

j

.j

c

nj

log e L(0 , 1 ) log e Y. j (0 1X j ) n j log e [1 exp(0 1X j )]}

j1

Y. j

SAS CODE:

data ch14ta02;

infile 'c:\stat231B06\ch14ta02.txt';

input x n y pro;

proc logistic data=ch14ta02;

model y/n=x;

/*request estimates of the predicted*/

/*values to be stored in a file named */

/*estimates under the variable name pie*/

output out=estimates p=pie;

run;

SAS OUTPUT:

The LOGISTIC Procedure

Analysis of Maximum Likelihood Estimates

Parameter

DF

Estimate

Standard

Error

Wald

Chi-Square

Pr > ChiSq

Intercept

x

1

1

-2.0443

0.0968

0.1610

0.00855

161.2794

128.2924

<.0001

<.0001

Odds Ratio Estimates

Point

Estimate

Effect

x

Obs

1

2

3

4

5

95% Wald

Confidence Limits

1.102

x

5

10

15

20

30

1.083

n

y

pro

200

200

200

200

200

30

55

70

100

137

0.150

0.275

0.350

0.500

0.685

1.120

pie

0.17362

0.25426

0.35621

0.47311

0.70280

proc print data=estimate;

run;

Multiple Logistic Regression

7

1. Model: Yi=E{ Yi }+i

Where, Yi are independent Bernoulli random variables with

exp( X 'i )

E{Yi}=i= 1 exp( X' )

i

ln i X 'i

i

1

2. How to estimate the vector ?

n

n

i 1

i 1

log it function

log e L() Yi ( X 'i ) log e [1 exp( X 'i )]

3. Fitted Logit Response Function

ˆ

log e ( i ) X'i b

1 ˆ i

Example:

1 disease present

Y

0 disease absent

X1 Age

1 Middle Class

X2

others

0

socioeconomic status

1 Lower Class

X3

others

0

Case

i

City

Sector

Xi4

Disease

Status

Yi

Fitted

Value

π̂ i

1

2

3

4

5

6

.

33

35

6

60

18

26

.

0

0

0

0

0

0

.

0

0

0

0

1

1

0

0

0

0

0

0

.

0

0

0

0

1

0

.

98

35

0

1

0

0

.171

SAS OUTPUT:

Analysis of Maximum Likelihood Estimates

Study purpose: assess the strength of the

association between each of the predictor

variables and the probability of a person having

contracted the disease

data ch14ta03;

infile 'c:\stat231B06\ch14ta03.txt'

DELIMITER='09'x;

input case x1 x2 x3 x4 y;

proc logistic data=ch14ta03;

model y (event='1')=x1 x2 x3 x4;

run;

Socioeconomic

Status

Xi2

Xi3

.209

.219

.106

.371

.111

.136

.

1 city sector 2

X4

0 city sector 1

SAS CODE:

Age

Xi1

Parameter

DF

Estimate

Standard

Error

Wald

Chi-Square

Pr > ChiSq

Intercept

x1

x2

x3

x4

1

1

1

1

1

-2.3127

0.0297

0.4088

-0.3051

1.5746

0.6426

0.0135

0.5990

0.6041

0.5016

12.9545

4.8535

0.4657

0.2551

9.8543

0.0003

0.0276

0.4950

0.6135

0.0017

Odds Ratio Estimates

Effect

x1

x2

x3

x4

Point

Estimate

1.030

1.505

0.737

4.829

95% Wald

Confidence Limits

1.003

0.465

0.226

1.807

1.058

4.868

2.408

12.907

The odds of a person having contracted the disease

increase by about 3.0 percent with each additional year of

age (X1), for given socioeconomic status and city sector

location. The odds of a person in section 2 (X4) having

contracted the disease are almost five times as great as

for a person in sector 1, for given age and socioeconomic

status.

Polynomial Logistic Regression

8

1. Model: Yi=E{ Yi }+i

Where, Yi are independent Bernoulli random variables with

exp( X 'i )

E{Yi}=i= 1 exp( X' )

i

π

ln i X 'i β 0 β11x β 22 x 2 β kk x k

πi

1

logit function

Where x denotes the centered predictor, X- X .

9

Example:

1 IPO was financed by venture capital funds

Y

0 IPO wasn't financed by venture capital funds

E s t i ma t e d P r o b a b i l i t y

1. 0

0. 9

0. 8

0. 7

X1 the face value of the company

0. 6

0. 5

Study purpose: determine the characteristics of companies that

attract venture capital.

0. 4

0. 3

SAS CODE:

0. 2

0. 1

data ipo;

infile 'c:\stat231B06\appenc11.txt';

input case vc faceval shares x3;

lnface=LOG(faceval);

run;

0. 0

13

14

15

16

17

18

19

20

l nf ace

E s t i ma t e d P r o b a b i l i t y

1. 0

* Run 1st order logistic regression analysis;

proc logistic data=ipo descending;

model vc=lnface;

output out=linear p=linpie;

run;

* produce scatterplot and fitted 1st order

logistic;

data graph1;

set linear;

run;

proc sort data=graph1;

by lnface;

run;

proc gplot data=graph1;

symbol1 color=black value=none interpol=join;

symbol2 color=black value=circle;

title'Scatter Plot and 1st Order Logit Curve';

plot linpie*lnface vc*lnface/overlay;

/* /overlay means to overlay the two graph*/

run;

*Find mean of lnface=16.7088;

proc means;

var lnface;

run;

* Run 2st order logistic regression analysis;

data step2;

set linear;

xcnt=lnface-16.708;

xcnt2=xcnt**2;

run;

proc logistic data=step2 descending;

model vc=xcnt xcnt2;

output out=estimates p=pie;

run;

0. 9

0. 8

0. 7

0. 6

0. 5

0. 4

0. 3

0. 2

0. 1

0. 0

- 3

- 2

- 1

0

1

2

3

xcnt

1.

The natural logarithm of face value is

chosen because face value ranges

over several orders of magnitude,

with a highly skewed distribution)

2.

The lowess smooth clearly suggests

a mound-shaped relationship.

SAS OUTOUT

:

The LOGISTIC Procedure

Analysis of Maximum Likelihood Estimates

Parameter

DF

Estimate

Standard

Error

Wald

Chi-Square

Pr > ChiSq

Intercept

xcnt

xcnt2

1

1

1

0.3000

0.5530

-0.8615

0.1240

0.1385

0.1404

5.8566

15.9407

37.6504

0.0155

<.0001

<.0001

Odds Ratio Estimates

Effect

Point

Estimate

95% Wald

Confidence Limits

xcnt

1.739

1.325

2.281

xcnt2

0.423

0.321

0.556

* produce scatterplot and fitted 2st order

logistic;

data graph2;

set estimates;

run;

proc sort data=graph2;

by xcnt;

run;

proc gplot data=graph2;

symbol1 color=black value=none interpol=join;

symbol2 color=black value=circle;

title'Scatter Plot and 1st Order Logit Curve';

plot pie*xcnt vc*xcnt/overlay;

/* /overlay means to overlay the two graph*/

run;

10

Inferences about Regression Parameters

1. Test Concerning a Single k: Wald Test

Hypothesis: H0: k=0 vs. Ha: k0

Test Statistic:

z*

bk

s{bk }

Decision rule: If |z*| z(1-/2), conclude H0.

If |z*|> z(1-/2), conclude Ha.

Where z is a standard normal distribution.

Note: Approximate joint confidence intervals for several logistic regression

model parameters can be developed by the Bonferroni procedure. If g

parameters are to be estimated with family confidence coefficient of

approximately 1-, the joint Bonferroni confidence limits are

bkBs{ bk}, where B=z(1-/2g).

2. Interval Estimation of a Single k

The approximate 1- confidence limits for k:

bkz(1-/2)s{ bk}

The corresponding confidence limits for the odds ratio exp(k):

exp[bkz(1-/2)s{ bk}]

11

Example:

Y = 1 if the task was finished

0 if the task wasn’t finished

X = months of programming

experience

SAS CODE:

Person

i

Months of

Experience

Xi

1

2

3

.

23

24

25

Task

Success

Yi

14

29

6

.

28

22

8

Fitted Value

Deviance

Residual

Devi

̂ i

0

0

0

.

1

1

1

-.862

-1.899

-.483

.

.646

.976

1.962

0.31

0.835

0.110

.

0.812

0.621

0.146

SAS OUPUT:

proc logistic data=ch14ta01 ;

model y (event='1')=x /cl;

run ;

The LOGISTIC Procedure

Analysis of Maximum Likelihood Estimates

Notice that (1) we can specify cl in the

model statement to get the output for

interval estimate for 0, 1, etc. (2) The

test for 1 is a two-sided test. For a onesided test, we simply divide the p-value

(0.0129) by 2. This yields the one-sided

p-value of 0.0065. (3) The text authors

report Z*=2.485 and the square of Z* is

equal to the Wald Chi-Square Statistic

6.176, which is distributed approximately

as Chi-Square distribution with df=1.

Parameter

DF

Estimate

Standard

Error

Wald

Chi-Square

Pr > ChiSq

Intercept

x

1

1

-3.0597

0.1615

1.2594

0.0650

5.9029

6.1760

0.0151

0.0129

H0: 10 vs. Ha: 1>0

for =0.05, Since one-sided p-value=0.0065<0.05, we conclude Ha,

that 1 is positive.

Odds Ratio Estimates

Effect

x

Point

Estimate

1.175

95% Wald

Confidence Limits

1.035

1.335

Wald Confidence Interval for Parameters

Parameter

Estimate

Intercept

x

-3.0597

0.1615

95% Confidence Limits

-5.5280

0.0341

-0.5914

0.2888

With approximately 95% confidence that 1 is between 0.0341

and 0.2888. The corresponding 95% condidence limits for the

odds ratio are exp(.0341)=1.03 and exp(.2888)=1.33.

3. Test Whether Several k=0: Likelihood Ratio Test

Hypothesis: H0: q=q+1=...p-1=0 v

Ha: not all of the k in H0 equal zero

Full Model:

exp( X'F )

[1 exp(X'F )]1, X'F β0 β1X1 βp 1Xp 1

1 exp( X'F )

Reduced Model:

exp( X' R )

[1 exp(X' R )]1, X' R β0 β1X1 βq 1Xq 1

1 exp( X' R )

The Likelihood Ratio Statistic:

L(R)

G 2 2log e

2[log e L(R) log e L(F)]

L(F)

The Decision rule: If G2 2(1-;p-q), conclude H0.

If G2> 2(1-;p-q), conclude Ha.

12

Example:

1 disease present

Y

0 disease absent

X1 Age

1 Middle Class

X2

others

0

socioeconomic status

1 Lower Class

X3

others

0

Case

i

Age

Xi1

Socioeconomic

Status

Xi2

Xi3

City

Sector

Xi4

Disease

Status

Yi

Fitted

Value

π̂ i

1

2

3

4

5

6

.

33

35

6

60

18

26

.

0

0

0

0

0

0

.

0

0

0

0

1

1

0

0

0

0

0

0

.

0

0

0

0

1

0

.

.209

.219

.106

.371

.111

.136

.

98

35

0

1

0

0

.171

1 city sector 2

X4

0 city sector 1

Study purpose: assess the strength of the association between each of the

predictor variables and the probability of a person having contracted the

disease

SAS CODE:

SAS OUTPUT:

Full model:

data ch14ta03;

infile 'c:\stat231B06\ch14ta03.txt'

DELIMITER='09'x;

input case x1 x2 x3 x4 y;

/*fit full model*/

proc logistic data=ch14ta03;

model y (event='1')=x1 x2 x3 x4;

run;

/*fit reduced model*/

proc logistic data=ch14ta03;

model y (event='1')=x2 x3 x4;

run;

Model Fit Statistics

Criterion

AIC

SC

-2 Log L

Intercept

Only

Intercept

and

Covariates

124.318

126.903

122.318

111.054

123.979

101.054

Reduced model:

Model Fit Statistics

Criterion

AIC

SC

-2 Log L

Intercept

Only

Intercept

and

Covariates

124.318

126.903

122.318

114.204

124.544

106.204

We use proc logistic to regress Y on X1,X2,X3 and X4 and

refer to this as full model. In SAS output for full model we

see that -2 Log Likelihood statistic=101.054. We now

regress Y on X2,X3 and X4 and refer to this as the full

model. In SAS output for reduced model we see that -2

Log Likelihood statistic=106.204. Using equation (14.60),

test page 581, we find G2=106.204-101.054=5.15. For

=0.05 we require 2(.95,1)=3.84. Since our computed G2

value (5.15) is greater than the critical value 3.84, we

conclude Ha, that X1 should not be dropped from the

model.

13

4. Global Test Whether all k=0: Score Chi-square test

Let U() be the vector of first partial derivatives of the log likelihood

with respect to the parameter vector , and let H() be the matrix of

second partial derivatives of the log likelihood with respect to . Let I()

be either - H() or the expected value of - H() . Consider a null hypothesis

H0. Let ̂ 0 be the MLE of under H0. The chi-square score statistic for

testing H0 is defined by U' (ˆ 0 )I 1 (ˆ 0 )U(ˆ 0 ) and it has an asymptotic 2

distribution with r degrees of freedom under H0, where r is the number of

restriction imposed on by H0.

Example:

1 disease present

Y

0 disease absent

X1 Age

1 Middle Class

X2

others

0

socioeconomic status

1 Lower Class

X3

others

0

Case

i

Age

Xi1

Socioeconomic

Status

Xi2

Xi3

City

Sector

Xi4

Disease

Status

Yi

Fitted

Value

π̂ i

1

2

3

4

5

6

.

33

35

6

60

18

26

.

0

0

0

0

0

0

.

0

0

0

0

1

1

0

0

0

0

0

0

.

0

0

0

0

1

0

.

.209

.219

.106

.371

.111

.136

.

98

35

0

1

0

0

.171

1 city sector 2

X4

0 city sector 1

Study purpose: assess the strength of the association between each of the

predictor variables and the probability of a person having contracted the

disease

SAS CODE:

data ch14ta03;

infile 'c:\stat231B06\ch14ta03.txt'

DELIMITER='09'x;

input case x1 x2 x3 x4 y;

proc logistic data=ch14ta03;

model y (event='1')=x1 x2 x3 x4;

run;

SAS OUTPUT:

Testing Global Null Hypothesis: BETA=0

Test

Likelihood Ratio

Score

Wald

Chi-Square

DF

Pr > ChiSq

21.2635

20.4067

16.6437

4

4

4

0.0003

0.0004

0.0023

Since p-value for the score test is 0.0004,

we reject the null hypothesis H0:

1=2=3=4=0. We can also wald test and

likelihood ratio test to test the above null

hypothesis.

14