Chapter 7 – Random Variables and Probability Distributions


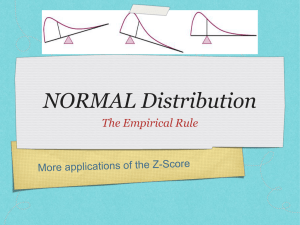

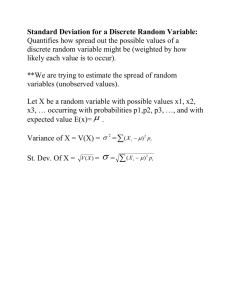

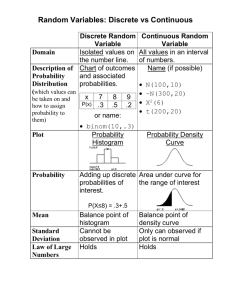

(Linking probability with statistical inference)

7.1 Random Variables

- Random variable: a variable whose value is subject to uncertainty because it depends on the outcome of a chance experiment. Random variables are represented using lowercase letters (for example, x).
- Discrete random variable: its set of possible values consists of isolated points on the number line. A discrete random variable can have either a finite or an infinite number of possible values!
- Continuous random variable: its set of possible values includes entire intervals of the number line.

7.2 Probability Distributions for Discrete Random Variables

The probability distribution of a discrete random variable x is a model that describes the long-run behavior of the variable. It gives the probability associated with each possible value of x; each probability is the limiting relative frequency of occurrence of the corresponding x value when the chance experiment is performed repeatedly. It is commonly displayed using a table, a probability histogram, or a formula.

Properties of discrete probability distributions:

- For every possible x value, $0 \le p(x) \le 1$.
- $\sum_{\text{all } x} p(x) = 1$

7.3 Probability Distributions for Continuous Random Variables

The probability distribution of a continuous random variable x is specified by a mathematical function, denoted f(x), called the density function. The graph of a density function is a smooth curve (the density curve). A density function must satisfy two requirements:

- $f(x) \ge 0$ (the curve cannot dip below the horizontal axis).
- The total area under the density curve is 1.

The probability that x falls in any particular interval is the area under the density curve between the endpoints of that interval.
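Returning to the two properties listed in 7.2, they can be checked mechanically. The sketch below is a minimal Python illustration, not part of the course material; the function name `is_valid_pmf` and the coin-toss distribution are hypothetical examples. Fractions keep the sum exact, and `math.isclose` allows for rounding when decimal probabilities are used instead.

```python
import math
from fractions import Fraction

def is_valid_pmf(pmf):
    """Check the two properties of a discrete probability distribution.

    pmf maps each possible x value to p(x). Returns True when every p(x)
    lies in [0, 1] and the p(x) values sum to 1 (within rounding error
    when floats are used).
    """
    probs = list(pmf.values())
    each_in_range = all(0 <= p <= 1 for p in probs)
    sums_to_one = math.isclose(sum(probs), 1)
    return each_in_range and sums_to_one

# Hypothetical example: x = number of heads in two tosses of a fair coin
two_tosses = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}
# Not a valid distribution: the probabilities sum to 5/4
too_much = {0: Fraction(1, 2), 1: Fraction(1, 2), 2: Fraction(1, 4)}

print(is_valid_pmf(two_tosses))  # True
print(is_valid_pmf(too_much))    # False
```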
Three common events with probability calculations for continuous random variables:

- $a < x < b$ (x is between two numbers)
- $x < a$ (x is less than a given number)
- $x > b$ (x is greater than a given number)

For any two numbers a and b with a < b,

$P(a \le x \le b) = P(a < x \le b) = P(a \le x < b) = P(a < x < b)$

because the area above any single point, and hence the probability that x equals any single value, is 0.

The probability that a continuous random variable x lies between a lower limit a and an upper limit b is

$P(a < x < b) = (\text{cumulative area to the left of } b) - (\text{cumulative area to the left of } a)$

or, equivalently, $P(a < x < b) = P(x < b) - P(x < a)$.

7.4 Mean and Standard Deviation of a Random Variable

The mean value of a random variable x, denoted $\mu_x$, describes where the probability distribution is centered. The mean is sometimes called the expected value and is denoted E(x). For a discrete random variable,

$\mu_x = \sum_{\text{all } x} x \, p(x)$

The standard deviation of a random variable x, denoted $\sigma_x$, describes the variability in the probability distribution. A small $\sigma_x$ indicates that observed values tend to be close to the mean (little variability); a large $\sigma_x$ indicates more variability in the observed values of x.

The variance, denoted $\sigma_x^2$, is

$\sigma_x^2 = \sum_{\text{all } x} (x - \mu_x)^2 \, p(x)$

so the standard deviation is $\sigma_x = \sqrt{\sigma_x^2}$.

The mean, variance, and standard deviation of a random variable can be found on your calculator by putting the values of the random variable in L1 and the probabilities in L2 and computing one-variable statistics with L2 as the frequency list. $\mu_x$ will be the $\bar{x}$ value and $\sigma_x$ will be the σx value. Note that n = 1 because the probabilities sum to 1!
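The two sums in 7.4 can be sketched in a few lines of Python, playing the roles of calculator lists L1 (values) and L2 (probabilities). This is a minimal illustration, not the calculator's own algorithm; the helper name `mean_var_sd` and the coin-toss distribution are hypothetical.

```python
import math

def mean_var_sd(values, probs):
    """Mean, variance, and standard deviation of a discrete random variable.

    values plays the role of calculator list L1, probs the role of L2.
    """
    mu = sum(x * p for x, p in zip(values, probs))               # sum of x * p(x)
    var = sum((x - mu) ** 2 * p for x, p in zip(values, probs))  # sum of (x - mu)^2 * p(x)
    return mu, var, math.sqrt(var)                               # sd = sqrt(variance)

# Hypothetical distribution: x = number of heads in two fair coin tosses
values = [0, 1, 2]
probs = [0.25, 0.5, 0.25]
mu, var, sd = mean_var_sd(values, probs)
print(mu, var, sd)  # mean 1.0, variance 0.5, sd about 0.707
```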
The mean, variance, and standard deviation of a linear function: if x is a random variable with mean $\mu_x$, variance $\sigma_x^2$, and standard deviation $\sigma_x$, and a and b are numerical constants, then the random variable y defined by

$y = a + bx$

is called a linear function of the random variable x. Then:

- the mean of $y = a + bx$ is $\mu_y = \mu_{a+bx} = a + b\mu_x$
- the variance of y is $\sigma_y^2 = \sigma_{a+bx}^2 = b^2\sigma_x^2$
- from which it follows that the standard deviation of y is $\sigma_y = |b|\sigma_x$

The mean, variance, and standard deviation of a linear combination: if $x_1, x_2, \ldots, x_n$ are random variables and $a_1, a_2, \ldots, a_n$ are numerical constants, then the random variable y defined as

$y = a_1x_1 + a_2x_2 + \cdots + a_nx_n$

is a linear combination of the $x_i$'s, and:

- $\mu_y = \mu_{a_1x_1 + a_2x_2 + \cdots + a_nx_n} = a_1\mu_1 + a_2\mu_2 + \cdots + a_n\mu_n$ (regardless of whether the $x_i$'s are independent)
- if the $x_i$'s are independent, $\sigma_y^2 = \sigma_{a_1x_1 + a_2x_2 + \cdots + a_nx_n}^2 = a_1^2\sigma_1^2 + a_2^2\sigma_2^2 + \cdots + a_n^2\sigma_n^2$
- and then $\sigma_y = \sqrt{a_1^2\sigma_1^2 + a_2^2\sigma_2^2 + \cdots + a_n^2\sigma_n^2}$

7.5 Binomial and Geometric Distributions

These distributions arise when an experiment consists of a sequence of dichotomous observations (each with two possible values) called trials.

Binomial probability distribution: results from a sequence of trials that meet these criteria:

- There is a fixed number of trials.
- Each trial can result in only one of 2 mutually exclusive outcomes (labeled S for success or F for failure).
- Outcomes of different trials are independent.
- The probability that a trial results in S is the same for each trial.

Binomial random variable: x = the number of successes observed when a binomial experiment is performed. The binomial distribution is

$P(x) = {}_nC_x \, \pi^x (1 - \pi)^{n - x}, \quad x = 0, 1, \ldots, n$

where n = number of independent trials, $\pi$ = constant probability of a success, and ${}_nC_x$ is a combination (n objects taken x at a time when order does not matter), represented by

${}_nC_x = \binom{n}{x} = \dfrac{n!}{x!\,(n - x)!}$

In other words:

$P(x) = \dfrac{n!}{x!\,(n - x)!} \, \pi^x (1 - \pi)^{n - x}$

This can be done on your calculator using binompdf(#trials, prob. of success, # successes), found in the DISTR menu by pressing 2nd, VARS on the TI-84, or by going into the Stats/List application on the TI-89 and then pressing F5 to get to the distr menu.

The cumulative binomial distribution is similar but gives the probability of up to (at most) a certain number of successes: binomcdf(#trials, prob. of success, maximum # of successes).

- mean value of a binomial random variable: $\mu_x = n\pi$
- variance of a binomial random variable: $\sigma_x^2 = n\pi(1 - \pi)$
- standard deviation of a binomial random variable: $\sigma_x = \sqrt{n\pi(1 - \pi)}$

Geometric probability distribution: concerned with the number of trials needed to get a success, and has the criteria:

- Each trial can result in only one of 2 mutually exclusive outcomes (labeled S for success or F for failure).
- Outcomes of different trials are independent.
- The probability that a trial results in S is the same for each trial.

(The only difference from the binomial setting is that there is not a fixed number of trials!)

Geometric random variable: x = the number of trials up to and including the first success. The geometric distribution is

$P(x) = (1 - \pi)^{x - 1}\pi, \quad x = 1, 2, 3, \ldots$

This can be done on the calculator using geometpdf(prob. of success, # of first successful trial) or geometcdf(prob. of success, most # of trials).

- mean value of a geometric random variable: $\mu_x = \dfrac{1}{\pi}$
- variance of a geometric random variable: $\sigma_x^2 = \dfrac{1 - \pi}{\pi^2}$
- standard deviation of a geometric random variable: $\sigma_x = \dfrac{\sqrt{1 - \pi}}{\pi}$

7.6 Normal Distributions

Normal distributions (also called normal curves) are important because:

- They provide a reasonable approximation to the distribution of many variables, using a function that matches all possible outcomes of a random phenomenon with their associated probabilities. Since there are infinitely many outcomes, a definite probability cannot be matched with any single outcome.
- They play an important role in inferential statistics.
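The linear function and linear combination rules from 7.4 can be checked by brute force on small distributions: build the distribution of y directly and compare its mean and variance with the formulas. This is a minimal Python sketch under stated assumptions; the helper names `moments`, `transform`, and `combine_independent` and both distributions are hypothetical, and only two independent variables are combined to keep it short. Fractions make the comparisons exact.

```python
from fractions import Fraction
from itertools import product

def moments(pmf):
    """Exact mean and variance of a discrete pmf given as {value: probability}."""
    mu = sum(x * p for x, p in pmf.items())
    var = sum((x - mu) ** 2 * p for x, p in pmf.items())
    return mu, var

def transform(pmf, func):
    """Distribution of func(x) for a single random variable x."""
    out = {}
    for x, p in pmf.items():
        y = func(x)
        out[y] = out.get(y, 0) + p
    return out

def combine_independent(pmf1, pmf2, a1, a2):
    """Distribution of y = a1*x1 + a2*x2, assuming x1 and x2 are independent."""
    out = {}
    for (x1, p1), (x2, p2) in product(pmf1.items(), pmf2.items()):
        y = a1 * x1 + a2 * x2
        out[y] = out.get(y, 0) + p1 * p2  # independence: P(x1 and x2) = p1 * p2
    return out

# Hypothetical distributions
x = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}
w = {1: Fraction(1, 3), 4: Fraction(2, 3)}

# Linear function y = a + bx
mu_x, var_x = moments(x)
a, b = 3, -2
mu_y, var_y = moments(transform(x, lambda v: a + b * v))
print(mu_y == a + b * mu_x, var_y == b**2 * var_x)  # True True

# Linear combination y = 2x + 5w of independent variables
mu_w, var_w = moments(w)
mu_c, var_c = moments(combine_independent(x, w, 2, 5))
print(mu_c == 2 * mu_x + 5 * mu_w, var_c == 2**2 * var_x + 5**2 * var_w)  # True True
```

Note that the variance check relies on `combine_independent` multiplying probabilities; for dependent variables the mean rule still holds, but the variance rule generally does not.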
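The binomial and geometric formulas from 7.5 translate directly into code. The Python functions below are hypothetical helpers whose argument order mirrors the calculator commands binompdf, binomcdf, geometpdf, and geometcdf; they are a sketch of the formulas, not the calculator's implementation. The variable `p` stands for $\pi$, and the geometric cumulative probability uses the closed form $P(x \le k) = 1 - (1 - \pi)^k$ (the chance of not failing on all of the first k trials). `math.comb` requires Python 3.8 or later.

```python
import math

def binom_pdf(n, p, x):
    """P(exactly x successes in n trials) = nCx * p^x * (1 - p)^(n - x)."""
    return math.comb(n, x) * p**x * (1 - p) ** (n - x)

def binom_cdf(n, p, x):
    """P(at most x successes): sum of binom_pdf for 0, 1, ..., x."""
    return sum(binom_pdf(n, p, k) for k in range(x + 1))

def geomet_pdf(p, x):
    """P(first success on trial x) = (1 - p)^(x - 1) * p, for x = 1, 2, 3, ..."""
    return (1 - p) ** (x - 1) * p

def geomet_cdf(p, x):
    """P(first success on or before trial x) = 1 - (1 - p)^x."""
    return 1 - (1 - p) ** x

# Hypothetical example: 10 trials, probability of success 0.3
n, p = 10, 0.3
print(binom_pdf(n, p, 3))                 # P(x = 3)
print(binom_cdf(n, p, 3))                 # P(x <= 3)
print(n * p, math.sqrt(n * p * (1 - p)))  # binomial mean and standard deviation
print(geomet_pdf(p, 4))                   # P(first success on trial 4)
print(geomet_cdf(p, 4))                   # P(first success within 4 trials)
print(1 / p, math.sqrt(1 - p) / p)        # geometric mean and standard deviation
```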
There are many normal distributions, and all of them:

- are bell-shaped, symmetric, and unimodal
- have a total area under the curve of 1
- continue infinitely in both directions, approaching the horizontal axis asymptotically as x approaches $\pm\infty$
- are defined by their mean ($\mu$) and standard deviation ($\sigma$)

The smaller the standard deviation, the taller and narrower the curve; the larger the standard deviation, the wider and flatter the curve. The points of inflection (where the curve changes from concave down to concave up) are at $\mu \pm \sigma$.

The standard normal curve (or z curve) is the normal distribution with $\mu = 0$ and $\sigma = 1$. It is consistent with the Empirical Rule: about 68% of values fall within ±1 standard deviation of the mean, about 95% within ±2, and about 99.7% within ±3.

Table A: Standard normal probabilities gives the probabilities for any z* between -3.89 and 3.89.

- Area under the z curve to the left of z* = $P(z < z^*) = P(z \le z^*)$
- Area under the z curve to the right of z* = 1 - (area under the z curve to the left of z*)
- Area under the z curve between a and b = $P(z < b) - P(z < a)$

We can also use Table A: Standard normal probabilities in reverse to find the value that corresponds to a given percentage (cumulative area). This can also be done with the calculator's invNorm function, whose arguments are invNorm(area to the left, mean, standard deviation). The calculator uses a default mean of 0 and a default standard deviation of 1 if none are given.

If locations other than whole standard deviations are needed, compute a z-score and use Table A: Standard normal probabilities. The z-score is the number of standard deviations a value lies from the mean:

$z = \dfrac{x - \mu}{\sigma}$

Probabilities can also be found directly with the calculator's normalcdf function, normalcdf(lower, upper, mean, standard deviation), using -1000 or 1000 as the lower or upper limit to stand in for $-\infty$ or $\infty$.

To convert a z-score back to an x value, use $x = \mu + z\sigma$.
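The normal-curve calculations above can be sketched with Python's standard-library `statistics.NormalDist` (Python 3.8 or later), whose `cdf` gives the cumulative area to the left and whose `inv_cdf` works in reverse, much like Table A read backwards or invNorm. The mean of 100 and standard deviation of 15 are hypothetical values chosen for illustration, and the comments pair each line with the calculator command it roughly corresponds to.

```python
from statistics import NormalDist

z_curve = NormalDist()                   # standard normal: mean 0, sd 1
print(z_curve.cdf(1.5))                  # area to the left of z* = 1.5
print(1 - z_curve.cdf(1.5))              # area to the right of z* = 1.5
print(z_curve.cdf(1) - z_curve.cdf(-1))  # about 0.68, as in the Empirical Rule

# Hypothetical variable: normal with mean 100 and standard deviation 15
mu, sigma = 100, 15
x = 120
z = (x - mu) / sigma                     # z-score: standard deviations from the mean
print(z, mu + z * sigma)                 # converting back with x = mu + z*sigma recovers 120

scores = NormalDist(mu, sigma)
print(scores.cdf(130) - scores.cdf(85))  # P(85 < x < 130), like normalcdf(85, 130, 100, 15)
print(scores.inv_cdf(0.90))              # value with 90% of the area to its left, like invNorm(0.90, 100, 15)
```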