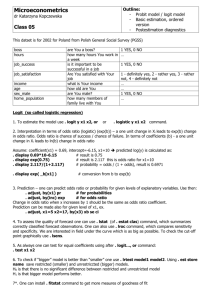

Hint for Project 3: Odds Ratios


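Odds Ratios

Suppose we have 2 independent random samples from Bernoulli distributions:

$$X_1,\ldots,X_m \sim \text{Bernoulli}(p_X) \qquad Y_1,\ldots,Y_n \sim \text{Bernoulli}(p_Y)$$

We wish to estimate $p_X$ and $p_Y$ by maximum likelihood (as well as some functions of them):

$$L(x_1,\ldots,x_m;\,p_X) = p_X^{\sum_{i=1}^m x_i}\,(1-p_X)^{m-\sum_{i=1}^m x_i}$$

$$l = \ln L(x_1,\ldots,x_m;\,p_X) = \left(\sum_{i=1}^m x_i\right)\ln p_X + \left(m-\sum_{i=1}^m x_i\right)\ln(1-p_X)$$

$$\frac{\partial l}{\partial p_X} = \frac{\sum_{i=1}^m x_i}{p_X} - \frac{m-\sum_{i=1}^m x_i}{1-p_X}$$

Setting this equal to 0 and solving for $\hat{p}_X$:

$$\frac{\sum_{i=1}^m x_i}{\hat{p}_X} = \frac{m-\sum_{i=1}^m x_i}{1-\hat{p}_X} \;\Rightarrow\; \left(1-\hat{p}_X\right)\sum_{i=1}^m x_i = \hat{p}_X\left(m-\sum_{i=1}^m x_i\right) \;\Rightarrow\; \sum_{i=1}^m x_i = m\,\hat{p}_X \;\Rightarrow\; \hat{p}_X = \frac{\sum_{i=1}^m x_i}{m}$$

Similarly:

$$\hat{p}_Y = \frac{\sum_{i=1}^n y_i}{n}$$

Consider the odds of success (P(Success)/P(Failure) = P(S)/[1-P(S)]):

$$\widehat{odds}_X = \frac{\hat{p}_X}{1-\hat{p}_X} = \frac{\sum_{i=1}^m x_i / m}{\left(m-\sum_{i=1}^m x_i\right)/m} = \frac{\sum_{i=1}^m x_i}{m-\sum_{i=1}^m x_i}$$

$$\widehat{odds}_Y = \frac{\hat{p}_Y}{1-\hat{p}_Y} = \frac{\sum_{i=1}^n y_i / n}{\left(n-\sum_{i=1}^n y_i\right)/n} = \frac{\sum_{i=1}^n y_i}{n-\sum_{i=1}^n y_i}$$

Due to distributional reasons, we often work with the (natural) logarithm of the odds (its sampling distribution approaches normality more quickly with respect to sample size). This measure is often called the logit:

$$logit_X = \ln\left(\frac{p_X}{1-p_X}\right)$$

$$\widehat{logit}_X = \ln\left(\frac{\hat{p}_X}{1-\hat{p}_X}\right) = \ln\left(\frac{\sum_{i=1}^m x_i}{m-\sum_{i=1}^m x_i}\right) \qquad \widehat{logit}_Y = \ln\left(\frac{\hat{p}_Y}{1-\hat{p}_Y}\right) = \ln\left(\frac{\sum_{i=1}^n y_i}{n-\sum_{i=1}^n y_i}\right)$$

To obtain the variance of the sample logit, we apply the delta method:

$$\text{Estimator: } t(\hat{p}) = logit(\hat{p}) = \ln\left(\frac{\hat{p}}{1-\hat{p}}\right) \qquad \text{Parameter: } t(p) = logit(p) = \ln\left(\frac{p}{1-p}\right)$$

$$V\left(t(\hat{p})\right) \approx \frac{\left[\dfrac{\partial t(p)}{\partial p}\right]^2}{-n\,E\left[\dfrac{\partial^2 \ln f(y \mid p)}{\partial p^2}\right]}$$

Note: $Y \sim Bin(1,p) \Rightarrow E(Y) = p$.

The derivative of the logit is:

$$\frac{\partial t(p)}{\partial p} = \frac{\partial}{\partial p}\ln\left(\frac{p}{1-p}\right) = \frac{1-p}{p}\cdot\frac{(1-p)(1)-p(-1)}{(1-p)^2} = \frac{1-p}{p}\cdot\frac{1}{(1-p)^2} = \frac{1}{p(1-p)}$$

The expected second derivative of the log density is:

$$\ln f(y \mid p) = \ln\left[p^y(1-p)^{1-y}\right] = y\ln p + (1-y)\ln(1-p)$$

$$\frac{\partial \ln f(y \mid p)}{\partial p} = \frac{y}{p} - \frac{1-y}{1-p} \qquad \frac{\partial^2 \ln f(y \mid p)}{\partial p^2} = -\frac{y}{p^2} - \frac{1-y}{(1-p)^2}$$

$$E\left[\frac{\partial^2 \ln f(y \mid p)}{\partial p^2}\right] = -\frac{p}{p^2} - \frac{1-p}{(1-p)^2} = -\frac{1}{p} - \frac{1}{1-p} = -\frac{1}{p(1-p)}$$

Substituting both pieces and evaluating at $p = \hat{p}$:

$$\hat{V}\left(t(\hat{p})\right) = \left.\frac{\left[\dfrac{\partial t(p)}{\partial p}\right]^2}{-n\,E\left[\dfrac{\partial^2 \ln f(y \mid p)}{\partial p^2}\right]}\right|_{p=\hat{p}} = \frac{\left[\dfrac{1}{\hat{p}(1-\hat{p})}\right]^2}{\dfrac{n}{\hat{p}(1-\hat{p})}} = \frac{1}{n\,\hat{p}(1-\hat{p})}$$

So, the variance of the logit is approximated as above.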
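The single-sample quantities above (MLE, odds, logit, and the delta-method variance) can be sketched numerically. This is a minimal illustration rather than part of the original handout: the function name `logit_summary` and the counts (12 successes in 40 trials) are hypothetical.

```python
import math


def logit_summary(successes, trials):
    """Return (p_hat, odds, logit, var_logit) for one Bernoulli sample.

    p_hat     : MLE of p, successes / trials
    odds      : p_hat / (1 - p_hat)
    logit     : natural log of the odds
    var_logit : delta-method variance, 1 / (trials * p_hat * (1 - p_hat))
    """
    # The logit and its variance are undefined when a count is 0.
    if not 0 < successes < trials:
        raise ValueError("need 0 < successes < trials for a finite logit")
    p_hat = successes / trials
    odds = p_hat / (1 - p_hat)
    logit = math.log(odds)
    var_logit = 1 / (trials * p_hat * (1 - p_hat))
    return p_hat, odds, logit, var_logit


# Hypothetical data: 12 successes out of 40 trials.
p_hat, odds, logit, var_logit = logit_summary(successes=12, trials=40)
print(p_hat, odds, logit, var_logit)
```

The function raises an error for 0 successes or 0 failures, since the odds or their logarithm are not finite in those cases.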
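Another way of writing the variance of the logit is:

$$\hat{V}\left(\widehat{logit}_X\right) = \frac{1}{m\,\hat{p}_X\left(1-\hat{p}_X\right)} = \frac{1}{m\left(\dfrac{\sum_{i=1}^m x_i}{m}\right)\left(\dfrac{m-\sum_{i=1}^m x_i}{m}\right)} = \frac{m}{\sum_{i=1}^m x_i\left(m-\sum_{i=1}^m x_i\right)} = \frac{1}{\sum_{i=1}^m x_i} + \frac{1}{m-\sum_{i=1}^m x_i}$$

$$\hat{V}\left(\widehat{logit}_Y\right) = \frac{1}{\sum_{i=1}^n y_i} + \frac{1}{n-\sum_{i=1}^n y_i}$$

Consider the contingency table, where Sample 1 is X and Sample 2 is Y:

| Sample \ Outcome | Successes | Failures | Total |
|---|---|---|---|
| Sample 1 | $\sum_{i=1}^m x_i$ | $m-\sum_{i=1}^m x_i$ | $m$ |
| Sample 2 | $\sum_{i=1}^n y_i$ | $n-\sum_{i=1}^n y_i$ | $n$ |
| Total | $\sum_{i=1}^m x_i+\sum_{i=1}^n y_i$ | $m+n-\sum_{i=1}^m x_i-\sum_{i=1}^n y_i$ | $m+n$ |

Note that when either the number of Successes or Failures is 0 (which can happen when p = Pr(Success) is very low or high), the estimated variance (and standard error) of the logit is infinity and the variance of the sample proportion is 0. Below, we will use an estimator that avoids this problem.

The Odds Ratio is the ratio of the odds for 2 groups: OR = odds_X / odds_Y. This takes on the value 1 when the odds and probabilities of Success are the same for each group, that is, when outcome (Success or Failure) is independent of group. We can compute the MLE of the Odds Ratio as follows:

$$\widehat{OR} = \frac{\widehat{odds}_X}{\widehat{odds}_Y} = \frac{\dfrac{\sum_{i=1}^m x_i}{m-\sum_{i=1}^m x_i}}{\dfrac{\sum_{i=1}^n y_i}{n-\sum_{i=1}^n y_i}} = \frac{\sum_{i=1}^m x_i\left(n-\sum_{i=1}^n y_i\right)}{\sum_{i=1}^n y_i\left(m-\sum_{i=1}^m x_i\right)}$$

This involves taking the product of the Successes for Group 1 and Failures for Group 2 and dividing by the product of the Successes for Group 2 and Failures for Group 1. Problems arise in estimates and standard errors when any of these 4 counts are 0, and a method of avoiding this issue is to add 0.5 to each count in the table, leading to:

$$\widetilde{OR} = \frac{\widetilde{odds}_X}{\widetilde{odds}_Y} = \frac{\dfrac{\sum_{i=1}^m x_i+0.5}{m-\sum_{i=1}^m x_i+0.5}}{\dfrac{\sum_{i=1}^n y_i+0.5}{n-\sum_{i=1}^n y_i+0.5}} = \frac{\left(\sum_{i=1}^m x_i+0.5\right)\left(n-\sum_{i=1}^n y_i+0.5\right)}{\left(\sum_{i=1}^n y_i+0.5\right)\left(m-\sum_{i=1}^m x_i+0.5\right)}$$

The (natural) logarithm of the Odds Ratio is the difference between the 2 logits: ln(OR) = logit_X - logit_Y:

$$\ln\widehat{OR} = \ln\left(\frac{\widehat{odds}_X}{\widehat{odds}_Y}\right) = \widehat{logit}_X - \widehat{logit}_Y$$

$$\ln\widetilde{OR} = \ln\left(\frac{\widetilde{odds}_X}{\widetilde{odds}_Y}\right) = \widetilde{logit}_X - \widetilde{logit}_Y = \ln\left(\frac{\sum_{i=1}^m x_i+0.5}{m-\sum_{i=1}^m x_i+0.5}\right) - \ln\left(\frac{\sum_{i=1}^n y_i+0.5}{n-\sum_{i=1}^n y_i+0.5}\right)$$

$$\hat{V}\left(\ln\widetilde{OR}\right) = \hat{V}\left(\widetilde{logit}_X\right) + \hat{V}\left(\widetilde{logit}_Y\right) = \frac{1}{\sum_{i=1}^m x_i+0.5} + \frac{1}{m-\sum_{i=1}^m x_i+0.5} + \frac{1}{\sum_{i=1}^n y_i+0.5} + \frac{1}{n-\sum_{i=1}^n y_i+0.5}$$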
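The adjusted odds ratio, its logarithm, and the estimated variance of the log odds ratio can be sketched as below. This is an illustrative sketch only: the function name `adjusted_log_odds_ratio`, its `adj` parameter, and the counts used (0 successes out of 20 in Sample 1, 5 out of 20 in Sample 2) are hypothetical and chosen to show a table with a zero cell.

```python
import math


def adjusted_log_odds_ratio(sx, m, sy, n, adj=0.5):
    """Return (or_tilde, log_or, var_log_or, se_log_or) for a 2x2 table.

    sx, m : successes and sample size for Sample 1 (X)
    sy, n : successes and sample size for Sample 2 (Y)
    adj   : constant added to each of the 4 cell counts (0.5 in the text)
    """
    a = sx + adj        # Successes, Sample 1
    b = m - sx + adj    # Failures,  Sample 1
    c = sy + adj        # Successes, Sample 2
    d = n - sy + adj    # Failures,  Sample 2
    or_tilde = (a * d) / (c * b)
    log_or = math.log(or_tilde)
    # Sum of reciprocals of the 4 adjusted counts.
    var_log_or = 1 / a + 1 / b + 1 / c + 1 / d
    se_log_or = math.sqrt(var_log_or)
    return or_tilde, log_or, var_log_or, se_log_or


# Hypothetical table with a zero cell: the unadjusted estimate would be 0
# and its log would be undefined, but the adjusted versions are finite.
or_tilde, log_or, var_log_or, se_log_or = adjusted_log_odds_ratio(
    sx=0, m=20, sy=5, n=20
)
print(or_tilde, log_or, var_log_or, se_log_or)
```

Passing `adj=0` gives the unadjusted MLE when all 4 counts are positive, and fails with a division or logarithm error when any count is 0, which is the problem the 0.5 adjustment avoids.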