Inner products and projection onto lines


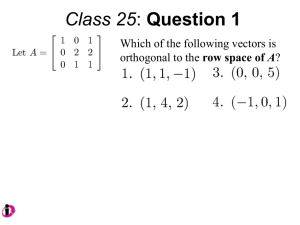

IV The four fundamental subspaces (p 90 Strang)

If A is an m (rows) x n (columns) matrix then the four fundamental subspaces are

The column space of A, $R(A)$, a subspace of $\mathbb{R}^m$ (vectors with m real components)

The nullspace of A, $N(A)$, a subspace of $\mathbb{R}^n$ (vectors with n real components)

The row space of A, i.e. the column space of $A^T$, $R(A^T)$, a subspace of $\mathbb{R}^n$ (vectors with n real components)

The left nullspace of A, $N(A^T)$, a subspace of $\mathbb{R}^m$ (vectors with m real components)

The row space of A

For the echelon matrix U

$$U = \begin{pmatrix} 1 & 3 & 3 & 2 \\ 0 & 0 & 3 & 1 \\ 0 & 0 & 0 & 0 \end{pmatrix}$$

the row space has the same dimension as the rank, r, which is 2 for U (2 non-zero rows). If A has been reduced to the echelon form U, the non-zero rows of U constitute a basis for the row space of A.

The nullspace of A

The nullspace of A is defined by solutions to A.x = 0. If we transform A.x = 0 to U.x = 0 by a set

of row operations then it is obvious that solutions to U.x = 0 must be the same as those to A.x = 0

and so the nullspace of A is the same as the nullspace of U. It has dimension n – r (nullity), i.e. the

number of columns minus the rank. A basis for the nullspace can be constructed using the method

illustrated in the previous lecture where variables are sorted into free or basic variables, depending

on whether a particular column contains a pivot or not after reduction to echelon form.
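The free/basic variable construction can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `rref` and `nullspace_basis` are hypothetical, exact arithmetic is done with the standard `fractions` module, and the matrix is the echelon matrix U from the row space example (whose nullspace is the same as that of A).

```python
from fractions import Fraction

def rref(rows):
    """Return (reduced row echelon form, list of pivot column indices)."""
    m = [[Fraction(v) for v in row] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    pivots, r = [], 0
    for c in range(n_cols):
        # Look for a non-zero entry in column c at or below row r.
        p = next((i for i in range(r, n_rows) if m[i][c] != 0), None)
        if p is None:
            continue                      # no pivot: column c is a free column
        m[r], m[p] = m[p], m[r]
        pivot = m[r][c]
        m[r] = [v / pivot for v in m[r]]  # scale the pivot to 1
        for i in range(n_rows):           # clear the rest of the column
            if i != r and m[i][c] != 0:
                factor = m[i][c]
                m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == n_rows:
            break
    return m, pivots

def nullspace_basis(rows):
    """One basis vector per free variable: set it to 1, the other free ones to 0."""
    m, pivots = rref(rows)
    n_cols = len(m[0])
    free = [c for c in range(n_cols) if c not in pivots]
    basis = []
    for f in free:
        x = [Fraction(0)] * n_cols
        x[f] = Fraction(1)
        for r, p in enumerate(pivots):    # solve for the basic variables
            x[p] = -m[r][f]
        basis.append(x)
    return basis, pivots

U = [[1, 3, 3, 2], [0, 0, 3, 1], [0, 0, 0, 0]]
basis, pivots = nullspace_basis(U)
rank = len(pivots)
print("pivot columns:", pivots, "rank:", rank)
print("nullspace basis:", [[str(v) for v in x] for x in basis])
```

For this U the pivots sit in the first and third columns, so the second and fourth variables are free and the nullity is n - r = 4 - 2 = 2.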

The column space of A

The column space is also called the range. The name range comes from the range of a function, f(x), which takes values, x, within the domain to the range, f(x). In linear algebra, all x in $\mathbb{R}^n$ (the row space + nullspace) form the domain and A.x forms the range. To find a basis for R(A) we first find a basis for R(U), the range of the echelon (upper triangular) matrix obtained from A by row operations. Whenever certain columns of U form a basis for R(U), the corresponding columns of A form a basis for R(A). The columns of U containing pivots constitute a basis for R(U), so the corresponding columns of A constitute a basis for R(A). In the example below the first and third columns of U are a basis for R(U) and the first and third columns of A are a basis for R(A).

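$$U = \begin{pmatrix} 1 & 3 & 3 & 2 \\ 0 & 0 & 3 & 1 \\ 0 & 0 & 0 & 0 \end{pmatrix}, \qquad A = \begin{pmatrix} 1 & 3 & 3 & 2 \\ 2 & 6 & 9 & 5 \\ -1 & -3 & 3 & 0 \end{pmatrix}$$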

The reason is that A.x = 0 and U.x = 0 express linear (in)dependence among the columns of A and

U, respectively. These sets of linear equations have the same solution, x, and so if a set of columns

of U is linearly independent then so are the corresponding columns of A.

The dimension of R(A) therefore equals the rank of A, r, which equals the dimension of the row

space. The number of independent columns therefore equals the number of independent rows

which is sometimes stated as ‘row rank equals column rank’. This is the first fundamental

theorem of linear algebra.

The left null space of A

If A is an m x n matrix then $A^T$ is n x m and the nullspace of $A^T$ is a subspace of $\mathbb{R}^m$. Row vectors $y^T$ which are members of the left nullspace have m components and obey

$$y^T A = 0.$$

This equation shows that vectors in the left nullspace are orthogonal to the column vectors of A. The dimension of the left nullspace plus the dimension of the column space must equal the number of rows of A (the number of columns of $A^T$). Hence the left nullspace has dimension m - r.

In summary

| Subspace | Symbol | Subspace of | Dimension |
|---|---|---|---|
| Column space | $R(A)$ | $\mathbb{R}^m$ | r |
| Nullspace | $N(A)$ | $\mathbb{R}^n$ | n - r |
| Row space | $R(A^T)$ | $\mathbb{R}^n$ | r |
| Left nullspace | $N(A^T)$ | $\mathbb{R}^m$ | m - r |

Orthogonality and orthogonal subspaces

A basis is a set of linearly independent vectors that span a vector space. The set $\{w_i\}$ is a basis for the space V if, for every a which is a member of V, the expansion of a

$$a = c_1 w_1 + c_2 w_2 + c_3 w_3 + \dots + c_n w_n$$

exists and is unique. The basis vectors $\{w_i\}$ may or may not be mutually perpendicular and they may or may not be normalised. The condition that they be orthonormal is

$$w_i^T w_j = \delta_{ij}.$$

In this notation the length of a vector squared is $x^T x$. In a pair of orthogonal subspaces, every vector in one subspace is perpendicular to every vector in the other subspace. For example, if the basis vectors {a, b, c, d} for a 4 dimensional space are mutually orthogonal, then {a, b} and {c, d} form bases for two mutually orthogonal subspaces. The fundamental subspaces of linear algebra are orthogonal and they come in pairs: the first pair is the nullspace and row space and the second is the column space and the left nullspace. We can see that the row space of A is orthogonal to the nullspace through the homogeneous equation which defines the nullspace.

A.x = 0

From this we see that every row in A is orthogonal to every vector x in the nullspace. The equation

which defines the left nullspace

yT.A = 0.

shows that every column in A is orthogonal to every vector $y^T$ in the left nullspace. Given a subspace V of $\mathbb{R}^n$, the space of all vectors orthogonal to V is called the orthogonal complement, $V^\perp$.

$$N(A^T) = R(A)^\perp, \qquad \text{dimensions } (m - r) + r = m, \text{ the number of rows of } A$$

$$N(A) = R(A^T)^\perp, \qquad \text{dimensions } (n - r) + r = n, \text{ the number of columns of } A$$

The nullspace is the orthogonal complement of the row space and the left nullspace is the

orthogonal complement of the column space. This is the second fundamental theorem of linear

algebra.
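Both orthogonality statements can be checked numerically. The sketch below assumes the 3 x 4 example matrix A with rows (1, 3, 3, 2), (2, 6, 9, 5), (-1, -3, 3, 0), which reduces to the echelon matrix U used earlier. The nullspace and left nullspace vectors are worked out by hand for that A, and the helper `dot` is a hypothetical name.

```python
def dot(u, v):
    """Inner product u^T v of two vectors given as lists."""
    return sum(a * b for a, b in zip(u, v))

# Assumed example matrix (m = 3 rows, n = 4 columns, rank r = 2).
A = [[1, 3, 3, 2],
     [2, 6, 9, 5],
     [-1, -3, 3, 0]]

# Hand-computed bases: n - r = 2 nullspace vectors, m - r = 1 left nullspace vector.
nullspace = [[-3, 1, 0, 0], [-3, 0, -1, 3]]
left_nullspace = [[5, -2, 1]]

columns = [list(col) for col in zip(*A)]

# Every row of A against every nullspace vector (A.x = 0).
rows_dot_null = [dot(row, x) for row in A for x in nullspace]
# Every column of A against every left nullspace vector (yT.A = 0).
cols_dot_left = [dot(col, y) for col in columns for y in left_nullspace]

print(rows_dot_null)
print(cols_dot_left)
```

All the inner products vanish, and the dimensions add up as stated: 2 + 2 = 4 = n for the row space and nullspace, 2 + 1 = 3 = m for the column space and left nullspace.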

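[Figure: the row space $R(A^T)$ and nullspace $N(A)$ on one side, the column space $R(A)$ and left nullspace $N(A^T)$ on the other. A vector $x = x_r + x_n$ is split into a row space part $x_r$ and a nullspace part $x_n$, with $x \to A.x$, $x_r \to A.x_r = A.x$ and $x_n \to A.x_n = 0$ (the null vector).]

Action of the matrix A on the column vector x (Fig. 3.4 Strang)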

The figure above shows the action of the matrix A on the vector x. In general, the vector x has a

row space component and a nullspace component. The part of x which lies in the row space is

carried into the column space while the part that lies in the nullspace is carried to the null vector.

Nothing is carried to the left nullspace.

VI Inner products and projection onto lines (p144 Strang)

If x and y are two column vectors, the inner (or dot or scalar) product of the two vectors is xT.y.

The condition for two vectors to be orthogonal is that their scalar product be zero. We want to find

a method for creating an orthogonal basis from a set of vectors which is non-orthogonal.

[Figure: vectors a and b, with p the projection of b onto the line through a.]

Projection of vector b onto vector a

The figure shows two position vectors a and b and the position vector, p, of the point on the line from the origin through a that lies closest to b. Since p is parallel to a we can write p = xa, where x is a scalar coefficient (it equals the length of p only when a is a unit vector). The line from p to b is the vector b - xa. It is perpendicular to a so

$$a^T (b - x a) = 0.$$

Rearranging this we get

$$x = \frac{a^T b}{a^T a}.$$

Since p = xa, this can be rewritten as

$$p = P_a b = \frac{a a^T}{a^T a}\, b.$$

Note that $a a^T$ is the outer product (a dyad) of a with itself and that this is a matrix while the denominator is a scalar (as it must be). The outer product of a with itself, divided by $a^T a$, is the projection operator $P_a$ which projects any vector it operates on onto a. A projection operator P is idempotent, $P^2 = P$ (so $P^2, P^3, \dots, P^n$ have the same action as P). P is a square matrix and its nullspace is the plane perpendicular to a (in n dimensions, the (n - 1)-dimensional subspace perpendicular to a).
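A small numerical check of the projection formulas, as a sketch only: the vectors a and b are arbitrary illustrative choices, the helper names are hypothetical, and `fractions.Fraction` is used so that the idempotency test is exact.

```python
from fractions import Fraction

def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

def projection_matrix(a):
    """P = a a^T / (a^T a), the projector onto the line through a."""
    aa = dot(a, a)
    return [[Fraction(ai * aj, aa) for aj in a] for ai in a]

def matvec(M, x):
    return [dot(row, x) for row in M]

def matmul(A, B):
    return [[dot(row, col) for col in zip(*B)] for row in A]

a = [1, 2, 2]
b = [3, 0, 3]

P = projection_matrix(a)
p = matvec(P, b)                               # projection of b onto a
residual = [bi - pi for bi, pi in zip(b, p)]   # b - p, should be perpendicular to a

print("p =", [str(v) for v in p])
print("a.(b - p) =", dot(a, residual))
print("P.P == P:", matmul(P, P) == P)
```

Here $a^T b = a^T a = 9$, so x = 1 and p coincides with a. The residual b - p = (2, -2, 1) is perpendicular to a, and applying P twice gives the same result as applying it once.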

Orthogonal bases, orthogonal matrices and Gram-Schmidt Orthogonalisation

In an orthogonal basis every basis vector is perpendicular to every other basis vector. In an orthonormal basis, in addition to being orthogonal to the other basis vectors, each vector is normalised. A set of orthonormal vectors will be denoted $\{q_i\}$. They have the property that

$$q_i^T q_j = \delta_{ij}.$$

The matrix constructed from these vectors is denoted Q.

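$$Q^T Q = \begin{pmatrix} q_1^T \\ q_2^T \\ q_3^T \\ q_4^T \\ q_5^T \end{pmatrix} \begin{pmatrix} | & | & | & | & | \\ q_1 & q_2 & q_3 & q_4 & q_5 \\ | & | & | & | & | \end{pmatrix} = \begin{pmatrix} 1 & 0 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 & 0 \\ 0 & 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 0 & 1 \end{pmatrix}$$

$$Q^T Q = 1 \quad \text{and} \quad Q^T = Q^{-1}$$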

If a general vector b is expanded in a basis of orthonormal vectors with coefficients $x_i$,

$$b = x_1 q_1 + x_2 q_2 + \dots + x_n q_n,$$

multiply on the left by $q_1^T$ and use orthonormality of the basis vectors to find $x_1 = q_1^T b$, etc. Any vector b may therefore be decomposed as

$$b = q_1 q_1^T b + q_2 q_2^T b + \dots + q_n q_n^T b,$$

and it is obvious that the unit matrix may be written as

$$\sum_i q_i q_i^T = 1.$$

Here $q_i q_i^T$ are projection operators onto $q_i$ (there are no denominators because the $q_i$ are normalised). Written in terms of the coefficients along each q, the expansion is

$$b = (q_1^T b)\, q_1 + (q_2^T b)\, q_2 + \dots + (q_n^T b)\, q_n.$$

If the q vectors form a basis then this expansion is unique. Orthogonal matrices, whose columns consist of sets of orthonormal vectors, have the important property that multiplication of a vector by an orthogonal matrix preserves the length of the vector.

$$\|Q x\| = \|x\|$$

$\|Q x\|^2 = \|x\|^2$ becomes

$$(Q x)^T (Q x) = x^T Q^T Q\, x = x^T x$$

Inner products and angles between vectors are also preserved

$$(Q x)^T (Q y) = x^T Q^T Q\, y = x^T y$$

Angles are preserved because the angle between vectors is given by

$$\theta = \arccos\left(\frac{x^T y}{\|x\|\,\|y\|}\right)$$

The Gram-Schmidt orthogonalisation process, for a pair of vectors which are non-orthogonal to begin with, works by subtracting the projection of one vector on another from one of the vectors to leave a pair of orthogonal vectors. Suppose that these vectors are a and b, and that a has been normalised to unit length.

[Figure: vectors a and b, the projection $a\,a^T b$ of b onto a, and the vector $b - a\,a^T b$ perpendicular to a.]

Gram-Schmidt orthogonalisation of b to a

We can generate a new vector $b - a\,a^T b$ from a and b which is orthogonal to a (for a of general length the projection to subtract is $a\,a^T b / a^T a$). After normalisation we can call these two orthogonal vectors $q_1$ and $q_2$. If a and b are part of a larger set of non-orthogonal vectors then this process can be continued, trimming off the parts that are non-orthogonal to the $q_i$ already computed. Usually the vectors are normalised at each step so that the $\{q_i\}$ form an orthonormal basis. The Gram-Schmidt orthogonalisation process can be written as

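$$q_1 = \frac{a}{\|a\|}$$

$$q_2 = \frac{b'}{\|b'\|}, \qquad b' = (1 - q_1 q_1^T)\, b$$

$$q_3 = \frac{c'}{\|c'\|}, \qquad c' = (1 - q_1 q_1^T - q_2 q_2^T)\, c \quad \text{etc.}$$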

In matrix form the relationship between the non-orthogonal vectors, a, b, c, and the orthonormal

basis {qi} is

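$$\begin{pmatrix} | & | & | \\ a & b & c \\ | & | & | \end{pmatrix} = \begin{pmatrix} | & | & | \\ q_1 & q_2 & q_3 \\ | & | & | \end{pmatrix} \begin{pmatrix} q_1^T a & q_1^T b & q_1^T c \\ 0 & q_2^T b & q_2^T c \\ 0 & 0 & q_3^T c \end{pmatrix}$$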

Here the matrix A on the left contains the original non-orthogonal basis in columns, the matrix at

left on the RHS is an orthogonal matrix, Q, and the matrix on the right on the RHS is upper

triangular and denoted R (because U is already taken in LU decomposition). This is the QR

factorisation of A, A = QR.
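The Gram-Schmidt construction of Q and R can be sketched as follows. This is a minimal illustration of the classical (unpivoted) process in plain Python, assuming linearly independent input vectors. The function name `gram_schmidt_qr` and the three example vectors are hypothetical choices, not taken from the text.

```python
import math

def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

def gram_schmidt_qr(columns):
    """Classical Gram-Schmidt on a list of independent column vectors.

    Returns (qs, R): qs[i] is the orthonormal vector q_i and R is upper
    triangular with R[i][j] = q_i^T a_j, so that a_j = sum_i R[i][j] q_i.
    """
    n = len(columns)
    qs = []
    R = [[0.0] * n for _ in range(n)]
    for j, a in enumerate(columns):
        v = list(a)
        for i, q in enumerate(qs):
            R[i][j] = dot(q, a)                       # component of a_j along q_i
            v = [vk - R[i][j] * qk for vk, qk in zip(v, q)]
        R[j][j] = math.sqrt(dot(v, v))                # length of what is left
        qs.append([vk / R[j][j] for vk in v])         # normalise to get q_j
    return qs, R

a = [1.0, 1.0, 0.0]
b = [1.0, 0.0, 1.0]
c = [0.0, 1.0, 1.0]
qs, R = gram_schmidt_qr([a, b, c])

for q in qs:
    print([round(x, 4) for x in q])
for row in R:
    print([round(x, 4) for x in row])
```

The diagonal entry `R[j][j]` equals $q_j^T a_j$ because the part of $a_j$ left after the subtractions is parallel to $q_j$. For nearly dependent vectors the classical process loses orthogonality in floating point, which is one reason Householder transformations are often preferred in practice.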

VII QR decomposition

Householder transformation H

This is a unitary transformation which takes the first column of the original matrix, $x_1$, into minus the first basis vector, $e_1$, scaled so that the original length $\sigma = \|x_1\|$ is retained (because it is a unitary transformation). A single Householder transformation produces a vector anti-parallel to one of the basis vectors and so the transformed matrix has zeroes in all but the first entry of the column to which the transformation is applied. If v is the vector $v = x_1 + \sigma e_1$ then the Householder transformation is

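$$H = 1 - 2\frac{v v^T}{\|v\|^2} = 1 - 2 u u^T, \qquad u = \frac{v}{\|v\|}$$

$$H^T H = (1 - 2 u u^T)^T (1 - 2 u u^T) = (1 - 2 u u^T)(1 - 2 u u^T) = 1 - 4 u u^T + 4 u u^T u u^T = 1$$

where the last step uses $u^T u = 1$.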

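[Figure: axes $e_1$, $e_2$, $e_3$ with $x_1$, the first column vector of A, and its image $H_1 x_1 = -\sigma e_1$, where $\sigma$ is the length of $x_1$.]

The action of a Householder transformation on the first column vector of a matrix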

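and H is unitary and preserves lengths of vectors and angles between them. The action of H on $x_1$, with $v = x_1 + \sigma e_1$ and $\sigma = \|x_1\|$, is

$$H x_1 = x_1 - 2\frac{v v^T}{\|v\|^2}\, x_1 = x_1 - (x_1 + \sigma e_1)\, \frac{2 (x_1 + \sigma e_1)^T x_1}{\|x_1 + \sigma e_1\|^2}$$

$$\frac{2 (x_1 + \sigma e_1)^T x_1}{\|x_1 + \sigma e_1\|^2} = \frac{2 x_1^T x_1 + 2\sigma e_1^T x_1}{x_1^T x_1 + \sigma x_1^T e_1 + \sigma e_1^T x_1 + \sigma^2 e_1^T e_1} = \frac{2\sigma^2 + 2\sigma e_1^T x_1}{2\sigma^2 + 2\sigma x_1^T e_1} = 1$$

$$H x_1 = x_1 - (x_1 + \sigma e_1) = -\sigma e_1$$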
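The result $H x_1 = -\sigma e_1$ can be verified numerically. The sketch below builds H directly from $v = x_1 + \sigma e_1$ as in the derivation. The function name `householder` and the example vector are assumptions made for illustration. The construction breaks down if $x_1$ is already equal to $-\sigma e_1$ (then v = 0), and numerical libraries usually choose the sign of $\sigma$ to match the first component of $x_1$ to avoid cancellation.

```python
import math

def householder(x):
    """Return (H, sigma) with H = 1 - 2 v v^T / (v^T v) and v = x + sigma e1."""
    n = len(x)
    sigma = math.sqrt(sum(xi * xi for xi in x))   # length of x
    v = list(x)
    v[0] += sigma                                 # v = x + sigma e1
    vv = sum(vi * vi for vi in v)                 # fails (vv = 0) if x = -sigma e1
    H = [[(1.0 if i == j else 0.0) - 2.0 * v[i] * v[j] / vv for j in range(n)]
         for i in range(n)]
    return H, sigma

def matvec(M, x):
    return [sum(a * b for a, b in zip(row, x)) for row in M]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

x1 = [1.0, 2.0, 2.0]
H, sigma = householder(x1)
Hx1 = matvec(H, x1)                               # expect (-sigma, 0, 0)
HtH = matmul([list(col) for col in zip(*H)], H)   # expect the unit matrix

print("sigma =", sigma)
print("H.x1 =", [round(c, 12) for c in Hx1])
```

For this $x_1$ the length is $\sigma = 3$ and v = (4, 2, 2), so $2 v^T x_1 / v^T v = 1$ and $H x_1 = x_1 - v = (-3, 0, 0)$.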

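In order to bring the original matrix to Hessenberg or tridiagonal form, a sequence of unitary transformations,

$$U_3^{-1} U_2^{-1} U_1^{-1}\, A\, U_1 U_2 U_3,$$

$$U_1 = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & H_{11} & H_{12} & H_{13} \\ 0 & H_{21} & H_{22} & H_{23} \\ 0 & H_{31} & H_{32} & H_{33} \end{pmatrix}, \quad U_2 = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & H'_{11} & H'_{12} \\ 0 & 0 & H'_{21} & H'_{22} \end{pmatrix}, \quad U_3 = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & H''_{11} \end{pmatrix}$$

is applied to the original matrix, A, which brings A to upper Hessenberg form if A is nonsymmetric and to tridiagonal form if A is symmetric.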

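$$\begin{pmatrix} 1 & 0 \\ 0 & H \end{pmatrix} \begin{pmatrix} a & b \\ c & d \end{pmatrix} \begin{pmatrix} 1 & 0 \\ 0 & H^T \end{pmatrix} = \begin{pmatrix} a & b H^T \\ H c & H d H^T \end{pmatrix}$$

The column $H c$ contains only one non-zero entry, and the row $b H^T$ also contains only one non-zero entry if $b = c^T$ (i.e. if A is symmetric).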

Application of H without the 1 0 row and column does not result in a diagonal matrix. If

$$H_1 A = \begin{pmatrix} -\sigma & b & c \\ 0 & e & f \\ 0 & h & i \end{pmatrix}$$

then

$$H_1 A H_1^T = \begin{pmatrix} -\sigma H_{11} + b H_{12} + c H_{13} & * & * \\ e H_{12} + f H_{13} & * & * \\ h H_{12} + i H_{13} & * & * \end{pmatrix}$$

We see that multiplication by $H_1^T$ on the right hand side destroys the zeros produced by $H_1$ in the first column. Note that this is not the case when the 1 0 row and column are included.

The LAPACK subroutine DSYTRD reduces a real symmetric matrix A to a tridiagonal matrix, T, by an orthogonal similarity transformation,

$$T = Q^{-1} A\, Q$$

The diagonal elements of T and the first off-diagonal elements are stored in those positions in A on exit from DSYTRD. The orthogonal matrix Q is a product of Householder matrices $H_i$ (elementary reflectors). The vectors $v_i$ which define them contain n-1 ($U_1$), n-2 ($U_2$), etc. non-zero elements and the remainder are zeros. The first non-zero element of each v is unity and the remaining elements are stored in the upper or lower triangular part of A not used for T. The subroutine DORGTR can be used to assemble the orthogonal matrix Q from the elementary reflectors in A.

QR factorisation

In order to employ the QR algorithm to find the eigenvalues of the original matrix A, we need to factorise the tridiagonal or upper Hessenberg matrix T into QR form. This can be done using Gram-Schmidt orthogonalisation or using a further sequence of Householder transformations. This time the Householder transformations are applied only on the left of T to generate an upper triangular matrix, R. Since the $H_i$ are only applied on the left, the zeros in a particular column are not undone as they would be if T were subjected to a sequence of two-sided unitary transformations (as was done to bring A to tridiagonal form).

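$$H_1 A = \begin{pmatrix} -\sigma_1 & b & c \\ 0 & e & f \\ 0 & h & i \end{pmatrix}, \qquad H_2 H_1 A = \begin{pmatrix} -\sigma_1 & b' & c' \\ 0 & -\sigma_2 & f' \\ 0 & 0 & i' \end{pmatrix}$$

$$H_3 H_2 H_1 A = \begin{pmatrix} -\sigma_1 & b'' & c'' \\ 0 & -\sigma_2 & f'' \\ 0 & 0 & -\sigma_3 \end{pmatrix} = R$$

$$H_3 H_2 H_1 A = Q^{-1} A = R$$

$$Q = (H_3 H_2 H_1)^{-1} = H_1^{-1} H_2^{-1} H_3^{-1} = H_1^T H_2^T H_3^T$$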

The QR method for obtaining eigenvalues of a matrix

We are now ready to obtain the eigenvalues of A. The eigenvalues of a triangular matrix can be

read off the diagonal – they are simply the diagonal elements of the triangular matrix. This can

easily be demonstrated by expanding the secular determinant for a triangular matrix.

Suppose that a matrix has been brought to QR form

$$A_0 = Q_0 R_0$$

A new matrix $A_1$ is generated by reversing the order of Q and R

$$A_1 = R_0 Q_0$$

Since orthogonal matrices have the property that $Q Q^T = 1$ and $Q^{-1} = Q^T$

$$A_1 = R_0 Q_0 = Q_0^{-1} (Q_0 R_0)\, Q_0 = Q_0^{-1} A_0 Q_0 = Q_1 R_1$$

$$A_2 = R_1 Q_1 = Q_1^{-1} (Q_1 R_1)\, Q_1 = Q_1^{-1} A_1 Q_1 = Q_2 R_2$$

Reversing the order of $Q_0 R_0$ can therefore be seen to be equivalent to performing an orthogonal similarity transformation on $A_0$, and the eigenvalues of a matrix are not changed by a similarity transformation. This procedure can be iterated until convergence is reached, when $A_n$ is an upper triangular matrix whose eigenvalues can be read off the diagonal.
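The iteration can be sketched in plain Python. This is an unshifted illustration only: the QR step uses Gram-Schmidt rather than Householder transformations, the function names are hypothetical, and the 2 x 2 symmetric test matrix (eigenvalues 3 and 1) is an assumed example. Practical implementations first reduce to Hessenberg or tridiagonal form and use shifts to speed up convergence.

```python
import math

def qr_gram_schmidt(A):
    """Factor a square, non-singular matrix (list of rows) as A = Q R."""
    n = len(A)
    cols = [[A[i][j] for i in range(n)] for j in range(n)]
    qs = []
    R = [[0.0] * n for _ in range(n)]
    for j, a in enumerate(cols):
        v = list(a)
        for i, q in enumerate(qs):
            R[i][j] = sum(qk * ak for qk, ak in zip(q, a))
            v = [vk - R[i][j] * qk for vk, qk in zip(v, q)]
        R[j][j] = math.sqrt(sum(vk * vk for vk in v))
        qs.append([vk / R[j][j] for vk in v])
    Q = [[qs[j][i] for j in range(n)] for i in range(n)]   # q vectors as columns
    return Q, R

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def qr_eigenvalues(A, iterations=50):
    """Iterate A_{k+1} = R_k Q_k and return the diagonal of the final matrix."""
    Ak = [[float(v) for v in row] for row in A]
    for _ in range(iterations):
        Q, R = qr_gram_schmidt(Ak)
        Ak = matmul(R, Q)          # similarity transform: Q^{-1} A_k Q
    return [Ak[i][i] for i in range(len(Ak))]

A0 = [[2.0, 1.0], [1.0, 2.0]]
eigs = qr_eigenvalues(A0)
print([round(e, 9) for e in eigs])
```

After the first step the matrix is [[2.8, 0.6], [0.6, 1.2]], which has the same trace and determinant as $A_0$. Repeated steps shrink the off-diagonal entries and the diagonal tends to the eigenvalues in order of decreasing magnitude.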

VIII Eigenvalues and eigenvectors (p243 Strang)

Everything that has been covered so far has had to do with solving the system A.x = b. The other important equation of linear algebra is A.x = λx where A is a square (n x n) matrix usually representing an operator, and x and λ are one of the n eigenvectors and eigenvalues of A, respectively.

For a real symmetric or Hermitian matrix, if none of the eigenvalues is degenerate then each eigenvector is uniquely determined up to a normalisation constant and the eigenvectors are mutually orthogonal; if they are normalised then they will also be orthonormal. If some of the eigenvalues are degenerate then linear combinations of eigenvectors belonging to the same eigenvalue are also eigenvectors of A (check by substitution). In the case of degenerate eigenvalues, it is possible to choose eigenvectors, corresponding to the degenerate eigenvalue, which are perpendicular both to one another and to all other eigenvectors.

[Figure: left panel, eigenvector directions uniquely determined when all eigenvalues are non-degenerate; right panel, freedom to choose directions in a plane for two eigenvectors with degenerate eigenvalues.]

Freedom to choose eigenvectors within a plane when two eigenvalues are degenerate

If the eigenvector equation is written as

$$(A - \lambda 1)\, x = 0$$

where 1 is the n x n unit matrix, then we immediately realise that the eigenvector, x, lies in the nullspace of the matrix A - λ1. That is, the eigenvalues, λ, are chosen so that A - λ1 has a non-trivial nullspace and the matrix A - λ1 becomes singular (if A - λ1 has an inverse then the only solution to the eigenvalue equation is the trivial solution, x = 0). One way of requiring a matrix to be singular is that its determinant be equal to zero.

$$|A - \lambda 1| = 0$$

Setting the (secular) determinant to zero and solving the resulting nth order polynomial for values of λ is convenient when the matrix is small (n ~ 3) but for larger n is impractical. Numerical methods are required then.

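Suppose that an n x n matrix A has n linearly independent eigenvectors. Then if these vectors are chosen to be the columns, $s_i$, of a matrix S, it follows that $S^{-1} A S$ is a diagonal matrix Λ. To prove this, form the matrix A.S

$$A S = A \begin{pmatrix} | & | & | \\ s_1 & s_2 & s_3 \\ | & | & | \end{pmatrix} = \begin{pmatrix} | & | & | \\ \lambda_1 s_1 & \lambda_2 s_2 & \lambda_3 s_3 \\ | & | & | \end{pmatrix} = \begin{pmatrix} | & | & | \\ s_1 & s_2 & s_3 \\ | & | & | \end{pmatrix} \begin{pmatrix} \lambda_1 & 0 & 0 \\ 0 & \lambda_2 & 0 \\ 0 & 0 & \lambda_3 \end{pmatrix} = S \Lambda$$

which, by inspection, is equal to S.Λ because $A s_1 = \lambda_1 s_1$, etc. Provided that S has an inverse then A.S = S.Λ can be rearranged to

$$S^{-1} A S = \Lambda$$

or

$$A = S \Lambda S^{-1}$$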

In the former case, S is the matrix which is said to diagonalise A and in the latter we see a new decomposition of A in terms of its eigenvectors and eigenvalues. The matrix S is invertible if all n eigenvectors, which form the columns of S, are linearly independent, so that the rank of S = n. Not all square n x n matrices possess n linearly independent eigenvectors and so not all matrices are diagonalisable. This prompts the question: Which matrices are diagonalisable? (p290 Strang)

Diagonalisability is concerned with the linear independence of a matrix's eigenvectors. Invertibility is concerned with the magnitude of the eigenvalues (a zero eigenvalue implies the matrix is non-invertible). Diagonalisation can fail only if there are repeated eigenvalues: if there are no repeated eigenvalues then the corresponding eigenvectors are linearly independent and the matrix is diagonalisable. To see this, suppose two eigenvectors with distinct eigenvalues are not independent, so that there are coefficients $c_1$, $c_2$, not both zero, with

$$c_1 x_1 + c_2 x_2 = 0$$

Operate with A, using $A x_1 = \lambda_1 x_1$ and $A x_2 = \lambda_2 x_2$:

$$c_1 A x_1 + c_2 A x_2 = c_1 \lambda_1 x_1 + c_2 \lambda_2 x_2 = 0$$

Subtract the first equation times $\lambda_2$, i.e. $c_1 \lambda_2 x_1 + c_2 \lambda_2 x_2 = 0$:

$$c_1 (\lambda_1 - \lambda_2)\, x_1 = 0$$

Since $(\lambda_1 - \lambda_2) \neq 0$ and $x_1 \neq 0$, we must have $c_1 = 0$, and then $c_2 x_2 = 0$ gives $c_2 = 0$. This contradicts the assumption, so $x_1$, $x_2$ are linearly independent.

If two or more eigenvalues are the same (the eigenvalues are degenerate) then, provided a full set of linearly independent eigenvectors can be found for the degenerate eigenvalue, the matrix is still diagonalisable. This is so if, for a p-fold degenerate eigenvalue $\lambda_p$, the rank of $(A - \lambda_p 1)$ is n - p, i.e. there are p linearly independent vectors in the nullspace of $A - \lambda_p 1$, which can be chosen to be mutually orthogonal.

In general, a non-symmetric real matrix may have complex eigenvalues and eigenvectors. In contrast, real symmetric and Hermitian matrices always have real eigenvalues. Eigenvalues are generally associated with (1) frequencies in classical or quantum mechanical systems, (2) stability of classical mechanical systems, whereby a change in character of an eigenvalue (e.g. crossing zero) indicates an instability appearing in the system. Since frequencies of real physical systems are generally real numbers, such systems are represented by real symmetric matrices (classical mechanics) or Hermitian matrices (quantum mechanics).

Real symmetric and Hermitian matrices (p290 Strang)

A real symmetric matrix is defined by $A = A^T$ whereas a Hermitian matrix is defined by $B = B^H$. $B^H$ is the Hermitian conjugate of B, defined by

$$(B^H)_{ij} = B^*_{ji}$$

where the * indicates complex conjugation of the matrix element. If x, y are complex vectors (in $\mathbb{C}^n$, the space of complex vectors with n components) then

- the inner product of x and y is $x^H y$ and the vectors are orthogonal if $x^H y = 0$
- the length of x squared is $x^H x$
- $(AB)^H = B^H A^H$
- if $A = A^H$ then $x^H A x$ is real
- every eigenvalue of a Hermitian matrix is real
- the eigenvectors of a real symmetric or Hermitian matrix corresponding to different eigenvalues are orthogonal to one another
- a real symmetric matrix can be factored into $A = Q \Lambda Q^T$ and a Hermitian matrix can be factored into $B = U \Lambda U^H$
- U is a unitary matrix which satisfies $U U^H = U^H U = 1$, c.f. $Q Q^T = Q^T Q = 1$ for the orthogonal matrix Q of a real symmetric matrix. It contains orthonormal eigenvectors of B in columns
- multiplication of a vector by U does not change its length nor does it change the angles between vectors

The singular value decomposition (p 443 Strang)

We have seen that a square Hermitian matrix can be factored into $B = U \Lambda U^H$. This is a particular case of a more general decomposition, the singular value decomposition or SVD. Any m x n matrix can be factored into

$$C = Q_1 \Sigma Q_2^T$$

where the columns of $Q_1$ (m x m) are eigenvectors of $C C^T$ and the columns of $Q_2$ (n x n) are eigenvectors of $C^T C$. The r singular values on the diagonal of Σ (m x n) are the square roots of the non-zero eigenvalues of both $C C^T$ and $C^T C$.

For positive definite matrices (n x n, symmetric, with all eigenvalues > 0) this is identical to $A = Q \Lambda Q^T$ and for indefinite matrices (n x n with eigenvalues > 0 or < 0), any negative eigenvalues in Λ become positive in Σ and $Q_1$ differs from $Q_2$. For complex matrices, Σ remains real but $Q_1$ and $Q_2$ become unitary. Then $C = U_1 \Sigma U_2^H$. Columns of $Q_1$, $Q_2$ give orthonormal bases for each of the four fundamental subspaces:

| Columns | Orthonormal basis for |
|---|---|
| First r columns of $Q_1$ | column space of C |
| Last m-r columns of $Q_1$ | left nullspace of C |
| First r columns of $Q_2$ | row space of C |
| Last n-r columns of $Q_2$ | nullspace of C |

Bases for the four spaces are chosen such that if C multiplies a column of $Q_2$ it produces a multiple of a column of $Q_1$: $C Q_2 = Q_1 \Sigma$.

To see the connection with $C C^T$ and $C^T C$, form those matrices

$$C C^T = Q_1 \Sigma Q_2^T (Q_1 \Sigma Q_2^T)^T = Q_1 \Sigma Q_2^T Q_2 \Sigma^T Q_1^T = Q_1 \Sigma \Sigma^T Q_1^T$$

$$C^T C = Q_2 \Sigma^T \Sigma\, Q_2^T$$

$Q_1$ must therefore be an eigenvector matrix for $C C^T$ and $Q_2$ must be an eigenvector matrix for $C^T C$.

$\Sigma \Sigma^T$ is (m x n)(n x m) = m x m and has the eigenvalues $\sigma_1^2, \dots, \sigma_r^2$ on the first r elements of the diagonal. $\Sigma^T \Sigma$ is n x n and has the same r elements on the diagonal.

The effective rank of an m x n matrix can be established by counting the number of eigenvalues $\sigma_i^2$ which exceed some small threshold value.

The size of array required to store an image can be greatly reduced by computing the SVD for the array and its effective rank. By retaining only the eigenvectors for which the eigenvalues $\sigma_i^2$ exceed the threshold value, the matrix can be reconstructed approximately using a limited set of eigenvectors and eigenvalues.

Numerical computation of eigenvalues and eigenvectors (p370 Strang)

We will briefly cover the power method and the QR algorithm for diagonalising a matrix. In the

latter approach, the matrix to be diagonalised is brought to QR form by Gram-Schmidt

orthogonalisation. However, this process can be speeded up considerably for large, dense matrices

by first of all applying a transformation which introduces as many off-diagonal zeroes as possible

into the matrix. The Householder transformation reduces the original matrix to upper Hessenberg

form, where non-zero elements appear only in the upper triangular part of the matrix plus the first

diagonal below the diagonal elements of the matrix. In the special case where the original matrix is

symmetric the Hessenberg form is a tridiagonal matrix.

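$$B_{\text{Hess}} = \begin{pmatrix} * & * & * & * \\ * & * & * & * \\ 0 & * & * & * \\ 0 & 0 & * & * \end{pmatrix}, \qquad C_{\text{Tridiagonal}} = \begin{pmatrix} * & * & 0 & 0 \\ * & * & * & 0 \\ 0 & * & * & * \\ 0 & 0 & * & * \end{pmatrix}$$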

Once the matrix is in Hessenberg form then that matrix is transformed into QR form by applying a

Gram-Schmidt orthogonalisation.

Extraction of eigenvectors using the inverse power method.

The power method is one which relies on iteration of a vector, u, which contains some part of all of the eigenvectors, $\{x_i\}$ (i.e. it is not orthogonal to any eigenvector). Note that a complete set of eigenvectors forms a basis for any problem. Such a starting vector can be generated as a random vector by selecting its elements at random.

$$u_0 = \sum_i c_i x_i$$

When $u_0$ is operated on by A

$$A u_0 = \sum_i c_i A x_i = \sum_i c_i \lambda_i x_i = u_1$$

Repeated iteration results in

$$A u_{k-1} = \sum_i c_i \lambda_i^k x_i = u_k$$

$$\frac{u_k}{\lambda_n^k} = c_1 \left(\frac{\lambda_1}{\lambda_n}\right)^k x_1 + c_2 \left(\frac{\lambda_2}{\lambda_n}\right)^k x_2 + \dots + c_n x_n$$

If $\lambda_n$ is the largest eigenvalue (in magnitude) then $(\lambda_i / \lambda_n)^k \to 0$ for all except the largest eigenvalue and the random vector will converge to the eigenvector with the largest eigenvalue. The factor which determines how rapidly convergence takes place is $|\lambda_n| / |\lambda_{n-1}|$: the larger this ratio, the faster the convergence. The random vector may have to be scaled in magnitude at each iteration step. This is the power method for calculating the eigenvector of a matrix with the largest eigenvalue.
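A minimal sketch of the power method, with the vector rescaled to unit length at every step. The function name `power_method`, the test matrix and the fixed starting vector are illustrative assumptions. A fixed start is used instead of a random one so that the run is reproducible, and it has components along both eigenvectors of this matrix.

```python
import math

def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def power_method(A, u0, iterations=100):
    """Iterate u <- A u / |A u| and return (eigenvalue estimate, unit eigenvector)."""
    u = list(u0)
    for _ in range(iterations):
        w = matvec(A, u)
        norm = math.sqrt(sum(wi * wi for wi in w))
        u = [wi / norm for wi in w]        # rescale to avoid overflow or underflow
    # Rayleigh quotient u^T A u (u has unit length) estimates the eigenvalue.
    eigenvalue = sum(ui * wi for ui, wi in zip(u, matvec(A, u)))
    return eigenvalue, u

A = [[2.0, 1.0], [1.0, 2.0]]              # eigenvalues 3 and 1
lam, x = power_method(A, [1.0, 0.0])
print(round(lam, 9), [round(c, 9) for c in x])
```

For this matrix the ratio of the two eigenvalues is 3, so the unwanted component shrinks by a factor of 3 per iteration and the vector tends to the direction (1, 1).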

The inverse power method can be used to obtain the smallest eigenvalue of a matrix. This is similar in spirit to the power method except that it uses the eigenvalue equation in the form

$$\frac{1}{\lambda_i}\, x_i = A^{-1} x_i$$

The iterated series is

$$A v_{k+1} = v_k \quad \text{or} \quad A^{-1} v_{k-1} = \sum_i d_i \frac{1}{\lambda_i^k}\, x_i = v_k$$

$$\lambda_1^k v_k = d_1 x_1 + d_2 \left(\frac{\lambda_1}{\lambda_2}\right)^k x_2 + \dots + d_m \left(\frac{\lambda_1}{\lambda_m}\right)^k x_m$$

and if $\lambda_1$ is the smallest eigenvalue (in magnitude) of A then the random vector converges to the eigenvector with the smallest eigenvalue. The factor which determines how rapidly convergence takes place is $|\lambda_1| / |\lambda_2|$: the smaller this ratio, the faster the convergence. Note that the iteration series for the inverse power method requires solution of the system of linear equations $A v_k = v_{k-1}$ at each step.

In order to obtain eigenvalues between the largest and smallest eigenvalues, we can adopt the shifted inverse power method. Here we replace A by A - αI so that all eigenvalues are shifted by the same amount since

$$(A - \alpha I)\, x_i = A x_i - \alpha I x_i = (\lambda_i - \alpha)\, x_i$$

The factor which determines how rapidly convergence takes place is now $|\lambda_1 - \alpha| / |\lambda_2 - \alpha|$, where $\lambda_1$ and $\lambda_2$ are the eigenvalues closest and next closest to α. Thus convergence can be greatly accelerated if α is chosen to be close to one of the eigenvalues. Each step of the method solves

$$(A - \alpha I)\, w_{k+1} = w_k$$

or

$$(A - \alpha I)^{-1} w_{k-1} = \sum_i e_i \frac{1}{(\lambda_i - \alpha)^k}\, x_i = w_k$$

If α is close to one of the eigenvalues (precomputed by another algorithm such as QR) then the algorithm will very rapidly converge to the eigenvector corresponding to the selected value of α. The standard procedure is to factor A - αI into LU and to solve U.x = (1,1,1,…,1) by back substitution.
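The shifted inverse power method can be sketched as below. Several things here are assumptions made for illustration: the shift is written `alpha`, the function names are hypothetical, the starting vector is fixed rather than random, and each step solves the shifted system afresh by Gaussian elimination with partial pivoting. A production code would factor the shifted matrix into LU once and reuse the factors at every step.

```python
import math

def solve(M, b):
    """Solve M x = b by Gaussian elimination with partial pivoting (M non-singular)."""
    n = len(M)
    aug = [[float(v) for v in row] + [float(bi)] for row, bi in zip(M, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(aug[i][c]))   # largest pivot
        aug[c], aug[p] = aug[p], aug[c]
        for i in range(c + 1, n):
            f = aug[i][c] / aug[c][c]
            aug[i] = [a - f * t for a, t in zip(aug[i], aug[c])]
    x = [0.0] * n
    for i in reversed(range(n)):                              # back substitution
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x

def shifted_inverse_power(A, alpha, w0, iterations=30):
    """Iterate (A - alpha I) w_new = w_old, rescaling w to unit length each step."""
    n = len(A)
    shifted = [[A[i][j] - (alpha if i == j else 0.0) for j in range(n)]
               for i in range(n)]
    w = list(w0)
    for _ in range(iterations):
        w = solve(shifted, w)
        norm = math.sqrt(sum(wi * wi for wi in w))
        w = [wi / norm for wi in w]
    Aw = [sum(a * b for a, b in zip(row, w)) for row in A]
    eigenvalue = sum(wi * awi for wi, awi in zip(w, Aw))      # Rayleigh quotient
    return eigenvalue, w

A = [[2.0, 1.0], [1.0, 2.0]]          # eigenvalues 3 and 1
lam, w = shifted_inverse_power(A, alpha=0.9, w0=[1.0, 0.0])
print(round(lam, 9), [round(c, 9) for c in w])
```

With the shift 0.9 the shifted eigenvalues are 0.1 and 2.1, so the unwanted component is reduced by a factor of 21 per step and the iteration settles on the eigenvector of the eigenvalue 1, which lies along (1, -1).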