Notes 17 - Wharton Statistics Department


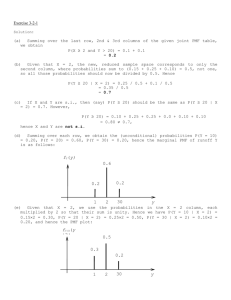

Statistics 510: Notes 17

Reading: Sections 5.6, 5.7. Hazard functions (Section 5.5.1): read for your own interest. I'll be happy to discuss this with you, but it will not be covered on the exam.

I. Gamma distribution: Suppose events occur according to a Poisson process, i.e., (a) the probability of an event occurring in a given small time period $\Delta t$ is approximately proportional to $\Delta t$; (b) the probability of two or more events occurring in a given small time period $\Delta t$ is much smaller than $\Delta t$; (c) the numbers of events occurring in two non-overlapping time periods are independent.

Suppose we start observing the process at some time (which we denote by time 0). The time until the first event occurs has an exponential($\lambda$) distribution. Let $X$ denote the time until the first $\alpha$ events occur, where $\alpha$ is a positive integer. Let $W$ denote the number of occurrences of the event in the interval $[0, x]$. Then $W$ is a Poisson random variable with parameter $\lambda x$. The cdf of $X$ can be obtained using $W$ as follows:

$$F_X(x) = P(X \le x) = 1 - P(X > x) = 1 - P(\text{fewer than } \alpha \text{ events occur in } [0, x]) = 1 - F_W(\alpha - 1) = 1 - \sum_{k=0}^{\alpha-1} e^{-\lambda x}\frac{(\lambda x)^k}{k!}.$$

Therefore,

$$\begin{aligned}
f_X(x) &= \frac{d}{dx}\left[1 - \sum_{k=0}^{\alpha-1} e^{-\lambda x}\frac{(\lambda x)^k}{k!}\right]
= \lambda e^{-\lambda x}\sum_{k=0}^{\alpha-1}\frac{(\lambda x)^k}{k!} - \lambda e^{-\lambda x}\sum_{k=1}^{\alpha-1}\frac{(\lambda x)^{k-1}}{(k-1)!} \\
&= \lambda e^{-\lambda x}\left[\sum_{k=0}^{\alpha-1}\frac{(\lambda x)^k}{k!} - \sum_{k=0}^{\alpha-2}\frac{(\lambda x)^k}{k!}\right]
= \lambda e^{-\lambda x}\frac{(\lambda x)^{\alpha-1}}{(\alpha-1)!}
= \frac{\lambda^{\alpha} x^{\alpha-1} e^{-\lambda x}}{(\alpha-1)!}, \qquad x > 0.
\end{aligned}$$

What we have just derived is a special case of the gamma family of probability distributions. Before examining the gamma in detail, we generalize the above density to include cases in which $\alpha$ is positive but not necessarily an integer. To do this, it is necessary to replace $(\alpha-1)!$ with a continuous function of (positive) $\alpha$, namely $\Gamma(\alpha)$, the latter reducing to $(\alpha-1)!$ when $\alpha$ is a positive integer. For any real number $\alpha > 0$, the gamma function (of $\alpha$) is given by

$$\Gamma(\alpha) = \int_0^{\infty} x^{\alpha-1} e^{-x}\,dx.$$

Let $X$ be a random variable such that

$$f(x) = \begin{cases} \dfrac{\lambda e^{-\lambda x}(\lambda x)^{\alpha-1}}{\Gamma(\alpha)}, & x \ge 0 \\ 0, & x < 0. \end{cases}$$

Then $X$ is said to have a gamma distribution with parameters $\alpha$ and $\lambda$. The mean and variance of the gamma distribution are $E(X) = \alpha/\lambda$ and $\mathrm{Var}(X) = \alpha/\lambda^2$.
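The derivation above can be checked numerically. The sketch below is illustrative, assuming integer $\alpha$ for the Poisson-sum cdf and using only Python's standard library (`math.gamma`, `math.factorial`); the helper names `gamma_pdf`, `gamma_cdf_poisson` and `integrate` are hypothetical. It compares $1 - \sum_{k=0}^{\alpha-1} e^{-\lambda x}(\lambda x)^k/k!$ with a Simpson's-rule integral of the gamma density, confirms $\Gamma(n) = (n-1)!$, and recovers $E(X) = \alpha/\lambda$ and $\mathrm{Var}(X) = \alpha/\lambda^2$ for a non-integer $\alpha$.

```python
import math

def gamma_pdf(x: float, alpha: float, lam: float) -> float:
    """Gamma(alpha, lam) density: lam * e^{-lam x} (lam x)^{alpha-1} / Gamma(alpha), x >= 0."""
    if x < 0:
        return 0.0
    return lam * math.exp(-lam * x) * (lam * x) ** (alpha - 1) / math.gamma(alpha)

def gamma_cdf_poisson(x: float, alpha: int, lam: float) -> float:
    """Integer alpha only: F_X(x) = 1 - P(W <= alpha - 1), W ~ Poisson(lam * x)."""
    if x <= 0:
        return 0.0
    return 1.0 - sum(math.exp(-lam * x) * (lam * x) ** k / math.factorial(k)
                     for k in range(alpha))

def integrate(f, a: float, b: float, n: int = 10_000) -> float:
    """Composite Simpson's rule on [a, b] with an even number n of subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

# Poisson-sum cdf versus numerical integral of the density (alpha = 3, lambda = 2)
alpha, lam = 3, 2.0
for x in (0.5, 1.0, 2.5):
    numeric = integrate(lambda t: gamma_pdf(t, alpha, lam), 0.0, x)
    print(x, gamma_cdf_poisson(x, alpha, lam), numeric)

# Gamma(n) = (n - 1)! for positive integers n
print(all(math.isclose(math.gamma(n), math.factorial(n - 1)) for n in range(1, 10)))

# Mean and variance for a non-integer alpha; the tail beyond 60 is negligible here
a, l = 2.5, 1.5
m = integrate(lambda t: t * gamma_pdf(t, a, l), 0.0, 60.0)
m2 = integrate(lambda t: t * t * gamma_pdf(t, a, l), 0.0, 60.0)
print(m, a / l, m2 - m ** 2, a / l ** 2)
```

The two cdf columns should agree to many decimal places; the numerical integration is only a cross-check, not a replacement for the closed form.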
The gamma family of distributions is a flexible family of probability distributions for modeling nonnegative valued random variables. Some gamma densities are shown in the figure below. Several other families of continuous distributions are given in Chapter 5.6.

III. Distribution of a function of a random variable (Chapter 5.7)

It is often the case that we know the probability distribution of a random variable and are interested in determining the distribution of some function of it. Suppose that we know the distribution of $X$ and we want to find the distribution of $g(X)$. To do so, it is necessary to express the event that $g(X) \le y$ in terms of $X$ being in some set.

Example 1: Suppose that a random variable $X$ has pdf

$$f_X(x) = \begin{cases} 6x(1-x), & 0 < x < 1 \\ 0, & \text{elsewhere.} \end{cases}$$

Let $Y$ be a random variable that equals $2X + 1$. What is the pdf of $Y$?

Note first that the random variable $Y$ maps the range of $X$ values $(0, 1)$ onto the interval $(1, 3)$. Let $1 < y < 3$. Then

$$F_Y(y) = P(Y \le y) = P(2X + 1 \le y) = P\left(X \le \frac{y-1}{2}\right) = F_X\left(\frac{y-1}{2}\right).$$

We have

$$F_X(t) = \begin{cases} 0, & t \le 0 \\ \int_0^t 6x(1-x)\,dx = 3t^2 - 2t^3, & 0 < t < 1 \\ 1, & t \ge 1. \end{cases}$$

Therefore,

$$F_Y(y) = F_X\left(\frac{y-1}{2}\right) = \begin{cases} 0, & y \le 1 \\ 3\left(\dfrac{y-1}{2}\right)^2 - 2\left(\dfrac{y-1}{2}\right)^3 = -\dfrac{y^3}{4} + \dfrac{3y^2}{2} - \dfrac{9y}{4} + 1, & 1 < y < 3 \\ 1, & y \ge 3. \end{cases}$$

Differentiating $F_Y(y)$ gives $f_Y(y)$:

$$f_Y(y) = \begin{cases} -\dfrac{3y^2}{4} + 3y - \dfrac{9}{4}, & 1 < y < 3 \\ 0, & \text{otherwise.} \end{cases}$$

In finding the distribution of functions of random variables, extreme care must be exercised in identifying the proper set of $x$'s that get mapped into the event $Y \le y$. The best approach is always to sketch a diagram.

Example 2: Let $X$ have the uniform density over the interval $(-1, 2)$:

$$f_X(x) = \begin{cases} \dfrac{1}{3}, & -1 < x < 2 \\ 0, & \text{elsewhere.} \end{cases}$$

Find the pdf of $Y$, where $Y = X^2$.

IV. Joint distribution functions (Chapter 6.1)

Thus far, we have focused on probability distributions for single random variables. However, we are often interested in probability statements concerning two or more random variables. The following examples are illustrative. In ecological studies, counts, modeled as random variables, of several species are often made.
One species is often the prey of another; clearly, the number of predators will be related to the number of prey. The joint probability distribution of the $x$, $y$ and $z$ components of wind velocity can be measured experimentally in studies of atmospheric turbulence. The joint distribution of the values of various physiological variables in a population of patients is often of interest in medical studies. A model for the joint distribution of age and length in a population of fish can be used to estimate the age distribution from the length distribution; the age distribution is relevant to setting reasonable harvesting policies.

The joint behavior of two random variables $X$ and $Y$, whether continuous or discrete, is determined by their joint cumulative distribution function (cdf):

$$F_{XY}(x, y) = P(X \le x, Y \le y).$$

The joint cdf gives the probability that the point $(X, Y)$ belongs to a semi-infinite rectangle in the plane, as shown in Figure 3.1 below. The probability that $(X, Y)$ belongs to a given rectangle is, from Figure 3.2,

$$P(x_1 < X \le x_2,\ y_1 < Y \le y_2) = F(x_2, y_2) - F(x_2, y_1) - F(x_1, y_2) + F(x_1, y_1).$$

In general, if $X_1, \ldots, X_n$ are jointly distributed random variables, their joint cdf is

$$F(x_1, \ldots, x_n) = P(X_1 \le x_1, X_2 \le x_2, \ldots, X_n \le x_n).$$

Two- and higher-dimensional versions of probability density functions and probability mass functions exist. We start with a detailed description of joint probability mass functions.

Joint probability mass functions: Let $X$ and $Y$ be discrete random variables defined on the same sample space that take on values $x_1, x_2, \ldots$ and $y_1, y_2, \ldots$, respectively. The joint probability mass function of $(X, Y)$ is

$$p(x_i, y_j) = P(X = x_i, Y = y_j).$$

Example 3: A fair coin is tossed three times independently. Let $X$ denote the number of heads on the first toss and $Y$ the total number of heads. Find the joint probability mass function of $X$ and $Y$.
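Example 3 is small enough to check by brute force. A minimal sketch, assuming a fair coin and encoding a head as 1 and a tail as 0; the function name `coin_joint_pmf` is hypothetical. It enumerates all $2^3$ equally likely outcomes and accumulates probability for each pair $(X, Y)$ using exact fractions.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

def coin_joint_pmf(n_tosses: int = 3) -> dict[tuple[int, int], Fraction]:
    """Joint pmf of X = heads on the first toss and Y = total heads, fair coin."""
    pmf: dict[tuple[int, int], Fraction] = defaultdict(Fraction)
    weight = Fraction(1, 2 ** n_tosses)  # every toss sequence is equally likely
    for outcome in product((0, 1), repeat=n_tosses):  # 1 = head, 0 = tail
        x, y = outcome[0], sum(outcome)
        pmf[(x, y)] += weight
    return dict(pmf)

joint = coin_joint_pmf()
for x in (0, 1):
    # Row x of the joint pmf for y = 0, 1, 2, 3 (missing pairs have probability 0)
    print(x, [str(joint.get((x, y), Fraction(0))) for y in range(4)])
```

Note that `Fraction` prints values in lowest terms, so a probability of 2/8 appears as 1/4.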
The joint pmf is given in the following table:

|       | $y = 0$ | $y = 1$ | $y = 2$ | $y = 3$ |
|-------|---------|---------|---------|---------|
| $x = 0$ | 1/8 | 2/8 | 1/8 | 0 |
| $x = 1$ | 0 | 1/8 | 2/8 | 1/8 |

Marginal probability mass functions: Suppose that we wish to find the pmf of $Y$ from the joint pmf of $X$ and $Y$.

$$p_Y(0) = P(Y = 0) = P(Y = 0, X = 0) + P(Y = 0, X = 1) = \frac{1}{8} + 0 = \frac{1}{8}$$

$$p_Y(1) = P(Y = 1) = P(Y = 1, X = 0) + P(Y = 1, X = 1) = \frac{2}{8} + \frac{1}{8} = \frac{3}{8}$$

In general, to find the pmf of $Y$, we simply sum down the appropriate column of the table giving the joint pmf of $X$ and $Y$. For this reason, $p_Y$ is called the marginal probability mass function of $Y$. Similarly, summing across the rows gives

$$p_X(x) = \sum_i p(x, y_i),$$

which is the marginal pmf of $X$.

The case for several random variables is analogous. If $X_1, \ldots, X_n$ are discrete random variables defined on the same sample space, their joint pmf is

$$p(x_1, \ldots, x_n) = P(X_1 = x_1, \ldots, X_n = x_n).$$

The marginal pmf of $X_1$, for example, is

$$p_{X_1}(x_1) = \sum_{x_2} \cdots \sum_{x_n} p(x_1, x_2, \ldots, x_n).$$

Example 4: One of the most important joint distributions is the multinomial distribution, which arises when a sequence of $n$ independent and identical experiments is performed, where each experiment can result in any one of $r$ possible outcomes, with respective probabilities $p_1, \ldots, p_r$, where $\sum_{i=1}^{r} p_i = 1$. If we let $X_i$ denote the number of the $n$ experiments that result in outcome $i$, then

$$P(X_1 = n_1, \ldots, X_r = n_r) = \frac{n!}{n_1! \cdots n_r!}\, p_1^{n_1} \cdots p_r^{n_r}$$

whenever $\sum_{i=1}^{r} n_i = n$.

Suppose that a fair die is rolled 9 times. What is the probability that 1 appears three times, 2 and 3 twice each, 4 and 5 once each, and 6 not at all?
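The marginal sums and the multinomial formula can both be sketched in a few lines. This is an illustrative sketch, assuming exact arithmetic via Python's `fractions` module and `math.prod` (Python 3.8+); the names `JOINT`, `marginal` and `multinomial_pmf` are hypothetical helpers, not from the notes. It sums the Example 3 joint pmf over one coordinate, then evaluates the multinomial pmf for the die question of Example 4.

```python
from fractions import Fraction
from math import factorial, prod

# Joint pmf of Example 3, keyed by (x, y); cells with probability 0 are omitted.
JOINT = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(2, 8), (0, 2): Fraction(1, 8),
    (1, 1): Fraction(1, 8), (1, 2): Fraction(2, 8), (1, 3): Fraction(1, 8),
}

def marginal(joint: dict, axis: int) -> dict[int, Fraction]:
    """Sum the joint pmf over the other coordinate (axis 0 gives p_X, axis 1 gives p_Y)."""
    out: dict[int, Fraction] = {}
    for key, p in joint.items():
        out[key[axis]] = out.get(key[axis], Fraction(0)) + p
    return out

def multinomial_pmf(counts: list[int], probs: list[Fraction]) -> Fraction:
    """n! / (n_1! ... n_r!) * p_1^{n_1} ... p_r^{n_r}, with n = sum(counts)."""
    n = sum(counts)
    coef = factorial(n) // prod(factorial(k) for k in counts)
    return coef * prod(p ** k for p, k in zip(probs, counts))

p_X = marginal(JOINT, axis=0)
p_Y = marginal(JOINT, axis=1)
print(p_Y[0], p_Y[1], p_X[0])

# Example 4: fair die rolled 9 times; counts listed for faces 1, 2, ..., 6
die = multinomial_pmf([3, 2, 2, 1, 1, 0], [Fraction(1, 6)] * 6)
print(die, float(die))
```

Working by hand, the coefficient is $9!/(3!\,2!\,2!\,1!\,1!\,0!) = 15120$, and multiplying by $(1/6)^9$ gives $35/23328$, roughly $0.0015$, which is what the sketch returns.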