Notes 9 - Wharton Statistics Department

advertisement

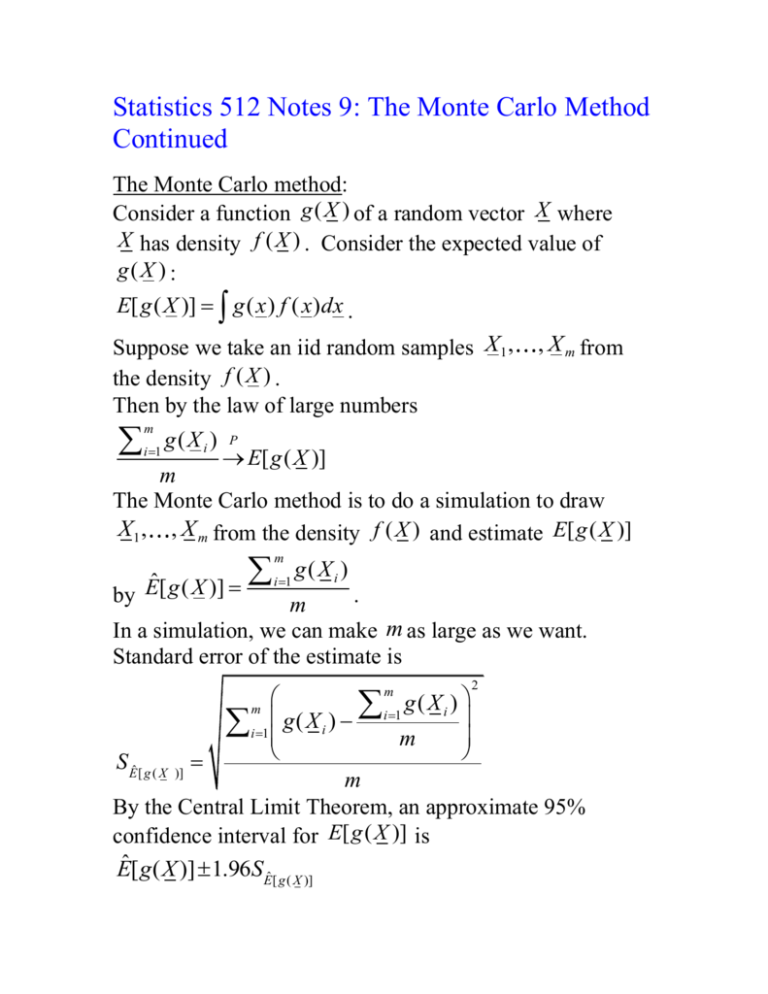

Statistics 512 Notes 9: The Monte Carlo Method

Continued

The Monte Carlo method:

Consider a function $g(X)$ of a random vector $X$, where $X$ has density $f(x)$. Consider the expected value of $g(X)$:

$$E[g(X)] = \int g(x) f(x)\,dx.$$

Suppose we take an iid random sample $X_1, \ldots, X_m$ from the density $f(x)$. Then by the law of large numbers,

$$\frac{\sum_{i=1}^{m} g(X_i)}{m} \xrightarrow{P} E[g(X)].$$

The Monte Carlo method is to do a simulation to draw $X_1, \ldots, X_m$ from the density $f(x)$ and estimate $E[g(X)]$ by

$$\hat{E}[g(X)] = \frac{\sum_{i=1}^{m} g(X_i)}{m}.$$

In a simulation, we can make $m$ as large as we want.

The standard error of the estimate is

$$SE\{\hat{E}[g(X)]\} = \sqrt{\frac{\frac{1}{m}\sum_{i=1}^{m}\left(g(X_i) - \frac{\sum_{j=1}^{m} g(X_j)}{m}\right)^2}{m}}.$$

By the Central Limit Theorem, an approximate 95% confidence interval for $E[g(X)]$ is

$$\hat{E}[g(X)] \pm 1.96\, SE\{\hat{E}[g(X)]\}.$$
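The recipe above (estimate, standard error, 95% interval) can be packaged as a small R function. This is a minimal sketch, not part of the original notes: the name mcest is hypothetical, it takes the simulated values $g(X_1), \ldots, g(X_m)$ as a vector, and it uses the 1/m variance from the SE formula. As an illustrative check it estimates $E[U^2] = 1/3$ for $U$ uniform(0,1).

```r
# Hypothetical helper (not from the notes): Monte Carlo estimate, standard
# error, and approximate 95% CI from simulated values gvals = g(X_1),...,g(X_m)
mcest <- function(gvals) {
  m <- length(gvals)
  est <- mean(gvals)
  # SE = sqrt( (1/m) * sum((g(X_i) - est)^2) / m )
  se <- sqrt(mean((gvals - est)^2) / m)
  list(estimate = est, se = se,
       lci = est - 1.96 * se, uci = est + 1.96 * se)
}

# Illustrative example: E[U^2] = 1/3 for U ~ uniform(0,1)
set.seed(1)
u <- runif(100000)
res <- mcest(u^2)
print(res)
```

Because $m$ is under our control, the interval can be made as narrow as we like by increasing the number of draws.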

Example: Monte Carlo estimation of $\pi$

Define the unit square as the square centered at (0.5, 0.5) with sides of length 1, and the unit circle as the circle centered at the origin with radius 1. The ratio of the area of the part of the unit circle that lies in the first quadrant to the area of the unit square is $\pi/4$.

Let $U_1$ and $U_2$ be iid uniform(0,1) random variables. Let $g(U_1, U_2) = 1$ if $(U_1, U_2)$ is in the unit circle and 0 otherwise. Then $E[g(U_1, U_2)] = \pi/4$.

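Monte Carlo method: Repeat the experiment of drawing $X = (U_1, U_2)$, with $U_1$ and $U_2$ iid uniform(0,1) random variables, $m$ times, and estimate $\pi$ by

$$\hat{\pi} = 4\,\frac{\sum_{i=1}^{m} g(U_{i1}, U_{i2})}{m}.$$

An approximate 95% confidence interval for $\pi$ is

$$\hat{\pi} \pm 1.96 \cdot 4 \sqrt{\frac{\frac{1}{m}\sum_{i=1}^{m}\left(g(U_{i1}, U_{i2}) - \frac{\sum_{j=1}^{m} g(U_{j1}, U_{j2})}{m}\right)^2}{m}}. \qquad (1)$$

Because $g(U_1, U_2)$ = 0 or 1, (1) is equivalent to

$$\hat{\pi} \pm 1.96 \cdot 4 \sqrt{\frac{\frac{\hat{\pi}}{4}\left(1 - \frac{\hat{\pi}}{4}\right)}{m}}.$$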
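Why (1) simplifies: for 0/1 values $g_i^2 = g_i$, so $\frac{1}{m}\sum_{i}(g_i - \bar{g})^2 = \bar{g} - \bar{g}^2 = \bar{g}(1 - \bar{g})$, and $\bar{g} = \hat{\pi}/4$. A quick numerical check in R, as a sketch with illustrative variable names and an arbitrary seed:

```r
# For 0/1 data, the general variance term in (1) equals phat * (1 - phat)
set.seed(2)
m <- 1000
g <- as.numeric(runif(m)^2 + runif(m)^2 < 1)  # indicators g(U_i1, U_i2)
phat <- mean(g)                               # equals pi-hat / 4
general <- mean((g - phat)^2)                 # (1/m) * sum (g_i - g-bar)^2
binary  <- phat * (1 - phat)                  # simplified form
print(c(general = general, binary = binary))
```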

In R, the command runif(n) draws n iid uniform (0,1) random variables.

Here is a function for estimating pi:

piest=function(m){
  #
  # Obtains the estimate of pi and an approximate 95% confidence
  # interval for the simulation discussed in Example 5.8.1
  #
  # m is the number of simulations
  #
  # Draw u1, u2 iid uniform (0,1) random variables
  u1=runif(m);
  u2=runif(m);
  cnt=rep(0,m);
  # chk=Vector which checks if (u1,u2) is in the unit circle
  chk=u1^2+u2^2-1;
  # cnt[i]=1 if (u1[i],u2[i]) is in the unit circle
  cnt[chk<0]=1;
  # Estimate of pi
  est=4*mean(cnt);
  # Standard error of the estimate (simplified form of (1) for 0/1 data)
  se=4*(mean(cnt)*(1-mean(cnt))/m)^.5;
  # Lower and upper 95% confidence interval endpoints
  lci=est-1.96*se;
  uci=est+1.96*se;
  list(estimate=est,lci=lci,uci=uci);
}

> piest(100000)
$estimate
[1] 3.13912

$lci
[1] 3.128931

$uci
[1] 3.149309

Back to Example 5.8.5:

What is the true size of the nominal 0.05-size t-test for random samples of size 20 from contaminated normal distribution A?

We want to estimate

$$E[I\{t(X_1, \ldots, X_{20}) > 1.729\}],$$

where 1.729 is the upper 0.05 critical value of the t distribution with 19 degrees of freedom.

Monte Carlo method:

$$\hat{E}[I\{t(X_1, \ldots, X_{20}) > 1.729\}] = \frac{\sum_{i=1}^{m} I\{t(x_{i,1}, \ldots, x_{i,20}) > 1.729\}}{m},$$

where $(x_{i,1}, \ldots, x_{i,20})$ is a random sample of size 20 from the contaminated normal distribution A.

[Here $X = (X_1, \ldots, X_{20})$, $f(X)$ is the density of a random sample of size 20 from the contaminated normal distribution A, and $g(X) = I\{t(X_1, \ldots, X_{20}) > 1.729\}$.]

How to draw a random observation from the contaminated normal distribution A?

(1) Draw a Bernoulli random variable B with p = 0.25;

(2) If B = 0, draw a random observation from the standard normal distribution. If B = 1, draw a random observation from the normal distribution with mean 0 and standard deviation 25.

In R, the command rnorm(n,mean=0,sd=1) draws a random sample of size n from the normal distribution with the specified mean and SD. The command rbinom(n,size=1,prob=p) draws a random sample of size n from the Bernoulli distribution with probability of success p.
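Steps (1) and (2) can be vectorized into a sampler for contaminated normal distribution A. This is a sketch; the function name rcontnorm and its default arguments are hypothetical (chosen to match p = 0.25 and SD 25 above). Since the mixture has mean 0, its variance is $0.75 \cdot 1 + 0.25 \cdot 25^2 = 157$, which gives a simple check.

```r
# Hypothetical helper: n draws from contaminated normal distribution A.
# Step (1): B ~ Bernoulli(probcont); step (2): N(0,1) if B = 0,
# N(0, sigmac^2) if B = 1
rcontnorm <- function(n, probcont = 0.25, sigmac = 25) {
  b <- rbinom(n, size = 1, prob = probcont)
  rnorm(n, mean = 0, sd = ifelse(b == 1, sigmac, 1))  # sd is vectorized
}

set.seed(3)
x <- rcontnorm(100000)
# Theoretical variance: 0.75 * 1 + 0.25 * 25^2 = 157
print(c(sample_var = var(x), theory = 0.75 + 0.25 * 25^2))
```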

R function for obtaining the Monte Carlo estimate $\hat{E}[I\{t(X_1, \ldots, X_{20}) > 1.729\}]$:

empalphacn=function(nsims){
  #
  # Obtains the empirical level of the test discussed in
  # Example 5.8.5
  #
  # nsims is the number of simulations
  #
  sigmac=25; # SD when observation is contaminated
  probcont=.25; # Probability of contamination
  alpha=.05; # Significance level for t-test
  n=20; # Sample size
  tc=qt(1-alpha,n-1); # Critical value for t-test
  ic=0; # ic will count the number of times t-test is rejected
  for(i in 1:nsims){
    # Bernoulli random variable which determines whether
    # each observation in sample is from standard normal or
    # normal with SD sigmac
    b=rbinom(n,size=1,prob=probcont);
    # Sample observations from standard normal when b=0 and
    # normal with SD sigmac when b=1
    samp=rnorm(n,mean=0,sd=1+b*(sigmac-1));
    # Calculate t-statistic for testing mu=0 based on sample
    tstat=mean(samp)/(var(samp)^.5/n^.5);
    # Check if we reject the null hypothesis for the t-test
    if(tstat>tc){
      ic=ic+1;
    }
  }
  # Estimated true significance level equals proportion of
  # rejections
  empalp=ic/nsims;
  # Standard error for estimate of true significance level
  se=((empalp*(1-empalp))/nsims)^.5;
  # Lower and upper 95% confidence interval endpoints
  lci=empalp-1.96*se;
  uci=empalp+1.96*se;
  list(empiricalalpha=empalp,lci=lci,uci=uci);
}

> empalphacn(100000)
$empiricalalpha
[1] 0.04086

$lci
[1] 0.039633

$uci
[1] 0.042087

Based on these results, the nominal 0.05-size t-test appears to be slightly conservative (its true size is below 0.05) when a sample of size 20 is drawn from this contaminated normal distribution.
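A useful sanity check on the simulation code itself (an addition, not part of the original example): with uncontaminated N(0,1) samples the t-test is exact, so the same Monte Carlo computation should give an empirical level close to 0.05. The sketch below is a vectorized variant using replicate(); the name empalpha_normal is hypothetical.

```r
# Empirical level of the one-sided t-test (reject when t > qt(1-alpha, n-1))
# for samples of size n from N(0,1); should be close to alpha
empalpha_normal <- function(nsims, n = 20, alpha = 0.05) {
  tc <- qt(1 - alpha, n - 1)
  rejects <- replicate(nsims, {
    samp <- rnorm(n)
    tstat <- mean(samp) / (sd(samp) / sqrt(n))
    tstat > tc
  })
  empalp <- mean(rejects)
  se <- sqrt(empalp * (1 - empalp) / nsims)
  list(empiricalalpha = empalp, lci = empalp - 1.96 * se, uci = empalp + 1.96 * se)
}

set.seed(4)
res <- empalpha_normal(20000)
print(res)
```

If this check gave a level far from 0.05, the problem would lie in the simulation code rather than in the t-test.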

Generating random observations with a given cdf F

Theorem 5.8.1: Suppose the random variable U has a uniform(0,1) distribution. Let F be the cdf of a random variable that is strictly increasing on some interval I, where F = 0 to the left of I and F = 1 to the right of I. Then the random variable $X = F^{-1}(U)$ has cdf F, where $F^{-1}(0)$ = left endpoint of I and $F^{-1}(1)$ = right endpoint of I.

Proof: A uniform distribution on (0,1) has the cdf $F_U(u) = u$ for $u \in (0,1)$. Using the fact that the cdf F is a strictly monotone increasing function on the interval I, we have, for x in I,

$$\begin{aligned}
P[X \le x] &= P[F^{-1}(U) \le x] \\
&= P[F(F^{-1}(U)) \le F(x)] \\
&= P[U \le F(x)] \\
&= F(x).
\end{aligned}$$
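As an illustration of Theorem 5.8.1 (the distribution is chosen here, not taken from the notes): for the exponential distribution with rate $\lambda$, $F(x) = 1 - e^{-\lambda x}$ on $I = (0, \infty)$, so $F^{-1}(u) = -\log(1 - u)/\lambda$. The function name rexp_inverse is hypothetical; R's built-in rexp is used only for comparison.

```r
# Inverse-cdf method for Exp(lambda): F^{-1}(u) = -log(1 - u) / lambda
rexp_inverse <- function(n, lambda = 1) {
  u <- runif(n)
  -log(1 - u) / lambda
}

set.seed(5)
x <- rexp_inverse(100000, lambda = 2)
# Compare with the exact mean 1/lambda = 0.5, exact median log(2)/lambda,
# and with draws from R's rexp
print(c(mean_inverse = mean(x), median_inverse = median(x),
        mean_rexp = mean(rexp(100000, rate = 2)),
        exact_median = log(2) / 2))
```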

This method is difficult to use when simulating random variables whose inverse cdf cannot be obtained in closed form.

Other methods for simulating a random variable:

(1) Accept-Reject Algorithm (Chapter 5.8.1)

(2) Markov chain Monte Carlo Methods (Chapter 11.4)

R commands for generating random variables

runif -- uniform random variables

rbinom -- binomial random variables

rnorm -- normal random variables

rt -- t random variables

rpois -- Poisson random variables

rexp -- exponential random variables

rgamma -- gamma random variables

rbeta -- beta random variables

rcauchy -- Cauchy random variables

rchisq -- chi-squared random variables

rf -- F random variables

rgeom -- geometric random variables

rnbinom -- negative binomial random variables
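A short usage sketch for several of these generators, comparing sample means with theoretical means. The parameter values are arbitrary choices for illustration. Note the parameterizations in R's stats package: rexp and rgamma take a rate by default, and rgeom counts failures before the first success, so its mean is $(1-p)/p$.

```r
set.seed(6)
n <- 100000
sims <- list(
  binomial    = rbinom(n, size = 10, prob = 0.3),   # mean 10 * 0.3 = 3
  poisson     = rpois(n, lambda = 4),               # mean 4
  exponential = rexp(n, rate = 2),                  # mean 1/2
  gamma       = rgamma(n, shape = 2, rate = 3),     # mean 2/3
  beta        = rbeta(n, shape1 = 2, shape2 = 5),   # mean 2/7
  chisq       = rchisq(n, df = 3),                  # mean 3
  geometric   = rgeom(n, prob = 0.25)               # mean (1 - p)/p = 3
)
theory <- c(binomial = 3, poisson = 4, exponential = 0.5, gamma = 2/3,
            beta = 2/7, chisq = 3, geometric = 3)
sample_means <- sapply(sims, mean)[names(theory)]
print(round(cbind(sample = sample_means, theory = theory), 3))
```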

Bootstrap Procedures

Bootstrap standard errors

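$X_1, \ldots, X_n$ iid with cdf F and variance $\sigma^2$. Then

$$\mathrm{Var}(\bar{X}_n) = \mathrm{Var}\left(\frac{X_1 + \cdots + X_n}{n}\right) = \frac{\mathrm{Var}(X_1)}{n} = \frac{\sigma^2}{n}, \qquad SD(\bar{X}_n) = \frac{\sigma}{\sqrt{n}}.$$

We estimate $SD(\bar{X}_n)$ by $\widehat{SE}(\bar{X}_n) = s/\sqrt{n}$, where $s$ is the sample standard deviation.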
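The formula $SD(\bar{X}_n) = \sigma/\sqrt{n}$ can itself be checked by Monte Carlo. A sketch assuming normal data with $\sigma = 2$ and $n = 25$ (illustrative choices), so the target is $2/5 = 0.4$; the last entry is the estimate $s/\sqrt{n}$ from a single sample.

```r
set.seed(7)
n <- 25; sigma <- 2
# Monte Carlo SD of many sample means vs sigma / sqrt(n)
xbars <- replicate(20000, mean(rnorm(n, mean = 0, sd = sigma)))
x <- rnorm(n, mean = 0, sd = sigma)          # one observed sample
res <- c(theory = sigma / sqrt(n),           # 0.4
         monte_carlo = sd(xbars),
         estimate = sd(x) / sqrt(n))         # s / sqrt(n)
print(res)
```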

What about $SD\{\mathrm{Median}(X_1, \ldots, X_n)\}$? This SD depends in a complicated way on the distribution F of the X's. How can we approximate it?

Real world: $F \longrightarrow X_1, \ldots, X_n \longrightarrow T_n = \mathrm{Median}(X_1, \ldots, X_n)$.

The bootstrap principle is to approximate the real world by assuming that $F = \hat{F}_n$, where $\hat{F}_n$ is the empirical cdf, i.e., the distribution that puts probability $1/n$ on each of $X_1, \ldots, X_n$. We simulate one draw from $\hat{F}_n$ by drawing one point at random from the original data set; repeating this n times with replacement gives a bootstrap sample.
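In R, drawing from $\hat{F}_n$ amounts to sample() with replace = TRUE. The sketch below uses a small made-up data vector (purely illustrative) and checks that each observation is picked with probability about $1/n = 1/5$.

```r
set.seed(8)
X <- c(3.1, 0.4, 2.2, 5.0, 1.7)       # illustrative data, n = 5
# Many draws from F-hat_n: each observation should appear ~1/5 of the time
draws <- sample(X, size = 50000, replace = TRUE)
print(table(draws) / length(draws))
# One bootstrap sample X*_1, ..., X*_n
Xstar <- sample(X, size = length(X), replace = TRUE)
print(Xstar)
```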

Bootstrap world: $\hat{F}_n \longrightarrow X_1^*, \ldots, X_n^* \longrightarrow T_n^* = \mathrm{Median}(X_1^*, \ldots, X_n^*)$

The bootstrap estimate of $SD\{\mathrm{Median}(X_1, \ldots, X_n)\}$ is $SD\{\mathrm{Median}(X_1^*, \ldots, X_n^*)\}$, where $X_1^*, \ldots, X_n^*$ are iid draws from $\hat{F}_n$.

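How to approximate $SD\{\mathrm{Median}(X_1^*, \ldots, X_n^*)\}$? The Monte Carlo method. For iid draws $X_1, \ldots, X_m$,

$$\frac{1}{m}\sum_{i=1}^{m}\left(g(X_i) - \frac{1}{m}\sum_{r=1}^{m} g(X_r)\right)^2 = \frac{1}{m}\sum_{i=1}^{m} g(X_i)^2 - \left(\frac{1}{m}\sum_{i=1}^{m} g(X_i)\right)^2 \xrightarrow{P} E[g(X)^2] - \left(E[g(X)]\right)^2 = \mathrm{Var}[g(X)].$$

Taking square roots, the SD of the simulated values converges to $SD[g(X)]$; applied with $g$ = median of a bootstrap sample, this approximates $SD\{\mathrm{Median}(X_1^*, \ldots, X_n^*)\}$.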
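A numerical check of the identity and the limit above, as a sketch with an illustrative choice $g(X) = X$ and $X \sim$ Exp(1), so that $\mathrm{Var}[g(X)] = 1$:

```r
set.seed(9)
m <- 100000
gx <- rexp(m, rate = 1)                       # g(X_i) = X_i, Var[g(X)] = 1
centered <- mean((gx - mean(gx))^2)           # (1/m) sum (g(X_i) - g-bar)^2
moments  <- mean(gx^2) - mean(gx)^2           # (1/m) sum g(X_i)^2 - g-bar^2
print(c(centered = centered, moments = moments, true_var = 1))
```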

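Bootstrap Standard Error Estimation for Statistic $T_n = g(X_1, \ldots, X_n)$:

1. Draw $X_1^*, \ldots, X_n^*$ iid from $\hat{F}_n$.

2. Compute $T_n^* = g(X_1^*, \ldots, X_n^*)$.

3. Repeat steps 1 and 2 $m$ times to get $T_{n,1}^*, \ldots, T_{n,m}^*$.

4. Let

$$se_{boot} = \sqrt{\frac{1}{m}\sum_{i=1}^{m}\left(T_{n,i}^* - \frac{1}{m}\sum_{r=1}^{m} T_{n,r}^*\right)^2}.$$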
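Steps 1-4 work for any statistic, not only the median. A minimal generic sketch; the name bootse and its arguments are hypothetical, and it uses the 1/m divisor of step 4. For the sample mean the result should be close to $s/\sqrt{n}$ (exactly $\sqrt{(n-1)/n}\,s/\sqrt{n}$ in the limit $m \to \infty$).

```r
# Hypothetical generic helper: bootstrap SE of stat(X_1, ..., X_n)
bootse <- function(X, stat, m = 2000) {
  n <- length(X)
  # Steps 1-3: m bootstrap samples and their statistics T*_{n,1..m}
  tstar <- replicate(m, stat(sample(X, size = n, replace = TRUE)))
  # Step 4: SD of the T* values with divisor m
  sqrt(mean((tstar - mean(tstar))^2))
}

set.seed(10)
X <- rnorm(50)
se_mean <- bootse(X, mean)
se_median <- bootse(X, median)
print(c(boot_mean = se_mean, formula_mean = sd(X) / sqrt(50),
        boot_median = se_median))
```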

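The bootstrap involves two approximations:

$$SD_F(T_n) \;\underset{\text{not so small approx. error}}{\approx}\; SD_{\hat{F}_n}(T_n) \;\underset{\text{small approx. error}}{\approx}\; se_{boot}$$

The first error comes from replacing F by $\hat{F}_n$ and is limited by the sample size n; the second is Monte Carlo error, which we can make small by taking m large.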
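The two approximations can be seen side by side when F is known. In this sketch F = Exp(1) and n = 50 are illustrative choices: $SD_F(T_n)$ for the median is itself approximated by simulating many samples from F, while $se_{boot}$ uses only one observed sample. The gap between the two numbers mostly reflects the first (not so small) error.

```r
set.seed(11)
n <- 50
# Approximates SD_F(T_n): simulate many samples from F itself
true_sd <- sd(replicate(20000, median(rexp(n))))
# One observed sample; bootstrap from F-hat_n
X <- rexp(n)
boot_meds <- replicate(10000, median(sample(X, n, replace = TRUE)))
se_boot <- sd(boot_meds)
print(c(SD_F = true_sd, se_boot = se_boot))
```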

R function for bootstrap estimate of SE(Median)

bootstrapmedianfunc=function(X,bootreps){
  medianX=median(X);
  # vector that will store the bootstrapped medians
  bootmedians=rep(0,bootreps);
  for(i in 1:bootreps){
    # Draw a sample of size n from X with replacement and
    # calculate median of sample
    Xstar=sample(X,size=length(X),replace=TRUE);
    bootmedians[i]=median(Xstar);
  }
  # SD of the bootstrapped medians; var() divides by bootreps-1
  # rather than bootreps, a negligible difference for large bootreps
  seboot=var(bootmedians)^.5;
  list(medianX=medianX,seboot=seboot);
}

Example: In a study of the natural variability of rainfall, the rainfall of summer storms was measured by a network of rain gauges in southern Illinois for the year 1960.

> rainfall=c(.02,.01,.05,.21,.003,.45,.001,.01,2.13,.07,.01,.01,
  .001,.003,.04,.32,.19,.18,.12,.001,1.1,.24,.002,.67,.08,.003,
  .02,.29,.01,.003,.42,.27,.001,.001,.04,.01,1.72,.001,.14,.29,
  .002,.04,.05,.06,.08,1.13,.07,.002)
> median(rainfall)
[1] 0.045
> bootstrapmedianfunc(rainfall,10000)
$medianX
[1] 0.045

$seboot
[1] 0.02167736