Chapter 18: Sampling Distribution Models

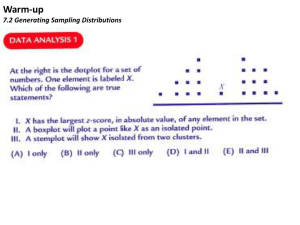

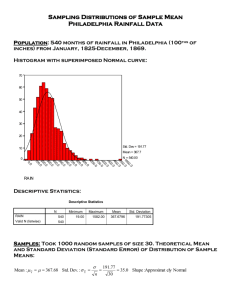

How can surveys conducted at essentially the same time by organizations asking the same questions get different results? You probably accept easily that observations vary, but how much variability among polls should we expect to see? The proportions vary from sample to sample because the samples are composed of different people.

The Central Limit Theorem for Sample Proportions

We want to imagine the results from all the random samples of the same size that we didn’t take. What would the histogram of all the sample proportions look like? The center of the histogram will be at the true proportion in the population, which we call $p$. How about the shape of the histogram? To figure this out, we could simulate a bunch of random samples that we didn’t really draw. It should be no surprise that we don’t get the same proportion for each sample we draw. Each $\hat{p}$ comes from a different simulated sample.

A histogram of the proportions from all the possible samples is called the sampling distribution of the proportions. It turns out that the sampling distribution of the proportions is centered at the true proportion in the population ($p$) and is unimodal and symmetric in shape. It looks like we can use the Normal model for the histogram of the sample proportions.

Sample proportions vary from sample to sample, and that is one of the very powerful ideas of this course. A sampling distribution model for how a sample proportion varies from sample to sample allows us to quantify that variation and to talk about how likely it is that we’d observe a sample proportion in any particular interval. (For example, if you took several different 10-person random samples asking whether or not people believed in ghosts, how likely is it that one group would get 10% while another would get 90%? Is there a reason why these results occurred? Was the survey biased? What’s going on?)
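The simulation described above can be sketched in a few lines of Python. This is only an illustration, not the simulation from the textbook: the true proportion `p_true = 0.45`, the sample size of 500, the 2000 repetitions, and the function name `simulate_sample_proportions` are all assumptions chosen for the example.

```python
import random
import statistics

def simulate_sample_proportions(p, n, num_samples, seed=0):
    """Draw num_samples random samples of size n from a population in which
    a fraction p are 'successes', and return each sample's proportion."""
    rng = random.Random(seed)
    proportions = []
    for _ in range(num_samples):
        # Each sampled individual is a success with probability p.
        successes = sum(1 for _ in range(n) if rng.random() < p)
        proportions.append(successes / n)
    return proportions

# Hypothetical values, chosen only for illustration.
p_true = 0.45
p_hats = simulate_sample_proportions(p_true, n=500, num_samples=2000)

center = statistics.mean(p_hats)   # should land close to p_true
spread = statistics.stdev(p_hats)  # sample-to-sample variability of p-hat
print(f"mean of simulated p-hats: {center:.4f}")
print(f"SD of simulated p-hats:   {spread:.4f}")
```

Plotting `p_hats` as a histogram (with any plotting tool) should show the unimodal, symmetric shape centered near `p_true`.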
To use the Normal model we need two parameters: the mean and the standard deviation. We put the mean, $\mu$, at the center of the histogram, $p$. Once we know the mean $p$, we can find the standard deviation with the formula

$$\sigma(\hat{p}) = SD(\hat{p}) = \sqrt{\frac{pq}{n}},$$

where $q = 1 - p$ and $n$ is the sample size. As long as the sample size is reasonably large, we can model the distribution of sample proportions with the probability model $N\left(p, \sqrt{\frac{pq}{n}}\right)$. And don’t forget that once you have a Normal model, you can use the 68-95-99.7 Rule or look up other probabilities using a table or calculator.

This is what we mean by sampling error. It’s not really an error at all! It’s just the variability you’d expect to see from one sample to another. A better term would be sampling variability.

The Normal model becomes a better and better representation of the distribution of the sample proportions as the sample size gets bigger. (Formally, we say the claim is true in the limit as $n$ grows.)

Assumptions & Conditions

To use a model, we usually must make some assumptions. To use the sampling distribution model for sample proportions, we need two assumptions:
1. The Independence Assumption: the sampled values must be independent of each other.
2. The Sample Size Assumption: the sample size $n$ must be large enough.

Conditions:
1. Randomization Condition: if your data come from an experiment, subjects should have been randomly assigned to treatments. If you have a survey, your sample should be a simple random sample of the population. The sampling method should not be biased, and the data should be representative of the population.
2. 10% Condition: the sample size $n$ must be no larger than 10% of the population.
3. Success/Failure Condition: the sample size has to be big enough that we expect at least 10 successes and at least 10 failures.

The last two conditions seem to pull in opposite directions: the Success/Failure Condition asks for a large sample, while the 10% Condition caps the sample size. As always, you would be correct to say that a larger sample is better.
It’s just that if the sample were more than 10% of the population, we’d need to use different methods to analyze the data. Luckily, this is not usually a problem in practice.

A Sampling Distribution Model for a Proportion

We’ve simulated repeated samples and looked at a histogram of the sample proportions. We modeled that histogram with a Normal model. We model it because this model gives us insight into how much the sample proportion can vary from sample to sample. The fact that the sample proportion is a random variable taking on a different value in each random sample, and that we can say something specific about the distribution of those values, is a fundamental insight that will be used in each of the next four chapters. No longer is a proportion something we just compute for a set of data. We now see it as a random quantity that has a probability distribution, and thanks to Laplace we have a model for that distribution. We call that the sampling distribution model for the proportion.

SAMPLING DISTRIBUTION MODEL FOR A PROPORTION
Provided that the sampled values are independent and the sample size is large enough, the sampling distribution of $\hat{p}$ is modeled by a Normal model with mean $\mu(\hat{p}) = p$ and standard deviation $\sigma(\hat{p}) = SD(\hat{p}) = \sqrt{\frac{pq}{n}}$.

Sampling models are what make statistics work. They inform us about the amount of variation we should expect when we sample. The sampling model quantifies the variability, telling us how surprising any sample proportion is. Rather than thinking about the sample proportion as a fixed quantity calculated from our data, we now think of it as a random variable: our value is just one of the many we might have seen had we chosen a different random sample.

Example: The Centers for Disease Control and Prevention report that 22% of 18-year-old women in the US have a body mass index (BMI) of 25 or higher, a value considered by the National Heart, Lung, and Blood Institute to be associated with increased health risk.
As part of a routine health check at a large college, the physical education department usually requires students to come in to be measured and weighed. This year, the department decided to try out a self-report system. It asked 200 randomly selected female students to report their heights and weights. Only 31 of these students had BMIs greater than 25. Is this proportion of high-BMI students unusually small?

Example: Suppose in a large class of introductory statistics students, the professor has each person toss a coin 16 times and calculate the proportion of his or her tosses that were heads. Suppose the class repeats the coin-tossing experiment.
a) The students toss the coins 25 times each now. Use the 68-95-99.7 Rule to describe the sampling distribution model.
b) Confirm that you can use a Normal model here.
c) They increase the number of tosses to 64 each. Draw and label the appropriate sampling distribution model.
d) Explain how the sampling distribution model changes as the number of tosses increases.

End of Day 1: Complete pages 432-433 #2, 5, 6, 8, 9

Example: Suppose that about 13% of the population is left-handed. A 200-seat auditorium has been built with 15 left-handed seats, seats that have the built-in desk on the left rather than the right arm of the chair.
Question: In a class of 90 students, what’s the probability that there will not be enough seats for the left-handed students?

What About Quantitative Data?

The good news is that we can do something similar when we talk about quantitative data!

Simulating the Sampling Distribution of a Mean

Please read/consult pages 420-421 for the simple simulation of dice tosses and their averages. Remember that the Law of Large Numbers says that as the sample size gets larger, each sample average is more likely to be closer to the population mean. The shape of the distribution becomes bell-shaped, and not just bell-shaped: it’s approaching the Normal model.
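A rough version of the dice simulation can be sketched as follows. It is not the textbook’s simulation from pages 420-421; the numbers of dice (1, 2, 5, 20), the 10,000 repetitions, and the name `simulate_dice_means` are assumptions made for illustration.

```python
import random
import statistics

def simulate_dice_means(num_dice, num_trials, seed=1):
    """Toss num_dice fair dice num_trials times and return the average
    shown on the dice for each trial."""
    rng = random.Random(seed)
    means = []
    for _ in range(num_trials):
        total = sum(rng.randint(1, 6) for _ in range(num_dice))
        means.append(total / num_dice)
    return means

# results[num_dice] = (mean of the averages, SD of the averages)
results = {}
for num_dice in (1, 2, 5, 20):
    means = simulate_dice_means(num_dice, num_trials=10_000)
    results[num_dice] = (statistics.mean(means), statistics.stdev(means))
    center, spread = results[num_dice]
    print(f"{num_dice:>2} dice: mean of averages = {center:.3f}, "
          f"SD of averages = {spread:.3f}")
```

The averages stay centered near 3.5, the mean of a single fair die, while their spread shrinks as more dice are averaged.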
But can we count on this happening for situations other than dice throws? It turns out that Normal models work well amazingly often.

The Fundamental Theorem of Statistics

The dice simulation may look like a special situation, but it turns out that what we saw with dice is true for means of repeated samples in almost every situation. And there are almost no conditions at all! The sampling distribution of any mean becomes more nearly Normal as the sample size grows. All we need is for the observations to be independent and collected with randomization. We don’t even care about the shape of the population distribution!

This surprising fact is the result Laplace proved in a fairly general form in 1810. At the time, Laplace’s theorem caused quite a stir because it is so unintuitive. Laplace’s result is called the Central Limit Theorem (CLT). Not only does the distribution of means of many random samples get closer and closer to a Normal model as the sample size grows, this is true regardless of the shape of the population distribution! It works better and faster the closer the population distribution is to a Normal model. And it works better for larger samples.

THE CENTRAL LIMIT THEOREM (CLT)
The mean of a random sample is a random variable whose sampling distribution can be approximated by a Normal model. The larger the sample, the better the approximation will be.
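To see the theorem at work on a population that is far from Normal, here is a minimal sketch. The strongly right-skewed exponential population (mean 1), the sample sizes, and the helper names `skewness` and `sample_means` are assumptions chosen for illustration; skewness near 0 is used here as a rough stand-in for “looks symmetric”.

```python
import random
import statistics

def skewness(values):
    """Average cubed z-score: roughly 0 for a symmetric distribution,
    positive for one with a long right tail."""
    m = statistics.mean(values)
    s = statistics.pstdev(values)
    return statistics.mean(((x - m) / s) ** 3 for x in values)

def sample_means(n, num_samples, rng):
    """Means of num_samples random samples of size n drawn from a
    right-skewed exponential population with mean 1 (an assumed example)."""
    return [sum(rng.expovariate(1.0) for _ in range(n)) / n
            for _ in range(num_samples)]

rng = random.Random(42)
skew_by_n = {}
center_by_n = {}
for n in (1, 5, 30, 100):
    means = sample_means(n, 5000, rng)
    skew_by_n[n] = skewness(means)
    center_by_n[n] = statistics.mean(means)
    print(f"n = {n:>3}: mean of sample means = {center_by_n[n]:.3f}, "
          f"skewness = {skew_by_n[n]:.3f}")
```

With `n = 1` the “sample means” are just individual observations and keep the skew of the population; as `n` grows, the skewness of the sample means moves toward 0 while their center stays at the population mean.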
Assumptions & Conditions

Independence Assumption: the sampled values must be independent of each other.
Sample Size Assumption: the sample size must be sufficiently large.
Randomization Condition: the data values must be sampled randomly, or the concept of a sampling distribution makes no sense.
10% Condition: when the sample is drawn without replacement, the sample size, $n$, should be no more than 10% of the population.
Large Enough Sample Condition: although the CLT tells us that a Normal model is useful in thinking about the behavior of sample means when the sample size is large enough, it doesn’t tell us how large a sample we need. The truth is, it depends; there’s no one-size-fits-all rule. If the population is unimodal and symmetric, even a fairly small sample is okay. If the population is strongly skewed, it can take a pretty large sample to allow use of a Normal model to describe the distribution of sample means. For now you’ll just need to think about your sample size in the context of what you know about the population, and then tell whether you believe the Large Enough Sample Condition has been met.

But Which Normal Model Should We Use?

For means, our center is at the population mean. What about standard deviations, though? Means vary less than the individual observations. Which would be more surprising: having one person in your stat class who is over 6’9” tall, or having the mean height of 100 students taking the course be over 6’9”? The second situation just won’t happen, because means have smaller standard deviations than individuals.

THE SAMPLING DISTRIBUTION MODEL FOR A MEAN (CLT)
When a random sample is drawn from any population with mean $\mu$ and standard deviation $\sigma$, its sample mean, $\bar{y}$, has a sampling distribution with the same mean $\mu$ but whose standard deviation is $\frac{\sigma}{\sqrt{n}}$. No matter what population the random sample comes from, the shape of the sampling distribution is approximately Normal as long as the sample size is large enough.
The larger the sample used, the more closely the Normal approximates the sampling distribution for the mean.

So, when we have categorical data, we calculate a sample proportion, $\hat{p}$. The sampling distribution of this random variable has a Normal model with a mean at the true proportion $p$ and a standard deviation of $SD(\hat{p}) = \sqrt{\frac{pq}{n}}$. (We’ll use this model in Chapters 19-22.)

When we have quantitative data, we calculate a sample mean, $\bar{y}$. The sampling distribution of this random variable has a Normal model with a mean at the true mean, $\mu$, and a standard deviation of $SD(\bar{y}) = \frac{\sigma}{\sqrt{n}}$. (We’ll use this model in Chapters 23-25.)

These are standard deviations of the statistics $\hat{p}$ and $\bar{y}$. They both have a square root of $n$ in the denominator. That tells us that the larger the sample, the less either statistic will vary.

Example: A college physical education department asked a random sample of 200 female students to self-report their heights and weights, but the percentage of students with BMIs over 25 seemed suspiciously low. One possible explanation may be that the respondents “shaded” their weights down a bit. The CDC reports that the mean weight of 18-year-old women is 143.74 lbs, with a standard deviation of 51.54 lbs, but these 200 randomly selected women reported a mean weight of only 140 lbs.
Question: Based on the Central Limit Theorem and the 68-95-99.7 Rule, does the mean weight in this sample seem exceptionally low, or might this just be random sample-to-sample variation?

Example: The Centers for Disease Control and Prevention reports that the mean weight of adult men in the US is 190 lbs with a standard deviation of 59 lbs.
Question: An elevator in our building has a weight limit of 10 persons or 2500 lbs. What’s the probability that if 10 men get on the elevator, they will overload its weight limit?

Means vary less than individual data values. Averages should be more consistent for larger groups.
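As a rough numerical check on the self-reported weights example, the sketch below applies $SD(\bar{y}) = \sigma/\sqrt{n}$ to the figures given above and then shows how the standard deviation changes with the sample size. The helper name `sd_of_mean` and the list of alternative sample sizes are assumptions for illustration, and the Normal probability relies on the CLT conditions being met.

```python
import math
from statistics import NormalDist

def sd_of_mean(sigma, n):
    """Standard deviation of the sample mean: sigma / sqrt(n)."""
    return sigma / math.sqrt(n)

# Figures from the self-reported weights example above.
mu, sigma, n = 143.74, 51.54, 200
observed_mean = 140

sd_ybar = sd_of_mean(sigma, n)
z = (observed_mean - mu) / sd_ybar
# Probability of a sample mean this low or lower under the Normal model.
prob_lower = NormalDist().cdf(z)

print(f"SD of the sample mean: {sd_ybar:.2f} lbs")
print(f"z-score of the observed mean: {z:.2f}")
print(f"P(sample mean <= {observed_mean}): {prob_lower:.3f}")

# How the SD of the mean shrinks for other (hypothetical) sample sizes.
for size in (50, 200, 800, 3200):
    print(f"n = {size:>4}: SD of mean = {sd_of_mean(sigma, size):.2f} lbs")
```

The observed mean sits roughly one standard deviation below the population mean, which the 68-95-99.7 Rule treats as ordinary sample-to-sample variation.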
It’s hard to get the standard deviation down much further, though, because that nasty square root limits how much we can make a sample tell about the population: to cut the standard deviation in half, we need a sample four times as large. This is an example of something that’s known as the Law of Diminishing Returns.

The Real World and the Model World

BE CAREFUL! We have been slipping smoothly between the real world, in which we draw random samples of data, and a magical mathematical model world, in which we describe how the sample means and proportions we observe in the real world behave as random variables in all the random samples that we might have drawn. Now we have two distributions to deal with. The first is the real-world distribution of the sample, which we might display with a histogram (for quantitative data) or a bar chart or table (for categorical data). The second is the math-world sampling distribution model of the statistic, a Normal model based on the Central Limit Theorem. Don’t confuse the two.

For example, don’t mistakenly think the CLT says that the data are Normally distributed as long as the sample is large enough. In fact, as samples get larger, we expect the distribution of the data to look more and more like the population from which they are drawn (skewed, bimodal, whatever) but not necessarily Normal. You can collect a sample of CEO salaries for the next 1000 years, but the histogram will never look Normal. The Central Limit Theorem doesn’t talk about the distribution of the data from the sample. It talks about the sample means and sample proportions of many different random samples drawn from the same population.

Just Checking:
1. Human gestation times have a mean of about 266 days, with a standard deviation of about 16 days. If we record the gestation times of a sample of 100 women, do we know that a histogram of the times will be well modeled by a Normal model?
2. Suppose we look at the average gestation times for a sample of 100 women.
If we imagined all the possible random samples of 100 women we could take and looked at the histogram of all the sample means, what shape would it have?
3. Where would the center of that histogram be?
4. What would be the standard deviation of that histogram?

Sampling Distribution Models

At the heart of sampling distribution models is the idea that the statistic itself is a random variable. We can’t know what our statistic will be because it comes from a random sample. This sample-to-sample variability is what generates the sampling distribution. The sampling distribution shows us the distribution of possible values that the statistic could have had.

The two basic truths about sampling distributions are:
1. Sampling distributions arise because samples vary. Each random sample will contain different cases and, so, a different value of the statistic.
2. Although we can always simulate a sampling distribution, the Central Limit Theorem saves us the trouble for means and proportions.

Example: A tire manufacturer designed a new tread pattern for its all-weather tires. Repeated tests were conducted on cars of approximately the same weight traveling at 60 miles per hour. The tests showed that the new tread pattern enables the cars to stop completely in an average distance of 125 feet with a standard deviation of 6.5 feet, and that the stopping distances are approximately Normally distributed.
(a) What is the 70th percentile of the distribution of stopping distances?
(b) What is the probability that at least 2 cars out of 5 randomly selected cars in the study will stop in a distance that is greater than the distance calculated in part (a)?
(c) What is the probability that a randomly selected sample of 5 cars in the study will have a mean stopping distance of at least 130 feet?

Example: