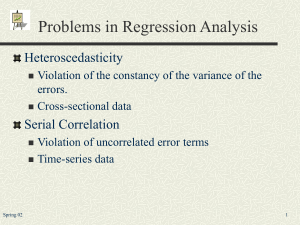

Lecture 6 - Testing Restrictions on the Disturbance Process


(References – Sections 2.7 and 2.10, Hayashi)

We have developed sufficient conditions for the consistency and asymptotic normality of the OLS estimator that allow for 1) conditionally heteroskedastic and serially correlated regressors and 2) conditionally heteroskedastic, serially uncorrelated disturbances.

In this lecture we will consider testing the disturbances for conditional heteroskedasticity and serial correlation.

It is clear why we would want to test for serial correlation in the disturbances: one of the maintained assumptions of the model is that there is no serial correlation.

The case for testing for conditional heteroskedasticity is less compelling, since our theory provides procedures that do not depend on whether the disturbances are conditionally homoskedastic or conditionally heteroskedastic. But such tests might still be useful.

We showed last time that, under assumptions A.1-A.5, if the disturbances are conditionally homoskedastic, the inference procedures appropriate in the classical linear regression model with strictly exogenous regressors and spherical disturbances can be applied for testing and interval construction, and that these procedures will be asymptotically valid.

If the disturbances are conditionally homoskedastic, procedures that take this into account may perform better in finite samples. (Monte Carlo studies might be helpful in this regard.)

If the disturbances are conditionally heteroskedastic, the OLS estimator is not asymptotically efficient, whereas the FGLS estimator will be. (To apply the FGLS estimator we need to be able to model the conditional heteroskedasticity and to have a consistent estimator of the parameters of that model.)

The most popular nonparametric tests for serial correlation (the Box-Pierce and Ljung-Box tests) rely on the assumption that the disturbances are conditionally homoskedastic.

There are two ways to approach these testing problems: parametric tests and nonparametric tests.

A parametric test specifies a model for the serial correlation or the conditional heteroskedasticity and then tests a set of restrictions on the parameters of that model.

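For instance, we might think that if the disturbances are conditionally heteroskedastic, it is because they follow the first-order ARCH process

$$\varepsilon_t = v_t\sqrt{\alpha_0 + \alpha_1\varepsilon_{t-1}^2},$$

where $\{v_t\}$ is i.i.d. with mean zero and unit variance, so that $E(\varepsilon_t^2 \mid \varepsilon_{t-1}, \varepsilon_{t-2}, \ldots) = \alpha_0 + \alpha_1\varepsilon_{t-1}^2$.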

In this case, conditional homoskedasticity is the restriction that $\alpha_1 = 0$, so a natural way to test for conditional homoskedasticity would be to construct a test of the null hypothesis $H_0\!: \alpha_1 = 0$.
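To make the ARCH(1) example concrete, here is a minimal Python sketch that simulates the process. It assumes $v_t$ is i.i.d. standard normal and starts the recursion at $\varepsilon_0 = 0$. Both choices are illustrative, and `simulate_arch1` is a hypothetical helper, not a library function.

```python
import random


def simulate_arch1(alpha0, alpha1, T, seed=0):
    """Simulate eps_t = v_t * sqrt(alpha0 + alpha1 * eps_{t-1}**2).

    Illustrative assumptions: v_t ~ i.i.d. N(0, 1) and eps_0 = 0.
    Returns the simulated disturbances and their conditional variances h_t.
    """
    rng = random.Random(seed)
    eps_prev = 0.0
    eps, h = [], []
    for _ in range(T):
        h_t = alpha0 + alpha1 * eps_prev ** 2   # E(eps_t^2 | past)
        e_t = rng.gauss(0.0, 1.0) * h_t ** 0.5  # scale the i.i.d. shock
        eps.append(e_t)
        h.append(h_t)
        eps_prev = e_t
    return eps, h


# Under H0: alpha1 = 0 the conditional variance never moves.
_, h_null = simulate_arch1(alpha0=1.0, alpha1=0.0, T=5)
print(h_null)  # [1.0, 1.0, 1.0, 1.0, 1.0]
```

With $\alpha_1 > 0$, a large shock raises next period's conditional variance. This produces the volatility clustering that an ARCH test looks for.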

A nonparametric test does not require you to formulate a specific model of the conditional heteroskedasticity or the serial correlation under the alternative hypothesis.

Testing for Conditional Heteroskedasticity

Most tests for conditional heteroskedasticity in time series regressions are parametric tests that formulate the possible heteroskedasticity in terms of an ARCH-type model. These models will be discussed in more detail in Econ 674.

Note: Engle’s $TR^2$ (Lagrange multiplier) test for ARCH effects belongs here.
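These notes only name Engle’s test. A common form for ARCH(1) regresses the squared residuals on a constant and their first lag, then compares $TR^2$ with the $\chi^2(1)$ critical value 3.841. The sketch below uses a hypothetical function name, `engle_arch1_lm`. It multiplies $R^2$ by the $T-1$ usable observations. For illustration it is applied directly to simulated disturbances rather than to regression residuals: one i.i.d. series, and one ARCH(1) series with $\alpha_0 = 1$ and $\alpha_1 = 0.5$.

```python
import random

CHI2_1_CRIT_5PCT = 3.841  # 95th percentile of the chi2(1) distribution


def engle_arch1_lm(resid):
    """TR^2 statistic for ARCH(1) effects in a list of residuals.

    Auxiliary regression: e_t^2 on a constant and e_{t-1}^2. With one regressor
    plus a constant, R^2 is the squared sample correlation of y_t = e_t^2 and
    x_t = e_{t-1}^2. Uses n = T - 1 usable observations.
    """
    y = [e ** 2 for e in resid[1:]]
    x = [e ** 2 for e in resid[:-1]]
    n = len(y)
    my, mx = sum(y) / n, sum(x) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return n * sxy ** 2 / (sxx * syy)


rng = random.Random(42)
homosk = [rng.gauss(0.0, 1.0) for _ in range(2000)]  # H0 true: no ARCH effects
arch, e_prev = [], 0.0
for _ in range(2000):                                # ARCH(1): alpha0 = 1, alpha1 = 0.5
    e_prev = rng.gauss(0.0, 1.0) * (1.0 + 0.5 * e_prev ** 2) ** 0.5
    arch.append(e_prev)

for name, series in [("i.i.d.", homosk), ("ARCH(1)", arch)]:
    stat = engle_arch1_lm(series)
    print(f"{name}: TR^2 = {stat:.2f}, reject H0 at 5%: {stat > CHI2_1_CRIT_5PCT}")
```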

Section 2.7 of Hayashi’s textbook outlines a nonparametric test of heteroskedasticity developed by White (1980). This test relies on the assumption of i.i.d. regressors, so it is not particularly useful in time series settings.

The idea is that when regression disturbances are conditionally homoskedastic, there are (at least) two consistent estimators of the matrix $S = E(\varepsilon_t^2 x_t x_t')$ that appears in the asymptotic variance formula for the OLS estimator:

$$\hat S = \frac{1}{T}\sum_{t=1}^{T}\hat\varepsilon_t^2 x_t x_t'$$

and

$$s^2 S_{xx} = s^2\,\frac{1}{T}\sum_{t=1}^{T} x_t x_t', \qquad \text{where } s^2 = SSR/T.$$
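The two estimators of $S$ can be computed side by side. This minimal sketch uses plain Python, with lists of lists as matrices. The function names are hypothetical. `X` holds the regressor vectors $x_t$ row by row, and `resid` holds the OLS residuals, which are taken as given. The example residuals are made up: they are orthogonal to `X`, as OLS residuals are, and every $\hat\varepsilon_t^2$ is equal, so the two estimators coincide exactly.

```python
def avg_weighted_outer(X, weights):
    """(1/T) * sum_t w_t * x_t x_t' for the rows x_t of X."""
    T, K = len(X), len(X[0])
    return [[sum(w * x[i] * x[j] for x, w in zip(X, weights)) / T
             for j in range(K)] for i in range(K)]


def s_hat(X, resid):
    """Robust estimator: (1/T) * sum_t e_t^2 x_t x_t'."""
    return avg_weighted_outer(X, [e ** 2 for e in resid])


def s2_sxx(X, resid):
    """Homoskedasticity-based estimator: s^2 * S_xx with s^2 = SSR / T."""
    T = len(resid)
    s2 = sum(e ** 2 for e in resid) / T
    sxx = avg_weighted_outer(X, [1.0] * T)
    return [[s2 * v for v in row] for row in sxx]


# Constant plus one regressor; residuals of equal magnitude (illustrative values).
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]
resid = [2.0, -2.0, -2.0, 2.0]
A, B = s_hat(X, resid), s2_sxx(X, resid)
print(A)  # [[4.0, 6.0], [6.0, 14.0]]
print(B)  # [[4.0, 6.0], [6.0, 14.0]]
```

When the $\hat\varepsilon_t^2$ vary systematically with $x_t$, the two matrices drift apart. That gap is what White's test measures.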

So, if the disturbances are homoskedastic, the difference between these two estimators should become “small” as the sample size increases. In fact, the difference converges in probability to zero when the disturbances are homoskedastic. White constructed a statistic based on this difference that converges in distribution to a $\chi^2$ random variable if the disturbances are conditionally homoskedastic.
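White's statistic is usually computed in an equivalent regression form. Regress $\hat\varepsilon_t^2$ on a constant and the distinct elements of $x_t x_t'$, then compare $TR^2$ with a $\chi^2$ critical value whose degrees of freedom equal the number of non-constant auxiliary regressors. The sketch below assumes a model with a constant and a single regressor $x_t$. The auxiliary regressors are then $1, x_t, x_t^2$, and the reference distribution is $\chi^2(2)$. The data are simulated with i.i.d. regressors, and every function name is hypothetical.

```python
import random

CHI2_2_CRIT_5PCT = 5.991  # 95th percentile of chi2(2)


def solve(A, b):
    """Solve the small square system A z = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][k] * z[k] for k in range(r + 1, n))) / M[r][r]
    return z


def r_squared(y, X):
    """Centered R^2 from OLS of y on the columns of X (X includes a constant)."""
    K = len(X[0])
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(K)] for i in range(K)]
    Xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(K)]
    beta = solve(XtX, Xty)
    ybar = sum(y) / len(y)
    ssr = sum((yi - sum(b * v for b, v in zip(beta, row))) ** 2 for yi, row in zip(y, X))
    sst = sum((yi - ybar) ** 2 for yi in y)
    return 1.0 - ssr / sst


def ols_resid(y, x):
    """Residuals from OLS of y on a constant and a single regressor x."""
    n = len(y)
    mx, my = sum(x) / n, sum(y) / n
    b1 = sum((a - mx) * (c - my) for a, c in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    b0 = my - b1 * mx
    return [c - b0 - b1 * a for a, c in zip(x, y)]


def white_stat(x, resid):
    """TR^2 from regressing e_t^2 on (1, x_t, x_t^2); about chi2(2) under homoskedasticity."""
    return len(resid) * r_squared([e ** 2 for e in resid], [[1.0, xt, xt ** 2] for xt in x])


rng = random.Random(0)
x = [rng.uniform(0.0, 3.0) for _ in range(1000)]
y_hom = [1.0 + 2.0 * xt + rng.gauss(0.0, 1.0) for xt in x]       # constant variance
y_het = [1.0 + 2.0 * xt + xt * rng.gauss(0.0, 1.0) for xt in x]  # variance grows with x^2
w_hom = white_stat(x, ols_resid(y_hom, x))
w_het = white_stat(x, ols_resid(y_het, x))
print(f"homoskedastic: {w_hom:.2f}, heteroskedastic: {w_het:.2f}, 5% critical value: {CHI2_2_CRIT_5PCT}")
```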

Testing for Serial Correlation

Both parametric and nonparametric tests are widely used in time series to test for serial correlation in the regression disturbances. The next major section of the course will be concerned with “time series models”, and one of their applications will be to parameterize serial correlation in regression models. That will allow us to test for serial correlation and, if it appears to be present, to apply the FGLS estimator and test procedures.

Our focus today will be on nonparametric tests. The advantage of these tests is that they do not require us to formulate a specific model of serial correlation. The disadvantages are that 1) they rely on the assumption of conditionally homoskedastic disturbances and 2) if we find evidence of serial correlation, then what do we do?

Note: nonparametric tests for serial correlation in the presence of conditional heteroskedasticity do exist, as do nonparametric adjustment procedures that account for potential serial correlation in regression models. (HAC robust testing; HAC robust standard errors.)

Let’s begin by assuming that $\{z_t\}$ is a stationary and ergodic process with finite variance (i.e., it is covariance stationary, too). Then the $j$-th autocovariance of the process is

$$\operatorname{cov}(z_t, z_{t-j}) = E[(z_t - \mu_z)(z_{t-j} - \mu_z)] = \gamma_j$$

for all $t$ and $j$, where $\mu_z = E(z_t)$.

The sample $j$-th autocovariance is defined by

$$\hat\gamma_j = \frac{1}{T}\sum_{t=j+1}^{T}(z_t - \bar z_T)(z_{t-j} - \bar z_T),$$

where $\bar z_T$ is the sample mean of $z_1, \ldots, z_T$.

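Corresponding to these are the $j$-th autocorrelations and the sample $j$-th autocorrelations:

$$\rho_j \equiv \operatorname{corr}(z_t, z_{t-j}) = \frac{\operatorname{cov}(z_t, z_{t-j})}{\operatorname{var}(z_t)} = \frac{\gamma_j}{\gamma_0}$$

and

$$\hat\rho_j = \frac{\hat\gamma_j}{\hat\gamma_0}.$$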
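The sample autocovariance and autocorrelation translate directly into code. This is a minimal sketch with hypothetical function names. Python indexes from 0, so the sum over $t = j+1, \ldots, T$ becomes `range(j, T)`. The divisor is $T$, not $T - j$, as in the definition.

```python
def sample_autocov(z, j):
    """gamma_hat_j = (1/T) * sum_{t=j+1}^{T} (z_t - zbar_T)(z_{t-j} - zbar_T), divisor T."""
    T = len(z)
    zbar = sum(z) / T
    # Python index t runs over j, ..., T-1, i.e. t = j+1, ..., T in 1-based notation
    return sum((z[t] - zbar) * (z[t - j] - zbar) for t in range(j, T)) / T


def sample_autocorr(z, j):
    """rho_hat_j = gamma_hat_j / gamma_hat_0."""
    return sample_autocov(z, j) / sample_autocov(z, 0)


z = [1.0, 2.0, 3.0, 4.0]
print(sample_autocov(z, 0), sample_autocov(z, 1), sample_autocorr(z, 1))  # 1.25 0.3125 0.25
```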

We have already claimed as a fact that, under the assumption that $z_t$ is a stationary and ergodic process, the sample autocovariances and autocorrelations are consistent estimators of the population autocovariances and autocorrelations. So, if $z_t$ is a serially uncorrelated process, the sample autocorrelations will converge almost surely to zero for all $j > 0$. (Note: since the autocovariance and autocorrelation functions are symmetric around 0, we only have to consider $j > 0$.)

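Assume that $\{z_t - \mu_z\}$ is a conditionally homoskedastic m.d.s. That is,

$$z_t = \mu_z + \varepsilon_t,$$

where $E(\varepsilon_t \mid \varepsilon_{t-1}, \ldots) = 0$ and $E(\varepsilon_t^2 \mid \varepsilon_{t-1}, \ldots) = \sigma^2$ for all $t$.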

Then, for any positive integer $p$,

$$\sqrt{T}\,\hat\rho \xrightarrow{d} N(0, I_p), \qquad \hat\rho = [\hat\rho_1 \ \ldots\ \hat\rho_p]',$$

and, since uncorrelated jointly normally distributed random variables are independent, $\{\sqrt{T}\,\hat\rho_j\}$ is asymptotically an i.i.d. $N(0,1)$ sequence.
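A small Monte Carlo experiment can illustrate this result for $j = 1$. The sketch below assumes i.i.d. standard normal $z_t$, one particular homoskedastic m.d.s. It uses the arbitrary choices $T = 200$ and 2000 replications, recording $\sqrt{T}\,\hat\rho_1$ in each replication. The mean and variance of these draws should be roughly 0 and 1, and about 5% of the draws should exceed 1.96 in absolute value. The function names are hypothetical.

```python
import random
import statistics


def sample_autocorr(z, j):
    """rho_hat_j = gamma_hat_j / gamma_hat_0 (divisor T in both autocovariances)."""
    T = len(z)
    zbar = sum(z) / T

    def gamma(k):
        return sum((z[t] - zbar) * (z[t - k] - zbar) for t in range(k, T)) / T

    return gamma(j) / gamma(0)


def simulate_scaled_rho(T=200, reps=2000, j=1, seed=0):
    """Monte Carlo draws of sqrt(T) * rho_hat_j when z_t is i.i.d. N(0, 1)."""
    rng = random.Random(seed)
    return [T ** 0.5 * sample_autocorr([rng.gauss(0.0, 1.0) for _ in range(T)], j)
            for _ in range(reps)]


draws = simulate_scaled_rho()
reject_rate = sum(abs(d) > 1.96 for d in draws) / len(draws)
print(f"mean {statistics.mean(draws):.3f}, variance {statistics.pvariance(draws):.3f}, "
      f"share |draw| > 1.96: {reject_rate:.3f}")
```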

To test for first-order serial correlation ($H_0\!: \rho_1 = 0$ vs. $H_A\!: \rho_1 \neq 0$), use

$$\frac{\hat\rho_1}{1/\sqrt{T}} = \sqrt{T}\,\hat\rho_1 \xrightarrow{d} N(0,1).$$

To test for $j$-th order serial correlation ($H_0\!: \rho_j = 0$ vs. $H_A\!: \rho_j \neq 0$), use

$$\frac{\hat\rho_j}{1/\sqrt{T}} = \sqrt{T}\,\hat\rho_j \xrightarrow{d} N(0,1).$$
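As a sketch of the single-lag test, the function below returns $\sqrt{T}\,\hat\rho_j$ and the two-sided asymptotic $p$-value $P(|N(0,1)| > |\sqrt{T}\,\hat\rho_j|)$. The $p$-value is computed with `math.erfc` from the Python standard library, using $P(|N(0,1)| > a) = \operatorname{erfc}(a/\sqrt{2})$. The function names are hypothetical. The four-point series is there only so the arithmetic can be checked by hand, since the normal approximation is meant for long series.

```python
import math


def sample_autocorr(z, j):
    """rho_hat_j = gamma_hat_j / gamma_hat_0 (divisor T in both autocovariances)."""
    T = len(z)
    zbar = sum(z) / T

    def gamma(k):
        return sum((z[t] - zbar) * (z[t - k] - zbar) for t in range(k, T)) / T

    return gamma(j) / gamma(0)


def autocorr_z_test(z, j):
    """sqrt(T) * rho_hat_j and its two-sided asymptotic p-value from N(0, 1)."""
    stat = math.sqrt(len(z)) * sample_autocorr(z, j)
    p_value = math.erfc(abs(stat) / math.sqrt(2.0))  # P(|N(0,1)| > |stat|)
    return stat, p_value


stat, p_value = autocorr_z_test([1.0, 2.0, 3.0, 4.0], 1)
print(stat, round(p_value, 4))
```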

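To test $H_0\!: \rho_1 = 0, \rho_2 = 0, \ldots, \rho_p = 0$ vs. $H_A\!: \rho_1 \neq 0$ or $\rho_2 \neq 0$ or … or $\rho_p \neq 0$, use

$$Q = T\sum_{j=1}^{p}\hat\rho_j^2 \xrightarrow{d} \chi^2(p),$$

which is the Box-Pierce $Q$ statistic. (This result follows from the fact that the $\sqrt{T}\,\hat\rho_j$’s are asymptotically i.i.d. $N(0,1)$.)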
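The Box-Pierce test can be sketched the same way. The Python standard library has no chi-square distribution, so the helper `chi2_sf` computes the upper-tail probability from the standard power series for the regularized lower incomplete gamma function. This helper is an assumption of the sketch, adequate for moderate values of the statistic; in practice one would use a statistics library. All function names are hypothetical.

```python
import math
import random


def sample_autocorr(z, j):
    """rho_hat_j = gamma_hat_j / gamma_hat_0 (divisor T in both autocovariances)."""
    T = len(z)
    zbar = sum(z) / T

    def gamma(k):
        return sum((z[t] - zbar) * (z[t - k] - zbar) for t in range(k, T)) / T

    return gamma(j) / gamma(0)


def chi2_sf(x, k):
    """Upper tail P(chi2(k) > x) = 1 - P(k/2, x/2), P = regularized lower incomplete gamma.

    Series: lower_gamma(a, y) = y^a e^{-y} sum_{n>=0} y^n / (a (a+1) ... (a+n)).
    """
    if x <= 0.0:
        return 1.0
    a, y = k / 2.0, x / 2.0
    term = total = 1.0 / a
    n = 1
    while term > 1e-16 * total:
        term *= y / (a + n)
        total += term
        n += 1
    lower = math.exp(a * math.log(y) - y - math.lgamma(a) + math.log(total))
    return max(0.0, 1.0 - lower)


def box_pierce(z, p):
    """Box-Pierce Q = T * sum_{j=1}^{p} rho_hat_j^2 and its asymptotic chi2(p) p-value."""
    q = len(z) * sum(sample_autocorr(z, j) ** 2 for j in range(1, p + 1))
    return q, chi2_sf(q, p)


rng = random.Random(1)
z = [rng.gauss(0.0, 1.0) for _ in range(500)]  # serially uncorrelated by construction
q, p_value = box_pierce(z, p=10)
print(f"Q = {q:.2f}, p-value = {p_value:.3f}")
```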

The Ljung-Box $Q$ statistic,

$$Q_{LB} = T(T+2)\sum_{j=1}^{p}\frac{\hat\rho_j^2}{T-j},$$

is asymptotically equivalent to the B-P $Q$ statistic (i.e., $Q - Q_{LB} \xrightarrow{p} 0$ and $Q_{LB} \xrightarrow{d} \chi^2(p)$), but seems to work a little better in practice.
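A minimal sketch comparing the two statistics on simulated white noise follows. The series lengths 30 and 3000 and the choice $p = 5$ are arbitrary illustrations, and the function names are hypothetical.

```python
import random


def sample_autocorr(z, j):
    """rho_hat_j = gamma_hat_j / gamma_hat_0 (divisor T in both autocovariances)."""
    T = len(z)
    zbar = sum(z) / T

    def gamma(k):
        return sum((z[t] - zbar) * (z[t - k] - zbar) for t in range(k, T)) / T

    return gamma(j) / gamma(0)


def box_pierce_q(z, p):
    """Q = T * sum_{j=1}^{p} rho_hat_j^2."""
    return len(z) * sum(sample_autocorr(z, j) ** 2 for j in range(1, p + 1))


def ljung_box_q(z, p):
    """Q_LB = T (T + 2) * sum_{j=1}^{p} rho_hat_j^2 / (T - j)."""
    T = len(z)
    return T * (T + 2) * sum(sample_autocorr(z, j) ** 2 / (T - j) for j in range(1, p + 1))


rng = random.Random(2)
for T in (30, 3000):
    z = [rng.gauss(0.0, 1.0) for _ in range(T)]
    print(T, round(box_pierce_q(z, 5), 3), round(ljung_box_q(z, 5), 3))
```

Each weight $(T+2)/(T-j)$ exceeds one, so $Q_{LB} > Q$ in every sample. The gap shrinks as $T$ grows, in line with $Q - Q_{LB} \xrightarrow{p} 0$.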

These tests can be applied to any stationary time series to test for serial correlation.

We are interested in applying them to test for serial correlation in regression disturbances. These disturbances are unobservable! It would seem natural to consider whether we can apply these tests using the residuals ($\hat\varepsilon$’s) from the fitted regression in place of the unobservable regression disturbances ($\varepsilon$’s).

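Let

$$\tilde\rho_j = \tilde\gamma_j/\tilde\gamma_0, \qquad \text{where } \tilde\gamma_j = \frac{1}{T}\sum_{t=j+1}^{T}\varepsilon_t\varepsilon_{t-j}, \quad j = 0, 1, 2, \ldots$$

and

$$\hat\rho_j = \hat\gamma_j/\hat\gamma_0, \qquad \text{where } \hat\gamma_j = \frac{1}{T}\sum_{t=j+1}^{T}\hat\varepsilon_t\hat\varepsilon_{t-j}, \quad j = 0, 1, 2, \ldots$$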
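With regression residuals, the sample autocorrelations are computed without subtracting a sample mean, as in the definitions of $\tilde\gamma_j$ and $\hat\gamma_j$. When the regression includes a constant, the OLS residuals have mean zero anyway. This minimal sketch assumes a regression of `y` on a constant and one regressor `x`, generated independently of i.i.d. errors so that the regressor is strictly exogenous. The helper names are hypothetical.

```python
import random


def ols_resid(y, x):
    """Residuals from OLS of y on a constant and one regressor x."""
    n = len(y)
    mx, my = sum(x) / n, sum(y) / n
    b1 = sum((a - mx) * (c - my) for a, c in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    b0 = my - b1 * mx
    return [c - b0 - b1 * a for a, c in zip(x, y)]


def resid_autocorr(e, j):
    """rho_hat_j = gamma_hat_j / gamma_hat_0, gamma_hat_j = (1/T) sum_{t=j+1}^{T} e_t e_{t-j}."""
    T = len(e)

    def gamma(k):
        # no demeaning: residuals play the role of the mean-zero disturbances
        return sum(e[t] * e[t - k] for t in range(k, T)) / T

    return gamma(j) / gamma(0)


rng = random.Random(5)
x = [rng.gauss(0.0, 1.0) for _ in range(400)]
y = [0.5 + 1.5 * xt + rng.gauss(0.0, 1.0) for xt in x]  # x independent of the i.i.d. errors
e = ols_resid(y, x)
print([round(resid_autocorr(e, j), 3) for j in range(1, 6)])
```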

Recall that we have already modified assumptions A.1-A.5 to restrict the disturbances to be conditionally homoskedastic. If, in addition, the regressors are strictly exogenous, then

$$\sqrt{T}\,\hat\rho \xrightarrow{d} N(0, I_p), \qquad \hat\rho = [\hat\rho_1 \ \ldots\ \hat\rho_p]',$$

i.e., the limiting distributions of the $t$ and $Q$ statistics do not depend on whether we construct the sample autocorrelations from the disturbances (the $\tilde\rho_j$’s) or from the residuals (the $\hat\rho_j$’s).

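Suppose the regressors are predetermined but not strictly exogenous. In particular, suppose

$$E(\varepsilon_t \mid \varepsilon_{t-1}, \varepsilon_{t-2}, \ldots, x_t, x_{t-1}, \ldots) = 0$$

and

$$E(\varepsilon_t^2 \mid \varepsilon_{t-1}, \varepsilon_{t-2}, \ldots, x_t, x_{t-1}, \ldots) = \sigma^2$$

for all $t$.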

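In this case,

$$\sqrt{T}\,\hat\rho \xrightarrow{d} N(0, I_p - \Phi), \qquad \hat\rho = [\hat\rho_1 \ \ldots\ \hat\rho_p]',$$

where $\Phi$ is a $p \times p$ matrix whose $ij$-th element is

$$\Phi_{ij} = \frac{E(x_t\varepsilon_{t-i})'\,E(x_t x_t')^{-1}\,E(x_t\varepsilon_{t-j})}{\sigma^2}.$$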

This leads to the modified Box-Pierce $Q$ statistic

$$Q_{MBP} = T\,\hat\rho'(I_p - \hat\Phi)^{-1}\hat\rho,$$

where $\hat\Phi$ is the consistent estimator of $\Phi$ given by equation (2.10.19) in Hayashi. Under the null hypothesis of no serial correlation,

$$Q_{MBP} \xrightarrow{d} \chi^2(p).$$
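The modified Box-Pierce statistic can be sketched in plain Python. This is an illustration under stated assumptions, not Hayashi's code. It builds $\hat\Phi$ as the sample analogue of the population formula for $\Phi$:

- $E(x_t\varepsilon_{t-j})$ is replaced by $\hat\mu_j = \frac{1}{T}\sum_{t=j+1}^{T} x_t\hat\varepsilon_{t-j}$,
- $E(x_t x_t')$ is replaced by $S_{xx}$,
- $\sigma^2$ is replaced by $s^2 = SSR/T$.

The exact form should be checked against equation (2.10.19). The example regresses a simulated AR(1) series (coefficient 0.9) on a constant and its own lag. That regressor is predetermined but not strictly exogenous. All function names are hypothetical.

```python
import random


def solve(A, b):
    """Solve the small square system A z = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][k] * z[k] for k in range(r + 1, n))) / M[r][r]
    return z


def ols(y, X):
    """OLS coefficients and residuals for y on the rows of X."""
    K = len(X[0])
    XtX = [[sum(r[i] * r[j] for r in X) for j in range(K)] for i in range(K)]
    Xty = [sum(r[i] * yi for r, yi in zip(X, y)) for i in range(K)]
    beta = solve(XtX, Xty)
    resid = [yi - sum(b * v for b, v in zip(beta, r)) for yi, r in zip(y, X)]
    return beta, resid


def modified_box_pierce(X, e, p):
    """Q_MBP = T * rho' (I_p - Phi_hat)^{-1} rho for OLS residuals e and regressor rows X."""
    T, K = len(e), len(X[0])
    s2 = sum(v * v for v in e) / T  # SSR / T, which also equals gamma_hat_0
    rho = [sum(e[t] * e[t - j] for t in range(j, T)) / T / s2 for j in range(1, p + 1)]
    sxx = [[sum(r[a] * r[b] for r in X) / T for b in range(K)] for a in range(K)]
    # mu_hat_j = (1/T) sum_{t=j+1}^{T} x_t e_{t-j}, one K-vector per lag j
    mu = [[sum(X[t][a] * e[t - j] for t in range(j, T)) / T for a in range(K)]
          for j in range(1, p + 1)]
    sinv_mu = [solve(sxx, m) for m in mu]  # S_xx^{-1} mu_hat_j
    phi = [[sum(mu[i][a] * sinv_mu[k][a] for a in range(K)) / s2 for k in range(p)]
           for i in range(p)]
    i_minus_phi = [[(1.0 if i == k else 0.0) - phi[i][k] for k in range(p)] for i in range(p)]
    w = solve(i_minus_phi, rho)  # (I_p - Phi_hat)^{-1} rho_hat
    return T * sum(r * wi for r, wi in zip(rho, w))


rng = random.Random(11)
T, phi_true = 2000, 0.9
rows, ys, ylag = [], [], 0.0
for _ in range(T):
    yt = phi_true * ylag + rng.gauss(0.0, 1.0)
    rows.append([1.0, ylag])  # constant and y_{t-1}: predetermined, not strictly exogenous
    ys.append(yt)
    ylag = yt
beta, e = ols(ys, rows)
q_mbp = modified_box_pierce(rows, e, p=2)
q_bp = T * sum((sum(e[t] * e[t - j] for t in range(j, T)) / sum(v * v for v in e)) ** 2
               for j in range(1, 3))
print(f"beta = {beta}, Q_MBP = {q_mbp:.2f}, unmodified Q = {q_bp:.2f}")
```

In this sketch $\hat\Phi$ is symmetric and positive semidefinite by construction. Whenever its eigenvalues are below one, $Q_{MBP} \ge Q$. This is the sense in which the unmodified statistic, computed from residuals of a regression with predetermined regressors, is too small.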