Stats Chapter I


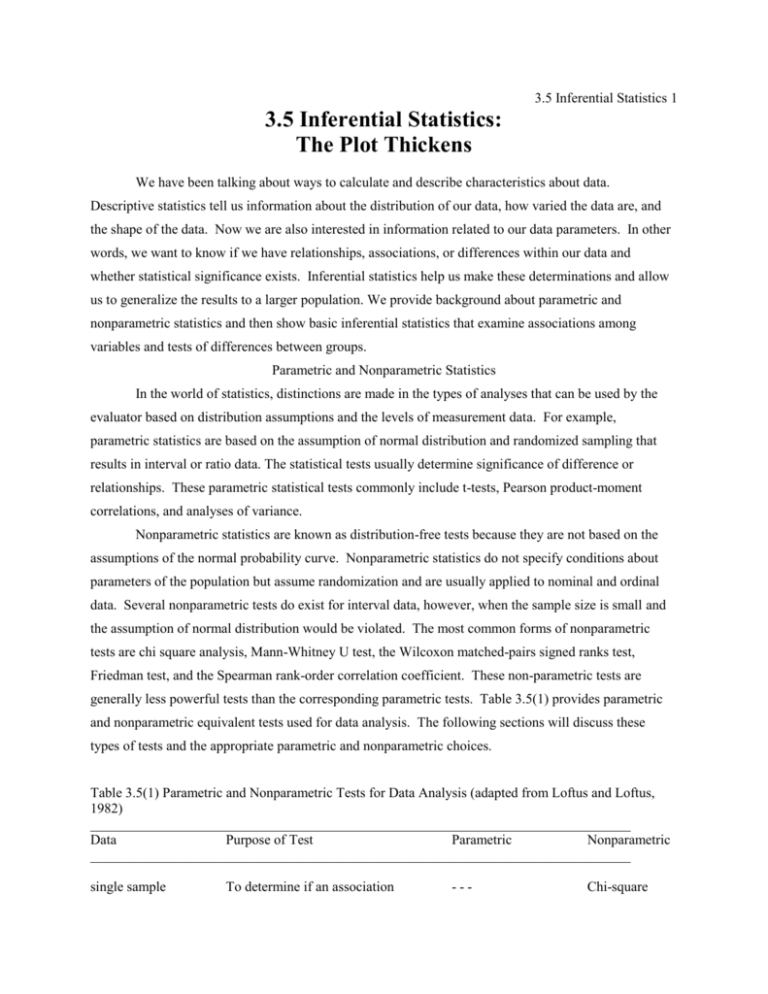

3.5 Inferential Statistics: The Plot Thickens

We have been talking about ways to calculate and describe characteristics of data. Descriptive statistics tell us about the distribution of our data, how varied the data are, and the shape of the data. Now we are also interested in information related to our data parameters. In other words, we want to know whether relationships, associations, or differences exist within our data and whether they are statistically significant. Inferential statistics help us make these determinations and allow us to generalize the results to a larger population. We provide background about parametric and nonparametric statistics and then show basic inferential statistics that examine associations among variables and tests of differences between groups.

Parametric and Nonparametric Statistics

In the world of statistics, distinctions are made in the types of analyses that the evaluator can use, based on distribution assumptions and the level of measurement of the data. For example, parametric statistics are based on the assumptions of a normal distribution and randomized sampling, and they call for interval or ratio data. The statistical tests usually determine the significance of differences or relationships. These parametric statistical tests commonly include t-tests, Pearson product-moment correlations, and analyses of variance.

Nonparametric statistics are known as distribution-free tests because they are not based on the assumptions of the normal probability curve. Nonparametric statistics do not specify conditions about parameters of the population but assume randomization and are usually applied to nominal and ordinal data. Several nonparametric tests do exist for interval data, however, for use when the sample size is small and the assumption of normal distribution would be violated.
The most common forms of nonparametric tests are the chi-square analysis, the Mann-Whitney U test, the Wilcoxon matched-pairs signed ranks test, the Friedman test, and the Spearman rank-order correlation coefficient. These nonparametric tests are generally less powerful than the corresponding parametric tests when the parametric assumptions are met. Table 3.5(1) provides parametric and nonparametric equivalent tests used for data analysis. The following sections will discuss these types of tests and the appropriate parametric and nonparametric choices.

Table 3.5(1) Parametric and Nonparametric Tests for Data Analysis (adapted from Loftus and Loftus, 1982)
_____________________________________________________________________________________________________________________
Data                     Purpose of Test                         Parametric                Nonparametric
_____________________________________________________________________________________________________________________
Single sample            To determine if an association exists   ---                       Chi-square
                         between two nominal variables

Single sample            To determine if the sample mean or      One-sample t-test         Sign test
                         median differs from some hypothetical
                         value

Two samples,             To determine if the populations of two  Independent t-test        Mann-Whitney U test
between subjects         independent samples have the same mean
                         or median

Two conditions,          To determine if the populations of two  Matched t-test            Sign test;
within subjects          samples have the same mean or median                              Wilcoxon signed ranks test

More than two            To determine if the populations of more One-way ANOVA             Kruskal-Wallis
conditions, between      than two independent samples have the
subjects                 same mean or median

More than two            To determine if the populations of more Repeated measures         Friedman Analysis
conditions, within       than two samples have the same mean or  ANOVA                     of Variance
subjects                 median

Set of items with two    To determine if the two measures are    Pearson correlation       Spearman correlation
measures on each item    associated
_____________________________________________________________________________________________________________________

Associations Among Variables

Chi Square (Crosstabs)

One of
the most common statistical tests of association is the chi-square test. For this test to be used, data must be discrete and at the nominal or ordinal level. During the analysis, the frequency data are arranged in tables that compare the observed distribution (your data) with the expected distribution (what you would expect to find if no difference existed among the values of a given variable or variables). In most computerized statistical packages, this chi-square procedure is called "crosstabs" or "tables." This statistical procedure is also relatively easy to calculate by hand. The basic chi-square formula is:

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

where:
$\chi^2$ = chi square
$O$ = observed frequency
$E$ = expected frequency
$\sum$ = sum of

Spearman and Pearson Correlations

Sometimes we want to determine relationships between scores. As an evaluator, you have to determine whether the data are appropriate for parametric or nonparametric procedures. Remember, this determination is based upon the level of data and the sample size. If the sample is small or if the data are ordinal (rank-order) data, then the most appropriate choice is the nonparametric Spearman rank-order correlation statistic. If the data are thought to be interval and normally distributed, then the basic test for linear (straight line) relationships is the parametric Pearson product-moment correlation technique.

The results for Spearman or Pearson correlations can fall between +1 and -1. The parametric Pearson and the nonparametric Spearman results are interpreted in the same way even though a different statistical procedure is used. You might think of the data as points plotted on a graph with an x-axis and a y-axis. A perfect positive correlation (r = +1.0) would mean that every point falls on a straight line sloping upward (at 45 degrees when both variables are plotted on the same scale). A perfect negative correlation (r = -1.0) would mean that every point falls on a straight line sloping downward (see Figure 3.5(2)). No correlation at all would be r = 0, with the best-fitting line horizontal.
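The chi-square formula and the two correlation coefficients described above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a replacement for a statistical package: the function names and all of the data are hypothetical, and Spearman's coefficient is computed here as Pearson's r applied to the ranks, with tied scores sharing an average rank.

```python
import math


def chi_square(observed, expected):
    """Chi-square statistic: sum of (O - E)^2 / E over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


def pearson_r(x, y):
    """Pearson product-moment correlation between two lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / math.sqrt(ss_x * ss_y)


def average_ranks(values):
    """Rank scores from 1 (lowest); tied scores share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # average of positions start+1 .. end+1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman rank-order correlation: Pearson r computed on the ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))


# Hypothetical data, invented for illustration only.
observed = [30, 20, 10]  # e.g., participants choosing each of three programs
expected = [20, 20, 20]  # equal preference if no difference existed
print(chi_square(observed, expected))

hours = [1, 2, 3, 4, 5]
scores = [2, 4, 6, 8, 10]    # exactly linear in hours
squares = [1, 4, 9, 16, 25]  # always increasing, but not linear
print(pearson_r(hours, scores))
print(pearson_r(hours, squares))
print(spearman_rho(hours, squares))
```

In this sketch the linear pair gives a Pearson r of 1 even though the x and y values are not identical, while the curved pair gives a Pearson r just below 1 and a Spearman coefficient of 1, because the rank orders match exactly. The chi-square value would still need to be compared with a chi-square table (or run through a statistical package) to obtain a probability.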
A correlation approaching +1 means that as one score increases, the other score increases, and the two are said to be positively correlated. A correlation approaching -1 means that as one score increases, the other decreases, so the two are negatively correlated. A correlation of 0 means no linear relationship exists. In other words, for ranked data a perfect positive correlation of 1 occurs only if the x and y scores fall in exactly the same rank order, and a perfect negative correlation of -1 only if the order of the x scores is the exact reverse of the order of the y scores (Hale, 1990).

Insert Figure 3.5(2) about here

For example, if we found that the correlation between age and number of times someone swam at a community pool was r = -.63, we could conclude that older individuals were less likely than younger people to swim at a community pool. Or suppose we wanted to see if a relationship existed between body image scores of adolescent girls and self-esteem scores. If we found r = .89, then we would say a positive relationship was found (i.e., as positive body image scores increased, so did positive self-esteem scores). If r = -.89, then we would have a negative relationship (i.e., as body image scores increased, self-esteem scores decreased). If r = .10, we would say little to no relationship was evident between body image and self-esteem scores. If the result was r = .34, you might say a weak positive relationship exists between body image and self-esteem scores. Anything above r = .4 or below r = -.4 might be considered a moderate relationship between two variables. Of course, the closer the correlation is to r = +1 or -1, the stronger the relationship.

Correlations can also be used for determining reliability, as was discussed in Chapter 2.3 on trustworthiness. Let's say that we administered a questionnaire about nutrition habits to the adults in an aerobics class for the purpose of establishing the reliability of the instrument. A week later we gave them the same questionnaire again.
All of the individuals were ranked based upon their scores on each test, and the two sets of ranks were then compared through the Spearman procedure. If we found a result of r = .94, we would feel comfortable with the correlation, referred to as the reliability coefficient of the instrument, because this finding would indicate that the respondents' scores were consistent from test to retest. If r = .36, then we would believe the instrument to have weak reliability because the scores differed from the first test to the retest.

Two cautions should be noted in relation to correlations. First, you cannot assume that a correlation implies causation. These correlations should be used only to summarize the strength of a relationship. In the above example where r = .89, we can't say that improved body image causes increased self-esteem, because many other variables could be exerting an influence. We can, however, say that as body image increases, so does self-esteem, and vice versa. Correlations point to the fact that there is some relationship between two variables, whether it is negative, nonexistent, or positive. Second, most statistical analyses of correlations also include a probability (p-value). A correlation might be statistically significant as shown in the probability statistics, but the correlation coefficient (-1 to +1) gives much more information. The p-value suggests that a relationship exists, but one must examine the correlation statistic to determine the direction and strength of the relationship.

Tests of Differences Between and Among Groups

Sometimes, you may want to evaluate by comparing two or more groups through some type of bivariate or multivariate procedure. Again, it will be necessary to know the types of data you have (continuous or discrete), the levels of measurement of the data (nominal, ordinal, interval, ratio), and the dependent and independent variables of interest to analyze.
In parametric analyses, the statistical process for determining difference is usually accomplished by comparing the means of the groups. The most common parametric tests of differences between means are the t-tests and analysis of variance (ANOVA). In nonparametric analyses, you are usually checking the differences in rankings of your groups. For nonparametrics, the most common statistical procedures for testing differences are the Mann-Whitney U test, the Sign test, the Wilcoxon Signed Ranks test, Kruskal-Wallis, and the Friedman analysis of variance. While these parametric and nonparametric tests can be calculated by hand, they are fairly complicated and a bit tedious, so most evaluators rely on computerized statistical programs. Therefore, each test will be described in this section, but no hand calculations will be provided. For individuals interested in the manual calculations, any good statistics book will provide the formulas and necessary steps.

Parametric Choices for Determining Differences

T-tests

Two types of t-tests are available to the evaluator: the two-sample or independent t-test and the matched pairs or dependent t-test. The independent t-test, also called the two-sample t-test, is used to test the differences between the means of two groups. The result of the analysis is reported as a t value. It is important to remember that the two groups are mutually exclusive and not related to each other. For example, you may want to know if youth coaches who attended a workshop performed better on some aspect of teaching than those coaches who didn't attend. In this case, the teaching score is the dependent variable and group membership (attendees and non-attendees) is the independent variable. If the t statistic that results from the t-test has a probability below .05 (p < .05), then we conclude that the means of the two groups differ significantly.
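To make the independent t-test concrete, the sketch below computes the t value and its degrees of freedom for two independent groups using the pooled-variance form of the test, which assumes the two groups have similar variances. The function name and the teaching scores are hypothetical, invented only for illustration; a statistical package would also report the exact probability.

```python
import math
import statistics


def independent_t(group_a, group_b):
    """Pooled-variance t statistic and degrees of freedom for two independent groups."""
    n_a, n_b = len(group_a), len(group_b)
    mean_a, mean_b = statistics.mean(group_a), statistics.mean(group_b)
    var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)
    df = n_a + n_b - 2
    # Weighted average of the two sample variances
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / df
    # Standard error of the difference between the two means
    std_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / std_error, df


# Hypothetical teaching scores for coaches, invented for illustration only.
attended = [78, 82, 85, 88, 92]
did_not_attend = [70, 74, 77, 80, 84]

t_value, df = independent_t(attended, did_not_attend)
print(round(t_value, 3), df)
```

With these made-up scores, t is about 2.35 with 8 degrees of freedom. A standard t table gives 2.306 as the two-tailed critical value at the .05 level for 8 degrees of freedom, so this difference would just reach significance.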
We would then examine the means for each group (those who attended as one group and those who did not) and determine which set of coaches had the higher teaching scores.

The dependent t-test, often called the matched pairs t-test, is used to show how a group differs between two points in time. The matched t-test is used if the two samples are related. This test is used if the same group was tested twice or when two separate groups are matched on some variable. For example, in the above situation where you were offering a coaching clinic, you might want to see if participants learned anything at the clinic. You would test a construct such as knowledge of the game before the workshop and then again after the training to see if any difference existed in knowledge gained by the coaches who attended the clinic. The same coaches would fill out the questionnaire as a pretest and a posttest. If a statistical difference of p < .05 occurs, you can infer that the workshop made a difference in the knowledge level of the coaches. If the t-test was not significant (p > .05), you would have no evidence that the clinic changed the knowledge level of the coaches.

Analysis of Variance (ANOVA)

Analysis of variance is used to determine differences among means when more than two groups are examined. It is the same concept as the independent t-test except that you have more than two groups. If there is only one independent variable (usually nominal or ordinal level data) consisting of more than two values, then the procedure is called a one-way ANOVA. If there are two independent variables, you would use a two-way ANOVA. The dependent variable is the measured variable and is usually interval or ratio level data. Both one-way and two-way ANOVAs are used to determine if three or more sample means are different from one another (Hale, 1990). Analysis of variance results are reported as an F statistic.
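The matched pairs t value and the one-way ANOVA F statistic can be sketched in the same way. The code below is only an illustration under stated assumptions: the function names and all scores are hypothetical, the paired test works on the posttest-minus-pretest differences, and the F statistic is the ratio of the between-groups mean square to the within-groups mean square.

```python
import math
import statistics


def paired_t(before, after):
    """Matched pairs t statistic and degrees of freedom (after minus before)."""
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    std_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / std_error, n - 1


def one_way_anova_f(groups):
    """F statistic with between-groups and within-groups degrees of freedom."""
    all_scores = [score for group in groups for score in group]
    grand_mean = statistics.mean(all_scores)
    # Variation of the group means around the grand mean
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean
    ss_within = sum((score - statistics.mean(g)) ** 2 for g in groups for score in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within


# Hypothetical knowledge scores for five coaches, invented for illustration.
pretest = [10, 12, 9, 14, 11]
posttest = [13, 14, 10, 17, 13]
t_value, t_df = paired_t(pretest, posttest)
print(round(t_value, 2), t_df)

# Hypothetical scores for three independent groups.
three_groups = [[4, 5, 6], [6, 7, 8], [8, 9, 10]]
print(one_way_anova_f(three_groups))
```

As with the other tests, the t and F values would be checked against the appropriate table, or a statistical program would report the probability directly.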
For example, a therapeutic recreation specialist may want to know if inappropriate behaviors of psychiatric patients are affected by the type of therapy used (behavior modification, group counseling, or nondirective). In this example, the measured level of inappropriate behavior would be the dependent variable, while the independent variable would be the type of therapy, which is divided into three groups. If you found a statistically significant difference in the behavior of the patients (p < .05), you would examine the means for the three groups and determine which therapy was best. Additional statistical post-hoc tests, such as Bonferroni or Scheffé, may be used with ANOVA to ascertain which groups differ from one another.

Other types of parametric statistical tests are available for more complex analysis purposes. The t-tests and ANOVA described here are the parametric statistics most frequently used by beginning evaluators.

Nonparametric Choices for Determining Differences

For most of the common parametric statistics, a parallel nonparametric statistic exists. The use of these statistics depends on the level of measurement of the data and the sample, as is shown in Table 3.5(1). The most common nonparametric statistics include the Mann-Whitney U, Sign Test, Wilcoxon Signed Ranks Test, Kruskal-Wallis, and Friedman Analysis of Variance.

Mann-Whitney U Test

The Mann-Whitney U test is used to test for differences in rankings on some variable between two independent groups when the data are not scaled in intervals (Lundegren & Farrell, 1985). For example, you may want to analyze self-concept scores of high fit and low fit women who participated in a weight-training fitness program. You administer a nominal scale form where respondents rate characteristics as "like me" or "not like me." Thus, this test will let you determine the effects of this program on your participants by comparing the two groups and their self-concept scores.
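A rough sketch of how the Mann-Whitney U statistic is obtained may help show what "differences in rankings" means. All scores from both groups are ranked together, and U for a group is its rank sum minus the smallest rank sum that group could possibly have. The function names and the self-concept scores below are hypothetical and serve only as an illustration.

```python
def average_ranks(values):
    """Rank scores from 1 (lowest); tied scores share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # average of positions start+1 .. end+1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return (U for group A, U for group B); the smaller one is the test statistic."""
    combined = list(group_a) + list(group_b)
    ranks = average_ranks(combined)
    n_a, n_b = len(group_a), len(group_b)
    rank_sum_a = sum(ranks[:n_a])  # ranks belonging to group A
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return u_a, u_b


# Hypothetical self-concept scores, invented for illustration only.
high_fit = [12, 15, 18, 20]
low_fit = [8, 9, 11, 14]

u_high, u_low = mann_whitney_u(high_fit, low_fit)
print(u_high, u_low, min(u_high, u_low))
```

The smaller of the two U values is compared with a Mann-Whitney table for the two group sizes; a small U indicates that one group's scores rank consistently above the other's.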
This test is the nonparametric equivalent of the independent t-test.

The Sign Test

The Sign test is used when the independent variable is categorical and consists of two levels. The dependent variable is assumed to be continuous but can't be measured on a continuous scale, so a categorical scale is substituted (Hale, 1990). This test becomes the nonparametric equivalent of the dependent or matched t-test. The Sign test is only appropriate if the dependent variable is binary (i.e., takes only two different values). Suppose you want to know if switching from wood to fiber daggerboards on your Sunfish sailboats increases the likelihood of winning regattas. The Sign test will let you test this question by using the two daggerboards as the independent variable and the number of races won as the dependent variable.

Wilcoxon Signed Ranks Test

The Wilcoxon test is also a nonparametric alternative to the t-test and is used when the dependent variable has more than two values that are ranked. Positions in a race are a good example of this type of dependent variable. As in the Sign test, the independent variable is categorical and has two levels. For example, you might wish to know if a difference exists in the finish positions in a road race between runners who belong to a running club and those who do not. The results of this analysis indicate the difference in ranks on the dependent variable (their finish position) between the two related groups (the two types of runners).

Kruskal-Wallis

The Kruskal-Wallis test is the nonparametric equivalent of the one-way ANOVA. It is used when you have an independent variable with two or more values that you wish to compare on a dependent variable. You use this statistic to see if more than two independent groups have the same mean or median. Since this procedure is a nonparametric test, you would use the statistic when your sample size is small or you are not sure of the distribution.
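The three tests just described can be sketched briefly as well. This is an illustration under stated assumptions rather than a full implementation: the function names and data are hypothetical, the Sign test probability is the exact two-sided binomial probability with zero differences dropped, and the Wilcoxon and Kruskal-Wallis functions return only the test statistic (without a correction for ties), which would then be compared with a table.

```python
from math import comb


def average_ranks(values):
    """Rank scores from 1 (lowest); tied scores share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # average of positions start+1 .. end+1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def sign_test_p(differences):
    """Exact two-sided Sign test probability; zero differences are ignored."""
    plus = sum(1 for d in differences if d > 0)
    minus = sum(1 for d in differences if d < 0)
    n, k = plus + minus, min(plus, minus)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def wilcoxon_w(differences):
    """Wilcoxon signed ranks statistic: the smaller of the two signed rank sums."""
    nonzero = [d for d in differences if d != 0]
    ranks = average_ranks([abs(d) for d in nonzero])
    w_plus = sum(r for r, d in zip(ranks, nonzero) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, nonzero) if d < 0)
    return min(w_plus, w_minus)


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic for two or more independent groups."""
    pooled = [score for group in groups for score in group]
    ranks = average_ranks(pooled)
    n_total = len(pooled)
    total, start = 0.0, 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        total += rank_sum ** 2 / len(group)
        start += len(group)
    return 12 * total / (n_total * (n_total + 1)) - 3 * (n_total + 1)


# Hypothetical paired differences and group scores, invented for illustration.
print(sign_test_p([1, 2, 1, 3, -1, 2, 1, 4]))  # 7 pluses, 1 minus
print(wilcoxon_w([3, -1, 4, 2, 5]))
print(kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))
```

Notice that the Sign test uses only the direction of each difference, the Wilcoxon test also uses the size of each difference through its rank, and the Kruskal-Wallis test ranks all scores together before comparing the groups.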
An example of an application would be to compare the attitudes of counselors at a camp (the dependent variable) toward three different salary payment plans: weekly, twice a summer, or end of the summer (independent variable).

Friedman Analysis of Variance

The Friedman Analysis of Variance test is used for repeated measures analysis when you measure a subject two or more times. You must have a categorical independent variable with more than two values and a rank-order dependent variable that is measured more than twice (Hale, 1990). This test is the nonparametric equivalent of the repeated measures analysis of variance (two-way ANOVA). An example of a situation in which you might use this statistical procedure would be if you had ranked data from multiple judges. For example, suppose you were experimenting with four new turf grasses on your four soccer fields. You ask your five maintenance workers to rank the grasses on all four fields for durability. You could analyze these results with the Friedman procedure.

Making Decisions about Statistics

The decision model for choosing the appropriate type of statistical procedure is found in Figure 3.5(3). This model provides a logical progression of questions about your parametric and nonparametric data that will help you in your selection of appropriate statistics. Many other forms of statistical measures are possible, but the ones described here will provide you with the most common forms used in evaluations.

Insert Figure 3.5(3) about here
__________________

From Ideas to Reality

This chapter initially may seem complex and full of terms not familiar to you. We realize that statistics are often confusing and overwhelming to some people, but as you begin to understand what they mean, they can be extremely helpful.
We acknowledge that one short chapter will not make you an expert on these statistics, but we hope this chapter will at least show you some of the ways that we can gain valuable information by examining the relationships between and among variables. The more that you use statistical procedures, the more you will understand them. Thank goodness we have computers these days to save time and effort. Computers, however, do not absolve us from knowing what statistics to use to show associations or differences within our data. If you want more information than is offered in this short chapter, you may want to consult one of the many statistics books that exist.
__________________

Now that You have Studied this Chapter, You Should be Able to:
--Explain when parametric and nonparametric statistics should be used
--Given a situation with the levels of measurement data known, choose the correct statistical procedure to use
--Use the decision model for choosing appropriate statistical procedures to make decisions about a statistical problem

[Figure 3.5(2) Examples of Correlation Relationships. Two panels, "Positive Correlation" (+1 correlation) and "Negative Correlation" (-1 correlation), each with both axes running from 0 to 40.]

[Figure 3.5(3) A Statistical Decision Tree (adapted from Hall, 1990). A yes/no flowchart whose questions cover the level of measurement of the dependent variable, whether it is binary, whether it is measured more than once or more than twice, the number of independent variables, whether the samples are related, whether the population standard deviation is known, and whether you are comparing means or describing the relation between two variables. Its end points are the one-way and two-way chi-square, the Sign test, the Wilcoxon test, the Friedman nonparametric analysis of variance, the one-sample z-test, the one-sample t-test, the dependent and independent t-tests, the one-way and two-way ANOVA, and the Pearson and Spearman correlation coefficients.]