Chapter 9

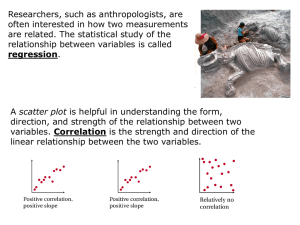

Chapter 9: Notes

Statistical Inferences Concerning One/Two Correlation Coefficients

Statistical Tests Involving a Single Correlation Coefficient

A sample is drawn from a population, and the correlation coefficient between two variables is computed from that sample. From this computed correlation, we want to make an inference about the population’s correlation between the two variables. The null hypothesis in this case is that there is zero correlation between the two variables of interest in the population, i.e. H0: ρ = 0.00.

There are two ways to determine whether to reject or fail to reject the null hypothesis:

1. Using the correlation coefficient as a calculated value
The sample correlation coefficient itself serves as the calculated value and is compared with a critical value of the correlation coefficient, which is read from a table of critical values. If the correlation coefficient is equal to or greater than the critical value (in absolute value, for a two-tailed test), the null hypothesis is rejected.

2. Using a t-test
Alternatively, a t-test can be used. The correlation coefficient is entered into a t-formula to yield a calculated value referred to as t. This is compared against a critical t-value (found in a t-table). If t is equal to or greater than the critical t-value, the null hypothesis is rejected.

Regardless of which method is used, the same decision should be reached.

So far, "correlation coefficient" has mostly referred to Pearson’s product-moment correlation, but it can include other types: Spearman’s, biserial, etc.

Tests on Many Single Correlation Coefficients

This refers to separate tests conducted on each of several single correlation coefficients. These coefficients are sometimes shown as a correlation matrix. Each test is treated separately but, as with multiple one-way tests, an inflated Type I error rate is a risk. The Bonferroni adjustment technique can be used to control it.

Test of Reliability and Validity Coefficients

Reliability and validity tests often rely on correlation coefficients.
Hence, researchers often apply a statistical test to determine whether or not their reliability and validity coefficients are significant. Again, the null hypothesis in this case is that the correlation coefficient is zero.

Statistically Comparing Two Correlation Coefficients

Refer to textbook p. 221, Figure 9.2. There are two possible scenarios:

1. Two populations, with the same two variables measured in each (e.g. x and y). One sample is drawn from each population, and r_xy is computed for each sample. The null hypothesis is that there is no difference between the two populations’ correlation coefficients (of x and y). For example, take two populations, male and female, and draw a sample from each. Within the male sample, the correlation between x and y is computed; within the female sample, the correlation between x and y is computed. The null hypothesis is that the correlation between x and y in the male population is the same as the correlation between x and y in the female population.

2. One population, with the correlation between x and y and the correlation between x and z both computed. The null hypothesis is that, in the population, the correlation between x and y is the same as the correlation between x and z.

Cautions

1. Relationship strength, effect size and power
Give credit to researchers who calculate strength of association, effect size, or a post hoc power analysis to see whether there is practical significance.

2. Linearity and homoscedasticity
For Pearson’s correlation coefficient, there are two assumptions:
a. Linearity of data
b. Homoscedasticity of data (meaning the variance of one variable does not depend on the value of the other variable)
The best way to check both is with scatter diagrams. If diagrams are not provided, the researcher should at least discuss these assumptions.

3. Causality
Give credit to those who point out that correlation does not mean causality.

4. Attenuation
If the measuring instruments are not perfectly reliable, the correlation coefficient will be underestimated.
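One way to rectify this: if researchers have information on the reliabilities of the measuring instruments, a correction-for-attenuation adjustment can be made, which increases the correlation coefficient.

5. Fisher’s r-to-z transformation
A transformation that converts a correlation coefficient r into a value z = 0.5 * ln((1 + r) / (1 - r)), whose sampling distribution is approximately normal. It is commonly used in tests that compare correlation coefficients.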
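
The notes name several calculations without giving formulas. As a rough sketch only, the Python below shows the commonly taught versions: the t statistic for a single correlation (t = r * sqrt(n - 2) / sqrt(1 - r^2), with n - 2 degrees of freedom), Fisher's r-to-z transformation used in a z-test comparing correlations from two independent samples (scenario 1), and the standard correction for attenuation. The function names and example numbers are hypothetical and do not come from the textbook, and the formulas are assumed to match the chapter's rather than confirmed by it. Scenario 2 (two correlations from the same sample) needs a different test and is not covered here.

```python
import math


def t_from_r(r: float, n: int) -> float:
    """t statistic for H0: rho = 0, to be compared with a critical t at n - 2 df."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


def fisher_z(r: float) -> float:
    """Fisher's r-to-z transformation: z = 0.5 * ln((1 + r) / (1 - r))."""
    return math.atanh(r)


def compare_independent_rs(r1: float, n1: int, r2: float, n2: int):
    """z statistic and two-sided p-value for H0: rho1 = rho2 (independent samples)."""
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z = (fisher_z(r1) - fisher_z(r2)) / se
    # Two-sided p-value from the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p


def correct_for_attenuation(r_xy: float, rel_x: float, rel_y: float) -> float:
    """Estimated correlation if both instruments were perfectly reliable."""
    return r_xy / math.sqrt(rel_x * rel_y)


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    print("t for r = 0.50, n = 27:", t_from_r(0.50, 27))
    print("males vs females:", compare_independent_rs(0.60, 50, 0.30, 60))
    print("disattenuated r:", correct_for_attenuation(0.40, 0.80, 0.80))
```

The t statistic is compared with a critical t-value supplied by the reader (for example from a t-table), in line with method 2 in the notes. The comparison function returns a p-value directly because the normal distribution is available in the standard library.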