Sampling Distributions

Introduction

Distribution of Mean

Finite Population

Chi-Square Distribution

T Distribution

F Distribution

Order Statistics

Introduction

Statistics concerns itself mainly with conclusions and predictions resulting

from chance outcomes that occur in carefully planned experiments or

investigations.

o These chance outcomes constitute a subset, or sample, of

measurements or observations from a larger set of values called the

population.

o In the continuous case they are usually values of identically distributed random variables, whose distribution is referred to as the population distribution, or the infinite population sampled.

If X1, X2... Xn are independent and identically distributed random variables, we

say that they constitute a random sample from the infinite population given by

their common distribution.

o If $f(x_1, x_2, \ldots, x_n)$ is the value of the joint distribution of such a set of random variables at $(x_1, x_2, \ldots, x_n)$, then

$$f(x_1, x_2, \ldots, x_n) = \prod_{i=1}^{n} f(x_i).$$

Statistical inferences are usually based on statistics, that is, on random

variables that are functions of a set of random variables X1, X2... Xn

constituting a random sample. Typical of these “statistics” are:

o If $X_1, X_2, \ldots, X_n$ constitute a random sample, then

$$\bar{X} = \frac{\sum_{i=1}^{n} X_i}{n}$$

is called the sample mean and

$$S^2 = \frac{\sum_{i=1}^{n} (X_i - \bar{X})^2}{n - 1}$$

is called the sample variance.
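The two definitions can be sketched in a few lines of Python. This is only an illustration: the function names and the data values are hypothetical, and the result is compared with the standard library's `statistics.variance`, which also uses the n - 1 divisor.

```python
import statistics

def sample_mean(xs):
    """Sample mean: sum of the observations divided by n."""
    return sum(xs) / len(xs)

def sample_variance(xs):
    """Sample variance with the n - 1 divisor used in the definition."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two observations")
    xbar = sample_mean(xs)
    return sum((x - xbar) ** 2 for x in xs) / (n - 1)

data = [2.0, 4.0, 4.0, 5.0, 7.0]   # hypothetical observations
xbar = sample_mean(data)
s2 = sample_variance(data)
library_s2 = statistics.variance(data)   # same n - 1 definition
```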

Distribution of Mean

Since statistics are themselves random variables, their values will vary from

sample to sample, and it is customary to refer to their distributions as

sampling distributions.

Sampling distribution of the mean: If $X_1, X_2, \ldots, X_n$ constitute a random sample from an infinite population with the mean $\mu$ and the variance $\sigma^2$, then

$$E(\bar{X}) = \mu \quad \text{and} \quad \operatorname{var}(\bar{X}) = \frac{\sigma^2}{n}.$$

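o $$E(\bar{X}) = E\left(\sum_{i=1}^{n} \frac{X_i}{n}\right) = \sum_{i=1}^{n} \frac{1}{n} E(X_i) = n \cdot \frac{1}{n}\,\mu = \mu$$

o $$\operatorname{var}(\bar{X}) = E\left([\bar{X} - E(\bar{X})]^2\right) = E\left(\left[\sum_{i=1}^{n} \frac{X_i}{n} - \sum_{i=1}^{n} \frac{1}{n} E(X_i)\right]^2\right) = E\left(\left[\sum_{i=1}^{n} \frac{1}{n}(X_i - \mu)\right]^2\right)$$

$$= \sum_{i=1}^{n} \frac{1}{n^2} E[(X_i - \mu)^2] = \frac{1}{n^2} \cdot n\sigma^2 = \frac{\sigma^2}{n}$$

The cross-product terms $E[(X_i - \mu)(X_j - \mu)]$, $i \ne j$, vanish because the $X_i$ are independent.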

o It is customary to write $E(\bar{X})$ as $\mu_{\bar{X}}$ and $\operatorname{var}(\bar{X})$ as $\sigma_{\bar{X}}^2$, and refer to $\sigma_{\bar{X}}$ as the standard error of the mean.
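A small simulation can make the two results concrete. The sketch below assumes a normal population with hypothetical parameters (mean 10, standard deviation 2) and sample size 25; any population with finite variance would serve equally well. It draws many samples and compares the mean and variance of the simulated sample means with the population mean and with the variance divided by n.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is reproducible

MU, SIGMA = 10.0, 2.0   # assumed population mean and standard deviation
N_SAMPLE = 25           # sample size n
REPS = 20000            # number of simulated samples

means = [
    statistics.fmean([random.gauss(MU, SIGMA) for _ in range(N_SAMPLE)])
    for _ in range(REPS)
]

mean_of_means = statistics.fmean(means)     # estimates E(X-bar)
var_of_means = statistics.pvariance(means)  # estimates var(X-bar)
theory_var = SIGMA ** 2 / N_SAMPLE          # sigma^2 / n
standard_error = theory_var ** 0.5          # sigma / sqrt(n)
```

With these assumed values the theoretical variance of the mean is 0.16 and the standard error is 0.4; the simulated quantities should land close to them.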

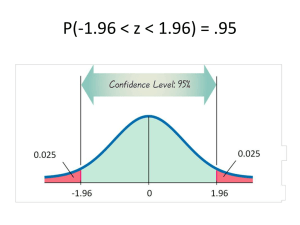

Central limit theorem: If $X_1, X_2, \ldots, X_n$ constitute a random sample from an infinite population with the mean $\mu$, the variance $\sigma^2$, and the moment-generating function $M_X(t)$, then the limiting distribution of

$$Z = \frac{\bar{X} - \mu}{\sigma / \sqrt{n}}$$

as $n \to \infty$ is the standard normal distribution.

o Sometimes, the central limit theorem is interpreted incorrectly as implying that the distribution of $\bar{X}$ approaches a normal distribution when $n \to \infty$. This is incorrect because $\operatorname{var}(\bar{X}) \to 0$ when $n \to \infty$.

o On the other hand, the central limit theorem does justify approximating the distribution of $\bar{X}$ with a normal distribution having the mean $\mu$ and the variance $\sigma^2 / n$ when n is large. In practice, $n \ge 30$ is usually regarded as large enough, regardless of the actual shape of the population sampled.

o If the population is normal, the distribution of $\bar{X}$ is a normal distribution regardless of the size of n: If $\bar{X}$ is the mean of a random sample of size n from a normal population with the mean $\mu$ and the variance $\sigma^2$, its sampling distribution is a normal distribution with the mean $\mu$ and the variance $\sigma^2 / n$.
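The normal approximation can be checked with a rough simulation. The sketch below assumes a clearly non-normal population, an exponential distribution with rate 1 (so mean 1 and standard deviation 1), and a sample size of 50. It standardizes each sample mean and records how often the result falls within 1.96 of zero, which would be about 95% of the time for a standard normal variable.

```python
import math
import random
import statistics

random.seed(1)

N_SAMPLE = 50     # n, large in the sense of the n >= 30 rule of thumb
REPS = 20000
MU = SIGMA = 1.0  # exponential population with rate 1: mean 1, sd 1

def standardized_mean():
    """Return Z = (x-bar - mu) / (sigma / sqrt(n)) for one simulated sample."""
    xs = [random.expovariate(1.0) for _ in range(N_SAMPLE)]
    return (statistics.fmean(xs) - MU) / (SIGMA / math.sqrt(N_SAMPLE))

z_values = [standardized_mean() for _ in range(REPS)]

# Fraction of standardized means inside (-1.96, 1.96).
coverage = sum(abs(z) <= 1.96 for z in z_values) / REPS
```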

Distribution of Mean: Finite Population (without replacement)

If an experiment consists of selecting one or more values from a finite set of numbers $\{c_1, c_2, \ldots, c_N\}$, this set is referred to as a finite population of size N.

If $X_1$ is the first value drawn from a finite population of size N, $X_2$ is the second value drawn, ..., $X_n$ is the nth value drawn, and the joint probability distribution of these n random variables is given by

$$f(x_1, x_2, \ldots, x_n) = \frac{1}{N(N-1)\cdots(N-n+1)}$$

for each ordered n-tuple of values of these random variables, then $X_1, X_2, \ldots, X_n$ are said to constitute a random sample from the given finite population.

o The probability for each subset of n of the N elements of the finite population (regardless of the order) is

$$\frac{n!}{N(N-1)\cdots(N-n+1)} = \frac{1}{\binom{N}{n}}.$$

This is often given as an alternative definition or as a criterion for the selection of a random sample of size n from a finite population of size N: Each of the $\binom{N}{n}$ possible samples must have the same probability.
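The counting argument can be verified by brute force for a small case. The values N = 5 and n = 3 below are hypothetical; the sketch enumerates the ordered n-tuples and the unordered subsets and uses exact fractions so that no rounding is involved.

```python
from fractions import Fraction
from itertools import combinations, permutations
from math import comb, factorial

N, n = 5, 3  # hypothetical small population size and sample size

# Probability of one ordered n-tuple: 1 / (N (N-1) ... (N-n+1)).
ordered_count = len(list(permutations(range(N), n)))
ordered_prob = Fraction(1, ordered_count)

# Each unordered subset corresponds to n! equally likely ordered tuples.
subset_prob = factorial(n) * ordered_prob

# Number of distinct subsets of size n.
num_subsets = len(list(combinations(range(N), n)))
```

Here the subset probability works out to exactly one over the number of subsets, which is the binomial coefficient.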

The marginal distribution of $X_r$ is given by

$$f(x_r) = \frac{1}{N} \quad \text{for } x_r = c_1, c_2, \ldots, c_N,$$

for $r = 1, 2, \ldots, n$.

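The mean and the variance of the finite population $\{c_1, c_2, \ldots, c_N\}$ are

$$\mu = \sum_{i=1}^{N} c_i \cdot \frac{1}{N} \quad \text{and} \quad \sigma^2 = \sum_{i=1}^{N} (c_i - \mu)^2 \cdot \frac{1}{N}.$$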

The joint marginal distribution of any two of the random variables $X_1, X_2, \ldots, X_n$ is given by

$$g(x_r, x_s) = \frac{1}{N(N-1)}$$

for each ordered pair of elements of the finite population.

If $X_r$ and $X_s$ are the rth and sth random variables of a random sample of size n drawn from the finite population $\{c_1, c_2, \ldots, c_N\}$, then

$$\operatorname{cov}(X_r, X_s) = -\frac{\sigma^2}{N-1}.$$
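The negative covariance can be confirmed exactly on a toy example. The population values below are hypothetical; the sketch weights every ordered pair of distinct elements by 1 / (N(N - 1)), as in the joint marginal distribution, and compares the resulting covariance with the stated formula using exact fractions.

```python
from fractions import Fraction
from itertools import permutations

population = [1, 2, 4, 7, 11]   # hypothetical finite population c_1, ..., c_N
N = len(population)

mu = Fraction(sum(population), N)
sigma2 = sum((c - mu) ** 2 for c in population) / N

# Every ordered pair of distinct elements has probability 1 / (N (N - 1)).
pairs = list(permutations(population, 2))
pair_prob = Fraction(1, N * (N - 1))

# cov(X_r, X_s) = E[(X_r - mu)(X_s - mu)] under the joint marginal distribution.
cov = sum(pair_prob * (a - mu) * (b - mu) for a, b in pairs)
```

The covariance is negative because drawing a large value first leaves slightly smaller values, on average, for the later draws.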

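If $\bar{X}$ is the mean of a random sample of size n from a finite population of size N with the mean $\mu$ and the variance $\sigma^2$, then

$$E(\bar{X}) = \mu \quad \text{and} \quad \operatorname{var}(\bar{X}) = \frac{\sigma^2}{n} \cdot \frac{N-n}{N-1}.$$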

o Note that $\operatorname{var}(\bar{X})$ differs from that of an infinite population only by the finite population correction factor $\frac{N-n}{N-1}$. When N is large compared to n, the difference between the two formulas for $\operatorname{var}(\bar{X})$ is usually negligible.
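As a sanity check, the formula with the correction factor can be compared with a full enumeration of all ordered samples drawn without replacement. The population and the sample size below are hypothetical, and exact fractions are used so the two sides can be compared for equality.

```python
from fractions import Fraction
from itertools import permutations

population = [1, 2, 4, 7, 11]   # hypothetical finite population
N, n = len(population), 3

mu = Fraction(sum(population), N)
sigma2 = sum((c - mu) ** 2 for c in population) / N

# All equally likely ordered samples of size n drawn without replacement.
means = [Fraction(sum(s), n) for s in permutations(population, n)]
e_mean = sum(means) / len(means)
var_mean = sum((m - e_mean) ** 2 for m in means) / len(means)

fpc = Fraction(N - n, N - 1)     # finite population correction factor
var_formula = sigma2 / n * fpc
var_infinite = sigma2 / n        # value without the correction, for comparison
```

In this small example the correction factor is 1/2, so ignoring it would double the variance; the factor approaches 1 only when N is much larger than n.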

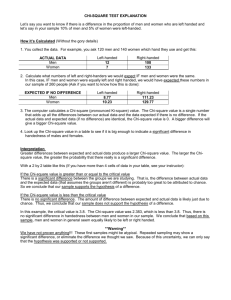

The Chi-Square Distribution

If X has the standard normal distribution, then $X^2$ has the chi-square distribution with 1 degree of freedom. Thus, the chi-square distribution plays an important role in problems of sampling from normal distributions.

A random variable X has a chi-square distribution, and it is referred to as a chi-square random variable, if and only if its probability density is given by

$$f(x) = \frac{1}{2^{\nu/2}\,\Gamma(\nu/2)}\, x^{\frac{\nu - 2}{2}}\, e^{-\frac{x}{2}} \quad \text{for } x > 0.$$

o The mean and variance of the chi-square distribution are given by $\mu = \nu$ and $\sigma^2 = 2\nu$, and its moment-generating function is given by $M_X(t) = (1 - 2t)^{-\nu/2}$.

If $X_1, X_2, \ldots, X_n$ are independent random variables having standard normal distributions, then $Y = \sum_{i=1}^{n} X_i^2$ has the chi-square distribution with n degrees of freedom.
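A quick simulation illustrates this result together with the mean and variance of the chi-square distribution. The sketch assumes 5 degrees of freedom (a hypothetical choice), sums squared standard normal draws, and compares the simulated mean and variance with the number of degrees of freedom and twice that number.

```python
import random
import statistics

random.seed(2)

NU = 5          # number of squared standard normal terms (degrees of freedom)
REPS = 40000

# Each Y is a sum of NU squared independent standard normal values.
y_values = [
    sum(random.gauss(0.0, 1.0) ** 2 for _ in range(NU))
    for _ in range(REPS)
]

sim_mean = statistics.fmean(y_values)      # should be close to NU
sim_var = statistics.pvariance(y_values)   # should be close to 2 * NU
```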

If $X_1, X_2, \ldots, X_n$ are independent random variables having chi-square distributions with $\nu_1, \nu_2, \ldots, \nu_n$ degrees of freedom, then $Y = \sum_{i=1}^{n} X_i$ has the chi-square distribution with $\nu_1 + \nu_2 + \cdots + \nu_n$ degrees of freedom.

If $X_1$ and $X_2$ are independent random variables, $X_1$ has a chi-square distribution with $\nu_1$ degrees of freedom, and $X_1 + X_2$ has a chi-square distribution with $\nu > \nu_1$ degrees of freedom, then $X_2$ has a chi-square distribution with $\nu - \nu_1$ degrees of freedom.

If $\bar{X}$ and $S^2$ are the mean and the variance of a random sample of size n from a normal population with the mean $\mu$ and the variance $\sigma^2$, then

1. $\bar{X}$ and $S^2$ are independent;

2. the random variable $\dfrac{(n-1)S^2}{\sigma^2}$ has a chi-square distribution with n - 1 degrees of freedom.

o Proof for part 2: Begin with the identity

$$\sum_{i=1}^{n} (X_i - \mu)^2 = \sum_{i=1}^{n} (X_i - \bar{X})^2 + n(\bar{X} - \mu)^2,$$

then divide each term by $\sigma^2$ and substitute $(n-1)S^2$ for $\sum_{i=1}^{n} (X_i - \bar{X})^2$:

$$\sum_{i=1}^{n} \left(\frac{X_i - \mu}{\sigma}\right)^2 = \frac{(n-1)S^2}{\sigma^2} + \left(\frac{\bar{X} - \mu}{\sigma/\sqrt{n}}\right)^2.$$

The term on the left-hand side is a random variable having a chi-square distribution with n degrees of freedom, the second term on the right-hand side is a random variable having a chi-square distribution with 1 degree of freedom, and since $\bar{X}$ and $S^2$ are independent (without proof here), it follows that the first term on the right-hand side is a random variable having a chi-square distribution with n - 1 degrees of freedom.
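The decomposition used in the proof is a purely algebraic identity, so it can be checked numerically on any data set. The sketch below assumes hypothetical population parameters and a sample of size 8, and confirms that the left-hand side equals the sum of the two right-hand terms up to floating-point rounding.

```python
import math
import random

random.seed(3)

MU, SIGMA, n = 5.0, 2.0, 8   # assumed population parameters and sample size
xs = [random.gauss(MU, SIGMA) for _ in range(n)]

xbar = sum(xs) / n
s2 = sum((x - xbar) ** 2 for x in xs) / (n - 1)

lhs = sum(((x - MU) / SIGMA) ** 2 for x in xs)       # chi-square, n d.f.
term1 = (n - 1) * s2 / SIGMA ** 2                     # chi-square, n - 1 d.f.
term2 = ((xbar - MU) / (SIGMA / math.sqrt(n))) ** 2   # chi-square, 1 d.f.

identity_holds = math.isclose(lhs, term1 + term2, rel_tol=1e-9)
```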

When the number of degrees of freedom is greater than 30, the probabilities related to chi-square distributions are usually approximated with normal distributions: If X is a random variable having a chi-square distribution with $\nu$ degrees of freedom and $\nu$ is large, the distribution of

$$\frac{X - \nu}{\sqrt{2\nu}},$$

and alternatively that of $\sqrt{2X} - \sqrt{2\nu}$, can be approximated with the standard normal distribution.
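To see how close the two approximations are, they can be compared with an exact value. The sketch below relies on a standard closed form that is not part of these notes: for an even number of degrees of freedom, the chi-square distribution function can be written as a finite Poisson-type sum. The choices of 40 degrees of freedom and x = 50 are hypothetical.

```python
import math
from statistics import NormalDist

def chi2_cdf_even(x, nu):
    """Exact chi-square CDF for even nu, using the finite-sum closed form."""
    if nu % 2 != 0:
        raise ValueError("this closed form needs an even number of degrees of freedom")
    if x <= 0:
        return 0.0
    half = x / 2.0
    terms = sum(half ** k / math.factorial(k) for k in range(nu // 2))
    return 1.0 - math.exp(-half) * terms

NU, X = 40, 50.0            # hypothetical values with NU > 30
phi = NormalDist().cdf      # standard normal distribution function

exact = chi2_cdf_even(X, NU)
approx1 = phi((X - NU) / math.sqrt(2 * NU))            # (X - nu) / sqrt(2 nu)
approx2 = phi(math.sqrt(2 * X) - math.sqrt(2 * NU))    # sqrt(2X) - sqrt(2 nu)
```

Both approximations should agree with the exact probability to within a few hundredths at this point; how well they do elsewhere depends on the degrees of freedom and on how far into the tail x lies.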

The t Distribution

In realistic applications the population standard deviation $\sigma$ is unknown. This makes it necessary to replace $\sigma$ with an estimate, usually with the sample standard deviation S.

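If Y and Z are independent random variables, Y has a chi-square distribution with $\nu$ degrees of freedom, and Z has the standard normal distribution, then the distribution of

$$T = \frac{Z}{\sqrt{Y/\nu}}$$

is given by

$$f(t) = \frac{\Gamma\left(\frac{\nu+1}{2}\right)}{\sqrt{\pi\nu}\,\Gamma\left(\frac{\nu}{2}\right)} \left(1 + \frac{t^2}{\nu}\right)^{-\frac{\nu+1}{2}} \quad \text{for } -\infty < t < \infty,$$

and is called the t distribution with $\nu$ degrees of freedom.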

If $\bar{X}$ and $S^2$ are the mean and the variance of a random sample of size n from a normal population with the mean $\mu$ and the variance $\sigma^2$, then

$$T = \frac{\bar{X} - \mu}{S/\sqrt{n}}$$

has the t distribution with n - 1 degrees of freedom.

o The random variables

$$Y = \frac{(n-1)S^2}{\sigma^2} \quad \text{and} \quad Z = \frac{\bar{X} - \mu}{\sigma/\sqrt{n}}$$

have, respectively, a chi-square distribution with n - 1 degrees of freedom and the standard normal distribution. Since they are also independent, substitution into the formula $T = \dfrac{Z}{\sqrt{Y/\nu}}$ with $\nu = n - 1$ yields the result.
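The practical effect of replacing the population standard deviation with S shows up in the tails. The sketch below assumes a standard normal population and a small sample size of 5 (both hypothetical choices), simulates the statistic T many times, and records how often its absolute value exceeds 1.96. For a standard normal variable that would happen about 5% of the time; with 4 degrees of freedom it happens noticeably more often.

```python
import math
import random
import statistics

random.seed(4)

MU, SIGMA, n = 0.0, 1.0, 5   # assumed normal population, small sample
REPS = 20000

def t_statistic():
    """Return T = (x-bar - mu) / (s / sqrt(n)) for one simulated sample."""
    xs = [random.gauss(MU, SIGMA) for _ in range(n)]
    xbar = statistics.fmean(xs)
    s = statistics.stdev(xs)   # sample standard deviation (n - 1 divisor)
    return (xbar - MU) / (s / math.sqrt(n))

t_values = [t_statistic() for _ in range(REPS)]

# Fraction of |T| values beyond the normal 5% cut-off of 1.96.
tail_fraction = sum(abs(t) > 1.96 for t in t_values) / REPS
```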

When the number of degrees of freedom is 30 or more, probabilities related to the t distribution are usually approximated with the use of normal distributions.

The F Distribution

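If U and V are independent random variables having chi-square distributions with $\nu_1$ and $\nu_2$ degrees of freedom, then

$$F = \frac{U/\nu_1}{V/\nu_2}$$

is a random variable having an F distribution, namely, a random variable whose probability density is given by

$$g(f) = \frac{\Gamma\left(\frac{\nu_1 + \nu_2}{2}\right)}{\Gamma\left(\frac{\nu_1}{2}\right)\Gamma\left(\frac{\nu_2}{2}\right)} \left(\frac{\nu_1}{\nu_2}\right)^{\frac{\nu_1}{2}} f^{\frac{\nu_1}{2} - 1} \left(1 + \frac{\nu_1}{\nu_2} f\right)^{-\frac{1}{2}(\nu_1 + \nu_2)}$$

for $f > 0$ and $g(f) = 0$ elsewhere.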

If $S_1^2$ and $S_2^2$ are the variances of independent random samples of sizes $n_1$ and $n_2$ from normal distributions with the variances $\sigma_1^2$ and $\sigma_2^2$, then

$$F = \frac{S_1^2/\sigma_1^2}{S_2^2/\sigma_2^2} = \frac{\sigma_2^2 S_1^2}{\sigma_1^2 S_2^2}$$

is a random variable having an F distribution with $n_1 - 1$ and $n_2 - 1$ degrees of freedom.

o $$\chi_1^2 = \frac{(n_1 - 1)s_1^2}{\sigma_1^2} \quad \text{and} \quad \chi_2^2 = \frac{(n_2 - 1)s_2^2}{\sigma_2^2}$$

are values of independent random variables having chi-square distributions with $n_1 - 1$ and $n_2 - 1$ degrees of freedom. Substitution of the values for U and V yields the result.
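The variance-ratio statistic can also be explored by simulation. The sketch below assumes hypothetical sample sizes (6 and 11) and population standard deviations (2 and 3). As a check it uses a standard property of the F distribution that is not stated in these notes: when the second number of degrees of freedom exceeds 2, the mean of F equals that number divided by itself minus 2.

```python
import random
import statistics

random.seed(5)

N1, N2 = 6, 11               # hypothetical sample sizes
SIGMA1, SIGMA2 = 2.0, 3.0    # assumed population standard deviations
REPS = 20000

def sample_variance(xs):
    """Sample variance with the n - 1 divisor."""
    m = sum(xs) / len(xs)
    return sum((x - m) ** 2 for x in xs) / (len(xs) - 1)

def f_statistic():
    """Return (S1^2 / sigma1^2) / (S2^2 / sigma2^2) for one pair of samples."""
    s1_sq = sample_variance([random.gauss(0.0, SIGMA1) for _ in range(N1)])
    s2_sq = sample_variance([random.gauss(0.0, SIGMA2) for _ in range(N2)])
    return (s1_sq / SIGMA1 ** 2) / (s2_sq / SIGMA2 ** 2)

f_values = [f_statistic() for _ in range(REPS)]
sim_mean = statistics.fmean(f_values)

nu2 = N2 - 1
theory_mean = nu2 / (nu2 - 2)   # standard result for nu2 > 2, assumed here
```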

Applications of the F distribution arise in problems in which the variances of two normal populations are compared.

Order Statistics

Consider a random sample of size n from an infinite population with a

continuous density and suppose we arrange the values of X1, X2... Xn according

to size. If we look upon the smallest of the x's as a value of the random

variable Y1, the next largest as a value of the random variable Y2, the next

largest after that as a value of the random variable Y3, ..., and the largest as a

value of the random variable Yn, we refer to these Y's as order statistics.

o In particular, $Y_1$ is the first order statistic, $Y_2$ is the second order statistic, and so on. (As the population is infinite and continuous, the probability that any two of the x's will be alike is zero.)

For random samples of size n from an infinite population whose density has the value f(x) at x, the probability density of the rth order statistic $Y_r$ is given by

$$g_r(y_r) = \frac{n!}{(r-1)!\,(n-r)!} \left[\int_{-\infty}^{y_r} f(x)\,dx\right]^{r-1} f(y_r) \left[\int_{y_r}^{\infty} f(x)\,dx\right]^{n-r}$$

for $-\infty < y_r < \infty$.
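For a concrete instance of this density, consider a uniform population on (0, 1), an assumption made only for illustration. There f(x) = 1, so the two integrals reduce to y and 1 - y. The sketch below evaluates the resulting density for hypothetical values n = 7 and r = 3 and checks numerically that it integrates to 1; it also computes the mean, which for the uniform case is the standard value r / (n + 1).

```python
import math

def order_stat_density_uniform(y, r, n):
    """Density of the r-th order statistic for a uniform(0, 1) population.

    With f(x) = 1 on (0, 1), the two integrals become y and 1 - y.
    """
    if not 0.0 < y < 1.0:
        return 0.0
    coeff = math.factorial(n) / (math.factorial(r - 1) * math.factorial(n - r))
    return coeff * y ** (r - 1) * (1.0 - y) ** (n - r)

def midpoint_integral(func, a, b, steps=20000):
    """Simple midpoint-rule integral, accurate enough for a sanity check."""
    h = (b - a) / steps
    return h * sum(func(a + (i + 0.5) * h) for i in range(steps))

n, r = 7, 3   # hypothetical sample size and rank

total = midpoint_integral(
    lambda y: order_stat_density_uniform(y, r, n), 0.0, 1.0)
mean = midpoint_integral(
    lambda y: y * order_stat_density_uniform(y, r, n), 0.0, 1.0)
```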

For large n, the sampling distribution of the median for random samples of size $2n + 1$ is approximately normal with the mean $\tilde{\mu}$ and the variance

$$\frac{1}{8[f(\tilde{\mu})]^2\, n},$$

where $\tilde{\mu}$ denotes the population median.
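The large-sample variance of the median can be compared with a simulation. The sketch below assumes a standard normal population, for which the median is 0 and the density at the median is 1 / sqrt(2 pi), and takes n = 50, so each sample has 101 observations. These choices are hypothetical, and since the formula is an approximation only rough agreement should be expected.

```python
import math
import random
import statistics

random.seed(6)

n = 50        # samples have size 2n + 1 = 101
REPS = 4000

# Standard normal population: median 0, density at the median 1 / sqrt(2 pi).
f_at_median = 1.0 / math.sqrt(2.0 * math.pi)
theory_var = 1.0 / (8.0 * f_at_median ** 2 * n)   # equals pi / (4 n)

medians = [
    statistics.median([random.gauss(0.0, 1.0) for _ in range(2 * n + 1)])
    for _ in range(REPS)
]

sim_mean = statistics.fmean(medians)      # should be near the median, 0
sim_var = statistics.pvariance(medians)   # should be near theory_var
```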