Here is a full lecture on the F-test that I gave today.


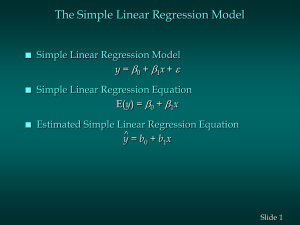

F-Tests in Econometrics

There are three types of F-tests commonly used in economics.

(1) zero slopes

(2) linear restrictions

(3) Chow test for regression stability

All three of these tests can be easily computed using the GRETL software. I will show you how to do this later in class. Let’s begin with zero slopes.

(1) zero slopes – F-test

Suppose that we have the following regression:

$$Y_t = \beta_0 + \beta_1 X_{1t} + \cdots + \beta_{K-1} X_{K-1,t} + \varepsilon_t$$

for t = 1,...,N.

Clearly, we have K coefficients (β0 through βK-1) and N observations. The zero-slopes F-test is concerned with the following hypothesis:

Ho: β1 = 0, ..., βK-1 = 0

Ha: βj ≠ 0 for some j

How can we test this hypothesis? To test this, we consider two separate regressions.

(Restricted)

$$Y_t = \beta_0 + \varepsilon_t^{R}$$

(Unrestricted)

$$Y_t = \beta_0 + \beta_1 X_{1t} + \cdots + \beta_{K-1} X_{K-1,t} + \varepsilon_t^{UR}$$
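To make the two regressions concrete, here is a minimal Python sketch (NumPy, simulated data; the helper name `ols_residuals`, the sample size, and the coefficient values are hypothetical, not from the lecture) that fits both by ordinary least squares. Note that the restricted regression on a constant alone estimates β0 by the sample mean of Yt, so its residuals are simply deviations from that mean.

```python
import numpy as np

def ols_residuals(y, X):
    """Fit y = X b + e by ordinary least squares and return the residuals."""
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ b

# Hypothetical simulated data: N = 50 observations, K = 3 coefficients
rng = np.random.default_rng(0)
N = 50
x1 = rng.normal(size=N)
x2 = rng.normal(size=N)
y = 1.0 + 0.5 * x1 - 0.2 * x2 + rng.normal(size=N)

const = np.ones(N)
X_r = const[:, None]                      # restricted: constant only
X_ur = np.column_stack([const, x1, x2])   # unrestricted: constant plus both slopes

resid_r = ols_residuals(y, X_r)    # estimated restricted residuals
resid_ur = ols_residuals(y, X_ur)  # estimated unrestricted residuals
```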

You should study these two equations carefully. Note that the restricted equation simply assumes that all the slope coefficients β1, ..., βK-1 are zero (only the constant β0 is kept). The error term in the restricted equation is $\varepsilon_t^{R}$, while the error term in the unrestricted equation is $\varepsilon_t^{UR}$.

When we run these regressions, we get the estimated residuals $\hat\varepsilon_t^{R}$ and $\hat\varepsilon_t^{UR}$. In general, these estimated residuals will be different. However, if Ho is true, then it makes sense to believe that $\hat\varepsilon_t^{R} \approx \hat\varepsilon_t^{UR}$; that is, they will be nearly the same. Therefore, if Ho is true, the following logic holds:

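$$
\begin{aligned}
\hat\varepsilon_t^{R} \approx \hat\varepsilon_t^{UR}
&\;\Rightarrow\; (\hat\varepsilon_t^{R})^2 \approx (\hat\varepsilon_t^{UR})^2 \\
&\;\Rightarrow\; \sum_{t=1}^{N} (\hat\varepsilon_t^{R})^2 \approx \sum_{t=1}^{N} (\hat\varepsilon_t^{UR})^2 \\
&\;\Rightarrow\; SSR_R - SSR_{UR} \approx 0
\end{aligned}
$$

where $SSR_R = \sum_{t=1}^{N} (\hat\varepsilon_t^{R})^2$ and, similarly, $SSR_{UR} = \sum_{t=1}^{N} (\hat\varepsilon_t^{UR})^2$.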
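A small simulation can make the claim $SSR_R - SSR_{UR} \approx 0$ concrete. The sketch below (NumPy; the sample size, seed, and coefficient values are hypothetical) computes both sums of squared residuals once with the true slopes set to zero (Ho true) and once with nonzero slopes (Ho false), using the same regressors and noise both times. Because the restricted model is a special case of the unrestricted one, $SSR_R$ is never smaller than $SSR_{UR}$; the gap should be small when Ho holds and large when it does not.

```python
import numpy as np

def ssr(y, X):
    """Sum of squared OLS residuals from regressing y on the columns of X."""
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    e = y - X @ b
    return float(e @ e)

def ssr_pair(slopes, N=200, seed=1):
    """Return (SSR_R, SSR_UR) for simulated data with the given true slopes."""
    rng = np.random.default_rng(seed)
    X_slopes = rng.normal(size=(N, len(slopes)))
    y = 2.0 + X_slopes @ np.asarray(slopes) + rng.normal(size=N)
    const = np.ones((N, 1))
    ssr_r = ssr(y, const)                           # restricted: constant only
    ssr_ur = ssr(y, np.hstack([const, X_slopes]))   # unrestricted: constant plus slopes
    return ssr_r, ssr_ur

# Ho true: both slopes are zero, so SSR_R - SSR_UR should be small
r0, ur0 = ssr_pair([0.0, 0.0])
# Ho false: the slopes are far from zero, so the gap should be large
r1, ur1 = ssr_pair([1.0, -1.0])
print(r0 - ur0, r1 - ur1)
```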

t

We next “normalize” this statistic, since any change in the units of measurement of Yt also changes the units of measurement of εt, and hence of both sums of squared residuals. We do this by dividing by $SSR_{UR}$, which makes the ratio unit-free.

It follows that if Ho is true, then

$$\frac{SSR_R - SSR_{UR}}{SSR_{UR}} \approx 0.$$

We can now see that the F statistic can be defined in the following way:

$$\hat F = \frac{SSR_R - SSR_{UR}}{SSR_{UR}} \cdot \frac{N-K}{K-1}$$
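The formula translates directly into a one-line function. This is a minimal sketch; the function name `zero_slopes_f` and the SSR values, N, and K in the usage line are hypothetical numbers chosen only to show the arithmetic.

```python
def zero_slopes_f(ssr_r, ssr_ur, n, k):
    """F statistic for Ho: all K-1 slope coefficients are zero.

    ssr_r  -- SSR of the restricted regression (constant only)
    ssr_ur -- SSR of the unrestricted regression (all K coefficients)
    n, k   -- number of observations N and number of coefficients K
    """
    return ((ssr_r - ssr_ur) / ssr_ur) * ((n - k) / (k - 1))

# Hypothetical numbers: SSR_R = 120, SSR_UR = 80, N = 50, K = 4
print(round(zero_slopes_f(120.0, 80.0, 50, 4), 4))  # → 7.6667
```

Here (120 - 80)/80 = 0.5 and (50 - 4)/(4 - 1) = 46/3, so the statistic is 23/3.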

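We multiply the statistic by (N-K)/(K-1), which is the same as dividing the numerator $SSR_R - SSR_{UR}$ by its degrees of freedom (K-1, the number of restrictions) and the denominator $SSR_{UR}$ by its degrees of freedom (N-K). Scaling by degrees of freedom allows us to determine the distribution of the statistic for any linear model and any amount of data. If Ho is true (and the regression errors are independent, homoskedastic, and normally distributed), then the $\hat F$ statistic has an $F_{K-1,\,N-K}$ distribution. Textbooks on econometrics have F distribution tables, and you can also find such tables on the Internet.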
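Instead of a printed table, SciPy's `scipy.stats.f` distribution object can supply the critical value (`ppf`, the inverse CDF) and the p-value (`sf`, the upper-tail probability). A minimal sketch, assuming SciPy is installed and a 5% significance level; the helper names and the degrees of freedom (taken from the hypothetical N = 50, K = 4 case) are illustrative only.

```python
from scipy import stats

def f_critical_value(df1, df2, alpha=0.05):
    """Upper-tail critical value of the F(df1, df2) distribution at level alpha."""
    return stats.f.ppf(1.0 - alpha, df1, df2)

def f_p_value(f_hat, df1, df2):
    """Probability that an F(df1, df2) random variable exceeds f_hat."""
    return stats.f.sf(f_hat, df1, df2)

# Hypothetical zero-slopes test with N = 50, K = 4: df1 = K-1 = 3, df2 = N-K = 46
crit = f_critical_value(3, 46)
print(crit)
print(f_p_value(7.6667, 3, 46))
```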

Our decision rule for the test is as follows:

If F̂ is “large”, then we should reject Ho. If, on the other hand, F̂ is “small”, then we should not reject Ho. What do we mean by “large” and “small” here? This is given by the distribution of F̂. On the next page is a drawing of the F distribution (thanks to my daughter Sonya, age 13, for making this drawing) with degrees of freedom df1 = K-1 and df2 = N-K. Our notion of “small” would be a value of F̂ that lands in the aquamarine-colored area. Our notion of “large” would be any value of F̂ that lands in the red region.

Our rule therefore becomes: reject Ho if F̂ is larger than the critical value, and do not reject Ho if F̂ is smaller than the critical value. Another way of saying this (at the 5% significance level) is to reject Ho if the p-value of F̂ is less than 0.05 and not to reject Ho if the p-value of F̂ is larger than 0.05.

Reject Ho if F̂ > critical value. Do not reject Ho if F̂ < critical value.
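The two versions of the decision rule (critical value and p-value) always agree, because a larger F̂ always means a smaller upper-tail probability. A minimal sketch using SciPy that applies both rules side by side; the function name `f_test_decision` and the example inputs are hypothetical.

```python
from scipy import stats

def f_test_decision(f_hat, df1, df2, alpha=0.05):
    """Apply both equivalent rejection rules; returns (by critical value, by p-value)."""
    critical_value = stats.f.ppf(1.0 - alpha, df1, df2)
    p_value = stats.f.sf(f_hat, df1, df2)
    reject_by_critical_value = bool(f_hat > critical_value)
    reject_by_p_value = bool(p_value < alpha)
    return reject_by_critical_value, reject_by_p_value

print(f_test_decision(7.6667, 3, 46))  # → (True, True): reject Ho
print(f_test_decision(1.0, 3, 46))     # → (False, False): do not reject Ho
```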

Note that the critical value changes whenever N or K changes. Note also that if we do not reject Ho, then we are saying that our regression is not significantly better at explaining Yt than the sample mean Ȳ. If we reject Ho, then we are saying that the regression model is better at explaining the variation in Yt than Ȳ.

(2) linear restrictions – F-test

To see how we encounter linear restrictions in econometrics, suppose that we have the following Cobb-Douglas production function:

$$Y_t = A L_t^{\alpha} K_t^{\beta} e^{u_t}$$

We usually just assume β = 1 - α (constant returns to scale). However, this may not be justified by the data. We should at least test whether α + β = 1. Such a test is a test of linear restrictions. In fact, it is a test of exactly ONE restriction, namely α + β = 1.

First we convert the above model into a linear model by taking the natural logarithm of both sides to get

$$\log(Y_t) = \log(A) + \alpha \log(L_t) + \beta \log(K_t) + u_t$$

We can write this again as

$$\log(Y_t) = \beta_0 + \beta_1 \log(L_t) + \beta_2 \log(K_t) + u_t$$

where β0 = log(A), β1 = α, and β2 = β.

Our hypothesis is Ho: β2 = 1 - β1, with the alternative hypothesis Ha: β2 ≠ 1 - β1. How can we test this hypothesis? To test Ho we need to run two regressions.

(Restricted)

$$\log(Y_t) - \log(K_t) = \beta_0 + \beta_1 \{\log(L_t) - \log(K_t)\} + u_t^{R}$$

(Unrestricted)

$$\log(Y_t) = \beta_0 + \beta_1 \log(L_t) + \beta_2 \log(K_t) + u_t^{UR}$$
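The restricted regression comes from substituting Ho: β2 = 1 - β1 into the unrestricted model and then moving log(Kt) to the left-hand side:

$$
\begin{aligned}
\log(Y_t) &= \beta_0 + \beta_1 \log(L_t) + (1-\beta_1)\log(K_t) + u_t \\
\log(Y_t) - \log(K_t) &= \beta_0 + \beta_1 \log(L_t) - \beta_1 \log(K_t) + u_t \\
&= \beta_0 + \beta_1 \{\log(L_t) - \log(K_t)\} + u_t
\end{aligned}
$$

The restricted regression therefore estimates only two coefficients, β0 and β1, one fewer than the unrestricted regression.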

We run these two regressions and get the estimated residuals $\hat u_t^{R}$ and $\hat u_t^{UR}$. Once again we expect that if Ho is true, then the estimated residuals satisfy $\hat u_t^{R} \approx \hat u_t^{UR}$. We can therefore form the following F-test statistic:

$$\hat F = \frac{SSR_R - SSR_{UR}}{SSR_{UR}} \cdot \frac{N-K}{1} \sim F_{1,\,N-K}$$

In this case, the unrestricted regression has N-K degrees of freedom, where K = 3 is the number of coefficients in the unrestricted regression (not to be confused with capital Kt). In addition, the restricted regression imposes only 1 restriction. Therefore, we multiply the statistic by the ratio (N-K)/1 to get the F statistic.
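The whole Cobb-Douglas test can be sketched in a few lines of NumPy. This is a minimal illustration on simulated data, not the GRETL procedure used in class; the function name `crs_f_test`, the sample size, the noise level, and the parameter values (α = 0.3, β = 0.7) are hypothetical. The restricted regression uses the transformed variables log(Yt) - log(Kt) and log(Lt) - log(Kt).

```python
import numpy as np

def ssr(y, X):
    """Sum of squared OLS residuals from regressing y on the columns of X."""
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    e = y - X @ b
    return float(e @ e)

def crs_f_test(output, labor, capital):
    """F statistic for Ho: beta1 + beta2 = 1 in log(Y) = b0 + b1 log(L) + b2 log(K) + u."""
    log_y, log_l, log_k = np.log(output), np.log(labor), np.log(capital)
    n = len(output)
    const = np.ones(n)
    # Unrestricted: log(Y) on a constant, log(L), and log(K) (3 coefficients)
    ssr_ur = ssr(log_y, np.column_stack([const, log_l, log_k]))
    # Restricted: log(Y) - log(K) on a constant and log(L) - log(K)
    ssr_r = ssr(log_y - log_k, np.column_stack([const, log_l - log_k]))
    k_ur = 3   # coefficients in the unrestricted regression
    q = 1      # number of restrictions
    return ((ssr_r - ssr_ur) / ssr_ur) * ((n - k_ur) / q)

# Hypothetical simulated data with constant returns to scale (0.3 + 0.7 = 1)
rng = np.random.default_rng(42)
n = 100
labor = rng.uniform(1.0, 100.0, size=n)
capital = rng.uniform(1.0, 100.0, size=n)
noise = rng.normal(scale=0.1, size=n)
output = 2.0 * labor**0.3 * capital**0.7 * np.exp(noise)

f_crs = crs_f_test(output, labor, capital)
print(f_crs)
```

Compare the result with the $F_{1,N-K}$ critical value as described above.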

Our decision rule is to reject Ho if F̂ > the critical value. We will not reject Ho if the calculated F̂ < the critical value. Note that the F distribution is now F1,N-K.

The critical value shown in the figure can be determined by looking up the value in an F table or on the Internet. The critical value changes whenever K or N changes.

This leads us to the final type of F-test.

(3) Chow Test for Regression Stability

The last F-test concerns the issue of whether the regression is stable or not. We begin by assuming a regression model

$$Y_t = \beta_0 + \beta_1 X_{1t} + \cdots + \beta_{K-1} X_{K-1,t} + \varepsilon_t$$

for t = 1, ..., N.

The question we ask is whether our estimated model will be roughly the same whether we estimate it over the first half of the data or over the second half of the data.

Consider splitting our data into two equal parts.

t = 1,2,...,N/2

and

t = (N/2)+1, (N/2) + 2, ..., N.

Both intervals have N/2 observations (this assumes N is even).

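We next consider two regressions, one on the first data set and the other on the second data set.

Unrestricted Regression on data1:

$$Y_t = \beta_0^{1} + \beta_1^{1} X_{1t} + \cdots + \beta_{K-1}^{1} X_{K-1,t} + \varepsilon_t^{UR1} \quad \text{for } t = 1, 2, \ldots, N/2$$

Unrestricted Regression on data2:

$$Y_t = \beta_0^{2} + \beta_1^{2} X_{1t} + \cdots + \beta_{K-1}^{2} X_{K-1,t} + \varepsilon_t^{UR2} \quad \text{for } t = (N/2)+1, (N/2)+2, \ldots, N$$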

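Our hypothesis is that:

$$H_o:\ \beta_0^{1} = \beta_0^{2},\ \beta_1^{1} = \beta_1^{2},\ \ldots,\ \beta_{K-1}^{1} = \beta_{K-1}^{2}$$

$$H_a:\ \beta_j^{1} \neq \beta_j^{2} \text{ for some } j$$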

This leads to K constraints in Ho. We run a third regression over the entire data set and impose the K constraints in Ho. Therefore, we can write this regression as

Restricted Regression on all data:

$$Y_t = \beta_0 + \beta_1 X_{1t} + \cdots + \beta_{K-1} X_{K-1,t} + \varepsilon_t^{R} \quad \text{for } t = 1, \ldots, N$$

Once again we form an F statistic using the SSRs from the three regressions above:

$$\hat F = \frac{SSR_R - (SSR_{UR1} + SSR_{UR2})}{SSR_{UR1} + SSR_{UR2}} \cdot \frac{N-2K}{K}$$
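The three regressions of the Chow test can be sketched in NumPy as follows. This assumes, as in the lecture, that N is even and the data are split at the midpoint; the function name `chow_f` and the simulated data (K = 2 coefficients, with the second series changing its coefficients halfway through) are hypothetical.

```python
import numpy as np

def ssr(y, X):
    """Sum of squared OLS residuals from regressing y on the columns of X."""
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    e = y - X @ b
    return float(e @ e)

def chow_f(y, X):
    """Chow F statistic for equal coefficients in the first and second halves.

    y -- length-N array of Y_t; X -- N-by-K design matrix (first column = constant).
    """
    n, k = X.shape
    if n % 2:
        raise ValueError("this sketch assumes N is even")
    half = n // 2
    ssr_r = ssr(y, X)                    # restricted: all N observations
    ssr_ur1 = ssr(y[:half], X[:half])    # unrestricted: t = 1, ..., N/2
    ssr_ur2 = ssr(y[half:], X[half:])    # unrestricted: t = N/2 + 1, ..., N
    ssr_ur = ssr_ur1 + ssr_ur2
    return ((ssr_r - ssr_ur) / ssr_ur) * ((n - 2 * k) / k)

# Hypothetical data: N = 100, K = 2 (constant and one regressor)
rng = np.random.default_rng(7)
n = 100
x = rng.normal(size=n)
X = np.column_stack([np.ones(n), x])
noise = rng.normal(size=n)
y_stable = 1.0 + 2.0 * x + noise   # same coefficients throughout
y_break = np.where(np.arange(n) < n // 2, 1.0 + 2.0 * x, 3.0 - 1.0 * x) + noise
print(chow_f(y_stable, X), chow_f(y_break, X))
```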

We divide by K because there are K constraints in Ho. We multiply by (N-2K) because each of the two unrestricted regressions has (N/2) - K degrees of freedom, and adding these together gives N - 2K.

If Ho is true, the F statistic above has an F distribution with K and N-2K degrees of freedom. The graph of the F distribution is shown below.

Like before, we will reject Ho if F̂ > the critical value. We will not reject Ho if the calculated F̂ < the critical value. Note that the F distribution is now FK,N-2K.

This means we should reject Ho if the p-value for F̂ is less than 0.05, and we should not reject Ho if the p-value for F̂ is greater than 0.05.
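The p-value for the Chow statistic uses the same SciPy call as before, only with K and N-2K degrees of freedom. A minimal sketch, assuming SciPy is installed; the function name `chow_p_value` and the values F̂ = 4.2, N = 100, K = 3 are hypothetical.

```python
from scipy import stats

def chow_p_value(f_hat, n, k):
    """Upper-tail p-value of a Chow F statistic under F(K, N - 2K)."""
    return stats.f.sf(f_hat, k, n - 2 * k)

# Hypothetical values: F-hat = 4.2 from N = 100 observations and K = 3 coefficients
p = chow_p_value(4.2, n=100, k=3)
print(p, "reject Ho" if p < 0.05 else "do not reject Ho")
```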