
Testing Object Oriented

Software

A Research Report

4-Week Project (F05)

Submitted to:

Yvonne Dittrich

Submitted by:

Hafiz Saqif Chaudhry saqifcha@itu.dk

Kashif Javaid kasjavaid@itu.dk

Wajid Qureshi wajid@itu.dk

Dated: 27th May 2005


Table of Contents

1. Introduction
1.4. Software Testing
1.5. The Testing Levels
1.6. Why Object Oriented Testing is complicated
2. Complications in Object Oriented Testing
2.1. Encapsulation and Information Hiding
2.2. Polymorphism and Dynamic Binding
2.3. Inheritance
2.4. Genericity
2.5. Sub-Conclusion
3. Testing Techniques
4. An overview of Testing Tools
5. Conclusion
6. Suggestions for Extension
7. References


Preface

In this report, we investigate the effects of the object oriented approach on software testing and how to carry out successful testing. We evaluate several techniques and tools for testing object oriented software, proposed in diverse research articles.

The report mainly consists of the following sections:

Introduction - covers software testing theory, testing levels and an approach to testing object oriented software.

Complications in object oriented testing - addresses the obstacles in object oriented testing. This part lists the problems in sub-sections, each of which describes a problem, followed by an example and a proposed solution.

Testing Techniques - a more general section that describes techniques for testing object oriented software which overcome the problems mentioned in the previous section as well as other complications.

An overview of testing tools - describes the integral properties of a good software test tool for object oriented software.

After the classification of tools, we conclude the report with some suggestions for extension.


1. Introduction

1.1. Purpose:

The purpose of this project is to investigate the complications introduced by the powerful new features of object oriented languages and how to deal with them. The project is research based and covers research articles on aspects of object oriented languages. The research focuses on these concerns by discussing what testing an object oriented system means and by presenting several techniques for doing so.

The research articles have been chosen for the comprehensiveness of the problems they mention. We consider general problems that are specific to the object oriented approach; problems common to both traditional and object oriented software are not covered in this report.

1.2. Motivation:

Software is key to the success of organizations from the smallest to the largest scale. Companies are buying more software to fulfill their business requirements, and their foremost expectation is that the software be solution oriented, easy to use, fast and bug free. Furthermore, robustness, reliability and flexibility are other key features of a good software solution. Software development companies are working hard to achieve these goals. The methodology used to achieve them is to test the software at every stage of its development. The development of the test strategy starts with the software specification and continues throughout the development of the software.

Object oriented technology is becoming more and more popular due to its advantages in improving productivity and reliability in software development. On the other hand, object oriented systems present new and different problems with respect to traditional structured programs, due to their different approach. Significant research has been carried out in object oriented analysis, design and programming languages, but relatively little attention has been paid to the testing of object oriented systems.

1.3. Methodology:

This research project spans articles by different authors that discuss the tricky situations in the testing of object oriented software. We also looked at testing books, but most of them did not directly address techniques to overcome the problems caused by the object oriented approach. The research articles were found in the ITU library’s databases, i.e., ACM, CiteSeer, DADS and Springer.


1.4. Software Testing:

The authors present the following comprehensive IEEE definition of testing: "Testing is

the process of executing a program with the intention to yield measurable errors. It

requires that there be an oracle to determine whether or not the program has functioned as

required, with comparison of performance against a defined specification." They also

present the following definition of the testing process: "The process of exercising the

routines provided by an object with the goal of uncovering errors in the implementation

of the routines or the state of the object or both."

1.5. The Testing Levels:

The introduction of object-oriented programming has created new types of testing, as

well as utilizing the traditional types. Smith and Robson discuss four levels:

1) The algorithm level, which involves exercising a routine (method) on some data.

2) The class level, which is concerned with the interaction between the attributes and methods of a particular class.

3) The cluster level, which considers interactions between a group of co-operating classes.

4) The system level, which involves testing an entire system in the aggregate.

Of these four levels, the class and cluster levels are specific to the object oriented paradigm, while the algorithm and system levels resemble traditional unit and system testing. Jorgensen and Erickson segment the testing process into three levels: unit, integration, and system. They agree with Smith and Robson that unit testing begins at the method level. They view system testing as testing with threads (sequences of normal usage steps) and interactions between threads. This is a more precisely defined view of Smith and Robson's system level.

1.6. Why Object Oriented Testing is complicated:

While it is still possible to apply traditional testing techniques to object oriented problems, testing object oriented software is more difficult than the traditional approach, since programs are not executed in a sequential manner. Object oriented components can be combined in an arbitrary order, so defining test cases becomes a search for the order of routines that causes an error.

The state-based nature of object oriented systems can have a negative effect on testing.

This is because the state of the object is not defined solely by state values in general, but


through associations with other objects. Additionally, objects can be compared by

equality and identity, which adds a level of confusion concerning what needs to be

verified.

2. Complications in Object Oriented Testing

Since new problems are related to object oriented specific characteristics, their

identification requires an analysis of the features provided by object oriented languages.

We describe the critical features in object oriented testing in the following sub-sections.

Each sub-section first describes the problem, then gives examples, and finally presents proposed solutions.

2.1. Encapsulation and Information Hiding:

Information hiding refers to the possibility for the programmer to specify whether a feature, encapsulated into some module, is visible to the client modules or not; it allows for clearly distinguishing between the module interface and its implementation.

In conventional procedural programming, the basic component is the subroutine and the testing method for such a component is input/output based. In object-oriented programming, by contrast, the basic component is a class, composed of a data structure and a set of operations. Objects are run-time instances of classes. The data structure

defines the state of the object which is modified by the class operations (methods). In this

case, correctness of an operation is based not only on the input/output relation, but also

on the initial and resulting state of the object. Furthermore, in general, the data structure

is not directly accessible, but can only be accessed using the class’s public operations.

Encapsulation and information hiding make it hard for the tester to check what happens inside an object during testing. Due to data abstraction there is no visibility into the internals of objects, so it is impossible to examine their state directly. Encapsulation implies the converse of visibility, which in the worst case means that objects can be more difficult, or even impossible, to test.

Encapsulation and information hiding raise the following main problems:

1. Problems in identifying which is the basic component to test, and how to select test

data for exercising it.

2. The state of an object could be inaccessible.

3. The private state is observable only through class methods (thus relying on the tested

software)


The possibility of defining classes which cannot be instantiated, e.g., abstract classes,

generic classes, and interfaces, introduces additional problems related to their non

straightforward testability.

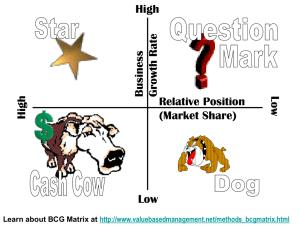

Figure 2.1 : An example of information hiding

The figure above illustrates an example of information hiding. The attribute status is not accessible, and the behavior of checkPressure is strongly dependent on it.
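
Since the figure itself is not reproduced here, the following Java sketch may help illustrate the situation it describes. Only the names status and checkPressure come from the text; the class name PressureMonitor, the threshold of 100 and the alarm logic are assumptions made purely for illustration.

```java
// Hypothetical class: only `status` and `checkPressure` are named in the text.
class PressureMonitor {
    private int status = 0;          // hidden state, deliberately without a getter

    // The result depends on the hidden `status`, not only on the argument.
    boolean checkPressure(int pressure) {
        if (status == 0 && pressure > 100) {
            status = 1;              // enter an alarm state that persists
        }
        return status == 0;
    }
}

class InformationHidingDemo {
    public static void main(String[] args) {
        PressureMonitor m = new PressureMonitor();
        System.out.println(m.checkPressure(50));   // true
        System.out.println(m.checkPressure(150));  // false, and status changes
        System.out.println(m.checkPressure(50));   // false: same input as the first call
    }
}
```

The first and third calls pass the same input but return different results, so an input/output oracle alone cannot judge checkPressure without knowing the hidden state.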

We find two approaches proposed in the literature for testing object oriented programs in the presence of encapsulation and information hiding.

Breaking encapsulation:

It can be achieved either by exploiting features of the language (e.g., the C++ friend

construct or the Ada child unit) or instrumenting the code. This approach allows for

inspection of private parts of a class. The drawback in this case is the intrusive character

of the approach. An example of this approach can be found in [55].
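
The text names the C++ friend construct and Ada child units; as a rough Java analogue (an assumption of ours, not a technique taken from the cited work), reflection can be used to inspect a private field from test code. The Counter class and the peekInt helper below are hypothetical.

```java
import java.lang.reflect.Field;

class Counter {                       // hypothetical class under test
    private int count = 0;            // private state, no accessor
    void increment() { count++; }
}

class BreakEncapsulationDemo {
    // Test-only helper: reads a private int field by name via reflection.
    static int peekInt(Object target, String fieldName) {
        try {
            Field f = target.getClass().getDeclaredField(fieldName);
            f.setAccessible(true);    // bypass the private modifier
            return f.getInt(target);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        Counter c = new Counter();
        c.increment();
        c.increment();
        System.out.println(peekInt(c, "count"));  // prints 2
    }
}
```

The sketch also shows the intrusiveness mentioned above: the test depends on the name and type of a private field, so it breaks whenever the implementation is refactored.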

Equivalence scenarios:

This technique is based on the definition of pairs of sequences of method invocations.

Such pairs are augmented with a tag specifying whether the two sequences are supposed

to leave the object in the same state or not. In this way it is possible to verify the

consistency of the object state by comparing the resulting states instead of directly inspecting the object's private parts. In the presence of algebraic specifications this kind of testing can be automated. The advantage of this approach is that it is less intrusive than the one based on breaking encapsulation. However, it is still intrusive, since the

analyst needs to augment the class under test with a method for comparing the state of its

instances. The main drawback of this technique is that it allows for functional testing


only. Moreover, the fault hypothesis is non-specific: different kinds of faults may lead to this kind of failure and many possible faults may not be caught by this kind of testing.

Equivalence scenarios have been introduced in [29]. Another application of this approach

can be found in [85]. In this case, when testing a class, states are identified by

partitioning data member domains. Then, interactions between methods and the state of the object are investigated. The goal is to identify faults that result in a transition to an undefined state, in reaching a wrong state, or in incorrectly remaining in a state.
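
As a minimal sketch of the equivalence-scenario idea, assuming a hypothetical IntStack class, the Java code below runs two method sequences on fresh objects and compares the resulting states against a tag. The sameStateAs method plays the role of the comparison method that the analyst has to add to the class under test; all names are illustrative.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

class IntStack {                                  // hypothetical class under test
    private final List<Integer> items = new ArrayList<>();
    void push(int x) { items.add(x); }
    int pop() { return items.remove(items.size() - 1); }
    // Added for testing: compares hidden state without exposing it.
    boolean sameStateAs(IntStack other) { return items.equals(other.items); }
}

class EquivalenceScenarioDemo {
    // Runs two method sequences on fresh objects and checks the result against the tag.
    static boolean check(Consumer<IntStack> first, Consumer<IntStack> second,
                         boolean expectedEquivalent) {
        IntStack a = new IntStack();
        IntStack b = new IntStack();
        first.accept(a);
        second.accept(b);
        return a.sameStateAs(b) == expectedEquivalent;
    }

    public static void main(String[] args) {
        // Tagged "equivalent": push(1); push(2); pop() versus push(1).
        System.out.println(check(s -> { s.push(1); s.push(2); s.pop(); },
                                 s -> s.push(1), true));
        // Tagged "not equivalent": push(1) versus push(2).
        System.out.println(check(s -> s.push(1), s -> s.push(2), false));
    }
}
```

Both checks print true for this implementation. A fault in pop, for example one that removed the wrong element, would make the first scenario fail without any test ever reading the private list.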

2.2. Polymorphism and Dynamic Binding:

The term polymorphism refers to the capability of a program entity to dynamically change its type at run-time. This introduces the possibility of defining polymorphic references (i.e., references that can be bound to objects of different types). In the languages we consider, the type of the referred object must belong to a type hierarchy. For example, in C++ or Java a reference to an object of type A can be assigned an object of any type B as long as B is either a subclass of A or A itself.

A feature closely related to polymorphism is dynamic binding. In traditional procedural programming languages, procedure calls are bound statically, i.e., the code associated with a call is known at link time. In the presence of polymorphism, the code invoked as a consequence of a message invocation on a reference depends on the dynamic type of the reference itself, which in general cannot be identified statically. In addition, a message sent to a reference can be parametric, and parameters can also be polymorphic references.

void foo(Shape polygon) {
    ...
    area = polygon.area();
    ...
}

Figure 2.2 : A simple example of polymorphism


The figure above shows a simple Java example of method invocation on a polymorphic object. In this example, it is impossible to say at compile time which implementation of the method area will actually be executed.
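
To make the dynamic binding concrete, the following self-contained sketch completes the fragment under stated assumptions: Shape and area follow Figure 2.2, while the subclasses Square and Rectangle and the return value of foo are hypothetical additions.

```java
abstract class Shape {                       // mirrors the Shape type of Figure 2.2
    abstract double area();
}

class Square extends Shape {                 // hypothetical subclass
    private final double side;
    Square(double side) { this.side = side; }
    @Override double area() { return side * side; }
}

class Rectangle extends Shape {              // hypothetical subclass
    private final double width, height;
    Rectangle(double width, double height) { this.width = width; this.height = height; }
    @Override double area() { return width * height; }
}

class DynamicBindingDemo {
    // The call site is fixed, but the code executed depends on the run-time type.
    static double foo(Shape polygon) {
        return polygon.area();
    }

    public static void main(String[] args) {
        System.out.println(foo(new Square(2.0)));          // 4.0, via Square.area
        System.out.println(foo(new Rectangle(2.0, 3.0)));  // 6.0, via Rectangle.area
    }
}
```

A test that calls foo with only one of the two subclasses covers every statement of foo and still leaves one binding of polygon.area() unexercised.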

Late binding can lead to messages being sent to the wrong object. Overriding changes the semantics of a method and can fool the clients of the class it belongs to. Since subclassing is not inherently sub-typing, dynamic binding on an erroneous hierarchical chain can produce undesirable results. Moreover, even when the hierarchy is well formed, errors are still possible, since the correctness of a redefined method is not guaranteed by the correctness of the super class method.

Polymorphism and late binding introduce undecidability concerns in program-based testing due to their dynamic nature. To gain confidence in code containing method calls on polymorphic entities, all the possible bindings should be exercised, but the exhaustive testing of all possible combinations of bindings may be impractical.

Figure 2.3 : An example of polymorphic invocation

The figure above illustrates a method invocation (represented in a message sending fashion), where both the sender and the receiver of the message are polymorphic entities. In addition, the message has two parameters, which are in turn polymorphic. In such a case, the number of possible combinations (type of the sender, type of the receiver and types of the parameters) grows combinatorially. Moreover, the different objects may behave differently depending on their state, and this leads to a further explosion of the number of test cases to be generated. In such a situation, a technique is needed that allows for selecting satisfactory test cases. The trade-off here is between the possible infeasibility of the approach and its incompleteness.
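
The size of this explosion can be estimated with a simple product: if each polymorphic entity can be bound to a given number of concrete types, the number of binding combinations is the product of those numbers. The sketch below works this out in Java; the type counts (3 sender types, 4 receiver types, 5 types for each parameter) are invented for illustration and do not come from the figure.

```java
class BindingCombinations {
    // Number of distinct bindings = product of the candidate type counts,
    // one count per polymorphic entity (sender, receiver, each parameter).
    static long count(int... candidateTypesPerEntity) {
        long total = 1;
        for (int n : candidateTypesPerEntity) {
            total *= n;
        }
        return total;
    }

    public static void main(String[] args) {
        // Assumed counts: 3 sender types, 4 receiver types, 5 types per parameter.
        System.out.println(count(3, 4, 5, 5));  // prints 300
    }
}
```

Even with these small numbers a single call site yields 300 combinations, before the state of the objects involved is taken into account.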


Further problems arise when classes to be tested belong to a library. Classes built to be

used in one specific system can be tested by restricting the set of possible combinations to the ones identifiable by analyzing the code. Re-usable classes need a higher degree of

polymorphic coverage, because such classes will be used in different and sometimes

unpredictable contexts.

The problems introduced by polymorphism can be summarized as follows:

- Program based testing in the presence of polymorphism may become infeasible, due to

the combinatorial number of cases to be tested.

- New definitions of coverage are required to cope with the testing of operations on a

polymorphic object.

- The creation of test sets to cover all possible calls to a polymorphic operation can not be

achieved with traditional approaches.

- The presence of polymorphic parameters introduces additional problems for the creation

of test cases.

2.3. Inheritance:

Inheritance is probably the most powerful feature provided by object oriented languages.

Classes in object-oriented systems are usually organized in a hierarchy originated by the

inheritance relationship. In the languages considered in this work, sub-classes can override inherited methods and add new methods not present in their super class.

Inheritance, when conceived as a mechanism for code reuse, raises the following issues:

Initialization problems: It is necessary to test whether a subclass specific constructor (i.e., the method in charge for initializing the class) is correctly invoking the constructor of the parent class.

Semantic mismatch: In the case of sub-typing inheritance we are considering, methods in the sub-classes may have a different semantics and thus they may need different test suites.

Opportunity for test reduction: The problem here is to know whether we can trust features of classes we inherit from, or we need to re-test derived classes from scratch. An optimistic view claims that only little or even no test is needed for classes derived from thoroughly tested classes. A deeper and more realistic approach argues that methods of derived classes need to be re-tested in the new context. An inherited method can behave incorrectly due to either the derived class having redefined members in an inappropriate way or the method itself invoking a method redefined in the subclass.

Re-use of test cases: We want to know if it is possible to use the same test cases generated for the base class during the testing of the derived class. If this is not possible, we should at least be able to find a way of partially reusing such test cases.

Inheritance correctness: We should test whether the inheritance is truly expressing an “is-a” relationship or we are just in the presence of code reuse. This issue is in some way related to the misleading interpretation of inheritance as a way of both reusing code and defining subtypes, and can lead to other problems.

Testing of abstract classes: Abstract classes can not be instantiated and thus can not be thoroughly tested. Only classes derived from abstract classes can be actually tested, but errors can be present also in the super (abstract) class.

Figure 2.4 : An example of inheritance in C++

The figure above shows an example of inheritance. Questions which may arise looking at the example are whether we should retest the method Circle::moveTo() and whether it is possible to reuse test sets created for the class Shape to test the class Circle.
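
The figure is in C++ and is not reproduced here, so the following Java sketch is only an assumed reconstruction of the situation. The names Shape, Circle and moveTo come from the text; the draw method, the drawn flag and the deliberately faulty override are hypothetical. It shows an inherited, unmodified moveTo failing in the subclass because it invokes a redefined method.

```java
class Shape {
    protected int x, y;
    protected boolean drawn = false;

    // Inherited unchanged by Circle, but it calls draw(), which Circle redefines.
    void moveTo(int newX, int newY) {
        x = newX;
        y = newY;
        draw();
    }

    void draw() { drawn = true; }

    boolean isDrawn() { return drawn; }
}

class Circle extends Shape {
    @Override
    void draw() {
        // Deliberate fault for illustration: the redefinition forgets to record the redraw.
    }
}

class InheritanceRetestDemo {
    // A test case written for Shape; it can be re-run on any subclass instance.
    static boolean moveToLeavesShapeDrawn(Shape s) {
        s.moveTo(3, 4);
        return s.isDrawn();
    }

    public static void main(String[] args) {
        System.out.println(moveToLeavesShapeDrawn(new Shape()));   // true
        System.out.println(moveToLeavesShapeDrawn(new Circle()));  // false: fault exposed
    }
}
```

In this sketch the test written for Shape is reused unchanged on Circle, and re-running it in the new context is what reveals the fault, even though the code of moveTo itself was never touched.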


Different approaches have been proposed in the literature for coping with the testing

problems introduced by inheritance. Basic approaches assume that inherited code needs

only minimal testing. As an example, Fiedler [32] states that methods provided by a

parent class (which has already been tested) do not require heavy testing. This viewpoint

is usually shared by practitioners, committed more to quick results than to rigorous

approaches.

Techniques addressing the problem from a more theoretical viewpoint have also been proposed. They can be divided into two main classes:

1. Approaches based on the flattening of classes

2. Approaches based on incremental testing

Flattening-based approaches perform testing of sub-classes as if every inherited feature had been defined in the sub-class itself (i.e., flattening the hierarchy tree). The advantage of these approaches is the possibility of reusing test cases previously defined for the super class(es) when testing subclasses (adding new test cases when needed due to the addition of new features to the subclass). Redundancy is the price to be paid when following such an approach.

All features are re-tested in any case without any further consideration. Examples of flattening-based approaches can be found in the work of Fletcher and Sajeev [33], and Smith and Robson [78]. Fletcher and Sajeev present a technique that, besides flattening the class structure, reuses specifications of the parent classes. Smith and Robson present a technique based on the flattening of subclasses that avoids testing “unaffected” methods, i.e., inherited methods that are not redefined and that neither invoke redefined methods nor use redefined attributes.

Incremental testing approaches are based on the idea that both re-testing all inherited

features and not re-testing any inherited features are wrong approaches for opposite

reasons. Only a subset of inherited features needs to be re-tested in the new context of the

subclass. The approaches differ in the way this subset is identified.

An algorithm for selecting the methods that need to be re-tested in subclasses is presented

by Cheatham and Mellinger [18]. A similar, but more rigorous approach is presented by

Harrold, McGregor, and Fitzpatrick [39]. The approach is based on the definition of a

testing history for each class under test, the construction of a call graph to represent

intra/inter class interactions, and the classification of the members of a derived class.

Class members are classified according to two criteria:

- The first criterion distinguishes added, redefined, and inherited members;

- The second criterion classifies class members according to their kind of relations with

other members belonging to the same class.

Based on the computed information, an algorithm is presented. The algorithm allows for

identifying, for each subclass, which members have to be re-tested, which test cases can

be re-used, and which attributes require new test cases.
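
The first classification criterion (added, redefined, inherited) can be approximated mechanically. The Java sketch below is a rough illustration using reflection and is not the algorithm of the cited authors: it only looks at methods, matches them by name (ignoring overloads), and considers only the direct parent class. Base, Derived and MemberClassifier are hypothetical names.

```java
import java.lang.reflect.Method;
import java.util.Map;
import java.util.TreeMap;

class Base {
    void a() {}
    void b() {}
}

class Derived extends Base {
    @Override void b() {}   // redefined
    void c() {}             // added
}

class MemberClassifier {
    // Classifies the methods of `derived` relative to its direct superclass.
    // Simplification: methods are matched by name only, overloads are ignored.
    static Map<String, String> classify(Class<?> derived) {
        Map<String, String> result = new TreeMap<>();
        for (Method m : derived.getSuperclass().getDeclaredMethods()) {
            if (!m.isSynthetic()) {
                result.put(m.getName(), "inherited");
            }
        }
        for (Method m : derived.getDeclaredMethods()) {
            if (m.isSynthetic()) {
                continue;
            }
            String kind = result.containsKey(m.getName()) ? "redefined" : "added";
            result.put(m.getName(), kind);
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(classify(Derived.class));  // {a=inherited, b=redefined, c=added}
    }
}
```

Under the incremental view, c would need new test cases, b would be re-tested, and a would only need re-testing if it interacts with b or c, which is what the second criterion and the call graph are meant to determine.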


2.4. Genericity:

Most traditional procedural programming languages do not support generic modules.

Supporting genericity means providing constructs allowing the programmer to define

abstract data types in a parametric way (i.e., to define ADTs as templates, which need to

be instantiated before being used). To instantiate such generic ADTs the programmer

needs to specify the parameters the class is referring to. Genericity is considered here because, although not strictly an object-oriented feature, it is present in most object-oriented languages. Moreover, genericity is a key concept for the construction of reusable

component libraries. For instance, both the C++ Standard Template Library and Booch

components for C++, Ada and Eiffel are strongly based on genericity.

Figure 2.5 : An example of genericity in C++

A generic class cannot be tested without being instantiated by specifying its parameters. In order to test a generic class it is necessary to choose one (or more) types to instantiate the generic class with and then to test the resulting instance(s).

The figure above shows a template class representing a vector defined in C++. The main problem in this case is related to the assumptions that can be made on the types used to instantiate the class when testing the method sort (int and complex in the example).

In detail, the following topics must be addressed when testing in the presence of generic classes: parametric classes must be instantiated to be tested, no assumptions can be made on the parameters that will be used for instantiation, and “trusted” classes are needed to be used as parameters. Such classes can be seen as a particular kind of “stubs”, and a strategy is needed which allows for testing reused generic components.
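
The points above can be sketched in Java generics rather than C++ templates (a substitution made here for consistency with the other examples). SortableVector, TrustedKey and the test helpers are hypothetical; the generic class is instantiated once with Integer and once with a deliberately trivial "trusted" parameter class.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class SortableVector<T extends Comparable<T>> {   // hypothetical generic class under test
    private final List<T> items = new ArrayList<>();
    void add(T item) { items.add(item); }
    void sort() { Collections.sort(items); }
    T get(int i) { return items.get(i); }
}

// A "trusted" parameter class: its ordering is simple enough to be assumed correct.
class TrustedKey implements Comparable<TrustedKey> {
    final int value;
    TrustedKey(int value) { this.value = value; }
    @Override public int compareTo(TrustedKey o) { return Integer.compare(value, o.value); }
}

class GenericityDemo {
    static boolean sortsIntegers() {
        SortableVector<Integer> v = new SortableVector<>();
        v.add(3); v.add(1); v.add(2);
        v.sort();
        return v.get(0) == 1 && v.get(1) == 2 && v.get(2) == 3;
    }

    static boolean sortsTrustedKeys() {
        SortableVector<TrustedKey> v = new SortableVector<>();
        v.add(new TrustedKey(9)); v.add(new TrustedKey(4));
        v.sort();
        return v.get(0).value == 4 && v.get(1).value == 9;
    }

    public static void main(String[] args) {
        System.out.println(sortsIntegers());     // true
        System.out.println(sortsTrustedKeys());  // true
    }
}
```

Neither instantiation says anything about a parameter type whose compareTo is inconsistent, which is why no assumption can be made about the parameters used by future clients.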

The problem of testing generic classes is addressed by Overbeck, who shows the necessity of instantiating generic classes to test them, and investigates the opportunities for reducing testing on subsequent instantiations of generic classes. Overbeck reaches the following conclusions:


Once instantiated, classes can be tested in the same way non-generic classes are; each

new instantiation needs to be tested only with respect to how the class acting as a

parameter is used; interactions between clients of the generic class and the class itself

have to be retested for each new instantiation.

2.5. Sub-Conclusion:

In this section, we described the most general issues in testing object oriented software. The problems were identified by various researchers, together with suggestions for solutions. Although we covered the most general problems, there are still other problems that have been identified without suggested solutions. Besides the techniques mentioned in this section, there exist other techniques that do not directly address the mentioned problems but are useful in solving supplementary problems. We describe those techniques in the next section.


3. Testing Techniques

This section addresses techniques discussed by several authors. The majority of the

research is centered on class testing, cluster testing, and successful test case

generation techniques. Smith and Robson discuss a framework for class testing called

FOOT (Framework for Object-Oriented Testing). This framework partitions testing into

various groups and guides the process with test strategies or by manual human

interaction. The testing concentrates on interroutine and intraroutine errors, using the

following guidance strategies:

1) Minimal - provide a small set of features that allow the framework to interact with the

object that is being tested.

2) Exhaustive - exercise all legal variations of routines for a given object (This may not

be practical for a large object).

3) Tester Guided - carry out random testing by a human being.

4) Inheritance - flatten the hierarchy and test only the routines not already tested in the super classes.

5) Memory - ensure languages with "garbage collection" properly dispose of heap objects.

6) Data Flow - monitor the construction and destruction of an object.

7) Identity - search for state transitions that give the same outcomes regardless of the operation order.

8) Set and Examine - set attributes to values, and observe the outcome as the object's state changes.

Smith and Robson

Smith and Robson agree with test cases being defined for each class, but they differ in

saying that the subclass should not test attributes from the parent class.

Harrold et al.

Harrold et al. present a technique that takes advantage of the hierarchical nature of classes, utilizing information from the super classes to test related groups of classes. They begin with testing base classes, since these classes are at the top of the inheritance hierarchy. Each routine (method, member function, etc.) is tested individually; then interactions between routines of the same class are verified to ensure they yield no errors. This is similar to Smith and Robson's intraroutine and interroutine testing. Harrold et al. then associate a testing history with each attribute they test. When another class inherits


from this base class, the testing history for the base class is passed along with the

attributes and routines. This scheme drives the test procedures, since it indicates which

cases are needed to test the newly derived class.

Harrold et al. feel that the additional testing is minimal, since the test cases can be easily derived from the parent class. The actual test cases have a traditional flavor, in that they use both specification-based (black box) and program-based (white box) techniques. The authors suggest following unit testing procedures such as stubbing nonexistent methods and providing drivers to exercise other class methods.

Obviously, the attributes without a test history are defined within the new class, so a test suite needs to be defined for them. Harrold et al. suggest that attributes from previous classes be retested in the subclass with respect to their interaction with attributes within the new class.

D'Souza and LeBlanc

D'Souza and LeBlanc present a testing technique based on examining pointers to objects, to see if any unwanted aliasing is occurring. They remark that most techniques verify the object's value, and cannot directly determine if an object has an alias. When looking for duplicate pointer references, the tester needs to be able to examine the name space to determine if attributes share a state. This technique can be used with routine or cluster testing without causing additional executions. This is because the technique "watches" the attributes as the system executes along a path chosen by the tester. When aliasing occurs the tester is notified and can determine at the point of execution if the additional reference should exist. These authors state and prove that this technique is direct, efficient, and as good as sequence testing when used in conjunction with routine and cluster testing.
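
The core of such an alias check, grouping references by object identity rather than by value, can be sketched in Java as follows. This is an illustration under our own assumptions and not the authors' tool: the AliasFinder class, the map of path names to objects and the example paths are hypothetical, and gathering the paths from a running system is left out.

```java
import java.util.ArrayList;
import java.util.IdentityHashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class AliasFinder {
    // Groups path names by the identity (not equals) of the object they reference
    // and returns only the groups with more than one path, i.e. the aliases.
    static List<List<String>> findAliases(Map<String, Object> pathToObject) {
        Map<Object, List<String>> byIdentity = new IdentityHashMap<>();
        for (Map.Entry<String, Object> e : pathToObject.entrySet()) {
            byIdentity.computeIfAbsent(e.getValue(), k -> new ArrayList<>()).add(e.getKey());
        }
        List<List<String>> aliases = new ArrayList<>();
        for (List<String> paths : byIdentity.values()) {
            if (paths.size() > 1) {
                aliases.add(paths);
            }
        }
        return aliases;
    }

    public static void main(String[] args) {
        StringBuilder shared = new StringBuilder("x");
        Map<String, Object> paths = new LinkedHashMap<>();
        paths.put("root.a", shared);
        paths.put("root.b", new StringBuilder("x"));   // equal content, different object
        paths.put("root.z.instvar", shared);           // second reference to `shared`
        System.out.println(findAliases(paths));        // [[root.a, root.z.instvar]]
    }
}
```

Note that root.b holds the same value as root.a but is not reported, which is the distinction between checking an object's value and checking for an alias.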

They also discuss a testing tool designed to allow a tester to examine attribute state. This

tool is illustrated in the Eiffel OO language, and provides a test class that contains an

instance variable to the class being tested. A table is created containing the path name

(root.z.instvar), an object ID, and the dynamic type of the object that is being referenced

with the path name. The table is searched for duplicate object ID entries. Any duplicates

indicate that the object is referenced by more than one pointer, and the entry is printed for

examination. D'Souza and LeBlanc concede that this technique was only tested on a

small system (less than 50 classes) and that a large amount of alias data was created even

for their small system. Before this technique can be used on a larger system, a pruning

algorithm needs to be added to limit the alias output.

Parrish et al.[7] present a technique

for testing OO systems that is based entirely on generating test cases from the class

implementation. They feel this is an advantage in the current software development

environment, because many systems are developed without a formal specification for

each class. Their technique also differs from traditional methods in that its generation of

cases is not random but systematic. The technique involves making every message a

node, and a directed edge between nodes represents the possibility that one routine might

be invoked followed by a second routine.


These authors discuss two specific formal specification methods that can be applied to

their technique: algebraic (axioms) and model-based (require and ensure clauses). They

acknowledge that there is a problem generating infeasible test case paths, and that the

generated cases are not guaranteed to be better than manual testing. They suggest

supplementing the generated cases with manual cases, especially when testing critical

classes.

McGregor and Korson

McGregor and Korson discuss a high-level view of testing OO systems within the entire

software development cycle. They begin by testing the development process and the

supporting documents. This involves verifying and updating the iterative development

process with each iteration to improve effectiveness. These authors discuss testing

analysis and design models, and identify three attributes common to all models that

require verification: correctness, completeness, and consistency.

A correct model is one whose set of analysis entities is semantically correct with respect

to the problem domain. A complete model is one whose entities represent the

knowledge being modeled well enough to satisfy the goal of the current

iteration. A consistent model is one whose relationships among model entities are well

designed and cohesive in nature.

The authors feel that test cases should be created to test these aspects during each

iteration, and that test reviews should be held to ensure that the cases exercise the model

at a detailed level. The actual design testing is done via instance diagrams that show the

network of resulting objects. McGregor and Korson state that the actual verification

should be done with class/unit, then cluster, followed by system testing. They also

discuss partitioning the test suite into three "flavors": functional, structural, and

interaction. This allows the tester to execute cases designed with a different emphasis,

and diversifies the testing to ensure a broader range of coverage. This partitioning

resembles the Smith and Robson method, but divides cases at a higher level of

abstraction.

Murphy et al.

Murphy et al. discuss verifying a telecommunications troubleshooting tool (TRACS)

using class testing, cluster testing, and system testing techniques. The TRACS system

was developed over several releases, and the authors augmented their testing philosophies

to improve coverage with each release. The initial release was tested solely with cluster

and system testing methods, since the authors felt that class testing was too costly and

time-consuming. The system test cases were designed using functional and nonfunctional

system requirements. Each test case consisted of a series of steps that a user would

probably select and an expected outcome for comparison. Clusters were developed by

grouping classes that had similar functions or that worked together to accomplish a

particular goal. The cluster testing was functional and dynamic in nature, and was

executed over the entire system. This meant that drivers were needed to simulate


incomplete classes. The least-dependent cluster was tested first, then this cluster was used

in testing the next cluster until the entire system had been built and tested. This method

provided an implicit integration test due to its layered approach. After the initial release

was completed, the authors noticed that some clusters were beginning to grow too large

and hence were not being tested adequately. Also, the iterative nature of the development

cycle had led to the elimination of code reviews. Defects were hiding within the layers of

cluster testing and class hierarchies, and the cost of fixing these problems increased

exponentially with time. These two problems resulted in a large number of defects that

were eventually discovered in system test. The authors decided that the cost of fixing

these problems outweighed the cost of executing class testing, so a class testing strategy

was incorporated along with an automated testing tool. The cluster testing was also

improved by creating a test plan based on the analysis and design for a cluster of classes.

The plan included:

1) any prerequisite cluster testing

2) a description of the functionality being tested

3) special configurations that should be tested

4) all classes contained in this cluster, and whether the class has been class tested or not

5) a set of cluster test cases including a description, execution steps, and expected outcome

Once the case is created, an automated test tool is used to execute the case and

record the outputs.
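The five items of the cluster test plan, together with the least-dependent-first ordering described earlier, can be sketched as a small record type. This is a minimal sketch under assumptions: the `ClusterTestPlan` class, its field names, and the three example clusters are hypothetical and are not taken from the TRACS project. The ordering uses the standard-library `graphlib.TopologicalSorter` (Python 3.9 or later).

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter


@dataclass
class ClusterTestPlan:
    name: str
    functionality: str                                   # item 2
    prerequisites: list = field(default_factory=list)    # item 1: cluster names
    configurations: list = field(default_factory=list)   # item 3
    classes: dict = field(default_factory=dict)          # item 4: class -> class tested?
    cases: list = field(default_factory=list)            # item 5

    def untested_classes(self):
        """Classes in this cluster that have not been class tested yet."""
        return sorted(c for c, tested in self.classes.items() if not tested)


def execution_order(plans):
    """Order clusters so that every prerequisite cluster is tested first."""
    graph = {p.name: set(p.prerequisites) for p in plans}
    return list(TopologicalSorter(graph).static_order())


# Hypothetical clusters, listed out of order on purpose.
PLANS = [
    ClusterTestPlan("ui", "operator screens", prerequisites=["diagnostics"]),
    ClusterTestPlan("storage", "fault records",
                    classes={"FaultLog": True, "FaultIndex": False}),
    ClusterTestPlan("diagnostics", "fault analysis", prerequisites=["storage"]),
]

if __name__ == "__main__":
    print(execution_order(PLANS))
```

Recording the class-tested flag per class (item 4) lets a tester see which classes enter cluster testing without prior class testing, which is the gap the authors found costly after the first release.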

The authors' class testing technique utilized three levels: compilation, walkthroughs, and

dynamic testing.

Compilation was used for static type-checking to remove class incompatibilities, and for

compiler-generated warnings such as unused attributes.

The walkthroughs were performed by peer groups using state-reporting methods to probe

the code. The code inspectors also looked for missing functionality, unnecessary code, and

violations of coding standards.

Dynamic testing was accomplished via the same testing tool used for cluster testing. A

suite of cases is sent to the tool, and the tool executes them and reports the results.

Inheritance is leveraged in that test cases defined for a superclass are inherited and

executed unless they are overridden by the current class. This is similar to the techniques

discussed by Smith and Robson and by Harold et al., except that Smith and Robson's

method does not execute tests that have been previously run. This testing technique

reduced the number of non-system test defects found at system test from 70 to one, and

reduced development time by 50 percent.
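The idea of inheriting superclass test cases unless the current class overrides them can be illustrated with Python's standard `unittest` module. This is a sketch only: the `Account` and `PremiumAccount` classes, the test names, and the `run_cases` helper are hypothetical, and the authors' own automated tool is not described in enough detail to reproduce here.

```python
import unittest


class Account:
    def __init__(self):
        self.balance = 0

    def deposit(self, amount):
        self.balance += amount

    def fee(self):
        return 1


class PremiumAccount(Account):
    def fee(self):  # overrides the superclass behaviour
        return 0


class AccountTest(unittest.TestCase):
    def make(self):
        return Account()

    def test_deposit(self):
        account = self.make()
        account.deposit(10)
        self.assertEqual(account.balance, 10)

    def test_fee(self):
        self.assertEqual(self.make().fee(), 1)


class PremiumAccountTest(AccountTest):
    def make(self):
        return PremiumAccount()

    # test_deposit is inherited and re-executed against PremiumAccount.
    def test_fee(self):  # overridden because fee() was overridden
        self.assertEqual(self.make().fee(), 0)


def run_cases(test_class):
    """Run all cases of test_class, inherited ones included."""
    suite = unittest.TestLoader().loadTestsFromTestCase(test_class)
    result = unittest.TestResult()
    suite.run(result)
    return result


if __name__ == "__main__":
    result = run_cases(PremiumAccountTest)
    print(result.testsRun, result.wasSuccessful())
```

In this sketch the inherited `test_deposit` is executed again for the subclass, which matches the behaviour described for Murphy et al. A scheme in the style of Smith and Robson would instead skip cases that have already been run.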

4. An overview of Testing Tools:

This section describes the essential characteristics of a good test tool. Our survey of test

tools concluded that there are no critical studies of testing tools. Most of the

commercial tools claim to be good in specific areas, but we could not verify their claims.

We found no tools designed specifically for object oriented software, although the existing

tools claim to eliminate the problems mentioned above.


5. Conclusion:

The purpose of this project, to investigate the general difficulties in testing object

oriented software and to study the proposed solutions, has been achieved successfully. We

looked through a number of research articles, and the problems were explained, followed

by examples and proposed solutions. We could not cover the whole set of problems, but we

hope that the report can be a good resource for overcoming the problems mentioned and

a good starting point for further work.

The project has helped us clarify the issues in testing object oriented software and has

thereby given us a better approach to overcoming them.

6. Suggestions for Extension:

Although the project has covered the major problems in testing object oriented software,

further work could address other problems that could not be identified here, or redesign

the techniques to solve the problems in less time and with fewer resources. Furthermore,

the survey of tools remains an open topic that needs additional research.

7. References:
