Evidence-Based Medicine

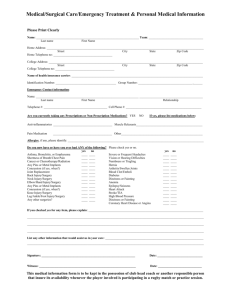

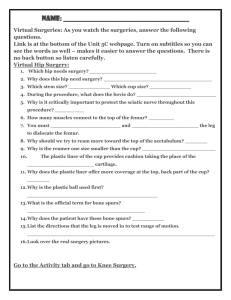

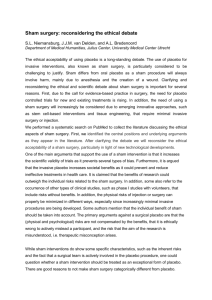

FPIN Journal Club
RANDOMIZED CONTROLLED TRIAL SPEAKER NOTES

Title: Surgery for persistent knee pain not so fast.
Journal Club Author: Anne Mounsey, MD, University of North Carolina
Journal Club Editor: Kate Rowland, MD, MS, Rush-Copley Medical Center
PURL Citation: Brown M M, Mounsey A. Surgery for persistent knee pain not so fast. J Fam Prac. 2014;63:534-535.
Original Article: Arthroscopic Partial Meniscectomy versus Sham Surgery for a Degenerative Meniscal Tear. N Engl J Med. 2013;369:2515-2524.

1. What question did the study attempt to answer?
Patients: aged 35-65 yrs with 3 months of knee pain and a degenerative medial meniscus tear but no arthritis.
Intervention: Arthroscopic partial medial meniscectomy with preservation of as much of the normal meniscus as possible.
Comparison: Sham meniscectomy using the same instruments and suction and taking the same length of time as the real surgery.
Outcome: Knee pain after exercise, measured on an 11-point (0-10) scale; knee pain on the Lysholm scale and the Western Ontario Meniscal Evaluation Tool (WOMET), both 100-point scales where lower scores indicate worse pain.
Did the study address an appropriate and clearly focused question? Yes / No

2. Determining Relevance:
a. Did the authors study a clinically meaningful and/or a patient-oriented outcome? Yes / No
b. Are the patients covered by the review similar to your population? Yes / No
Although the population is very specific.

3. Determining Validity:
Study design:
a. Was it a controlled trial? Yes / No
b. Were patients randomly allocated to comparison groups? Yes / No / Unclear
c. Were groups similar at the start of the trial? Yes / No / Unclear
d. Were patients and study personnel “blind” to treatment? Yes / No / Unclear
The surgeon performing the surgery was not blinded but did not participate in the trial or in follow-up after the surgery.
e. Aside from allocated treatment, were groups treated equally? Yes / No / Unclear
f.
Were all patients who entered the trial properly accounted for at its conclusion? Yes / No / Unclear
Follow-up was 100%!

4. What are the results?
a. What are the overall results of the study?
There were no significant differences between the groups in the change from baseline to 12 months in any of the 3 scores. Both groups improved. The between-group difference was -1.6 (95% confidence interval [CI] -7.2 to 4.0) for Lysholm, -2.5 (95% CI -9.2 to 4.1) for WOMET, and -0.1 (95% CI -0.9 to 0.7) for knee pain. There was no significant difference between the sham and real surgery groups in the number of patients who thought they had had sham surgery, 47% vs 38% (p=0.39). Five patients (7%) in the sham surgery group ended up having surgery.
b. Are the results statistically significant? Yes / No
No difference.
c. Are the results clinically significant? Yes / No
d. Were there other factors that might have affected the outcome? Yes / No
It could be that arthroscopic lavage, which both groups received, provides a benefit. However, a previous study that evaluated lavage as a treatment for knee pain showed no benefit.

5. Applying the evidence:
a. If the findings are valid and relevant, will this change your current practice? Yes / No
b. Is the change in practice something that can be done in a medical care setting of a family physician? Yes / No
Do not refer for arthroscopy.
c. Can the results be implemented? Yes / No
d. Are there any barriers to immediate implementation? Yes / No
e. How was this study funded?
Privately, no drug industry funding.

6. Teaching Points.
Power analysis. This was a negative study (i.e., the results showed no difference in the outcomes between the actual surgery and sham surgery groups). There may have been no difference between the 2 groups because there were too few participants. When this happens, it is called a type II error (i.e., the study shows no difference when there really is one). To assess the risk of type II error, we need to ensure the study had enough participants by looking at the power analysis.
A negative study with enough people (an “adequately powered trial”) is just a negative study, but a negative study that did not enroll enough people may have committed a type II error, and the results are at risk of being invalid. You need to refer back to the section in the paper usually headed “statistical analysis” to search for the power analysis. At the beginning of this section the authors should state, and did in this paper, what the minimal clinically meaningful difference is in the primary outcomes. In this study differences of over 11.5, 15.5 or 2.0 in the Lysholm, WOMET and knee pain scores, respectively, were considered clinically relevant.

A calculation is then done using the level of significance, the power and the effect size to estimate how many patients would need to be in each of the intervention and control groups to show a difference for each of the 3 outcomes (i.e., the sample size).

Level of significance: this is the probability cut-off (usually 0.05 or 5%). It is the risk we are willing to accept of concluding that there is a difference between the 2 treatments when no true difference exists.

Power: a power level of 80% is nearly always used and means there is an eight in ten chance of detecting a difference of the specified effect size (in this case, the 11.5, 15.5, or 2.0 on the knee pain scales above) if such a difference truly exists.

Effect size: this is a measure of the difference in the outcomes of the experimental and control groups. It’s a measure of the effectiveness of the treatment, which is calculated from the standard deviation and the absolute difference in outcomes. It is often derived from previous studies looking at similar outcomes, as it was in this case. The values for the clinically meaningful changes on the knee pain scales came from previous studies that asked patients how much their pain had to change before they felt it was meaningfully better. In other cases, where no previous information exists, pilot data or the investigators’ best guesses may have to suffice.
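The calculation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it uses the common normal-approximation formula for comparing two means with equal group sizes and a two-sided test, which is not necessarily the method the trial authors used. The function name `sample_size_per_group` and the standard deviation of 3.0 are hypothetical and do not come from the paper, so the result is not expected to reproduce the paper's figures.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(delta, sd, alpha=0.05, power=0.80):
    """Approximate patients needed per group to detect a mean difference.

    delta: minimal clinically meaningful difference between groups
    sd:    assumed standard deviation of the outcome (hypothetical here)
    alpha: level of significance (two-sided)
    power: chance of detecting a true difference of size delta
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    n = 2 * ((z_alpha + z_beta) * sd / delta) ** 2
    return ceil(n)  # round up to whole patients


# Knee pain threshold of 2.0 from the notes; sd = 3.0 is an assumed value.
print(sample_size_per_group(delta=2.0, sd=3.0))
```

Raising the power or shrinking the difference you want to detect increases the required sample size, which is why the smallest clinically meaningful difference has to be fixed before the trial starts.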
As this section states, for the study to have 80% power to detect a difference between the 2 groups, the investigators needed 40, 54 and 40 patients in each group for the 3 outcomes. The sample sizes for both groups were over 69, so we know that there were enough participants to show a difference if one had been present. Of course, if a difference had been found, it would not be so important for us to refer to the power analysis, as it would be inferred that there were enough participants to show the difference in outcomes.

Power calculations are usually done only for the primary outcome, which is why the results from secondary outcomes need to be interpreted with more caution, particularly if they show no difference in outcomes.

A type I error (a discussion for another PURLs journal club) happens when the study incorrectly detects a difference when one does not really exist.
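A complementary check on a negative study, offered here as an illustrative sketch and not as part of the original worksheet, is to compare each 95% confidence interval from section 4 with the clinically relevant difference from section 6. If the whole interval lies inside plus or minus that threshold, a clinically important difference in either direction is unlikely. The numbers below are the ones reported in these notes; the dictionary layout and the function name `ci_rules_out_meaningful_difference` are hypothetical.

```python
# 95% CIs for the between-group differences and the clinically relevant
# thresholds, both as reported in these notes.
results = {
    "Lysholm": {"ci": (-7.2, 4.0), "threshold": 11.5},
    "WOMET": {"ci": (-9.2, 4.1), "threshold": 15.5},
    "Knee pain after exercise": {"ci": (-0.9, 0.7), "threshold": 2.0},
}


def ci_rules_out_meaningful_difference(ci, threshold):
    """True if the whole interval lies strictly inside (-threshold, +threshold)."""
    lower, upper = ci
    return -threshold < lower and upper < threshold


for name, r in results.items():
    includes_zero = r["ci"][0] <= 0 <= r["ci"][1]
    ruled_out = ci_rules_out_meaningful_difference(r["ci"], r["threshold"])
    print(f"{name}: CI includes 0: {includes_zero}; "
          f"clinically relevant difference ruled out: {ruled_out}")
```

For all three outcomes the interval includes zero and stays within the threshold, which is consistent with the conclusion that the trial was large enough to detect a meaningful difference had one existed.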