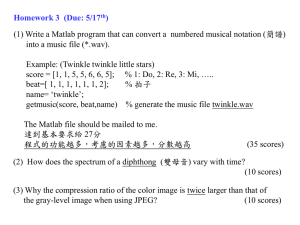

Inter-rater Reliability


Principles of Inter-rater Reliability (IRR)
Dr. Daniel R. Winder

NEW IRR for each NEW study

• Inter-rater reliability of judges' scores must be demonstrated for each new study, even if the study uses an instrument that has been validated in previous studies.
• WHY?
• Because the earlier correlation coefficients were established on the previous judges' scores, not the current judges' scores.
• Principle: IRR of judges' scores is sample dependent.
• Therefore, it is considered good practice to report IRR in all studies involving more than one rater.

Why IRR?

1) To show agreement of judges' scores.
• Why?
• Personnel ratings are expensive. If the judges agree on the ratings, only one judge has to rate each case, saving time, money, resources, etc.
• However, a good practice is to have judges overlap on some of their ratings (e.g., with 24 cases, one rates 1-8, the other rates 17-24, and both rate 9-16).

2) To show the validity of scores from a method, a rubric, a judge, or another instrument.
• Why?
• If the rubric is consistent for a sample of several different raters, then it may be generalizable to the population the raters come from. This is more of a generalizability question, for which a G-study or D-study is used (more later).

3) To identify problem areas of a rubric or scoring method.
• Where is the inconsistency coming from? (untrained raters, occasions, unexplained error, outliers, etc.)

Three types of IRR

1. Agreement/consensus of scores
2. Consistency of scores
3. Measurement of scores
• Notice that IRR is about scores, not the construct. (If we were focusing on the construct, we would be focusing on validity, not reliability.)

Agreement of Raters (i.e., a classification consensus)

• When data is nominal, use an agreement coefficient. For example, when classifying a type of behavior (schizophrenia) rather than a quantity of a behavior (dexterity of left hand).
• Example: Do the doctors agree on the type of irrational mental behavior, rather than on the degree of irrational mental behavior?
• Example: Grading themes in writing vs. grading the quality of writing skills.
• Example: Does this business qualify as an S corp, LLC, non-profit organization, etc. vs. is it profitable?
• Agreement coefficients can be used to measure the reliability of ordered Likert data (e.g., ordered ratings from 1 to 5), but there are more powerful methods than mere agreement for analyzing this type of ordinal-level data.

How to compute agreement

• Number of cases on which the raters agree divided by the total number of cases. Usually reported as a percentage.
– E.g., Rater 1's and Rater 2's scores agree on 60 of the 80 cases.
– 60/80 = .75
– Thus, 75% agreement.

Adjacent Agreement

• Used when looking at Likert-scale agreement with a large number of categories.
• You relax a bit and treat scores in adjacent categories as equivalent (if I gave a 4 and the other judge gave a 5, we generally agree, so we count this as agreement, dude).
• Why should this not be used on scales with five or fewer categories?
• Because almost all categories are adjacent.

Agreement Guidelines

• Scores are proportions between 0 and 1, usually converted to percentages.
• 0 = no agreement, 1 = perfect agreement
• Inter-rater agreement should be 70% or greater (Stemler, Steven E. (2004). A comparison of consensus, consistency, and measurement approaches to estimating interrater reliability. Practical Assessment, Research & Evaluation, 9(4). Retrieved March 22, 2010 from http://PAREonline.net/getvn.asp?v=9&n=4 .)

Agreement-Cohen’s Kappa (most common IRR stat)

• The big hoorah of this stat is that it takes into account the amount of agreement that would occur by chance alone. For example, with 5 equally used categories, there is a 20% chance that two ratings would agree by chance alone. So is it more than chance that my scores agree with another rater's? (Cohen, 1960, 1968).
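The agreement statistics described above (percent agreement, adjacent agreement, and Cohen's kappa) can be sketched in a few lines of Python. This is a minimal illustration, not a validated implementation: the function names and the example ratings are hypothetical, and the kappa shown is the unweighted version, computed as (observed agreement - chance agreement) / (1 - chance agreement), with chance agreement taken from each rater's category proportions.

```python
from collections import Counter


def exact_agreement(rater_a, rater_b):
    """Proportion of cases on which two raters give the identical rating."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of cases")
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


def adjacent_agreement(rater_a, rater_b, tolerance=1):
    """Proportion of cases whose numeric ratings differ by at most `tolerance`."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of cases")
    hits = sum(abs(a - b) <= tolerance for a, b in zip(rater_a, rater_b))
    return hits / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa: (p_o - p_e) / (1 - p_e)."""
    n = len(rater_a)
    p_o = exact_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: product of the raters' marginal proportions per category.
    p_e = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_e == 1:
        return float("nan")  # undefined when both raters use one identical category
    return (p_o - p_e) / (1 - p_e)


# Hypothetical nominal data: 80 cases, two categories, 60 agreements
# (mirrors the 60/80 example on the slide).
rater_1 = ["A"] * 40 + ["B"] * 20 + ["A"] * 10 + ["B"] * 10
rater_2 = ["A"] * 40 + ["B"] * 20 + ["B"] * 10 + ["A"] * 10

print(exact_agreement(rater_1, rater_2))         # 0.75
print(round(cohens_kappa(rater_1, rater_2), 3))  # 0.467

# Hypothetical ratings on a 1-7 scale for adjacent agreement.
scale_1 = [4, 5, 7, 2, 6]
scale_2 = [5, 5, 4, 3, 6]
print(exact_agreement(scale_1, scale_2))     # 0.4
print(adjacent_agreement(scale_1, scale_2))  # 0.8
```

With these made-up data, 75% raw agreement shrinks to a kappa of about 0.47 once chance agreement (about 53% here) is removed, which is the point of the statistic.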
Agreement-Cohen’s Kappa (most common IRR stat)

• Helpful if you are concerned that agreement is due to a lack of options in the rating categories (generally, fewer than 5 categories).

Agreement-Kendall’s W

• Use this when data is non-parametric (not normally distributed).
• Suppose, for instance, that a number of people have been asked to rank a list of political concerns, from most important to least important. Kendall's W can be calculated from these data. If the test statistic W is 1, then all the survey respondents have been unanimous, and each respondent has assigned the same order to the list of concerns. If W is 0, then there is no overall trend of agreement among the respondents, and their responses may be regarded as essentially random. Intermediate values of W indicate a greater or lesser degree of unanimity among the various responses.
• While tests using the standard Pearson correlation coefficient assume normally distributed values and compare two sequences of outcomes at a time, Kendall's W makes no assumptions regarding the nature of the probability distribution and can handle any number of distinct outcomes.
• http://en.wikipedia.org/wiki/Kendall's_W

Agreement-Guidelines

• 0 = agreement is no greater than what would occur by chance alone.
• What would happen if judges agreed less than chance alone would predict?
• (a negative value).
• Moderate values = 0.41–0.60, substantial values = 0.61 or greater (Landis and Koch, 1977).
• Report IRR agreement stats between all pairs of judges or, when there are several judges, report the minimum, mean, and maximum IRR stat.

Problems with Agreement

• What if one of the judges exhibits a leniency factor (e.g., he/she is consistently 2 points higher)? What would this systematic difference do to an agreement statistic?
• (It would misrepresent the ratings by reporting a low IRR when, in fact, the scores covary quite nicely and one score can predict the other quite well. We overcome this by using consistency rather than agreement/consensus stats.)
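The leniency problem above is easy to see with a small example. The sketch below uses hypothetical ratings in which one judge is always exactly 2 points higher than the other; the helper names are made up for illustration. Exact agreement comes out at zero even though the Pearson correlation is perfect, and removing the mean difference restores agreement.

```python
from math import sqrt


def exact_agreement(rater_a, rater_b):
    """Proportion of cases on which two raters give the identical rating."""
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


def pearson_r(x, y):
    """Pearson correlation computed from deviations about each rater's mean."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / sqrt(s_xx * s_yy)


# Hypothetical ratings: the lenient judge is always 2 points higher.
strict_judge = [3, 5, 4, 6, 2, 7, 5, 4]
lenient_judge = [score + 2 for score in strict_judge]

agreement = exact_agreement(strict_judge, lenient_judge)
consistency = pearson_r(strict_judge, lenient_judge)
print(agreement)    # 0.0
print(consistency)  # 1.0

# Subtracting the mean difference (the leniency factor) restores agreement.
shift = sum(lenient_judge) / len(lenient_judge) - sum(strict_judge) / len(strict_judge)
corrected = [score - shift for score in lenient_judge]
corrected_agreement = exact_agreement(strict_judge, corrected)
print(corrected_agreement)  # 1.0
```

Real judges are rarely off by a perfectly constant amount, so in practice the correlation would be below 1 and a mean adjustment would only partly restore agreement.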
Consistency Stats

• Most useful when using continuous data and the reported raw scores are not used as cut-off scores.
• However, if a raw summed score from the instrument, say 70 out of 80, is used as a cut-off score for diagnosing a disability or making another diagnosis, then you should be more concerned with agreement than consistency (i.e., go back to the previous slides on agreement and follow those guidelines/methods).
• Consistency is important when the main thing that a scale measures is rank order.
• That said, many report both agreement and consistency.
• Useful for scales with categories that measure unidimensional traits (one thing), where higher categories represent more of the trait.
• Useful when training judges is not practical or not important (because judges can develop their own interpretation of the severity or leniency of the rating scale as long as they are consistent with themselves; intra-rater reliability is more important than agreement).
• Some methods can handle multiple raters rather than only two at a time (Cronbach’s alpha).
• Could two scores agree but not be consistent? (generally, no)
• Could two scores be consistent but not agree? (yes)
• Could two raters' means and/or medians be significantly different but have high consistency? (yes; see Stemler 2004 for the rare exception)
• Consistency stats also identify whether the scores can be corrected for a lenient or severe judge.
• For example, if one judge consistently rates 2 points lower than all other judges and the consistency stat shows high consistency, then it is worth looking into covariance to decide whether you should correct for this judge's severity (i.e., if one judge’s scores are always 2 points lower, you may want to bump those scores up to compare with the other judges for agreement).

Consistency Stat-Pearson Correlation Coefficient

• Assumes data is normally distributed.
• Because data is continuous, scores can fall in between categories (1.5 vs. 1 or 2).
• Can easily be computed by hand (Glass & Hopkins, 1996).
• Limitation: can only be computed for one pair of judges and one item at a time.

Consistency Stat-Intraclass Correlation Coefficient (ICC)

• Quite common among OT.
• Similar to Pearson’s r but allows multiple raters.

Consistency Stat-Spearman Rank Coefficient

• Can be used for non-normally distributed data.
• Ratings can be correlated based on rank-order agreement (Crocker & Algina, 1986).
• However, all raters have to rate all cases, and only two sets of ordinal ratings can be compared at a time.
• Kendall's tau: another common correlation for use with two ordinal variables or an ordinal and an interval variable. Prior to computers, rho was preferred to tau due to computational ease. Now that computers have rendered calculation trivial, tau is generally preferred.

Consistency Stat-Cronbach’s Alpha

• Estimates the proportion of observed score variance (true score + error) that is due to true score variance. Thus a proportion between 0 and 1 ensues (Crocker & Algina, 1986).
• What would happen if there was little error?
• (close to 1, much agreement due to little error)
• What would happen if there was a lot of error?
• (close to 0, no agreement due to error)
• Used when multiple judges rate, so all scores can be analyzed together to check whether observed ratings are due to consistency or error.
• Limitation: every judge must rate every case.

Do not use consistency stat when…

• The data is nominal.
• It’s NOT okay for judges to agree to disagree on the rating number as long as they are consistent with themselves (e.g., the total raw score is used as a cut-off for a diagnosis or conclusion).
• Judges have severe differences in the variability of categories used (one uses many categories, 1-7, and another only uses 1-3). The lack of variance cannot be corrected by a mean adjustment.
• Most of the ratings fall into one or two categories. In this case, the correlation coefficient may be deflated due to lack of variability rather than lack of agreement.
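Cronbach's alpha, described earlier in this section, treats each judge as an "item" and compares the judges' individual variances with the variance of the summed scores. The sketch below assumes the usual formula, alpha = k/(k-1) * (1 - sum of judge variances / variance of total scores), with k judges who all rate every case. The function name and the ratings are hypothetical.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for a cases-by-judges table.

    ratings[i][j] is the score judge j gave to case i; every judge must
    rate every case, and at least two cases and two judges are needed.
    """
    n_judges = len(ratings[0])
    if n_judges < 2 or any(len(row) != n_judges for row in ratings):
        raise ValueError("need a complete table with at least two judges")
    # Sample variance of each judge's column of scores.
    judge_vars = [variance([row[j] for row in ratings]) for j in range(n_judges)]
    # Sample variance of each case's total score across judges.
    total_var = variance([sum(row) for row in ratings])
    return (n_judges / (n_judges - 1)) * (1 - sum(judge_vars) / total_var)


# Hypothetical ratings: 5 cases (rows) scored by 3 judges (columns).
ratings = [
    [2, 3, 2],
    [4, 5, 4],
    [6, 6, 5],
    [7, 8, 8],
    [9, 9, 8],
]

alpha = cronbach_alpha(ratings)
print(round(alpha, 3))  # 0.99
```

Here the judges order the cases almost identically, so very little of the total variance is left over as error and alpha lands close to 1.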
Consistency Stat Guidelines

• Values greater than .70 are acceptable (Barrett, 2001).

Measurement Stats

• Assume that information from all the judges, whether they agree or not, is valuable. Linacre suggests that training can bias results, but measurement stats don’t require so much training that it will bias results (2004).
• Use when different levels of the trait are represented by different levels of the unidimensional scale (for example, a 1 means that a person was rated lower than a 3).
• Use when multiple judges each rate some, but not all, cases or items.

Measurement Stats-Factor Analysis

• Determines the amount of shared variance in raters' scores that is attributable to a single factor. If the amount of shared variance from that factor is high (e.g., it explains 60% of the variance in the raters' scores), this adds evidence that the raters are consistently rating the same construct.
• Once this factor has been established, each case can receive a score based solely on how well its scores predict this single factor.

(Harman, 1967)

Measurement Stats-Generalizability Theory

• The goal of generalizability theory is to separate the variance components of raters' scores.
• These components can be based on persons, raters, occasions, or other components of a rating, as well as unexplained error.
• When you know where the variance is coming from, you can isolate these components to get a more stable comparison of agreement without the confounding component.
• Predictive formulas can be used to determine how many raters, occasions, and components should be considered to reduce error to an optimally low rate.
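The variance decomposition in the generalizability theory bullets above can be sketched for the simplest case: every person is rated once by every rater (a one-facet persons x raters design, with no occasion facet). This is a simplification of the designs the slides describe. The function names and ratings are hypothetical, and the variance components are estimated from the two-way ANOVA mean squares in the standard way. The second function uses those components in the kind of predictive formula the last bullet mentions: the relative generalizability coefficient projected for a different number of raters.

```python
def g_study(ratings):
    """Estimate variance components for a persons x raters table.

    ratings[p][r] is the score rater r gave to person p (complete table).
    Returns the person, rater, and residual (interaction + error) components.
    """
    n_p, n_r = len(ratings), len(ratings[0])
    grand = sum(map(sum, ratings)) / (n_p * n_r)
    p_means = [sum(row) / n_r for row in ratings]
    r_means = [sum(row[j] for row in ratings) / n_p for j in range(n_r)]

    # Mean squares from the two-way ANOVA without replication.
    ms_p = n_r * sum((m - grand) ** 2 for m in p_means) / (n_p - 1)
    ms_r = n_p * sum((m - grand) ** 2 for m in r_means) / (n_r - 1)
    ss_res = sum(
        (ratings[i][j] - p_means[i] - r_means[j] + grand) ** 2
        for i in range(n_p)
        for j in range(n_r)
    )
    ms_res = ss_res / ((n_p - 1) * (n_r - 1))

    # Negative estimates are set to zero, a common convention.
    return {
        "person": max((ms_p - ms_res) / n_r, 0.0),
        "rater": max((ms_r - ms_res) / n_p, 0.0),
        "residual": ms_res,
    }


def d_study(components, n_raters):
    """Relative G coefficient if scores were averaged over n_raters raters.

    Assumes the person component is greater than zero.
    """
    relative_error = components["residual"] / n_raters
    return components["person"] / (components["person"] + relative_error)


# Hypothetical ratings: 5 persons (rows) scored by 3 raters (columns).
ratings = [
    [2, 3, 2],
    [4, 5, 4],
    [6, 6, 5],
    [7, 8, 8],
    [9, 9, 8],
]

components = g_study(ratings)
print(components)
for n in (1, 3, 6):
    print(n, round(d_study(components, n), 3))
```

The loop at the end is the cost-benefit question in miniature: it shows how much the coefficient improves as raters are added, so you can judge whether the extra raters are worth it.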
(Shavelson and Webb, 1991)

Generalizability Theory: parsing out variance components

• Person
• Rater
• Occasion
• Person x Rater interaction
• Person x Occasion interaction
• Rater x Occasion interaction
• Error or unexplained variance

(Shavelson and Webb, 1991)

D study

• Using generalizability theory, we can determine how many components should be studied as well as how many raters, occasions, etc. are needed to get an optimal rating (a cost-benefit analysis: if I add three more judges, how much error do I eliminate, and is it worth it?).

www.courseoutcomes.com

• Visit www.courseoutcomes.com to download a free tool for Generalizability studies. This tool conducts a G-study and D-study for you and teaches the basic concepts of Generalizability Theory.

Measurement Stats-Many-facets Rasch Model

• This model allows for multiple raters who don’t have to rate the same items or persons.
• Essentially, it uses a logit scale to determine the difficulty of getting different scores from different judges. For example, how much harder is it to get an “18” from rater 1 vs. rater 5? Is an 18 from rater 5 like getting a 15 from rater 1?
• Lets you know which items are the most difficult and the least difficult.
• Should be used when it is assumed that a ratee’s ability may play into the accuracy of the score.
• Yields reliability statistics for the overall trait/scale.

(Linacre, 1994; Rasch, 1960/1980; Wright & Stone, 1979; Bond and Fox, 2001)

Measurement Stats-Many-facets Rasch Model

• Fit statistics tell you whether a judge is consistent with their own rating scale across persons and items.
• Measurement errors are estimated at each level of the trait rather than assumed to remain constant across the whole scale or trait. For example, a scale may have less error for persons in the middle of the scale than for those at the ends, so ratings would be more reliable for persons in the middle of the ability or trait range than for those at the ends.
(Linacre, 1994; Rasch, 1960/1980; Wright & Stone, 1979; Bond and Fox, 2001)

Measurement Stats-Problems

• You need specialized knowledge to run the programs, as these stats cannot be computed by hand. In addition, you need specialized knowledge to interpret the programs' output and make conceptual sense of it.
• Only works with ordinal data.

(Linacre, 1994; Rasch, 1960/1980; Wright & Stone, 1979; Bond and Fox, 2001)

The clearest IRR article

• Stemler, Steven E. (2004). A comparison of consensus, consistency, and measurement approaches to estimating interrater reliability. Practical Assessment, Research & Evaluation, 9(4). Retrieved March 22, 2010 from http://PAREonline.net/getvn.asp?v=9&n=4 .
• Much of this training follows Dr. Stemler’s format.

Bibliography

(I have only referenced in the slides the ones I directly used, but I have left all of these as resources.)

• Barrett, P. (2001, March). Assessing the reliability of rating data. Retrieved June 16, 2003, from http://www.liv.ac.uk/~pbarrett/rater.pdf
• Bock, R., Brennan, R. L., & Muraki, E. (2002). The information in multiple ratings. Applied Psychological Measurement, 26(4), 364-375.
• Bond, T., & Fox, C. (2001). Applying the Rasch model. Mahwah, NJ: Lawrence Erlbaum Associates.
• Burke, M. J., & Dunlap, W. P. (2002). Estimating interrater agreement with the average deviation index: A user's guide. Organizational Research Methods, 5(2), 159-172.
• Cohen, J. (1960). A coefficient for agreement for nominal scales. Educational and Psychological Measurement, 20, 37-46.
• Cohen, J. (1968). Weighted kappa: Nominal scale agreement with provision for scale disagreement or partial credit. Psychological Bulletin, 70, 213-220.
• Cohen, J., Cohen, P., West, S. G., & Aiken, L. S. (2003). Applied multiple regression/correlation analysis for the behavioral sciences (Third ed.). Mahwah, NJ: Lawrence Erlbaum Associates.
• Crocker, L., & Algina, J. (1986). Introduction to classical and modern test theory. Orlando, FL: Harcourt Brace Jovanovich.
• Glass, G. V., & Hopkins, K. H. (1996). Statistical methods in education and psychology. Boston: Allyn and Bacon.
• Harman, H. H. (1967). Modern factor analysis. Chicago: University of Chicago Press.
• Hayes, J. R., & Hatch, J. A. (1999). Issues in measuring reliability: Correlation versus percentage of agreement. Written Communication, 16(3), 354-367.
• Hopkins, K. H. (1998). Educational and psychological measurement and evaluation (Eighth ed.). Boston: Allyn and Bacon.
• Landis, J. R., & Koch, G. G. (1977). The measurement of observer agreement for categorical data. Biometrics, 33, 159-174.
• LeBreton, J. M., Burgess, J. R., Kaiser, R. B., Atchley, E., & James, L. R. (2003). The restriction of variance hypothesis and interrater reliability and agreement: Are ratings from multiple sources really dissimilar? Organizational Research Methods, 6(1), 80-128.
• Linacre, J. M. (1988). FACETS: a computer program for many-facet Rasch measurement (Version 3.3.0). Chicago: MESA Press.
• Linacre, J. M. (1994). Many-facet Rasch measurement. Chicago: MESA Press.
• Linacre, J. M. (2002). Judge ratings with forced agreement. Rasch Measurement Transactions, 16(1), 857-858.
• Linacre, J. M., Englehard, G., Tatem, D. S., & Myford, C. M. (1994). Measurement with judges: many-faceted conjoint measurement. International Journal of Educational Research, 21(4), 569-577.
• Mertler, C. A. (2001). Designing scoring rubrics for your classroom. Practical Assessment, Research and Evaluation, 7(25).
• Moskal, B. M., & Leydens, J. A. (2000). Scoring rubric development: Validity and reliability. Practical Assessment, Research and Evaluation, 7(10).
• Myford, C. M., & Cline, F. (2002, April 1-5). Looking for patterns in disagreements: A Facets analysis of human raters' and e-raters' scores on essays written for the Graduate Management Admission Test (GMAT). Paper presented at the Annual meeting of the American Educational Research Association, New Orleans, LA.
• Rasch, G. (1960/1980). Probabilistic models for some intelligence and attainment tests (Expanded ed.). Chicago: University of Chicago Press.
• Shavelson, R. J., & Webb, N. M. (1991). Generalizability theory: A primer. Newbury Park, CA: Sage Publications.
• Stemler, S. E. (2001). An overview of content analysis. Practical Assessment, Research and Evaluation, 7(17). Available online: http://PAREonline.net/getvn.asp?v=7&n=17.
• Stemler, S. E., & Bebell, D. (1999, April). An empirical approach to understanding and analyzing the mission statements of selected educational institutions. Paper presented at the New England Educational Research Organization (NEERO), Portsmouth, NH.
• Tierney, R., & Simon, M. (2004). What's still wrong with rubrics: Focusing on consistency of performance criteria across scale levels. Practical Assessment, Research & Evaluation, 9(2). Retrieved February 16, 2004 from http://PAREonline.net/getvn.asp?v=9&n=2.
• Uebersax, J. (1987). Diversity of decision-making models and the measurement of interrater agreement. Psychological Bulletin, 101(1), 140-146.
• Uebersax, J. (2002). Statistical methods for rater agreement. Retrieved August 9, 2002, from http://ourworld.compuserve.com/homepages/jsuebersax/agree.htm
• Winer, B. J. (1962). Statistical principles in experimental design. New York: McGraw-Hill.
• Wright, B. D., & Stone, M. H. (1979). Best test design. Chicago: MESA.