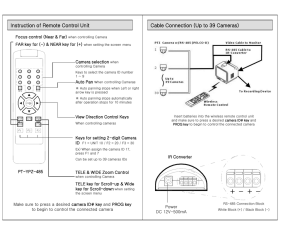

Digital Camera and Computer Vision Laboratory


Advanced Computer Vision

Chapter 7

STRUCTURE FROM MOTION

Presented by

Prof. Chiou-Shann Fuh & Pradnya Borade

0988472377

r99922145@ntu.edu.tw

Structure from Motion

1

Today’s Lecture

Structure from Motion

• What is structure from motion?

• Triangulation and pose

• Two-frame methods


What Is Structure from Motion?

1. Study of visual perception.

2. Process of finding the three-dimensional structure of an object by analyzing local motion signals over time.

3. A method for creating 3D models from 2D pictures of an object.

Example

Picture 1

Picture 2


Example (cont).

3D model created from the two images


Example

Figure 7.1: Structure from motion systems: orthographic factorization

Example

Figure 7.2: line matching


Example


Figure 7.3: (a-e) incremental structure from motion


Example

Figure 7.4: 3D reconstruction of Trafalgar Square


Example

Figure 7.5: 3D reconstruction of Great Wall of China.


Example

Figure 7.6: 3D reconstruction of the Old Town Square, Prague


7.1 Triangulation

• The problem of estimating a point's 3D location when it is seen from multiple cameras is known as triangulation.

• It is the converse of the pose estimation problem.

• Given projection matrices, 3D points can be computed from their measured image positions in two or more views.

Triangulation (cont).

• Find the 3D point p that lies closest to all of the 3D rays corresponding to the 2D matching feature locations $\{x_j\}$ observed by cameras $\{P_j = K_j [R_j | t_j]\}$, where $t_j = -R_j c_j$ and $c_j$ is the jth camera center.

Triangulation (cont).

Figure 7.7: 3D point triangulation by finding the point p that lies nearest to all of the optical rays

Triangulation (cont).

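• The rays originate at $c_j$ in a direction $\hat{v}_j = \mathcal{N}(R_j^{-1} K_j^{-1} x_j)$, where $\mathcal{N}$ normalizes a vector to unit length.

• The nearest point to p on this ray, which is denoted as $q_j$, minimizes the distance

$$\| c_j + d_j \hat{v}_j - p \|^2,$$

which has a minimum at $d_j = \hat{v}_j \cdot (p - c_j)$. Hence,

$$q_j = c_j + (\hat{v}_j \hat{v}_j^T)(p - c_j) = c_j + (p - c_j)_{\parallel}.$$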

Triangulation (cont).

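• The squared distance between p and $q_j$ is

$$r_j^2 = \| (I - \hat{v}_j \hat{v}_j^T)(p - c_j) \|^2 = \| (p - c_j)_{\perp} \|^2,$$

where $\hat{v}_j$ is the unit direction of the jth ray.

• The optimal value for p, which lies closest to all of the rays, can be computed as a regular least squares problem by summing over all the $r_j^2$ and finding the optimal value of p,

$$p = \Big[ \sum_j (I - \hat{v}_j \hat{v}_j^T) \Big]^{-1} \Big[ \sum_j (I - \hat{v}_j \hat{v}_j^T) c_j \Big].$$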
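The least squares step can be sketched in NumPy as follows. This is a minimal illustration, not the lecture's own code: the function name `triangulate_rays` and the example cameras are hypothetical, and the sketch assumes the ray directions have already been computed from the camera matrices. It accumulates the projector $I - \hat{v}_j \hat{v}_j^T$ for each ray and solves the resulting 3 × 3 linear system.

```python
import numpy as np

def triangulate_rays(centers, directions):
    """Return the 3D point minimizing the summed squared distance to all rays.

    centers: iterable of camera centers c_j (3-vectors).
    directions: iterable of ray directions v_j (3-vectors, any length).
    """
    A = np.zeros((3, 3))
    b = np.zeros(3)
    for c, v in zip(centers, directions):
        v = v / np.linalg.norm(v)           # unit ray direction
        M = np.eye(3) - np.outer(v, v)      # projects onto the plane perpendicular to the ray
        A += M
        b += M @ c
    # Fails (singular A) if all rays are parallel.
    return np.linalg.solve(A, b)

# Hypothetical noise-free example: three cameras looking at one point.
p_true = np.array([1.0, 2.0, 10.0])
centers = [np.array([0.0, 0.0, 0.0]),
           np.array([1.0, 0.0, 0.0]),
           np.array([0.0, 1.0, 0.0])]
directions = [p_true - c for c in centers]
p_est = triangulate_rays(centers, directions)
```

With noisy rays the same call returns the point closest to all rays in the least squares sense, rather than an exact intersection.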


Triangulation (cont).

• If we use homogeneous coordinates p = (X, Y, Z, W), the resulting set of equations is homogeneous and is best solved using a singular value decomposition (SVD).

• If we set W = 1, we can use regular linear least squares, but the resulting system may be singular or poorly conditioned (e.g., when all of the viewing rays are parallel, as occurs for points far away from the cameras).

Triangulation (cont).

For this reason, it is generally preferable to parameterize 3D points using homogeneous coordinates, especially if we know that there are likely to be points at greatly varying distances from the cameras.
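A homogeneous solve of this kind might look like the sketch below. The slides do not spell out the linear system, so this assumes the standard formulation in which each observation (x, y) under a 3 × 4 camera matrix P contributes the two rows x·P[2] − P[0] and y·P[2] − P[1]. The function name `triangulate_dlt` and the example cameras are hypothetical.

```python
import numpy as np

def triangulate_dlt(projections, points_2d):
    """Homogeneous triangulation: returns p = (X, Y, Z, W) up to scale."""
    rows = []
    for P, (x, y) in zip(projections, points_2d):
        rows.append(x * P[2] - P[0])   # x * (third row) - first row
        rows.append(y * P[2] - P[1])   # y * (third row) - second row
    A = np.vstack(rows)
    # The solution is the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    return Vt[-1]

# Hypothetical two-camera setup, P_j = K [R_j | t_j] with t_j = -R_j c_j.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
c1 = np.array([1.0, 0.0, 0.0])
P0 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P1 = K @ np.hstack([np.eye(3), -c1.reshape(3, 1)])

p_true = np.array([0.5, -0.2, 8.0, 1.0])
obs = []
for P in (P0, P1):
    x = P @ p_true
    obs.append(x[:2] / x[2])

p_h = triangulate_dlt([P0, P1], obs)
p_est = p_h / p_h[3]   # only valid when W is not close to zero
```

Keeping the result in homogeneous form (skipping the final division) is what allows points at or near infinity to be represented.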


7.2 Two-Frame Structure from Motion

• So far in 3D reconstruction, we have always assumed that either the 3D point positions or the 3D camera poses are known in advance. Structure from motion drops this assumption and recovers both simultaneously from image correspondences.

Two-Frame Structure from Motion (cont).

Figure 7.8: Epipolar geometry: the vectors $t = c_1 - c_0$, $p - c_0$, and $p - c_1$ are coplanar and define the basic epipolar constraint expressed in terms of the pixel measurements $x_0$ and $x_1$.

Two-Frame Structure from Motion (cont).

• Figure 7.8 shows a 3D point p being viewed from two cameras whose relative position can be encoded by a rotation R and a translation t.

• Since we do not know anything about the camera positions, without loss of generality we can set the first camera at the origin, $c_0 = 0$, and at a canonical orientation, $R_0 = I$.

Two-Frame Structure from Motion (cont).

• The observed location of point p in the first image, $p_0 = d_0 \hat{x}_0$, is mapped into the second image by the transformation

$$d_1 \hat{x}_1 = p_1 = R p_0 + t = R (d_0 \hat{x}_0) + t,$$

where $\hat{x}_j = K_j^{-1} x_j$ are the ray direction vectors.

Two-Frame Structure from Motion (cont).

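• Taking the cross product of both sides with t in order to annihilate it on the right-hand side yields (with $d_j$ the depth along ray $\hat{x}_j$)

$$d_1 [t]_\times \hat{x}_1 = d_0 [t]_\times R \hat{x}_0.$$

• Taking the dot product of both sides with $\hat{x}_1$ yields

$$d_0 \hat{x}_1^T ([t]_\times R) \hat{x}_0 = d_1 \hat{x}_1^T [t]_\times \hat{x}_1 = 0.$$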

Two-Frame Structure from Motion (cont).

• The right-hand side is a triple product with two identical entries, so it vanishes.

• We therefore arrive at the basic epipolar constraint

$$\hat{x}_1^T E \hat{x}_0 = 0, \qquad E = [t]_\times R,$$

where E is called the essential matrix.
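A quick numerical check of the epipolar constraint, written as a sketch under assumed values: the rotation angle, translation, and 3D point below are made up for illustration, and `skew` is a hypothetical helper that builds the cross-product matrix $[t]_\times$.

```python
import numpy as np

def skew(t):
    """Cross-product matrix [t]_x, so that skew(t) @ v == np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

# Assumed relative motion: small rotation about the y axis plus a translation.
theta = 0.1
R = np.array([[np.cos(theta), 0.0, np.sin(theta)],
              [0.0, 1.0, 0.0],
              [-np.sin(theta), 0.0, np.cos(theta)]])
t = np.array([1.0, 0.2, 0.1])
E = skew(t) @ R                      # essential matrix E = [t]_x R

# A 3D point in the first camera's frame and its position in the second.
p0 = np.array([0.3, -0.4, 5.0])
p1 = R @ p0 + t

# Ray directions, scaled so that the third component is 1.
x0_hat = p0 / p0[2]
x1_hat = p1 / p1[2]

residual = x1_hat @ E @ x0_hat       # should vanish up to round-off
epipolar_line_1 = E @ x0_hat         # line in image 1 that x1_hat lies on
```

The residual is zero only up to floating-point error; with real, noisy correspondences it becomes the quantity that estimation methods try to minimize.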


Two-Frame Structure from Motion (cont).

• The essential matrix E maps a point $\hat{x}_0$ in image 0 into a line $l_1 = E \hat{x}_0$ in image 1, since $\hat{x}_1^T l_1 = 0$.

• All such lines must pass through the second epipole $e_1$, which is therefore defined as the left singular vector of E with a 0 singular value or, equivalently, the projection of the vector t into image 1.

Two-Frame Structure from Motion (cont).

• The transpose of these relationships gives us the epipolar line in the first image as $l_0 = E^T \hat{x}_1$ and $e_0$ as the zero-value right singular vector of E.

Two-Frame Structure from Motion (cont).

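Given the relationship $\hat{x}_1^T E \hat{x}_0 = 0$, how can we use it to recover the camera motion encoded in the essential matrix E?

If we have N corresponding measurements $\{(x_{i0}, x_{i1})\}$, we can form N homogeneous equations in the nine elements of $E = \{e_{00} \ldots e_{22}\}$:

$$x_{i0} x_{i1} e_{00} + y_{i0} x_{i1} e_{01} + x_{i1} e_{02} + x_{i0} y_{i1} e_{10} + y_{i0} y_{i1} e_{11} + y_{i1} e_{12} + x_{i0} e_{20} + y_{i0} e_{21} + e_{22} = 0.$$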

Two-Frame Structure from Motion (cont).

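In compact form,

$$[\hat{x}_{i1} \hat{x}_{i0}^T] \otimes E = Z_i \otimes E = z_i \cdot f = 0,$$

where $\otimes$ denotes element-wise multiplication and summation of matrix elements, and $z_i$ and f are the vector forms of the $Z_i = \hat{x}_{i1} \hat{x}_{i0}^T$ and E matrices.

Given N ≥ 8 such equations, we can compute an estimate (up to scale) for the entries of E using a singular value decomposition (SVD).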
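The following sketch shows one way this linear estimate could be coded. It assumes calibrated ray coordinates as input, stacks one row per correspondence (the row-major flattening of the outer product of the two homogeneous points), and takes the null vector from the SVD. The final projection onto singular values (1, 1, 0) is a common extra step that the slide does not mention. The function name `eight_point` and the synthetic scene are hypothetical.

```python
import numpy as np

def eight_point(x0, x1):
    """Estimate E (up to scale and sign) from N >= 8 correspondences.

    x0, x1: (N, 2) arrays of normalized image coordinates in images 0 and 1.
    """
    n = len(x0)
    h0 = np.hstack([x0, np.ones((n, 1))])
    h1 = np.hstack([x1, np.ones((n, 1))])
    # Row i is z_i, the flattened outer product x1_i x0_i^T.
    A = np.einsum('ni,nj->nij', h1, h0).reshape(n, 9)
    _, _, Vt = np.linalg.svd(A)
    E = Vt[-1].reshape(3, 3)          # null vector f reshaped into E
    # Extra step (assumption): enforce two equal singular values and one zero.
    U, _, Vt = np.linalg.svd(E)
    return U @ np.diag([1.0, 1.0, 0.0]) @ Vt

# Synthetic noise-free scene (assumed values).
rng = np.random.default_rng(0)
pts = rng.uniform(-1.0, 1.0, size=(20, 3)) + np.array([0.0, 0.0, 5.0])
theta = 0.2
R = np.array([[np.cos(theta), 0.0, np.sin(theta)],
              [0.0, 1.0, 0.0],
              [-np.sin(theta), 0.0, np.cos(theta)]])
t = np.array([1.0, 0.3, 0.1])
pts1 = pts @ R.T + t
x0 = pts[:, :2] / pts[:, 2:]
x1 = pts1[:, :2] / pts1[:, 2:]

E_est = eight_point(x0, x1)
```

On noisy data the raw coordinates should be rescaled before building the matrix, as the following slides discuss.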


Two-Frame Structure from Motion (cont).

• In the presence of noisy measurements, how close is this estimate to being statistically optimal?

• In the matrix of equation coefficients, some entries are products of image measurements, such as $x_{i0} y_{i1}$, while others are direct image measurements (or even the identity).

Two-Frame Structure from Motion (cont).

• If the measurements have noise, the terms that are products of measurements have their noise amplified by the other element in the product, which can lead to poor scaling.

• To deal with this, the point coordinates should be translated and scaled so that their centroid lies at the origin and their variance is unity, i.e.,

$$\tilde{x}_i = s (x_i - \mu_x), \qquad \tilde{y}_i = s (y_i - \mu_y)$$

Two-Frame Structure from Motion (cont).

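such that

$$\sum_i \tilde{x}_i = \sum_i \tilde{y}_i = 0 \quad \text{and} \quad \sum_i \tilde{x}_i^2 + \sum_i \tilde{y}_i^2 = 2n,$$

where n is the number of points.

Once the essential matrix $\tilde{E}$ has been computed from the transformed coordinates $\{(T_0 \hat{x}_{i0}, T_1 \hat{x}_{i1})\}$, where $T_j$ is the 3 × 3 translation-and-scaling matrix applied to image j, the original essential matrix E can be recovered as

$$E = T_1^T \tilde{E} T_0.$$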
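A minimal sketch of this normalization, assuming a single isotropic scale per image and the condition that the summed squared coordinates equal 2n. The helper names `normalizing_transform` and `denormalize` and the sample points are hypothetical.

```python
import numpy as np

def normalizing_transform(pts):
    """3x3 matrix T that moves the centroid to the origin and rescales the
    points so that sum(x^2) + sum(y^2) == 2 * n."""
    mu = pts.mean(axis=0)
    centered = pts - mu
    s = np.sqrt(2.0 * len(pts) / np.sum(centered ** 2))
    return np.array([[s, 0.0, -s * mu[0]],
                     [0.0, s, -s * mu[1]],
                     [0.0, 0.0, 1.0]])

def denormalize(E_tilde, T0, T1):
    """Undo the normalization: x1^T (T1^T E_tilde T0) x0 == (T1 x1)^T E_tilde (T0 x0)."""
    return T1.T @ E_tilde @ T0

# Assumed pixel measurements in one image.
pts = np.array([[100.0, 200.0],
                [150.0, 260.0],
                [400.0, 80.0],
                [320.0, 310.0]])
T = normalizing_transform(pts)
h = np.hstack([pts, np.ones((len(pts), 1))])
pts_n = (T @ h.T).T[:, :2]            # normalized coordinates
```

In a full pipeline, `normalizing_transform` would be applied separately to each image's points before the linear estimate, and `denormalize` afterwards.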


Two-Frame Structure from Motion (cont).

• When the essential matrix has been recovered, the direction of the translation vector t can be estimated.

• The absolute distance between the two cameras can never be recovered from pure image measurements alone.

• Knowledge about absolute camera or point positions or distances (called ground control points in photogrammetry) is required to establish the final scale, position, and orientation.

Two-Frame Structure from Motion (cont).

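• To estimate the direction $\hat{t}$, observe that under ideal noise-free conditions, the essential matrix E is singular, i.e., $\hat{t}^T E = 0$.

• This singularity shows up as a singular value of 0 when an SVD of E is performed,

$$E = [\hat{t}]_\times R = U \Sigma V^T = \begin{bmatrix} u_0 & u_1 & \hat{t} \end{bmatrix} \begin{bmatrix} 1 & & \\ & 1 & \\ & & 0 \end{bmatrix} \begin{bmatrix} v_0^T \\ v_1^T \\ v_2^T \end{bmatrix}.$$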
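As a sketch, the translation direction can be read off as the left singular vector associated with the smallest singular value. The function name `translation_direction` and the test motion are assumptions; the sign of the result is ambiguous, and recovering the rotation is not covered here.

```python
import numpy as np

def skew(t):
    """Cross-product matrix [t]_x."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

def translation_direction(E):
    """Unit vector t_hat with t_hat^T E = 0 (sign is ambiguous)."""
    U, _, _ = np.linalg.svd(E)
    return U[:, 2]    # NumPy sorts singular values in descending order

# Assumed motion used to build a test essential matrix.
theta = 0.3
R = np.array([[np.cos(theta), -np.sin(theta), 0.0],
              [np.sin(theta), np.cos(theta), 0.0],
              [0.0, 0.0, 1.0]])
t = np.array([0.5, -1.0, 2.0])
E = skew(t) @ R

t_hat = translation_direction(E)
```

Only the direction is recovered: the length of t is lost, which is consistent with the scale ambiguity noted earlier.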


Pure Translation

Figure 7.9: Pure translational camera motion results in visual motion where all the points move towards (or away from) a common focus of expansion (FOE) e. They therefore satisfy the triple product condition $(x_0, x_1, e) = e \cdot (x_0 \times x_1) = 0$.

Pure Translation (cont).

• Known rotation:

The resulting essential matrix E is (in the noise-free case) skew symmetric and can be estimated more directly by setting $e_{ij} = -e_{ji}$ and $e_{ii} = 0$.

Two points with parallax now suffice to estimate the FOE.

Pure Translation (cont).

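• A more direct derivation of the FOE estimate can be obtained by minimizing the triple product

$$\sum_i (x_{i0}, x_{i1}, e)^2 = \sum_i ((x_{i0} \times x_{i1}) \cdot e)^2,$$

which is equivalent to finding the null space for the set of equations

$$(y_{i0} - y_{i1}) e_0 + (x_{i1} - x_{i0}) e_1 + (x_{i0} y_{i1} - y_{i0} x_{i1}) e_2 = 0.$$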
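The null-space computation can be sketched as below, assuming the rotation has already been removed so that the motion is purely translational. Each row of the system is the cross product of a pair of matched homogeneous points. The function name `estimate_foe` and the synthetic scene are hypothetical.

```python
import numpy as np

def estimate_foe(x0, x1):
    """Estimate the focus of expansion e (homogeneous, unit norm, sign ambiguous).

    x0, x1: (N, 2) arrays of matched points under pure translation, N >= 2.
    """
    h0 = np.hstack([x0, np.ones((len(x0), 1))])
    h1 = np.hstack([x1, np.ones((len(x1), 1))])
    A = np.cross(h0, h1)               # row i is x_i0 x x_i1
    _, _, Vt = np.linalg.svd(A)
    return Vt[-1]                      # minimizes sum_i ((x_i0 x x_i1) . e)^2

# Synthetic pure translation (assumed values).
rng = np.random.default_rng(0)
pts = rng.uniform(-1.0, 1.0, size=(10, 3)) + np.array([0.0, 0.0, 5.0])
t = np.array([0.2, -0.1, 0.5])
pts1 = pts + t
x0 = pts[:, :2] / pts[:, 2:]
x1 = pts1[:, :2] / pts1[:, 2:]

e = estimate_foe(x0, x1)
foe_xy = e[:2] / e[2]                  # FOE in image coordinates
```

In this noise-free example the FOE coincides with the projection of the translation vector into the image.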


Pure Translation (cont).

• In situations where a large number of points at infinity are available (e.g., when the camera motion is small compared to the distance to far-away objects), this suggests a strategy.

• Pick a pair of points to estimate a rotation, hoping that both of the points lie at infinity (very far from the camera).

• Then compute the FOE and check whether the residual error is small and whether the motions towards or away from the epipole (FOE) are all in the same direction.

Pure Rotation

• Pure rotation results in a degenerate estimate of the essential matrix E and of the translation direction.

• If we assume that the rotation matrix is known, the estimates for the FOE will be degenerate, since $x_{i0} \approx x_{i1}$, and hence the triple product condition $e \cdot (x_{i0} \times x_{i1}) = 0$ is degenerate.

Pure Rotation (cont).

• Before computing a full essential matrix, it is prudent to first compute a rotation estimate R, potentially with just a small number of points.

• Then compute the residuals after rotating the points before proceeding with a full E computation.

Projective Reconstruction

• When we try to build a 3D model from photos taken by unknown cameras, we do not know ahead of time the intrinsic calibration parameters associated with the input images.

• Still, we can estimate a two-frame reconstruction, although the true metric structure may not be available.

• $\hat{x}_1^T E \hat{x}_0 = 0$: the basic epipolar constraint.

Projective Reconstruction (cont.)

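• In the uncalibrated case, we do not know the calibration matrices $K_j$, so we cannot use the normalized ray directions $\hat{x}_j = K_j^{-1} x_j$.

• We have access only to the image coordinates $x_j$, so the essential matrix constraint becomes:

$$\hat{x}_1^T E \hat{x}_0 = x_1^T K_1^{-T} E K_0^{-1} x_0 = x_1^T F x_0 = 0$$

• Fundamental matrix:

$$F = K_1^{-T} E K_0^{-1} = [e]_\times \tilde{H}$$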

Projective Reconstruction (cont.)

• The smallest left singular vector of F indicates the epipole $e_1$ in image 1.

• Its smallest right singular vector is $e_0$.

Projective Reconstruction (cont.)

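• To create a projective reconstruction of a scene, we can pick any valid homography $\tilde{H}$ that satisfies

$$F = [e]_\times \tilde{H}$$

and hence

$$\tilde{H} = U R_{90^\circ}^T \hat{\Sigma} V^T,$$

where $F = U \Sigma V^T$, $R_{90^\circ}$ is a 90° rotation around the third axis, and $\hat{\Sigma}$ is the singular value matrix with the smallest value replaced by the middle value.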

Self-calibration

• Auto-calibration (self-calibration) was developed for converting a projective reconstruction into a metric one, which is equivalent to recovering the unknown calibration matrices $K_j$ associated with each image.

• In the presence of additional information about the scene, different methods can be applied.

• If there are parallel lines in the scene, three or more vanishing points, which are the images of points at infinity, can be used to establish the homography for the plane at infinity, from which focal lengths and rotations can be recovered.

Self-calibration (cont).

• In the absence of external information: consider all sets of camera matrices $P_j = K_j [R_j | t_j]$ projecting world coordinates $p_i = (X_i, Y_i, Z_i, W_i)$ into screen coordinates $x_{ij} \sim P_j p_i$.

• Consider transforming the 3D scene $\{p_i\}$ through an arbitrary 4 × 4 projective transformation $\tilde{H}$, yielding a new model consisting of points $p_i' = \tilde{H} p_i$.

• Post-multiplying each $P_j$ matrix by $\tilde{H}^{-1}$ still produces the same screen coordinates, and a new set of calibration matrices can be computed by applying RQ decomposition to the new camera matrix $P_j' = P_j \tilde{H}^{-1}$.
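The projective ambiguity can be checked numerically with a short sketch. The camera matrix, the point, and the transformation H below are arbitrary made-up values; the only assumption is that H is invertible.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed 3x4 camera matrix and homogeneous 3D point.
P = np.array([[500.0, 0.0, 320.0, 10.0],
              [0.0, 500.0, 240.0, 20.0],
              [0.0, 0.0, 1.0, 0.5]])
p = np.array([0.5, -0.2, 8.0, 1.0])

# An arbitrary invertible 4x4 projective transformation (identity plus a perturbation).
H = np.eye(4) + 0.1 * rng.normal(size=(4, 4))

P_new = P @ np.linalg.inv(H)    # transformed camera
p_new = H @ p                   # transformed scene point

x = P @ p
x_new = P_new @ p_new
pixel = x[:2] / x[2]
pixel_new = x_new[:2] / x_new[2]   # identical to `pixel` up to round-off
```

Because both reconstructions explain the measurements equally well, image data alone cannot tell them apart, which is why extra constraints on the calibration matrices are needed.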


Self-calibration (cont).

• A technique exists that can recover the focal lengths $(f_0, f_1)$ of both images from the fundamental matrix F in a two-frame reconstruction.

• Assume that the camera has zero skew, a known aspect ratio, and a known optical center.

• Most cameras have square pixels and an optical center near the middle of the image, and are more likely to deviate from the simple camera model due to radial distortion.

• Problems occur when images have been cropped off-center.

Self-calibration (cont).

• Take the left and right singular vectors $\{u_0, u_1, v_0, v_1\}$ of the fundamental matrix F and their associated singular values $\{\sigma_0, \sigma_1\}$ and form the equation:

two matrices: $D_j = K_j K_j^T = \mathrm{diag}(f_j^2, f_j^2, 1)$

Self-calibration (cont).

• The two matrices encode the unknown focal lengths. Write the numerators and denominators as:

Application: View Morphing

• An application of basic two-frame structure from motion.

• Also known as view interpolation.

• Used to generate a smooth 3D animation from one view of a 3D scene to another.

• To create such a transition: smoothly interpolate the camera matrices, i.e., camera position, orientation, and focal lengths. A more pleasing effect is obtained by easing in and easing out the camera parameters.

• To generate in-between frames: establish a full set of 3D correspondences or 3D models for each reference view.

Application: View Morphing

• Triangulate the set of matched feature points in each image.

• As the 3D points are re-projected into their intermediate views, pixels can be mapped from their original source images to their new views using affine or projective mapping.

• The final image is then composited using a linear blend of the two reference images, as with usual morphing.

Factorization

• When processing video sequences, we often get extended feature tracks from which it is possible to recover the structure and motion using a process called factorization.

Factorization (cont.)

Figure 7.10: 3D reconstruction of a rotating ping pong ball using factorization

(Tomasi and Kanade 1992) : (a) sample image with tracked features

overlaid; (b)sub-sampled feature motion stream ; (c) two views of the

reconstructed 3D model.


Factorization (cont.)

• Consider orthographic and weak perspective projection models.

• Since the last row is always [0 0 0 1], there is no perspective division:

$$x_{ij} = \tilde{P}_j \bar{p}_i$$

$x_{ij}$: location of the ith point in the jth frame

$\tilde{P}_j$: upper 2 × 4 portion of the projection matrix $P_j$

$\bar{p}_i = (X_i, Y_i, Z_i, 1)$: augmented 3D point position

Factorization (cont.)

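• Assume that every point i is visible in every frame j. We can take the centroid (average) of the projected point locations $x_{ij}$ in frame j:

$$\bar{x}_j = \frac{1}{N} \sum_i x_{ij} = \tilde{P}_j \frac{1}{N} \sum_i \bar{p}_i = \tilde{P}_j \bar{c}$$

$\bar{c} = (\bar{X}, \bar{Y}, \bar{Z}, 1)$: augmented 3D centroid of the point cloud.

• We can place the origin of the world at the centroid of the points, $\bar{X} = \bar{Y} = \bar{Z} = 0$, so that $\bar{c} = (0, 0, 0, 1)$.

• The centroid of the 2D points in each frame, $\bar{x}_j$, directly gives us the last column of $\tilde{P}_j$.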

Factorization (cont.)

• Let $\tilde{x}_{ij} = x_{ij} - \bar{x}_j$ be the 2D point locations after their image centroid has been subtracted. We can write

$$\tilde{x}_{ij} = M_j p_i$$

$M_j$: upper 2 × 3 portion of the projection matrix $P_j$, and $p_i = (X_i, Y_i, Z_i)$

• We can concatenate all of these measurements into one large matrix.

Factorization (cont.)

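$$\hat{X} = \begin{bmatrix} \tilde{x}_{11} & \cdots & \tilde{x}_{N1} \\ \vdots & & \vdots \\ \tilde{x}_{1M} & \cdots & \tilde{x}_{NM} \end{bmatrix} = \begin{bmatrix} M_1 \\ \vdots \\ M_M \end{bmatrix} \begin{bmatrix} p_1 & \cdots & p_N \end{bmatrix} = \hat{M} \hat{S}$$

$\hat{X}$: measurement matrix (N points, M frames)

$\hat{M}$: motion matrix

$\hat{S}$: structure matrix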

Factorization (cont.)

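• It would be convenient if the SVD of $\hat{X} = U \Sigma V^T$ directly returned the matrices $\hat{M}$ and $\hat{S}$, but it does not. Instead, we can write the relationship

$$\hat{X} = U \Sigma V^T = [U Q][Q^{-1} \Sigma V^T]$$

and set $\hat{M} = U Q$ and $\hat{S} = Q^{-1} \Sigma V^T$.

• How to recover the values of the 3 × 3 matrix Q depends on the motion model being used.

• In the case of orthographic projection, the entries in $M_j$ are the first two rows of rotation matrices $R_j$.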

Factorization (cont.)

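• So we have

$$m_{j0} \cdot m_{j0} = u_{2j} Q Q^T u_{2j}^T = 1$$
$$m_{j0} \cdot m_{j1} = u_{2j} Q Q^T u_{2j+1}^T = 0$$
$$m_{j1} \cdot m_{j1} = u_{2j+1} Q Q^T u_{2j+1}^T = 1$$

$m_{j0}, m_{j1}$: the two rows of $M_j$; $u_k$: the 1 × 3 rows of matrix U.

• This gives us a large set of equations for the entries in the matrix $Q Q^T$, from which the matrix Q can be recovered using a matrix square root.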
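The whole orthographic factorization pipeline might be sketched as follows. This is an illustrative implementation under stated assumptions, not the original authors' code: the measurement matrix stacks the x and y rows of each frame, the rank-3 SVD is truncated by hand, the symmetric matrix $Q Q^T$ is solved for by linear least squares, and the square root is taken through an eigendecomposition. The function name `factorize_orthographic` and the synthetic data are hypothetical.

```python
import numpy as np

def factorize_orthographic(X):
    """X: (2F, N) matrix; rows 2j and 2j+1 hold the x and y coordinates of
    all N points in frame j. Returns motion M (2F, 3) and structure S (3, N)."""
    X_hat = X - X.mean(axis=1, keepdims=True)      # subtract each frame's centroid
    U, s, Vt = np.linalg.svd(X_hat, full_matrices=False)
    U3, S3, V3t = U[:, :3], np.diag(s[:3]), Vt[:3]

    def coeffs(a, b):
        # Coefficients of the 6 unique entries of a symmetric L in a^T L b.
        return np.array([a[0] * b[0], a[0] * b[1] + a[1] * b[0],
                         a[0] * b[2] + a[2] * b[0], a[1] * b[1],
                         a[1] * b[2] + a[2] * b[1], a[2] * b[2]])

    A, rhs = [], []
    for j in range(0, U3.shape[0], 2):
        u, v = U3[j], U3[j + 1]
        A += [coeffs(u, u), coeffs(v, v), coeffs(u, v)]
        rhs += [1.0, 1.0, 0.0]                     # unit-length, orthogonal rows
    l = np.linalg.lstsq(np.array(A), np.array(rhs), rcond=None)[0]
    L = np.array([[l[0], l[1], l[2]],
                  [l[1], l[3], l[4]],
                  [l[2], l[4], l[5]]])             # L = Q Q^T
    w, V = np.linalg.eigh(L)
    Q = V @ np.diag(np.sqrt(np.clip(w, 1e-12, None)))   # one square root of L
    return U3 @ Q, np.linalg.inv(Q) @ S3 @ V3t

# Synthetic noise-free tracks: 6 frames, 12 points (assumed values).
rng = np.random.default_rng(1)
n_frames, n_points = 6, 12
P3 = rng.normal(size=(3, n_points))
rows = []
for _ in range(n_frames):
    Rj, _ = np.linalg.qr(rng.normal(size=(3, 3)))  # random orthogonal matrix
    rows.append(Rj[:2] @ P3 + rng.normal(size=(2, 1)))
X = np.vstack(rows)

M, S = factorize_orthographic(X)
```

The recovered structure is only defined up to a global rotation or reflection, since any orthogonal matrix can be absorbed into Q.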


Factorization (cont.)

• A disadvantage of factorization approaches is that they require a complete set of tracks, i.e., each point must be visible in each frame, in order for the factorization to work.

• To deal with this problem, apply factorization to smaller, denser subsets and then use known camera (motion) or point (structure) estimates to hallucinate additional missing values, which allows more features and cameras to be incorporated incrementally.

Perspective and Projective Factorization

• Another disadvantage of regular factorization is that it cannot deal with perspective cameras.

• One remedy: perform an initial affine (e.g., orthographic) reconstruction and then correct for the perspective effects in an iterative manner.

• Observe that the object-centered projection model

$$x_{ij} = s_j \frac{r_{xj} \cdot p_i + t_{xj}}{1 + \eta_j \, r_{zj} \cdot p_i}, \qquad y_{ij} = s_j \frac{r_{yj} \cdot p_i + t_{yj}}{1 + \eta_j \, r_{zj} \cdot p_i}$$

differs from the scaled orthographic projection model

Perspective and Projective Factorization (cont.)

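• by the inclusion of the denominator terms $(1 + \eta_j \, r_{zj} \cdot p_i)$, where $r_{zj}$ is the third row of $R_j$.

• If we knew the correct values of $\eta_j = t_{zj}^{-1}$ and the structure and motion parameters $R_j$ and $p_i$, we could cross-multiply the left-hand side (the measured point positions) by the denominator and get corrected values for which the bilinear projection model is exact.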

Perspective and Projective Factorization (cont).

• Once the $\eta_j$ have been estimated, the feature locations can be corrected before applying another round of factorization.

• Because of the initial depth reversal ambiguity, both reconstructions have to be tried while computing $\eta_j$.

Perspective and Projective Factorization (cont).

• An alternative approach, which does not assume partially calibrated cameras (known optical center, square pixels, and zero skew), is to perform a fully projective factorization.

• The inclusion of the third row of the camera matrix is equivalent to multiplying each reconstructed measurement $x_{ij} = M_j p_i$ by its inverse depth $\eta_{ij} = d_{ij}^{-1}$,

• or, equivalently, multiplying each measured position by its projective depth $d_{ij}$.

Perspective and Projective Factorization (cont).

• Factorization methods provide a “closed form” (linear) way to initialize iterative techniques such as bundle adjustment.

Application: Sparse 3D Model Extraction

• Create a denser 3D model than the sparse point cloud that structure from motion produces.

Figure 7.11: 3D teacup model from a 240-frame video sequence: (a) first frame of

video; (b) last frame of video; (c) side view of 3D model; (d) top view of

the model.

Application: Sparse 3D Model Extraction (cont.)

• To create a more realistic model, a texture map can be extracted for each triangle face.

• The equations to map points on the surface of a 3D triangle to a 2D image are straightforward: just pass the local 2D coordinates on the triangle through the 3 × 4 camera projection matrix to obtain a 3 × 3 homography.

• An alternative is to create a separate texture map from each reference camera and to blend between them during rendering, which is known as view-dependent texture mapping.

Application: Sparse 3D Model Extraction (cont.)

Figure 7.12: A set of chained transforms for projecting a 3D point $p_i$ into a 2D measurement $x_{ij}$ through a series of transformations $f^{(k)}$, each of which is controlled by its own set of parameters. The dashed lines indicate the flow of information as partial derivatives are computed during a backward pass.

$f_{RD}(x) = (1 + k_1 r^2 + k_2 r^4) x$: formula for the radial distortion function.
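The radial distortion formula translates directly into code. The sketch below assumes that x holds normalized image coordinates (centered on the optical center) and that $r^2 = x^2 + y^2$; the function name `radial_distort` and the coefficient values are made up for illustration.

```python
import numpy as np

def radial_distort(x, k1, k2):
    """Apply f_RD(x) = (1 + k1 r^2 + k2 r^4) x to points of shape (..., 2)."""
    x = np.asarray(x, dtype=float)
    r2 = np.sum(x ** 2, axis=-1, keepdims=True)    # squared radius r^2
    return (1.0 + k1 * r2 + k2 * r2 ** 2) * x

# Assumed coefficients and a small batch of normalized points.
points = np.array([[0.5, 0.0],
                   [0.0, 0.0],
                   [0.3, -0.4]])
distorted = radial_distort(points, k1=0.1, k2=0.01)
```

The point at the optical center is left unchanged, and the displacement grows with distance from the center.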


Bundle Adjustment

• The most accurate way to recover structure from motion is to perform robust nonlinear minimization of the measurement (re-projection) errors, which is known in the photogrammetry (and now computer vision) communities as bundle adjustment.

• Our feature location measurement $x_{ij}$ now depends not only on the point (track index) i but also on the camera pose index j:

• $x_{ij} = f(p_i, R_j, c_j, K_j)$

• The 3D point positions $p_i$ are also updated simultaneously.
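A minimal sketch of the re-projection residuals that bundle adjustment minimizes. It assumes a plain pinhole model without radial distortion, with the projection written as $K R (p - c)$, consistent with $t = -R c$. The function names `project` and `reprojection_residuals` and the toy scene are hypothetical, and no optimizer is included.

```python
import numpy as np

def project(p, R, c, K):
    """x_ij = f(p_i, R_j, c_j, K_j): pinhole projection of a 3D point."""
    q = K @ (R @ (p - c))
    return q[:2] / q[2]

def reprojection_residuals(points, cameras, observations):
    """Stack predicted-minus-measured 2D errors for all observations.

    points: list of 3D points p_i.
    cameras: list of (R_j, c_j, K_j) tuples.
    observations: list of (i, j, x_measured) tuples.
    """
    res = []
    for i, j, x_meas in observations:
        R, c, K = cameras[j]
        res.append(project(points[i], R, c, K) - x_meas)
    return np.concatenate(res)

# Toy scene (assumed values): two cameras, three points, exact observations.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
cameras = [(np.eye(3), np.zeros(3), K),
           (np.eye(3), np.array([1.0, 0.0, 0.0]), K)]
points = [np.array([0.0, 0.0, 5.0]),
          np.array([1.0, -1.0, 6.0]),
          np.array([-0.5, 0.5, 4.0])]
observations = [(i, j, project(points[i], *cameras[j]))
                for i in range(3) for j in range(2)]

residuals = reprojection_residuals(points, cameras, observations)
```

A nonlinear least squares solver with a robust loss would then adjust both `points` and `cameras` to minimize the squared norm of this residual vector.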


Bundle Adjustment ( cont.)

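• In the figure of chained transforms, the leftmost box performs a robust comparison of the predicted and measured 2D locations $\hat{x}_{ij}$ and $\tilde{x}_{ij}$ after re-scaling by the measurement noise covariance $\Sigma_{ij}$.

• The operation can be written as

$$r_{ij} = \tilde{x}_{ij} - \hat{x}_{ij}, \qquad s_{ij}^2 = r_{ij}^T \Sigma_{ij}^{-1} r_{ij}, \qquad e_{ij} = \hat{\rho}(s_{ij}^2),$$

where $\hat{\rho}(r^2) = \rho(r)$ is the robust penalty function.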

Figure 7.13: A camera rig and its associated transform chain. (a) As the mobile rig (robot) moves around in the world, its pose with respect to the world at time t is captured by $(R_t^r, c_t^r)$. Each camera's pose with respect to the rig is captured by $(R_j^c, c_j^c)$. (b) A 3D point with world coordinates $p_i^w$ is first transformed into rig coordinates $p_i^r$, and then through the rest of the camera-specific chain.

Exploiting Sparsity

• Large bundle adjustment problems, such as those involving 3D scenes reconstructed from thousands of Internet photographs, can require solving non-linear least squares problems with millions of measurements.

• Structure from motion is a bipartite problem in structure and motion.

• Each feature point $x_{ij}$ in a given image depends on only one 3D point position $p_i$ and one 3D camera pose $(R_j, c_j)$.
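The bipartite structure shows up directly in the sparsity pattern of the Jacobian. The sketch below builds that pattern for a toy problem, assuming 3 parameters per point and 6 per camera pose (the pose parameterization is an assumption, as is the function name `jacobian_sparsity`).

```python
import numpy as np

def jacobian_sparsity(n_points, n_cameras, observations, cam_dof=6):
    """Boolean pattern of the Jacobian: two rows per observation (i, j),
    nonzero only in the columns of point i and camera j."""
    J = np.zeros((2 * len(observations), 3 * n_points + cam_dof * n_cameras),
                 dtype=bool)
    for k, (i, j) in enumerate(observations):
        J[2 * k:2 * k + 2, 3 * i:3 * i + 3] = True            # point block
        c0 = 3 * n_points + cam_dof * j
        J[2 * k:2 * k + 2, c0:c0 + cam_dof] = True            # camera block
    return J

# Toy problem: 3 points, 2 cameras; point 2 is seen only by camera 1.
observations = [(0, 0), (0, 1), (1, 0), (1, 1), (2, 1)]
J = jacobian_sparsity(3, 2, observations)

# Pattern of the (approximate) Hessian A = J^T J.
A_pattern = (J.astype(int).T @ J.astype(int)) > 0
```

The point-point part of `A_pattern` is block diagonal, which is what makes it cheap to eliminate the structure variables first.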


Exploiting Sparsity (cont).


Figure 7.14: (a) Bipartite graph for a toy structure from motion problem and

(b) its associated Jacobian J and (c) Hessian A. Numbers indicate cameras.

The dashed arcs and light blue squares indicate the fill-in that occurs when

the structure (point) variables are eliminated.


Uncertainty and Ambiguity

• Structure from motion involves the estimation of many highly coupled parameters, often with no known “ground truth” components.

• As a result, the estimates produced by structure from motion algorithms can often exhibit large amounts of uncertainty.

• Example: the bas-relief ambiguity, which makes it hard to simultaneously estimate the 3D depth of a scene and the amount of camera motion.

Uncertainty and Ambiguity (cont).

• A unique coordinate frame and scale for a reconstructed scene cannot be recovered from monocular visual measurements alone.

• This seven-degrees-of-freedom gauge ambiguity makes it tricky to compute the covariance matrix associated with a 3D reconstruction.

• One way to compute a covariance matrix that ignores the gauge freedom is to throw away the seven smallest eigenvalues of the information matrix (the inverse covariance), whose values are equivalent to the problem Hessian A up to noise scaling.

Reconstruction from Internet Photos

• A widely used application of structure from motion: the reconstruction of 3D objects and scenes from video sequences and collections of images.

• Before the structure from motion computation can begin, it is first necessary to establish sparse correspondences between different pairs of images and to then link such correspondences into feature tracks, which associate individual 2D image features with global 3D points.

Reconstruction from Internet Photos (cont.)

• For the reconstruction process, it is important to select a good initial pair of images with a significant amount of out-of-plane parallax, to ensure that a stable reconstruction can be obtained.

• The EXIF tags associated with the photographs can be used to get good initial estimates for camera focal lengths.

• This is not always strictly necessary, since these parameters are re-adjusted as part of the bundle adjustment process.

Reconstruction from Internet Photos (cont.)

Figure 7.15: Incremental structure from motion: starting with an initial two-frame reconstruction of Trevi Fountain, batches of images are added using pose estimation, and their positions (along with the 3D model) are refined using bundle adjustment.

Reconstruction from Internet Photos (cont.)

Figure 7.16: 3D reconstructions produced by the incremental structure from motion algorithm: (a) cameras and point cloud from Trafalgar Square; (b) cameras and points overlaid on an image from the Great Wall of China; (c) overhead view of a reconstruction of the Old Town Square in Prague registered to an aerial photograph.

Reconstruction from Internet Photos (cont.)

Figure 7.17: Large scale structure from motion using skeletal sets: (a) original

match graph for 784 images; (b) skeletal set containing 101 images;

(c) top-down view of scene (Pantheon) reconstructed from the skeletal

set; (d) reconstruction after adding in the remaining images using pose

estimation; (e) final bundle adjusted reconstruction, which is almost

identical.


Constrained Structure and Motion

• If the object of interest is rotating around a fixed but unknown axis, specialized techniques can be used to recover this motion.

• In other situations, the camera itself may be moving in a fixed arc around some center of rotation.

• Specialized capture setups, such as mobile stereo camera rigs or moving vehicles equipped with multiple fixed cameras, can also take advantage of the knowledge that individual cameras are mostly fixed with respect to the capture rig.

Constrained Structure and Motion (cont).

Line-based technique:

• Pairwise epipolar geometry cannot be recovered from

line matches alone, even if the cameras are

calibrated.

• Consider projecting the set of lines in each image into

a set of 3D planes in space. You can move the two

cameras around into any configuration and still obtain

a valid reconstruction for 3D lines.


Constrained Structure and Motion (cont).

• When lines are visible in three or more views, the trifocal tensor can be used to transfer lines from one pair of images to another.

• The trifocal tensor can also be computed on the basis of line matches alone.

• For triples of images, the trifocal tensor is used to verify that the lines are in geometric correspondence before evaluating the correlations between line segments.

Constrained Structure and Motion (cont).

Figure 7.18: Two images of a toy house along with their matched 3D line segments.

Constrained Structure and Motion (cont).

• Plane-based technique:

Rather than computing the fundamental matrix directly from two or more homographies, a better approach is to hallucinate virtual point correspondences within the area from which each homography was computed and to feed them into a standard structure from motion algorithm.

End
