Lecture Notes

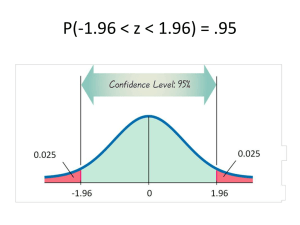

Psych 5500/6500
Introduction to Chi-Square and Test for Goodness of Fit
Fall, 2008

Chi-square Test

Chi-square (χ²) can be used to test inferences about the variance of the population from which a sample was drawn. Let’s say you draw 30 scores from some population and you want to test the following hypotheses.

H0: σ² = 6.25
Ha: σ² ≠ 6.25

The Chi-Square Distribution

Formula for computing the value of chi-square with N-1 degrees of freedom:

$$\chi^2_{(N-1)} = \frac{(N-1)(\text{est. }\sigma^2)}{\sigma^2}$$

Where: est. σ² comes from your data, and σ² is the value of the population variance according to H0.

Expected value

$$\chi^2_{(N-1)} = \frac{(N-1)(\text{est. }\sigma^2)}{\sigma^2}$$

From this formula it follows that:

1. If H0 is true then the expected value of chi-square = its degrees of freedom (i.e. N-1).
2. If the variance of the population from which the sample was drawn is greater than the variance proposed by H0 then chi-square will tend to be greater than N-1.
3. If the variance of the population is less than the value proposed by H0 then the value of chi-square will tend to be less than N-1.

Sampling Distribution

The shape of the sampling distribution depends upon the df.

Image from ‘Psychological Statistics Using SPSS for Windows’ by R. Gardner.

Testing H0

H0: σ² = 6.25
Ha: σ² ≠ 6.25

The N of our sample is 30, so df = 29. If H0 is true then we expect the value of χ² to be around 29 (but probably not exactly equal to 29, due to chance).

How big or small χ² has to be to reject H0 can be found by using the Chi-Square stat tool in the Oakley Stat Tools link on the course web site:

χ²critical = 16.05 (left tail, % above = 97.5)
χ²critical = 45.72 (right tail, % above = 2.5)

Coming to a Conclusion

From our sample we estimate the variance of the population from which the sample was drawn to equal 4.55. Is this significantly different from 6.25?

$$\chi^2_{(N-1)} = \frac{(N-1)(\text{est. }\sigma^2)}{\sigma^2} = \frac{(29)(4.55)}{6.25} = 21.11$$

If H0 were true we would expect chi-square to equal 29, and there would be a 95% chance it would be between 16.05 and 45.72.
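The computation above can be reproduced in a few lines. What follows is a minimal Python sketch, not part of the original slides: the function name `chi_square_variance_stat` is hypothetical, and the two critical values are simply copied from the notes for df = 29 rather than computed.

```python
def chi_square_variance_stat(n, est_var, h0_var):
    """Chi-square statistic for a test about a population variance.

    n       -- sample size (degrees of freedom = n - 1)
    est_var -- variance estimated from the sample (est. sigma^2)
    h0_var  -- population variance proposed by H0 (sigma^2)
    """
    return (n - 1) * est_var / h0_var


# Values from the worked example in the notes.
chi_sq = chi_square_variance_stat(n=30, est_var=4.55, h0_var=6.25)

# Two-tailed critical values for df = 29, copied from the notes (an
# assumption of this sketch: they are not computed here).
lower_crit, upper_crit = 16.05, 45.72

# H0 is rejected only if the statistic falls in either tail.
reject_h0 = chi_sq < lower_crit or chi_sq > upper_crit
print(f"chi-square(29) = {chi_sq:.2f}, reject H0: {reject_h0}")
```

When the estimated variance equals the value proposed by H0, the function returns exactly N-1, which matches the expected value of chi-square under H0.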
χ²(29) = 21.11, p > .05

Because 21.11 lies between the two critical values, we do not reject H0.

One-tail Tests

It is also possible to do one-tail tests; this just involves having a single rejection region, in either the upper tail (values well above the d.f.) or the lower tail (values well below the d.f.).

Assumption of Normality

The χ² test assumes that the data are normally distributed. Unlike tests concerning the mean, the central limit theorem does not come into play for χ² (i.e. having a large N does not allow you to ignore this assumption). For χ² to be valid it is important that the population be normally distributed.

Confidence Interval of σ²

χ² gives us a way to create a confidence interval for the true value of the variance of the population.

$$\frac{(N-1)(\text{est. }\sigma^2)}{\chi^2_{\text{critical, upper bound}}} \le \sigma^2 \le \frac{(N-1)(\text{est. }\sigma^2)}{\chi^2_{\text{critical, lower bound}}}$$

95% confidence interval:

$$\frac{29(4.25)}{45.72} \le \sigma^2 \le \frac{29(4.25)}{16.05}$$

$$2.70 \le \sigma^2 \le 7.68$$

Chi-square Tests of Frequencies

We now turn to another use of chi-square, as a means of testing hypotheses about frequencies. These tests are among the relatively few that can be used on nominal data. The two tests we will look at are:

1. Goodness of fit
2. Test for association

Comparing Sample and Population Distributions using ‘Goodness of Fit’

This is used to determine whether or not the frequency of scores in a sample differs from what would be predicted by H0. As rejecting H0 indicates there is a difference, it really should be called the ‘Badness of Fit’ test.

Example 1: Fair Die?

We have a six-sided die and we want to know if it is ‘fair’ (i.e. each side is expected to come up equally often).

H0 is that it is a fair die; if H0 is true then the probability of any one side coming up = 1/6 = 0.1667 (approx).

Ha is that it is not a fair die, so the probabilities of the sides coming up are not all 0.1667.

Our approach will be to sample from the die, and compare the frequencies of how many times each side appears to the expected frequencies if H0 were true.

Our Hypotheses

H0: Every side has an equal probability of occurring.
Ha: Not every side has an equal probability of occurring.
I suppose I could have written these in a more mathematical manner that would fit every use of this test, but I think conceptually this way is clearer.

Symbols

We plan on rolling the die 100 times, and counting how frequently each side occurs.

N = the size of the sample = 100
j = the outcome category
Oj = the observed frequency of each outcome category.
Ej = the expected frequency of each outcome category if H0 were true.

Determining Ej

If H0 is true then each outcome has a 0.1667 probability of occurring, so if we roll the die 100 times each side is expected to occur 100 × 0.1667 = 16.67 times. Thus for each outcome category the expected frequency if H0 is true (i.e. Ej) would be:

Ej = 16.67

Observed vs Expected Frequencies

| Side | Observed Frequency | Expected Frequency |
|------|--------------------|--------------------|
| 1    | 10                 | 16.67              |
| 2    | 30                 | 16.67              |
| 3    | 5                  | 16.67              |
| 4    | 14                 | 16.67              |
| 5    | 25                 | 16.67              |
| 6    | 16                 | 16.67              |
| N =  | 100                | 100                |

Testing H0

There were some differences between the observed frequencies and what we would have expected if H0 were true. How can we test whether those differences are statistically significant?

$$\sum_j (O_j - E_j)$$

won’t work because it will always equal zero.

Pearson Chi-square statistic

$$\sum_j (O_j - E_j)^2$$

This could work, but the following one is preferred.

$$\chi^2 = \sum_j \frac{(O_j - E_j)^2}{E_j}$$

This has two properties: one is that it weights more heavily deviations in cells that have a small expected frequency; the second is that under many circumstances it approximately fits the theoretical Chi-square distribution, with j-1 degrees of freedom (where j here counts the number of outcome categories).

Chi-square Distribution for Goodness of Fit

• Chi-square is a distribution that has a table where we can look up critical values assuming H0 is true.
• If H0 is true, the expected value of Chi-square is: χ² = degrees of freedom = j-1
• If H0 is false, the expected value of Chi-square is: χ² > degrees of freedom

Computing Chi-square and the resultant p value

$$\chi^2 = \frac{(10-16.67)^2}{16.67} + \frac{(30-16.67)^2}{16.67} + \frac{(5-16.67)^2}{16.67} + \frac{(14-16.67)^2}{16.67} + \frac{(25-16.67)^2}{16.67} + \frac{(16-16.67)^2}{16.67} = 26.12$$

If H0 were true we would expect chi-square to equal j-1 = 6-1 = 5. If H0 is false then chi-square should be greater than 5. Chi-square is greater than 5, but is it enough greater that a value this large would occur only 5% of the time or less if H0 were true?

Chi-square Critical Values and the resultant p value

The test for goodness of fit is always one-tailed (if H0 is false then chi-square will be greater than its df). The critical value for the test may be obtained from the table I provide in class or through the Chi-square statistical tool I wrote. The tool has the advantage of giving us the exact p value of our obtained value of chi-square.

With df = 5, χ²critical = 11.07
χ²(5) = 26.12, p = .0001

Example 2: Smoking

In a previous year, the men in some population were distributed as follows by the number of packs of cigarettes they smoked per day.

| Category (# of packs) | Proportion |
|-----------------------|------------|
| none                  | .43        |
| one                   | .17        |
| two                   | .24        |
| three                 | .10        |
| four or more          | .06        |

This year we sample 863 men and we are interested in knowing whether the new data fit (have the same distribution as) the previous data.

N = 863
j = 5
H0: distribution hasn’t changed
Ha: distribution has changed
Expected value of χ² if H0 is true = 4, critical value of χ² = 9.49

Determining Ej

If H0 is true then the same proportion of men should fall into each category this year as well. To use the Chi-square test, we will need to turn those proportions into the frequencies we would expect in each category if H0 were true.

| Category (# of packs) | Expected Proportion | Expected frequency out of 863 men |
|-----------------------|---------------------|-----------------------------------|
| none                  | .43                 | .43 × 863 = 371.09                |
| one                   | .17                 | .17 × 863 = 146.71                |
| two                   | .24                 | .24 × 863 = 207.12                |
| three                 | .10                 | .10 × 863 = 86.3                  |
| four or more          | .06                 | .06 × 863 = 51.78                 |

| Category (# of packs) | Observed frequency | Expected frequency |
|-----------------------|--------------------|--------------------|
| none                  | 406                | 371.09             |
| one                   | 164                | 146.71             |
| two                   | 189                | 207.12             |
| three                 | 78                 | 86.3               |
| four or more          | 26                 | 51.78              |
|                       | N = 863            | N = 863            |

$$\chi^2 = \frac{(406-371.09)^2}{371.09} + \frac{(164-146.71)^2}{146.71} + \frac{(189-207.12)^2}{207.12} + \frac{(78-86.3)^2}{86.3} + \frac{(26-51.78)^2}{51.78} = 20.54$$

χ²(4) = 20.54, p = .0004

Assumptions

1) Each and every sample observation falls into one and only one category.
2) The outcomes for the N respective observations in the sample are independent.
3) Deviations (Oj - Ej) are normally distributed. The next slide takes a closer look at this.

Assumption of Normality

So we are assuming that the deviations of the observed from the expected frequencies (Oj - Ej) are normally distributed. This assumption becomes untenable when the expected frequency in a group is small. For example, if the expected frequency is ‘1’, then there is obviously more room for the observed frequency to be greater than ‘1’ than there is for it to be less than ‘1’, so the distribution of (Oj - Ej) will be neither normal nor symmetrical.

Solution

A variety of approaches are available for handling problems that occur when the expected frequency in any one cell is small (e.g. less than ‘5’). The various approaches have their supporters and critics. I’ll simply refer you to a review article and let you explore this area if you plan on doing Chi-square:

Delucchi, K. L. (1993). On the use and misuse of chi-square. In G. Keren & C. Lewis (Eds.), A handbook for data analysis in the behavioral sciences: Statistical issues. Hillsdale, NJ: Lawrence Erlbaum.

Fitting the Sample Distribution to the Normal Distribution

An interesting use of chi-square goodness of fit is to determine if the population from which you sampled is normally distributed. Compare the obtained frequencies in your data to what you would expect if the scores came from a normal population.

H0: frequencies fit normal distribution
Ha: frequencies do not fit normal distribution.
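The two goodness-of-fit examples (the die and the smoking data) can be checked with a short script. This is a minimal sketch using only the Python standard library, not the course's Chi-square tool: the function names `chi_square_gof` and `chi_square_p_value` are hypothetical, and the p value comes from the standard closed-form series for the upper tail of the chi-square distribution with integer df.

```python
import math


def chi_square_gof(observed, expected):
    """Pearson chi-square: sum over categories of (O_j - E_j)^2 / E_j."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


def chi_square_p_value(x, df):
    """Upper-tail probability P(chi-square >= x) for a positive integer df.

    Starts from the known result for df = 1 (erfc) or df = 2 (exp) and
    adds one series term for every additional 2 degrees of freedom.
    """
    h = x / 2.0
    if df % 2 == 0:
        a, q = 1.0, math.exp(-h)
    else:
        a, q = 0.5, math.erfc(math.sqrt(h))
    while a < df / 2.0:
        q += h ** a * math.exp(-h) / math.gamma(a + 1.0)
        a += 1.0
    return q


# Example 1: the die rolled 100 times, expected frequency 100/6 per side.
die_observed = [10, 30, 5, 14, 25, 16]
die_expected = [sum(die_observed) / 6] * 6
die_chi_sq = chi_square_gof(die_observed, die_expected)
die_p = chi_square_p_value(die_chi_sq, df=len(die_observed) - 1)

# Example 2: smoking, expected frequencies = last year's proportions x N.
smoke_observed = [406, 164, 189, 78, 26]
smoke_proportions = [0.43, 0.17, 0.24, 0.10, 0.06]
n_men = sum(smoke_observed)
smoke_expected = [p * n_men for p in smoke_proportions]
smoke_chi_sq = chi_square_gof(smoke_observed, smoke_expected)
smoke_p = chi_square_p_value(smoke_chi_sq, df=len(smoke_observed) - 1)

print(f"die:     chi-square(5) = {die_chi_sq:.2f}, p = {die_p:.4f}")
print(f"smoking: chi-square(4) = {smoke_chi_sq:.2f}, p = {smoke_p:.4f}")
```

The same p-value function can be used as a rough check on the critical values quoted earlier: evaluating it at 11.07 with df = 5, or at 9.49 with df = 4, should give a value close to .05.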
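One (flawed) approach

Change the data to standard scores and compare to the following proportions from the normal table:

| Category            | Expected proportion if from normal population |
|---------------------|-----------------------------------------------|
| z = 3.00 or more    | .0013                                         |
| z = 2.00 to 3.00    | .0215                                         |
| z = 1.00 to 2.00    | .1359                                         |
| z = 0 to 1.00       | .3413                                         |
| z = 0 to -1.00      | .3413                                         |
| z = -1.00 to -2.00  | .1359                                         |
| z = -2.00 to -3.00  | .0215                                         |
| z = -3.00 or less   | .0013                                         |

If N = 100 then the expected frequencies would be:

| Category            | Expected frequencies |
|---------------------|----------------------|
| z = 3.00 or more    | .13                  |
| z = 2.00 to 3.00    | 2.15                 |
| z = 1.00 to 2.00    | 13.59                |
| z = 0 to 1.00       | 34.13                |
| z = 0 to -1.00      | 34.13                |
| z = -1.00 to -2.00  | 13.59                |
| z = -2.00 to -3.00  | 2.15                 |
| z = -3.00 or less   | .13                  |

The flaw is that the four tail categories have expected frequencies well below ‘5’, which runs into the small-expected-frequency problem discussed earlier.

A better approach

| Categories of z values | Expected proportions if from normal distribution |
|------------------------|--------------------------------------------------|
| z = 1.15 or more       | .125                                             |
| z = 0.68 to 1.15       | .125                                             |
| z = 0.32 to 0.68       | .125                                             |
| z = 0 to 0.32          | .125                                             |
| z = 0 to -0.32         | .125                                             |
| z = -0.32 to -0.68     | .125                                             |
| z = -0.68 to -1.15     | .125                                             |
| z = -1.15 or less      | .125                                             |

Example

| Categories of z values | Observed Frequencies of z in the sample | Expected Proportions | Expected Frequencies (N × 0.125) |
|------------------------|-----------------------------------------|----------------------|----------------------------------|
| z = 1.15 or more       | 10                                      | .125                 | 17.5                             |
| z = 0.68 to 1.15       | 25                                      | .125                 | 17.5                             |
| z = 0.32 to 0.68       | 15                                      | .125                 | 17.5                             |
| z = 0 to 0.32          | 30                                      | .125                 | 17.5                             |
| z = 0 to -0.32         | 15                                      | .125                 | 17.5                             |
| z = -0.32 to -0.68     | 20                                      | .125                 | 17.5                             |
| z = -0.68 to -1.15     | 5                                       | .125                 | 17.5                             |
| z = -1.15 or less      | 20                                      | .125                 | 17.5                             |
|                        | N = 140                                 |                      |                                  |

$$\chi^2 = \frac{(10-17.5)^2}{17.5} + \frac{(25-17.5)^2}{17.5} + \frac{(15-17.5)^2}{17.5} + \frac{(30-17.5)^2}{17.5} + \frac{(15-17.5)^2}{17.5} + \frac{(20-17.5)^2}{17.5} + \frac{(5-17.5)^2}{17.5} + \frac{(20-17.5)^2}{17.5} = 25.71$$

d.f. = 7

χ²(7) = 25.71, p = .0006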
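The equal-probability categories used in the better approach can be derived rather than looked up. Below is a minimal Python sketch, assuming Python 3.8 or later for `statistics.NormalDist`; the function name `equal_probability_cutpoints` is hypothetical. The computed cut points agree with the table up to rounding (the middle one comes out nearer 0.67 than 0.68).

```python
from statistics import NormalDist


def equal_probability_cutpoints(k):
    """z values that split the standard normal into k equally likely intervals."""
    std_normal = NormalDist()  # mean 0, standard deviation 1
    return [std_normal.inv_cdf(i / k) for i in range(1, k)]


# Seven cut points define the eight categories, each with probability .125.
cuts = equal_probability_cutpoints(8)
print([round(c, 2) for c in cuts])

# Observed frequencies from the example, listed from "z = 1.15 or more"
# down to "z = -1.15 or less".
observed = [10, 25, 15, 30, 15, 20, 5, 20]
n = sum(observed)
expected = [n * 0.125] * len(observed)

# Pearson chi-square: sum of (O_j - E_j)^2 / E_j over the eight categories.
chi_sq = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
print(f"N = {n}, chi-square({len(observed) - 1}) = {chi_sq:.2f}")
```

Choosing categories by equal probability keeps every expected frequency the same size (17.5 here), which avoids the very small expected frequencies produced by the whole-number z categories.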