Issues and Challenges

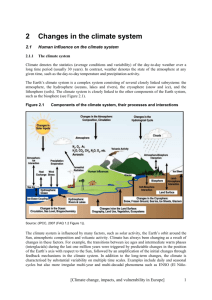

Credibility of Climate Model Projections of Future Climate: Issues and Challenges
Linda O. Mearns
National Center for Atmospheric Research
SAMSI 2011-12 Program on Uncertainty Quantification
Pleasanton, CA, August 29, 2011

“Doubt is not a pleasant condition, but certainty is an absurd one.” -Voltaire

How can we best evaluate the quality of climate models?

Different Purposes
• Reasons for establishing reliability/credibility
– for recommending what scenarios should be used for impacts assessments,
– for selecting which global models should be used to drive regional climate models,
– for differential weighting to provide better measures of uncertainty (e.g., probabilistic methods)
• Mainly going to discuss this in terms of multimodel ensembles (however, there are important limitations to the types of uncertainty that can be represented in MMEs)

Reliability, confidence, credibility
• What do we mean?
• For a long time, climate modelers/analysts would make statements that if model reproduced observations ‘well’ then we had confidence in future projections – but this really isn’t adequate – synonymous with Gabbi’s naïve view

Average Changes in Temperature and Precipitation over the Grid Boxes of the Lower 48 States
Three Climate Model 2xCO2 Experiments
Smith et al., 1989

Global Model Change in Precipitation - Summer

Relationship to Uncertainty
• Historically (and even in the most recent IPCC Reports) each climate model is given equal weight in summarizing model results. Does this make sense, given different model performances?
• Rapid new developments in how to differentially weight climate model simulations in probabilistic models of uncertainty of regional climate change

REA Method
• Summary measure of regional climate change based on weighted average of climate model responses
• Weights based on model ‘reliability’
• Model Reliability Criteria:
– Performance of AOGCM (validation)
– Model convergence (for climate change)
Giorgi and Mearns, 2002, 2003

REA Results for Temperature
A2 Scenario DJF

Summary
• REA changes differ from simple averaging method
– by a few tenths to 1 K for temperature
– by a few tenths to 10% for precipitation
• Uncertainty range is narrower in the REA method
• Overall reliability from model performance was lower than that from model convergence
• Therefore, to improve reliability, must reduce model biases

Tebaldi & Knutti, 2007

Search for ‘correct’ performance metrics for climate models – where are we
• Relative ranking of models varies depending on variable considered - points to difficulty of using one grand performance index
• Importance of evaluating a broad spectrum of climate processes and phenomena
• Remains largely unknown what aspects of observed climate must be simulated well to make reliable predictions about future climate
Gleckler et al., 2008, JGR

Model Performance over Alaska and Greenland
• RMSEs of seasonal cycles of temperature, precipitation, sea level pressure
• Tendency of models with smaller errors to simulate a larger greenhouse gas warming over the Arctic and greater increases in precipitation
• Choice of subset of models may narrow uncertainty and obtain more robust estimates of future climate change in the Arctic
Walsh et al., 2008

Selection of ‘reliable’ scenarios in the Southwest
• Evaluation of CMIP3 models for winter temperature and precipitation (using modified Giorgi and Mearns REA method)
• Reproduction of 250 mb geopotential height field (reflecting location of subtropical jet stream)
• Two models (ECHAM5, HadCM3) score best for these three variables
Dominguez et al., 2010

SW Reliability Scores
Dominguez et al., 2010

Studies where selection did not make a difference
• Pierce et al., 2009 - future average temperature over the western US – 14 randomly selected GCMs produced results indistinguishable from those produced by a subset of ‘best’ models.
• Knutti et al., 2010 – metric of precipitation trend: 11 randomly selected GCMs produced the same results as the 11 ‘best’ GCMs.

ENSEMBLES Methodological Approach
Six metrics are identified based on ERA40-driven runs
– F1: Large scale circulation and weather regimes (MeteoFrance)
– F2: Temperature and precipitation meso-scale signal (ICTP)
– F3: Pdf’s of daily precipitation and temperature (DMI, UCLM, SMHI)
– F4: Temperature and precipitation extremes (KNMI, HC)
– F5: Temperature trends (MPI)
– F6: Temperature and precipitation annual cycle (CUNI)

$$ w_R = \prod_{i=1}^{6} f_i^{n_i} $$

The result
[Figure: weights Wprod, Wredu and Wrank for each of the 15 RCMs; vertical axis from 0 to 0,3]
Christensen et al., 2010

The result
RCM                 f1     f2     f3     f4     f5     f6     Wprod  Wredu  Wrank
C4I-RCA3.0          0,058  0,050  0,067  0,044  0,066  0,069  0,026  0,058  0,057
CHMI-ALADIN         0,071  0,058  0,067  0,070  0,060  0,069  0,054  0,066  0,08
CNRM-ALADIN         0,069  0,059  0,067  0,113  0,066  0,061  0,084  0,064  0,066
DMI-HIRHAM5         0,068  0,039  0,066  0,062  0,070  0,068  0,035  0,066  0,053
ETHZ-CLM            0,075  0,073  0,067  0,036  0,059  0,069  0,038  0,067  0,073
ICTP-RegCM3         0,073  0,112  0,065  0,066  0,069  0,067  0,112  0,075  0,073
KNMI-RACMO2         0,070  0,137  0,069  0,132  0,066  0,068  0,268  0,094  0,123
Met.No-HIRHAM       0,070  0,041  0,067  0,057  0,065  0,067  0,032  0,064  0,055
Meto-HC-HadRM3Q0    0,061  0,048  0,067  0,054  0,071  0,066  0,034  0,063  0,057
Meto-HC-HadRM3Q3    0,061  0,049  0,066  0,030  0,064  0,062  0,016  0,047  0,036
Meto-HC-HadRM3Q16   0,061  0,051  0,067  0,080  0,073  0,066  0,055  0,069  0,071
MPI-REMO            0,072  0,089  0,065  0,063  0,065  0,066  0,077  0,065  0,057
OURANOS-MRCC4.2.3   0,068  0,072  0,066  0,038  0,069  0,069  0,039  0,068  0,063
SMHI-RCA3.0         0,057  0,053  0,067  0,054  0,067  0,069  0,035  0,063  0,062
UCL-PROMES          0,067  0,068  0,067  0,099  0,070  0,065  0,096  0,074  0,073

An application for 2020-2050
• Changes for European capitals 2021-2050 (Déqué, 2009; Déqué & Somot 2010)
• 17 RCMs in 5(7) GCMs
• Convert the discrete data set into continuous PDFs of climate change variables.
– This is done using a Gaussian kernel algorithm applied to the discrete dataset, so that the model-specific weights are also taken into account

Temperature Pdf
Climate Change
Pdf of daily temperature (°C) for DJF (left) and JJA (right) for 1961-1990 (solid line) and 2021-2050 (dashed line), with ENSEMBLES weights (thick line) and for a single model based on median Ranked Probability Score (thin line).
Déqué & Somot 2010

To weight or not to weight
• Recommendation from IPCC Expert Meeting on Evaluating MMEs (Knutti et al. 2010)
– Rankings or weighting could be used to select subsets of models
– But useful to test statistical significance of the difference between models and the subset vs. the full ensemble to establish if subset is meaningful
– Selection of metric is crucial - is it a truly meaningful one from a process point of view?

More process-oriented approaches

Process-oriented approaches
• Hall and Qu snow-albedo feedback example
• What we are trying in NARCCAP

SNOW ALBEDO FEEDBACK
In the AR4 ensemble, intermodel variations in snow albedo feedback strength in the seasonal cycle context are highly correlated with snow albedo feedback strength in the climate change context.

It’s possible to calculate an observed value of the SAF parameter in the seasonal cycle context based on the ISCCP data set (1984-2000) and the ERA40 reanalysis. This value falls near the center of the model distribution.

[Figure labels: observational estimate based on ISCCP; 95% confidence interval]

It’s also possible to calculate an estimate of the statistical error in the observations, based on the length of the ISCCP time series.
Comparison to the simulated values shows that most models fall outside the observed range. However, the observed error range may not be large enough because of measurement error in the observations. (Hall and Qu, 2007)

What controls the strength of snow albedo feedback?
snow cover component
snow metamorphosis component
It turns out that the snow cover component is overwhelmingly responsible not only for the overall strength of snow albedo feedback in any particular model, but also for the intermodel spread of the feedback.
Qu and Hall 2007a

Establishing Process-level Differential Credibility of Regional Scale Climate Simulations
Determining, through in-depth process-level analysis of climate simulations of current (or past) climate, the ability of the model to reproduce those aspects of the climate system most responsible for the particular regional climate; then analyzing the model response to future forcing and determining specifically how model errors in the current simulation affect the model’s response to the future forcing.
Which model errors really matter?
Essentially it is a process-based, integrated expert judgment of the degree to which the model’s response to the future forcing is deemed credible.
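
The snow albedo feedback comparison from Hall and Qu described above can be illustrated with a small numerical sketch. Every number below is hypothetical and invented for illustration (the eight "models", the observed value, and the interval half-width are not taken from Hall and Qu); the sketch only shows the three steps the slides describe: correlate seasonal-cycle and climate-change feedback strengths across models, check which models fall inside the observed interval, and read off the climate-change value implied by the observation.

```python
import numpy as np

# Hypothetical SAF strengths (% albedo change per K) for eight imaginary models.
saf_seasonal = np.array([-0.45, -0.60, -0.75, -0.85, -0.95, -1.05, -1.15, -1.30])
saf_climate_change = np.array([-0.50, -0.58, -0.80, -0.82, -1.00, -1.02, -1.20, -1.25])

# Hypothetical observed seasonal-cycle value and 95% interval half-width.
obs_value, obs_halfwidth = -0.87, 0.10

# Step 1: intermodel correlation between the two contexts.
r = np.corrcoef(saf_seasonal, saf_climate_change)[0, 1]

# Step 2: which models fall inside the observed interval?
inside = np.abs(saf_seasonal - obs_value) <= obs_halfwidth

# Step 3: least-squares line across models, evaluated at the observed value.
slope, intercept = np.polyfit(saf_seasonal, saf_climate_change, 1)
implied_climate_change_saf = slope * obs_value + intercept

print(f"intermodel correlation: {r:.2f}")
print(f"models inside observed interval: {int(inside.sum())} of {inside.size}")
print(f"climate-change SAF implied by observation: {implied_climate_change_saf:.2f}")
```

The regression step is one simple way to carry the observational estimate over to the climate-change context; it is meaningful only because the intermodel relationship is strong, and it does not replace the process-level judgment described above.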
The North American Regional Climate Change Assessment Program (NARCCAP)
www.narccap.ucar.edu
• Explores multiple uncertainties in regional and global climate model projections: 4 global climate models x 6 regional climate models
• Develops multiple high resolution regional (50 km, 30 miles) climate scenarios for use in impacts and adaptation assessments
• Evaluates regional model performance to establish credibility of individual simulations for the future
• Participants: Iowa State, PNNL, LLNL, UC Santa Cruz, Ouranos (Canada), UK Hadley Centre, NCAR
• Initiated in 2006, funded by NOAA-OGP, NSF, DOE, USEPA-ORD – 5-year program

NARCCAP Domain

Organization of Program
• Phase I: 25-year simulations using NCEP-Reanalysis boundary conditions (1980-2004)
• Phase II: Climate Change Simulations
– Phase IIa: RCM runs (50 km res.) nested in AOGCMs, current and future
– Phase IIb: Time-slice experiments at 50 km res. (GFDL and NCAR CAM3), for comparison with RCM runs
• Quantification of uncertainty at regional scales – probabilistic approaches
• Scenario formation and provision to impacts community led by NCAR
• Opportunity for double nesting (over specific regions) to include participation of other RCM groups (e.g., for NOAA OGP RISAs, CEC, New York Climate and Health Project, U. Nebraska)

Process Credibility Analysis of the Southwest
M. Bukovsky

What do we need to make further progress?
• Many more in-depth, process-oriented studies that examine plausibility of process change under future forcing - what errors really matter and which don’t?

What is the danger of false certainty?

The End

How is this done?
• Using performance metrics – e.g., Gleckler et al., 2008, Reichler and Kim, 2010
– For various weighting schemes
– For selecting good (or bad) performers
– Example from ENSEMBLES program (Christensen et al.)
– In probabilistic or selection approaches (does selection or weighting really provide different estimates? Usually not - but, example of Walsh et al., Dominguez et al.)
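
As a rough illustration of the weighting and probabilistic approaches listed above, the sketch below combines per-metric scores into a single model weight and then builds a weighted Gaussian-kernel PDF. The f1 to f6 values for three RCMs are copied from the ENSEMBLES table earlier in the talk, with decimal commas written as points. Everything else is an assumption made for illustration: the product form of the weight (suggested by the Wprod label), exponents of 1, the warming values `DELTA_T`, and the kernel bandwidth. Because the weights are normalized over three models only, they will not match the Wprod column, which is normalized over all 15 RCMs.

```python
import numpy as np

# f1..f6 for three RCMs, taken from the ENSEMBLES table in the talk.
METRICS = {
    "C4I-RCA3.0":  [0.058, 0.050, 0.067, 0.044, 0.066, 0.069],
    "ICTP-RegCM3": [0.073, 0.112, 0.065, 0.066, 0.069, 0.067],
    "KNMI-RACMO2": [0.070, 0.137, 0.069, 0.132, 0.066, 0.068],
}

# Hypothetical warming signals in K, invented for illustration only.
DELTA_T = {"C4I-RCA3.0": 1.2, "ICTP-RegCM3": 1.6, "KNMI-RACMO2": 1.9}


def product_weights(metrics, exponents=None):
    """Normalized product of per-metric scores: w proportional to prod_i f_i**n_i."""
    names = list(metrics)
    f = np.array([metrics[name] for name in names], dtype=float)
    n_i = np.ones(f.shape[1]) if exponents is None else np.asarray(exponents, dtype=float)
    raw = np.prod(f ** n_i, axis=1)
    return dict(zip(names, raw / raw.sum()))


def weighted_gaussian_pdf(values, weights, grid, bandwidth):
    """Weighted sum of Gaussian kernels centred on each model's value."""
    values = np.asarray(values, dtype=float)[:, None]
    weights = np.asarray(weights, dtype=float)[:, None]
    z = (grid[None, :] - values) / bandwidth
    kernels = np.exp(-0.5 * z ** 2) / (bandwidth * np.sqrt(2.0 * np.pi))
    return (weights * kernels).sum(axis=0)


weights = product_weights(METRICS)
names = list(METRICS)
w = np.array([weights[name] for name in names])
x = np.array([DELTA_T[name] for name in names])

grid = np.linspace(-2.0, 5.0, 701)
pdf = weighted_gaussian_pdf(x, w, grid, bandwidth=0.4)  # bandwidth is an assumed value

simple_mean = float(x.mean())
weighted_mean = float(np.sum(w * x))
print("weights:", {name: round(value, 3) for name, value in weights.items()})
print("simple mean:", round(simple_mean, 3), "weighted mean:", round(weighted_mean, 3))
```

Comparing `simple_mean` with `weighted_mean`, or the weighted PDF with an equal-weight one, is a quick way to see whether a given weighting scheme changes the estimate at all, which is the question the last bullet raises.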