Statistical Tests


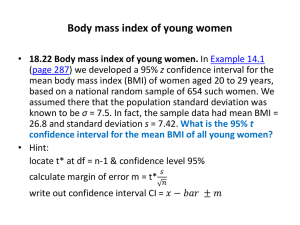

Karen H. Hagglund, M.S.
Karen.Hagglund@stjohn.org

Research: How Do I Begin?

Take It “Bird by Bird” (Anne Lamott)

Let’s Take It Step by Step...

- Identify topic
- Literature review
- Variables of interest
- Research hypothesis
- Design study
- Power analysis
- Write proposal
- Design data tools
- Committees
- Collect data
- Set up spreadsheet
- Enter data
- Statistical analysis
- Graphs
- Slides / poster
- Write paper / manuscript

Confused by Statistics?

Goals

- To understand why a particular statistical test was used for your research project
- To interpret your results
- To understand, evaluate, and present your results

Free Statistics Software

- Mystat: http://www.systat.com/MystatProducts.aspx
- List of Free Statistics Software: http://statpages.org/javasta2.html

Before Choosing a Statistical Test…

- Figure out the variable type
  - Scales of measurement (qualitative or quantitative)
- Figure out your goal
  - Compare groups
  - Measure relationship or association of variables

Scales of Measurement

- Nominal & ordinal: qualitative
- Interval & ratio: quantitative

Nominal Scale (discrete)

- Simplest scale of measurement
- Values have no numerical meaning; they are unordered categories
- Count number in each category, calculate percentage
- Examples:
  - Gender
  - Race
  - Marital status
  - Whether or not tumor recurred
  - Alive or dead

Ordinal Scale

- Variables are in categories, but with an underlying order to their values
- Rank-order categories from highest to lowest
- Intervals between categories may not be equal
- Count number in each category, calculate percentage
- Examples:
  - Cancer stages
  - Apgar scores
  - Pain ratings
  - Likert scale

Interval Scale

- Quantitative data
- Can add & subtract values
- Cannot meaningfully multiply & divide values
  - No true zero point
- Example:
  - Temperature on the Celsius scale
    - 0 °C indicates the point at which water freezes, not an absence of warmth, so 20 °C is not “twice as warm” as 10 °C

Ratio Scale (continuous)

- Quantitative data with a true zero
  - Can add, subtract, multiply & divide
- Examples:
  - Age
  - Body weight
  - Blood pressure
  - Length of hospital stay
  - Operating room time

Scales of Measurement

- Nominal & ordinal variables lead to nonparametric statistics
- Interval & ratio variables lead to parametric statistics

Two Branches of Statistics

- Descriptive
  - Frequencies & percents
  - Measures of the middle
  - Measures of variation
- Inferential
  - Nonparametric statistics
  - Parametric statistics

Descriptive Statistics

- First step in analyzing data
- Goal is to communicate results without generalizing beyond the sample to a larger group

Frequencies and Percents

- Number of times a specific value of an observation occurs (counts)
- For each category, calculate percent of sample

SMOKING

| | | Frequency | Percent | Valid Percent | Cumulative Percent |
|---|---|---|---|---|---|
| Valid | smoker | 26 | 20.5 | 24.8 | 24.8 |
| | non-smoker | 79 | 62.2 | 75.2 | 100.0 |
| | Total | 105 | 82.7 | 100.0 | |
| Missing | unknown | 22 | 17.3 | | |
| Total | | 127 | 100.0 | | |

Measures of the Middle or Central Tendency

- Mean
  - Average score: sum of all values, divided by number of values
  - Most common measure, but easily influenced by outliers
- Median
  - 50th percentile score: half of the values lie above, half below
  - Use when data are asymmetrical or skewed

Measures of Variation or Dispersion

- Standard deviation (SD)
  - Square root of the sum of squared deviations of the values from the mean, divided by the number of values minus one

$$SD = \sqrt{\frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}}$$

where $x_i$ is an individual value, $\bar{x}$ the mean value, and $n$ the number of values. Statistics packages such as SPSS report this sample SD; dividing by $n$ instead gives the population SD.

- Standard error (SE)
  - Standard deviation divided by the square root of the number of values: $SE = SD / \sqrt{n}$
- Variance
  - Square of the standard deviation
- Range
  - Difference between the largest & smallest value

nocigs_b

| | Value | Frequency | Percent | Valid Percent | Cumulative Percent |
|---|---|---|---|---|---|
| Valid | 1 | 2 | 1.6 | 7.7 | 7.7 |
| | 2 | 1 | .8 | 3.8 | 11.5 |
| | 3 | 1 | .8 | 3.8 | 15.4 |
| | 5 | 3 | 2.4 | 11.5 | 26.9 |
| | 6 | 1 | .8 | 3.8 | 30.8 |
| | 12 | 1 | .8 | 3.8 | 34.6 |
| | 13 | 1 | .8 | 3.8 | 38.5 |
| | 14 | 1 | .8 | 3.8 | 42.3 |
| | 15 | 2 | 1.6 | 7.7 | 50.0 |
| | 17 | 1 | .8 | 3.8 | 53.8 |
| | 18 | 1 | .8 | 3.8 | 57.7 |
| | 19 | 2 | 1.6 | 7.7 | 65.4 |
| | 20 | 2 | 1.6 | 7.7 | 73.1 |
| | 22 | 1 | .8 | 3.8 | 76.9 |
| | 24 | 1 | .8 | 3.8 | 80.8 |
| | 30 | 1 | .8 | 3.8 | 84.6 |
| | 39 | 1 | .8 | 3.8 | 88.5 |
| | 40 | 1 | .8 | 3.8 | 92.3 |
| | 45 | 1 | .8 | 3.8 | 96.2 |
| | 100 | 1 | .8 | 3.8 | 100.0 |
| | Total | 26 | 20.5 | 100.0 | |
| Missing | System | 101 | 79.5 | | |
| Total | | 127 | 100.0 | | |

Statistics: nocigs_b

| Statistic | Value |
|---|---|
| N (valid) | 26 |
| N (missing) | 101 |
| Mean | 19.62 |
| Std. Error of Mean | 3.985 |
| Median | 16.00 |
| Mode | 5 |
| Std. Deviation | 20.320 |
| Variance | 412.886 |
| Range | 99 |
| Minimum | 1 |
| Maximum | 100 |

Inferential Statistics

- Generalize from a sample to the population
- Nonparametric tests
  - Used for analyzing nominal & ordinal variables
  - Make no assumption that the data follow a particular distribution (such as the normal distribution)
- Parametric tests
  - Used for analyzing interval & ratio variables
  - Make assumptions about the data:
    - Normal distribution
    - Homogeneity of variance
    - Independent observations

Which Test Do I Use?

- Step 1: Know the scale of measurement
- Step 2: Know your goal
  - Is it to compare groups? How many groups do I have?
  - Is it to measure a relationship or association between variables?

Key Inferential Statistics

| Test | Type | Goal |
|---|---|---|
| Chi-square; Fisher’s exact test | Nonparametric | Association/relationship |
| t-test (unpaired, paired) | Parametric | Compare groups |
| Analysis of variance (ANOVA) | Parametric | Compare groups |
| Pearson’s correlation; linear regression | Parametric | Association/relationship |

Probability and p Values

- p < 0.05
  - If the groups were truly not different, a difference at least this large would occur less than 1 time in 20 (5%); using 0.05 as the cutoff means accepting a 5% risk of calling groups significantly different when they are not (Type I error)
- p < 0.01
  - Less than 1 in 100 (1%) chance of such a result if there is truly no difference
- p < 0.001
  - Less than 1 in 1000 (0.1%) chance of such a result if there is truly no difference

Research Hypothesis

- Topic → research question → hypothesis
- Null hypothesis (H0)
  - Predicts no effect or difference
- Alternative hypothesis (H1)
  - Predicts an effect or difference

Example

- Topic: Cancer & smoking
- Research question: Is there a relationship between smoking & cancer?
- H0: Smokers are not more likely to develop cancer than are non-smokers.
- H1: Smokers are more likely to develop cancer than are non-smokers.

Are These Categorical Variables Associated?
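To make “association” concrete, here is a minimal Python sketch (standard library only) of the two categorical tests used in this deck: the Pearson chi-square test of independence and Fisher’s exact test for a 2 × 2 table. The counts are the SMOKING × SES and LUNG_CA × SMOKING counts from the crosstabulations in these slides. The function names are hypothetical, and the p-value helper is deliberately limited to df = 1 and df = 2, where the chi-square upper tail has a closed form; it is a way to check hand calculations, not a replacement for a statistics package.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square test of independence for an r x c table of counts.

    Expected count for cell (i, j) = row_total_i * col_total_j / N, i.e. the
    count we would expect if there were no association.
    Returns (chi2, df, min_expected).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    expected = [[r * c / n for c in col_totals] for r in row_totals]
    chi2 = sum(
        (obs - exp) ** 2 / exp
        for obs_row, exp_row in zip(table, expected)
        for obs, exp in zip(obs_row, exp_row)
    )
    df = (len(table) - 1) * (len(table[0]) - 1)
    min_expected = min(min(row) for row in expected)
    return chi2, df, min_expected


def chi2_upper_tail(x, df):
    """P(chi-square >= x); this sketch only covers the closed forms for df = 1 and 2."""
    if df == 1:
        return math.erfc(math.sqrt(x / 2))
    if df == 2:
        return math.exp(-x / 2)
    raise ValueError("this sketch only handles df = 1 or df = 2")


def fisher_exact_2x2(a, b, c, d):
    """Fisher's exact test for the 2 x 2 table [[a, b], [c, d]].

    With all margins fixed, the top-left count follows a hypergeometric
    distribution. Returns (one_sided_greater, two_sided).
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    total = math.comb(n, row1)
    probs = {
        k: math.comb(col1, k) * math.comb(n - col1, row1 - k) / total
        for k in range(max(0, row1 - (n - col1)), min(row1, col1) + 1)
    }
    p_obs = probs[a]
    one_sided = sum(p for k, p in probs.items() if k >= a)
    # Two-sided: add up every table no more probable than the observed one
    # (the tiny tolerance guards against floating-point ties).
    two_sided = sum(p for p in probs.values() if p <= p_obs * (1 + 1e-7))
    return one_sided, two_sided


# SMOKING (rows: smoker, non-smoker) by SES (columns: low, middle, high)
chi2, df, min_exp = chi_square_independence([[7, 13, 6], [11, 51, 17]])
print(f"chi2 = {chi2:.3f}, df = {df}, p = {chi2_upper_tail(chi2, df):.3f}, "
      f"min expected = {min_exp:.2f}")
# chi2 = 2.630, df = 2, p = 0.268, min expected = 4.46

# LUNG_CA (rows: positive, negative) by SMOKING (columns: smoker, non-smoker)
one_sided, two_sided = fisher_exact_2x2(3, 1, 23, 78)
print(f"Fisher exact p: one-sided = {one_sided:.3f}, two-sided = {two_sided:.3f}")
# Fisher exact p: one-sided = 0.046, two-sided = 0.046
```

The expected counts computed inside `chi_square_independence` are what the “expected count less than 5” footnotes in the SPSS output refer to; when they are small, as in the LUNG_CA table, the exact hypergeometric calculation avoids the chi-square approximation altogether.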
SMOKING * SES Crosstabulation (count, % within SES)

| SMOKING | Low SES | Middle SES | High SES | Total |
|---|---|---|---|---|
| smoker | 7 (38.9%) | 13 (20.3%) | 6 (26.1%) | 26 (24.8%) |
| non-smoker | 11 (61.1%) | 51 (79.7%) | 17 (73.9%) | 79 (75.2%) |
| Total | 18 (100.0%) | 64 (100.0%) | 23 (100.0%) | 105 (100.0%) |

Chi-Square (χ²)

- Most common nonparametric test
- Use to test for association between categorical variables
- Use to test the difference between observed & expected frequencies
  - The larger the chi-square value, the more the numbers in the table differ from those we would expect if there were no association
- Limitation
  - Expected counts should be 5 or more; a common rule of thumb is that no more than 20% of cells have an expected count below 5 and none has an expected count below 1

Let’s Test For Association

- Percent smokers: Low SES 38.9%, Middle SES 20.3%, High SES 26.1%

Chi-Square Tests

| | Value | df | Asymp. Sig. (2-sided) |
|---|---|---|---|
| Pearson Chi-Square | 2.630^a | 2 | .268 |
| Likelihood Ratio | 2.476 | 2 | .290 |
| Linear-by-Linear Association | .653 | 1 | .419 |
| N of Valid Cases | 105 | | |

a. 1 cell (16.7%) has an expected count less than 5. The minimum expected count is 4.46.

Alternative to Chi-Square

- Fisher’s exact test (R. A. Fisher, 1890–1962)
  - Computes exact probabilities rather than relying on a large-sample approximation
  - Use when one or more cells have an expected count < 5
  - Use with a 2 × 2 contingency table

LUNG_CA * SMOKING Crosstabulation (count, % within SMOKING)

| LUNG_CA | smoker | non-smoker | Total |
|---|---|---|---|
| positive | 3 (11.5%) | 1 (1.3%) | 4 (3.8%) |
| negative | 23 (88.5%) | 78 (98.7%) | 101 (96.2%) |
| Total | 26 (100.0%) | 79 (100.0%) | 105 (100.0%) |

Chi-Square Tests

| | Value | df | Asymp. Sig. (2-sided) | Exact Sig. (2-sided) | Exact Sig. (1-sided) |
|---|---|---|---|---|---|
| Pearson Chi-Square | 5.633^b | 1 | .018 | | |
| Continuity Correction^a | 3.179 | 1 | .075 | | |
| Likelihood Ratio | 4.664 | 1 | .031 | | |
| Fisher’s Exact Test | | | | .046 | .046 |
| Linear-by-Linear Association | 5.580 | 1 | .018 | | |
| N of Valid Cases | 105 | | | | |

a. Computed only for a 2 × 2 table.
b. 2 cells (50.0%) have an expected count less than 5. The minimum expected count is .99.

Do These Groups Differ?

Group Statistics (BMI)

| SMOKING | N | Mean | Std. Deviation | Std. Error Mean |
|---|---|---|---|---|
| smoker | 26 | 25.1846 | 5.27209 | 1.03394 |
| non-smoker | 79 | 26.2228 | 5.47664 | .61617 |

Unpaired t-test or Student’s t-test (William Gosset, 1876–1937, who published as “Student”)

- Frequently used statistical test
- Use when there are two independent groups
- Test for a difference between groups
  - Is the difference in sample means due to their natural variability or to a real difference between the groups in the population?
- Outcome (dependent variable) is interval or ratio
- Assumptions of normality, homogeneity of variance & independence of observations

Let’s Test For A Difference

- Smokers’ BMI = 25.18 ± 5.27 (mean ± SD)
- Non-smokers’ BMI = 26.22 ± 5.48

Independent Samples Test (BMI)

| | Levene’s F | Levene’s Sig. | t | df | Sig. (2-tailed) | Mean Difference | Std. Error Difference | 95% CI Lower | 95% CI Upper |
|---|---|---|---|---|---|---|---|---|---|
| Equal variances assumed | .079 | .779 | -.846 | 103 | .400 | -1.0382 | 1.22719 | -3.47200 | 1.39566 |
| Equal variances not assumed | | | -.863 | 44.127 | .393 | -1.0382 | 1.20362 | -3.46371 | 1.38737 |

Do These Groups Differ?

- Light smoker: < 1 pack/day
- Heavy smoker: ≥ 1 pack/day

Descriptives (BMI)

| | N | Mean | Std. Deviation | Std. Error | 95% CI for Mean: Lower | 95% CI for Mean: Upper | Minimum | Maximum |
|---|---|---|---|---|---|---|---|---|
| non-smoker | 79 | 26.2228 | 5.47664 | .61617 | 24.9961 | 27.4495 | 17.70 | 40.20 |
| light smoker | 17 | 26.1765 | 4.96154 | 1.20335 | 23.6255 | 28.7275 | 18.90 | 35.00 |
| heavy smoker | 9 | 23.3111 | 5.62015 | 1.87338 | 18.9911 | 27.6311 | 17.90 | 35.90 |
| Total | 105 | 25.9657 | 5.42028 | .52896 | 24.9168 | 27.0147 | 17.70 | 40.20 |

Analysis of Variance (ANOVA) or F-test

- Three or more independent groups
- Test for a difference between groups
  - Is the difference in sample means due to their natural variability or to a real difference between the groups in the population?
- Outcome (dependent variable) is interval or ratio
- Assumptions of normality, homogeneity of variance & independence of observations

Let’s Test For A Difference

- Non-smokers’ BMI = 26.22 ± 5.48 (mean ± SD)
- Light smokers’ BMI = 26.18 ± 4.96
- Heavy smokers’ BMI = 23.31 ± 5.62

ANOVA (BMI)

| | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between Groups | 69.398 | 2 | 34.699 | 1.185 | .310 |
| Within Groups | 2986.058 | 102 | 29.275 | | |
| Total | 3055.457 | 104 | | | |

Is There a Relationship Between the Variables?

| No_Cigs | BMI |
|---|---|
| 1 | 30.1 |
| 1 | 18.9 |
| 2 | 22.8 |
| 3 | 22.6 |
| 5 | 24.2 |
| 5 | 26.2 |
| 5 | 33.3 |
| 6 | 19.1 |
| 12 | 35 |
| 13 | 23 |
| 14 | 22.2 |
| 15 | 28.7 |
| 15 | 28.6 |
| 17 | 24.3 |
| 18 | 30.9 |
| 19 | 22.5 |
| 19 | 32.6 |
| 20 | 19 |
| 20 | 26.7 |
| 22 | 18.8 |
| 24 | 23.4 |
| 30 | 23.2 |
| 39 | 25 |
| 40 | 35.9 |
| 45 | 17.9 |
| 100 | 19.9 |

Pearson’s Correlation (Karl Pearson, 1857–1936)

- Measures the degree of linear relationship between two variables
- Assumptions:
  - Variables are normally distributed
  - Relationship is linear
  - Both variables are measured on the interval or ratio scale
  - Variables are measured on the same subjects

Scatterplots

[Figure: example scatterplots showing perfect positive correlation, perfect negative correlation, and no correlation]

- r ranges from −1.0 (perfect negative correlation) through 0 (no correlation) to +1.0 (perfect positive correlation)

Let’s Test For A Relationship

Correlations

| | | NOCIGS_B | BMI |
|---|---|---|---|
| NOCIGS_B | Pearson Correlation | 1 | -.169 |
| | Sig. (2-tailed) | . | .410 |
| | N | 26 | 26 |
| BMI | Pearson Correlation | -.169 | 1 |
| | Sig. (2-tailed) | .410 | . |
| | N | 26 | 105 |

[Figure: scatterplot of BMI (y-axis) against NOCIGS_B (x-axis)]

Interpretation of Results

- The size of the p value does not indicate the importance of the result
- Appropriate interpretation of statistical test
  - Group differences
  - Association or relationship
  - “Correlation does not imply causation”

Don’t Lie With Statistics!
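The unpaired t-test and the one-way ANOVA depend only on each group’s size, mean, and SD, so their test statistics can be rebuilt from the Group Statistics and Descriptives tables alone. Below is a minimal standard-library Python sketch under that assumption: `pooled_t` corresponds to the “Equal variances assumed” row, `welch_t` to the “Equal variances not assumed” row, and `one_way_anova` rebuilds the between- and within-group sums of squares. The function names are hypothetical. Computing t-test p-values needs the t distribution, which this sketch omits; the ANOVA p-value uses a closed form that holds only when df between = 2 (three groups).

```python
import math


def pooled_t(n1, m1, s1, n2, m2, s2):
    """Student's t assuming equal variances, from each group's n, mean and SD."""
    df = n1 + n2 - 2
    sp2 = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df  # pooled variance
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df, se


def welch_t(n1, m1, s1, n2, m2, s2):
    """Welch's t (equal variances not assumed) with Welch-Satterthwaite df."""
    v1, v2 = s1 ** 2 / n1, s2 ** 2 / n2
    se = math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return (m1 - m2) / se, df, se


def one_way_anova(groups):
    """One-way ANOVA from a list of (n, mean, SD) tuples, one per group."""
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    ss_within = sum((n - 1) * s ** 2 for n, _, s in groups)
    df_between, df_within = len(groups) - 1, n_total - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    # With df_between = 2 (three groups) the F upper tail has a closed form;
    # other cases would need a statistics library.
    p = (1 + 2 * f / df_within) ** (-df_within / 2) if df_between == 2 else None
    return {"SS between": ss_between, "SS within": ss_within,
            "df between": df_between, "df within": df_within, "F": f, "p": p}


# BMI: (n, mean, SD) for smokers and non-smokers
smokers, non_smokers = (26, 25.1846, 5.27209), (79, 26.2228, 5.47664)
t, df, se = pooled_t(*smokers, *non_smokers)
print(f"equal variances assumed:     t = {t:.3f}, df = {df}, SE = {se:.5f}")
t_w, df_w, se_w = welch_t(*smokers, *non_smokers)
print(f"equal variances not assumed: t = {t_w:.3f}, df = {df_w:.3f}, SE = {se_w:.5f}")

# BMI: non-smokers, light smokers, heavy smokers
anova = one_way_anova([(79, 26.2228, 5.47664), (17, 26.1765, 4.96154), (9, 23.3111, 5.62015)])
print(f"F({anova['df between']}, {anova['df within']}) = {anova['F']:.3f}, p = {anova['p']:.3f}")
```

Because the inputs are the rounded means and SDs printed on the slides, a result can differ from SPSS in the last decimal place (the between-groups sum of squares comes out near 69.399 rather than 69.398). If SciPy is available, `scipy.stats.ttest_ind_from_stats` with `equal_var=True` or `equal_var=False` should reproduce the two t values and also supply their p-values.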
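The 26 smokers’ No_Cigs and BMI values listed on the correlation slide are enough to reproduce both the nocigs_b descriptive statistics and the Pearson correlation. Below is a minimal standard-library Python sketch; it assumes the two columns are paired row by row in the order listed, and the helper names `describe` and `pearson_r` are illustrative rather than taken from the original.

```python
import math
import statistics

# No_Cigs and BMI for the 26 smokers, paired in the order listed on the slide
no_cigs = [1, 1, 2, 3, 5, 5, 5, 6, 12, 13, 14, 15, 15,
           17, 18, 19, 19, 20, 20, 22, 24, 30, 39, 40, 45, 100]
bmi = [30.1, 18.9, 22.8, 22.6, 24.2, 26.2, 33.3, 19.1, 35, 23, 22.2, 28.7, 28.6,
       24.3, 30.9, 22.5, 32.6, 19, 26.7, 18.8, 23.4, 23.2, 25, 35.9, 17.9, 19.9]


def describe(values):
    """Descriptive statistics; SD and variance use the sample (n - 1) divisor."""
    n = len(values)
    sd = statistics.stdev(values)
    return {
        "N": n,
        "Mean": statistics.mean(values),
        "Std. Error of Mean": sd / math.sqrt(n),
        "Median": statistics.median(values),
        "Mode": statistics.mode(values),
        "Std. Deviation": sd,
        "Variance": statistics.variance(values),
        "Range": max(values) - min(values),
    }


def pearson_r(x, y):
    """Pearson's r: sum of cross-products of deviations over sqrt(Sxx * Syy)."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


summary = describe(no_cigs)
for name, value in summary.items():
    print(f"{name:>18}: {value:.3f}")
print(f"Pearson r (No_Cigs, BMI) = {pearson_r(no_cigs, bmi):.3f}")
```

`statistics.stdev` and `statistics.variance` divide by n − 1, matching the SPSS output; `statistics.pstdev` would divide by n and give the population SD instead. On Python 3.10 and later, `statistics.correlation(no_cigs, bmi)` returns the same r as `pearson_r`.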