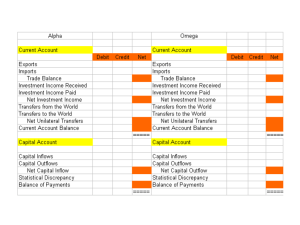

Alpha to Omega and Beyond!

Presented by Michael Toland, Educational Psychology, & Dominique Zephyr, Applied Statistics Lab

Hypothetical Experiment
- Suppose you measured a person's perceived self-efficacy with the General Self-Efficacy Scale (GSES) 1,000 times
- Suppose the measurements we observe vary between 13 and 17 points
- The person's perceived self-efficacy score has seemingly remained constant, yet the measurements fluctuate
- The problem is that it is difficult to get at the true score because of random errors of measurement

True Score vs. Observed Score
- An observed score is made up of 2 components: Observed Score = True Score + Random Error
- True score is a person's average score over repeated (necessarily hypothetical) administrations
- The 1,000 test scores are observed scores

Reliability Estimation: Classical Test Theory (CTT)
- Across people we define the variability among scores in a similar way: $S_X^2 = S_T^2 + S_E^2$
- Reliability is the ratio of true-score variance to observed-score variance
- According to CTT: $\rho_{XX'} = \sigma_T^2 / \sigma_X^2$
- Unfortunately, the true-score variability can't be measured directly, so we have figured out other ways of estimating how much of a score is due to true score and how much to measurement error

Concept of Reliability
- Reliability is not an all-or-nothing concept; there are degrees of reliability
- High reliability tells us that if people were retested they would probably get similar scores on different versions of a test
- It is a property of a set of test scores, not of a test
- But we are not interested in just performance on a set of items

Why is reliability important?
- Reliability affects not only observed-score interpretations; poor reliability estimates can also lead to
  - deflated effect size estimates
  - nonsignificant results
- When something is more reliable, it is closer to the true score
  - The more we can differentiate among individuals of different levels
- When something is less reliable, it is further away from the true score
  - The less it differentiates among individuals

Measurement Models Under CTT

| Assumptions | Parallel | Tau-Equivalent | Essentially Tau-Equivalent | Congeneric |
| --- | --- | --- | --- | --- |
| Unidimensional | Y | Y | Y | Y |
| Equal item covariances | Y | Y | Y | |
| Equal item-construct relations | Y | Y | Y | |
| Equal item variance | Y | Y | | |
| Equal item error variance | Y | | | |

- Alpha ($\alpha$): Cronbach (1951)
- Omega ($\omega$): McDonald (1970, 1999)

Coefficient Alpha

$$\hat{\alpha} = \frac{\mathrm{cov}_{avg} \, k^2}{\mathrm{ScaleV}}$$

- $\mathrm{cov}_{avg}$ = average covariance among items
- $k$ = the number of items
- $\mathrm{ScaleV}$ = scale score variance

Why have we been using alpha for so long?
- Simple equation
- Easily calculated in standard software (default)
- Tradition
- Easy to understand
- Researchers are not aware of other approaches

Problems with alpha ($\alpha$)
- It is unrealistic to assume that all items have the same item-construct relation and that all item covariances are equal
- Underestimates the population reliability coefficient when a congeneric model is assumed

What do we gain with Omega?
- Does not assume all items have the same item-construct relations and equal item covariances (assumptions relaxed)
- More consistent (precise) estimator of reliability
- Not as difficult to estimate as folks have come to believe

One-Factor CFA Model

[Path diagram: one latent factor, Perceived General Self-Efficacy, with its variance fixed at 1, measured by Item1 through Item6; each item has its own loading and error term.]

Coefficient Omega

$$\omega = \frac{\left(\sum_{i=1}^{k} \lambda_i\right)^2}{\left(\sum_{i=1}^{k} \lambda_i\right)^2 + \sum_{i=1}^{k} \theta_{ii}}$$

- $\lambda_i$ = factor pattern loading for item $i$
- $k$ = the number of items
- $\theta_{ii}$ = unique variance of item $i$
- Assumes the latent variance is fixed at 1 within the CFA framework

Include CI along with Reliability Point Estimate
- A CI gives a range that estimates the true population value for reliability, while acknowledging the uncertainty in our reliability estimate
- Recommended by APA and most peer-reviewed journals

[Slide: Mplus input for alpha ($\alpha$)]

[Slide: Mplus output for alpha ($\alpha$)]

[Slide: Mplus input for omega ($\omega$)]

[Slide: Mplus output for omega ($\omega$)]

APA style write-up for coefficients with CIs
- $\alpha$ = .61, Bootstrap corrected [BC] 95% CI [.56, .66]
- $\omega$ = .62, Bootstrap corrected [BC] 95% CI [.56, .66]

Limitations of CTT Reliability Coefficients and Future QIPSR Talks
- Although omega is a better estimate of reliability than alpha, CTT still assumes a constant amount of reliability exists across the score continuum
- However, it is well known in the measurement community that reliability/precision is conditional on a person's location along the continuum
- Modern measurement techniques such as Item Response Theory (IRT) do not make this assumption and focus on items instead of the total scale itself

References
- Revelle, W., & Zinbarg, R. E. (2008). Coefficients alpha, beta, omega, and the glb: Comments on Sijtsma. Psychometrika, 74, 145-154. doi:10.1007/s11336-008-9102-z
- Gadermann, A. M., Guhn, M., & Zumbo, B. D. (2012). Estimating ordinal reliability for Likert-type and ordinal item response data: A conceptual, empirical, and practical guide. Practical Assessment, Research and Evaluation, 17. Retrieved from: http://pareonline.net/getvn.asp?v=17&n=3
- Zumbo, B. D., Gadermann, A. M., & Zeisser, C. (2007). Ordinal versions of coefficients alpha and theta for Likert rating scales. Journal of Modern Applied Statistical Methods, 6. Retrieved from: http://digitalcommons.wayne.edu/jmasm/vol6/iss1/4
- Starkweather, J. (2012). Step out of the past: Stop using coefficient alpha; there are better ways to calculate reliability. Benchmarks RSS Matters. Retrieved from: http://web3.unt.edu/benchmarks/issues/2012/06/rss-matters
- Sijtsma, K. (2009). On the use, the misuse, and the very limited usefulness of Cronbach's alpha. Psychometrika, 74, 107-120. doi:10.1007/s11336-008-9101-0
- Peters, G.-J. Y. (2014). The alpha and the omega of scale reliability and validity: Why and how to abandon Cronbach's alpha and the route towards more comprehensive assessment of scale quality. The European Health Psychologist, 16, 56–69. Retrieved from: http://www.ehps.net/ehp/issues/2014/v16iss2April2014/4%20%20Peters%2016_2_EHP_April%202014.pdf
- Crutzen, R. (2014). Time is a jailer: What do alpha and its alternatives tell us about reliability? The European Health Psychologist, 16, 70-74. Retrieved from: http://www.ehps.net/ehp/issues/2014/v16iss2April2014/5%20Crutzen%2016_2_EHP_April%202014.pdf
- Dunn, T., Baguley, T., & Brunsden, V. (2014). From alpha to omega: A practical solution to the pervasive problem of internal consistency estimation. British Journal of Psychology, 105, 399-412. doi:10.1111/bjop.12046
- Geldhof, G., Preacher, K. J., & Zyphur, M. J. (2014). Reliability estimation in a multilevel confirmatory factor analysis framework. Psychological Methods, 19, 72-91. doi:10.1037/a0032138

Acknowledgements
- APS Lab members: Angela Tobmbari, Zijia Li, Caihong Li, Abbey Love, Mikah Pritchard
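
Supplementary sketch: the coefficient alpha and coefficient omega formulas from the slides can be tried out in a few lines of Python. This is only an illustration under stated assumptions, not the presenters' Mplus procedure. The six loadings are hypothetical values chosen to mimic a congeneric model, the data are simulated rather than GSES responses, and omega is computed from the assumed population loadings instead of from a fitted CFA. The function names are made up for this example.

```python
import random
from statistics import mean, variance


def covariance(x, y):
    """Sample covariance (n - 1 denominator) of two equal-length lists."""
    mx, my = mean(x), mean(y)
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / (len(x) - 1)


def coefficient_alpha(items):
    """Alpha = k^2 * average inter-item covariance / scale score variance.

    `items` is a list of k columns, one list of scores per item.
    """
    k = len(items)
    covs = [covariance(items[i], items[j])
            for i in range(k) for j in range(k) if i != j]
    cov_avg = mean(covs)
    scale_scores = [sum(row) for row in zip(*items)]
    return cov_avg * k ** 2 / variance(scale_scores)


def coefficient_omega(loadings, unique_variances):
    """Omega from one-factor loadings, with the latent variance fixed at 1."""
    common = sum(loadings) ** 2
    return common / (common + sum(unique_variances))


def simulate_items(loadings, unique_variances, n, rng):
    """Draw n respondents from a one-factor model: item = loading * factor + error."""
    columns = [[] for _ in loadings]
    for _ in range(n):
        factor = rng.gauss(0.0, 1.0)
        for col, lam, theta in zip(columns, loadings, unique_variances):
            col.append(lam * factor + rng.gauss(0.0, theta ** 0.5))
    return columns


# Hypothetical congeneric model: six items with unequal loadings (assumed values).
LOADINGS = [0.8, 0.7, 0.6, 0.5, 0.4, 0.3]
UNIQUE = [1 - lam ** 2 for lam in LOADINGS]

rng = random.Random(2014)
data = simulate_items(LOADINGS, UNIQUE, n=2000, rng=rng)
alpha_hat = coefficient_alpha(data)
omega_pop = coefficient_omega(LOADINGS, UNIQUE)
print(f"sample alpha = {alpha_hat:.3f}, population omega = {omega_pop:.3f}")
```

With these assumed loadings the population value of alpha is slightly below the population omega, which is in line with the slide's point that alpha underestimates reliability under a congeneric model. A bootstrap CI could be obtained by resampling respondents and recomputing the coefficient, although that would be a plain percentile interval rather than the bias-corrected one reported in the write-up.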