

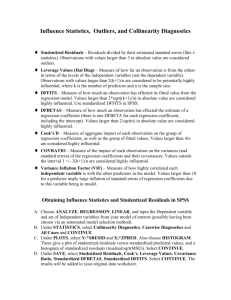

Regression Diagnostics
Checking Assumptions and Data

Questions
• What is the linearity assumption? How can you tell if it seems met?
• What is homoscedasticity (heteroscedasticity)? How can you tell if it's a problem?
• What is an outlier?
• What is leverage?
• What is a residual?
• How can you use residuals in assuring that the regression model is a good representation of the data?
• What is a studentized residual?

Linear Model Assumptions
• Linear relations between X and Y
• Independent errors
• Normal distribution for errors and Y
• Equal variance of errors: homoscedasticity (equal spread of error in Y across levels of X)

Good-Looking Graph
[Figure: scatterplot of Y against X.] No apparent departures from line.

Problem with Linearity
[Figure: scatterplot of Miles per Gallon against Horsepower; R Sq Linear = 0.595.]

Problem with Heteroscedasticity
[Figure: scatterplot of Y against X.] Common problem when Y = $.

Outliers
[Figure: scatterplot of Y against X with one point labeled "Outlier".] Outlier = pathological point.

Residual Plots
• Histogram of Residuals
• Residuals vs Fitted Values
• Residuals vs Predictor Variable
• Normal Q-Q Plots
• Studentized Residuals or Standardized Residuals

Residuals
• Standardized residual: $\text{standardized residual}_i = \dfrac{\text{residual}_i}{\text{standard deviation}}$. Look for large values (some say absolute values greater than 2).
• Studentized residual: The studentized residual also considers the distance of the point from the mean of X. The farther X is from the mean, the smaller the standard error of the residual and the larger the studentized residual. Look for large values.
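The two kinds of residuals described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the slides' own code: the function name `residual_diagnostics` and the sample data are hypothetical, and the "studentized" residual is assumed to be the internally studentized form, residual / (s · sqrt(1 − h_ii)), where h_ii is the leverage. Note that textbooks and software differ on which quantity they call "standardized" and which "studentized".

```python
import numpy as np

def residual_diagnostics(x, y):
    """Fit y = b0 + b1*x by least squares and return raw, standardized,
    and (internally) studentized residuals."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    X = np.column_stack([np.ones(n), x])           # design matrix with intercept
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)   # least-squares coefficients
    resid = y - X @ beta                           # raw residuals
    p = X.shape[1]                                 # number of coefficients
    s = np.sqrt(resid @ resid / (n - p))           # residual standard deviation
    # Leverage h_ii: diagonal of the hat matrix X (X'X)^-1 X'
    h = np.einsum("ij,jk,ik->i", X, np.linalg.inv(X.T @ X), X)
    standardized = resid / s                       # residual / standard deviation
    studentized = resid / (s * np.sqrt(1.0 - h))   # also accounts for distance of x from its mean
    return resid, standardized, studentized

# Hypothetical data: the last point lies far from the mean of x.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 12.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1, 30.0])
resid, standardized, studentized = residual_diagnostics(x, y)
flagged = np.where(np.abs(studentized) > 2)[0]     # "some say" rule of thumb
print(flagged)
```

Because sqrt(1 − h_ii) is at most 1, the studentized value is never smaller in magnitude than the standardized one, and the gap grows for points far from the mean of X.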
Residual Plots
[Figure: four residual plots (response is Crimrate): Normal Probability Plot of the Residuals (Normal Score vs Residual); Histogram of the Residuals (Frequency vs Residual); Residuals Versus the Fitted Values (Residual vs Fitted Value); Residuals Versus the Order of the Data (Residual vs Observation Order).]

Abnormal Patterns in Residual Plots
• Figures a), b): Non-linearity
• Figure c): Autocorrelation
• Figure d): Heteroscedasticity

Patterns of Outliers
a) Outlier is extreme in both X and Y but not in pattern. Removal is unlikely to alter the regression line.
b) Outlier is extreme in both X and Y as well as in the overall pattern. Inclusion will strongly influence the regression line.
c) Outlier is extreme for X, nearly average for Y.
d) Outlier is extreme in Y, not in X.
e) Outlier is extreme in pattern, but not in X or Y.

Influence Analysis
• Leverage: h_ii (see page 8)
• Leverage is an index of the importance of an observation to a regression analysis.
  – Function of X only
  – Large deviations from the mean are influential
  – Maximum is 1; minimum is 1/n
  – It is considered large if it exceeds 3p/n (p = number of predictors including the constant).

Cook's distance
• Measures the influence of a data point on the regression equation, i.e. the effect of deleting a given observation. Data points with large residuals (outliers) and/or high leverage tend to have large values.
• Cook's D > 1 requires careful checking (such points are influential); > 4 suggests potentially serious outliers.
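Leverage and Cook's distance can both be computed from a single fit. The sketch below assumes the standard closed form D_i = e_i² h_ii / (p s² (1 − h_ii)²), which is equivalent to refitting without observation i; the function name `influence_measures` and the data are hypothetical, while the cutoffs are the ones quoted above.

```python
import numpy as np

def influence_measures(X, y):
    """Return leverage h_ii and Cook's distance for each observation.

    X is the design matrix, including the column of ones for the constant.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    XtX_inv = np.linalg.inv(X.T @ X)
    h = np.einsum("ij,jk,ik->i", X, XtX_inv, X)    # diagonal of the hat matrix
    beta = XtX_inv @ X.T @ y                       # least-squares coefficients
    resid = y - X @ beta
    s2 = resid @ resid / (n - p)                   # residual variance estimate
    cooks_d = resid**2 / (p * s2) * h / (1.0 - h) ** 2
    return h, cooks_d

# Hypothetical data: one point far from the mean of x and off the trend.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 12.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1, 20.0])
X = np.column_stack([np.ones(len(x)), x])
h, cooks_d = influence_measures(X, y)

n, p = X.shape
high_leverage = np.where(h > 3 * p / n)[0]         # 3p/n rule from the slide
influential = np.where(cooks_d > 1)[0]             # Cook's D > 1 rule from the slide
print(high_leverage, influential)
```

Leverage depends only on X, so it can be inspected before the response is even collected; Cook's distance combines that leverage with the size of the residual.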
Sensitivity in Inference
• All tests and intervals are very sensitive to even minor departures from independence.
• All tests and intervals are sensitive to moderate departures from equal variance.
• The hypothesis tests and confidence intervals for β0 and β1 are fairly "robust" (that is, forgiving) against departures from normality.
• Prediction intervals are quite sensitive to departures from normality.

Remedies
• If important predictor variables are omitted, see whether adding the omitted predictors improves the model.
• If there are unequal error variances, try transforming the response and/or predictor variables, or use "weighted least squares regression."
• If an outlier exists, try using a "robust estimation procedure."
• If error terms are not independent, try fitting a "time series model."
• If the mean of the response is not a linear function of the predictors, try a different function.
• For example, polynomial regression involves transforming one or more predictor variables while remaining within the multiple linear regression framework.
• For another example, applying a logarithmic transformation to the response variable also allows for a nonlinear relationship between the response and the predictors.

Data Transformation
• The usual approach for dealing with nonconstant variance, when it occurs, is to apply a variance-stabilizing transformation.
• For some distributions, the variance is a function of E(Y).
• Box-Cox transformation
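As a rough illustration of the Box-Cox idea, the sketch below uses the usual one-parameter family, (y^λ − 1)/λ for λ ≠ 0 and log y for λ = 0, and picks λ from a grid by maximizing the profile log-likelihood of a simple linear regression. The slides do not specify a procedure, so the function names, the grid, and the synthetic data are all assumptions made for the example; the response must be strictly positive.

```python
import numpy as np

def box_cox(y, lam):
    """Box-Cox transform of a strictly positive response."""
    y = np.asarray(y, dtype=float)
    if abs(lam) < 1e-12:
        return np.log(y)                 # limiting case lambda = 0
    return (y**lam - 1.0) / lam

def profile_loglik(x, y, lam):
    """Profile log-likelihood (up to a constant) of lambda for the
    regression of box_cox(y, lam) on x with an intercept."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    z = box_cox(y, lam)
    X = np.column_stack([np.ones(n), x])
    beta, *_ = np.linalg.lstsq(X, z, rcond=None)
    rss = np.sum((z - X @ beta) ** 2)
    # The second term is the log-Jacobian of the transformation.
    return -0.5 * n * np.log(rss / n) + (lam - 1.0) * np.sum(np.log(y))

def choose_lambda(x, y, grid):
    """Return the grid value of lambda with the highest profile log-likelihood."""
    scores = [profile_loglik(x, y, lam) for lam in grid]
    return float(grid[int(np.argmax(scores))])

# Synthetic example: y grows exponentially in x, so a log transform
# (lambda near 0) should linearize the relationship.
rng = np.random.default_rng(0)
x = np.arange(1.0, 21.0)
y = np.exp(0.5 + 0.3 * x + rng.normal(scale=0.05, size=x.size))
lam_hat = choose_lambda(x, y, np.linspace(-2.0, 2.0, 41))
print(lam_hat)
```

In practice λ is often rounded to an interpretable value (for example 0 for a log, 0.5 for a square root), and the residual plots are rechecked on the transformed scale.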