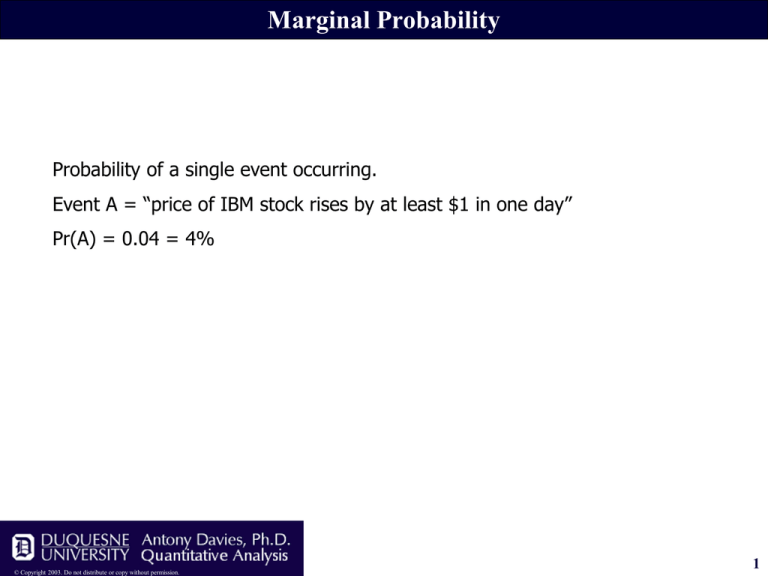

Marginal Probability

Probability of a single event occurring.

Event A = “price of IBM stock rises by at least $1 in one day”

Pr(A) = 0.04 = 4%

© Copyright 2003. Do not distribute or copy without permission.


Joint Probability

Probability of all of multiple events occurring.

Event A = “price of IBM stock rises by at least $1 in one day”

Event B = “price of GE stock rises by at least $1 in one day”

Pr(A) = 0.04 = 4%

Pr(B) = 0.03 = 3%

“Probability of both IBM and GE rising by at least $1 in one day”

= Pr(A and B) = 0.02 = 2%


Joint Probability

Two events are independent if the occurrence of one is not contingent on the

occurrence of the other.

A = “Price of IBM rises by at least $1 in one day.”

B = “Price of IBM rises by at least $1 in one week.”

The events are not independent because an increase in the probability of A implies an increase in the probability of B.

For independent events:

Pr(A and B) = Pr(A) Pr(B)


Disjoint Probability

Probability of any of multiple events occurring.

Event A = “price of IBM stock rises by at least $1 in one day”

Event B = “price of GE stock rises by at least $1 in one day”

Pr(A) = 0.04 = 4%

Pr(B) = 0.03 = 3%

Pr(A and B) = 0.02 = 2%

Pr(A or B) = Pr(A) + Pr(B) – Pr(A and B)

“Probability of either IBM or GE rising by at least $1 in one day”

= Pr(A or B) = 0.04 + 0.03 – 0.02 = 0.05 = 5%


Venn Diagram

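[Venn diagram: two overlapping circles, A and B, inside a rectangle. Area 1 is the region outside both circles, area 2 is the part of A outside B, area 3 is the overlap of A and B, and area 4 is the part of B outside A.]

| Area | Meaning |
| --- | --- |
| 1 | A’ and B’ |
| 2 | A and B’ |
| 3 | A and B |
| 4 | A’ and B |
| 23 | A |
| 34 | B |
| 124 | A’ or B’ |
| 123 | A or B’ |
| 234 | A or B |
| 134 | A’ or B |
| 1234 | A or A’ |
| 1234 | B or B’ |
| Empty | A and A’ |
| Empty | B and B’ |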

Venn Diagram

A = “Price of IBM stock rises by at least $1 in one day.”

B = “Price of GE stock rises by at least $1 in one day.”

[Venn diagram: A only = 0.02, A and B = 0.02, B only = 0.01, outside both circles = 1 – 0.02 – 0.02 – 0.01 = 0.95]

Pr(A and B) = 0.02

Pr(A) = 0.04

Pr(B) = 0.03

Venn Diagram

A = “Price of IBM stock rises by at least $1 in one day.”

B = “Price of GE stock rises by at least $1 in one day.”

[Venn diagram: A only = 0.02, A and B = 0.02, B only = 0.01, outside both circles = 0.95]

What is the probability of the price of GE rising by at least $1 and the price of IBM not rising by at least $1?

Pr(B and A’) = Pr(A’ and B) = 0.01

What is the probability of neither the price of IBM rising by at least $1 nor the price of GE rising by at least $1?

Pr(A’ and B’) = 0.95
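The Venn-diagram bookkeeping can be checked with a few lines of Python. This is a minimal sketch that assumes the three region probabilities shown in the diagram (0.02, 0.02, 0.01); the dictionary keys are illustrative names, not part of the slides.

```python
# Region probabilities read off the Venn diagram (keys are illustrative names).
regions = {
    "A_only": 0.02,  # A and B'
    "both": 0.02,    # A and B
    "B_only": 0.01,  # A' and B
}
# Everything outside both circles: A' and B'.
regions["neither"] = 1 - sum(regions.values())

# Marginal probabilities are sums of the regions inside each circle.
pr_a = regions["A_only"] + regions["both"]
pr_b = regions["B_only"] + regions["both"]

# Addition rule: Pr(A or B) = Pr(A) + Pr(B) - Pr(A and B).
pr_a_or_b = pr_a + pr_b - regions["both"]

print(f"Pr(A) = {pr_a:.2f}, Pr(B) = {pr_b:.2f}")
print(f"Pr(A or B) = {pr_a_or_b:.2f}")
print(f"Pr(A' and B') = {regions['neither']:.2f}")
```

Summing regions rather than memorizing formulas makes it easy to answer any of the "and / or / not" questions in the area table.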


Conditional Probability

Probability of an event occurring given that another event has already

occurred.

Event A = “price of IBM stock rises by at least $1 in one day”

Event B = “price of IBM stock rises by at least $1 in same week”

Pr(B|A) = Pr(A and B) / Pr(A)

Pr(A|B) = Pr(A and B) / Pr(B)

Pr(A) = 0.04

Pr(B) = 0.02

Pr(A and B) = 0.01

Pr(B|A) = 0.01 / 0.04 = 0.25

Pr(A|B) = 0.01 / 0.02 = 0.50


Conditional Probability

A = “Price of IBM stock rises by at least $1 in one day.”

B = “Price of IBM stock rises by at least $1 in same week.”

[Venn diagram: A only = 0.03, A and B = 0.01, B only = 0.01]

Pr(A and B) = 0.01

Pr(A) = 0.04

Pr(B) = 0.02

Pr(A|B) = 0.01 / (0.01 + 0.01) = 0.50

Pr(B|A) = 0.01 / (0.01 + 0.03) = 0.25

Conditional Probability

Table shows number of NYC police officers promoted and not promoted.

Question: Did the force exhibit gender discrimination in promoting?

| | Promoted | Not Promoted |
| --- | --- | --- |
| Male | 288 | 672 |
| Female | 36 | 204 |

Define the events. There are two events:

1. An officer can be male.
2. An officer can be promoted.

Being female is not a separate event; it is “not being male.”

Events

M = Being male

M’ = Being female

P = Being promoted

P’ = Not being promoted

Conditional Probability

[Venn diagram of the promotion table: M only (male, not promoted) = 672 officers = 56%; M and P (male, promoted) = 288 officers = 24%; P only (female, promoted) = 36 officers = 3%; outside both circles (female, not promoted) = 204 officers = 17%]

Divide all areas by 1,200 to find the probability associated with each area.

Conditional Probability

[Venn diagram: M only = 56%, M and P = 24%, P only = 3%, outside both circles = 17%]

What is the probability of being male and being promoted?

Pr(M and P) = 0.24

What is the probability of being female and being promoted?

Pr(M’ and P) = 0.03

Males appear to be promoted at 8 times the frequency of females.

Conditional Probability

[Venn diagram: M only = 56%, M and P = 24%, P only = 3%, outside both circles = 17%]

But perhaps Pr(M and P) is greater than Pr(M’ and P) simply because there are more males on the force.

The comparison we want to make is Pr(P|M) vs. Pr(P|M’).

Pr(P|M) = Pr(P and M) / Pr(M) = 0.24 / (0.56 + 0.24) = 0.30

Pr(P|M’) = Pr(P and M’) / Pr(M’) = 0.03 / (0.03 + 0.17) = 0.15

Males are promoted at 2 times the frequency of females.
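The difference between the joint comparison (8 times) and the conditional comparison (2 times) can be reproduced directly from the promotion counts. The sketch below assumes the four counts from the table (288, 672, 36, 204); the function and key names are illustrative.

```python
# Promotion counts from the table; keys are (gender, outcome).
counts = {
    ("male", "promoted"): 288,
    ("male", "not promoted"): 672,
    ("female", "promoted"): 36,
    ("female", "not promoted"): 204,
}
total = sum(counts.values())  # 1,200 officers

def joint(gender, outcome):
    """Pr(gender and outcome)."""
    return counts[(gender, outcome)] / total

def marginal(gender):
    """Pr(gender), summed over both outcomes."""
    return sum(n for (g, _), n in counts.items() if g == gender) / total

def conditional(outcome, gender):
    """Pr(outcome | gender) = Pr(outcome and gender) / Pr(gender)."""
    return joint(gender, outcome) / marginal(gender)

joint_ratio = joint("male", "promoted") / joint("female", "promoted")
cond_ratio = conditional("promoted", "male") / conditional("promoted", "female")

print(f"Pr(P|M)  = {conditional('promoted', 'male'):.2f}")
print(f"Pr(P|M') = {conditional('promoted', 'female'):.2f}")
print(f"joint ratio = {joint_ratio:.1f}, conditional ratio = {cond_ratio:.1f}")
```

The joint ratio mixes the promotion rate with the share of males on the force; conditioning on gender removes that second effect.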


Mutually Exclusive and Jointly Exhaustive Events

A set of events is mutually exclusive if no more than one of the events can occur.

A = IBM stock rises by at least $1, B = IBM stock falls by at least $1

A and B are mutually exclusive but not jointly exhaustive

A set of events is jointly exhaustive if at least one of the events must occur.

A = IBM stock rises by at least $1, B = IBM stock rises by at least $2,

C = IBM stock rises by less than $1 (or falls)

A, B, and C are jointly exhaustive but not mutually exclusive

A set of events is mutually exclusive and jointly exhaustive if exactly one of the

events must occur.

A = IBM stock rises, B = IBM stock falls, C = IBM stock does not change

A, B, and C are mutually exclusive and jointly exhaustive


Bayes’ Theorem

Pr(A|B) = Pr(B|A) Pr(A) / Pr(B)

For N mutually exclusive and jointly exhaustive events,

Pr(B) = Pr(B|A1) Pr(A1) + Pr(B|A2) Pr(A2) + … + Pr(B|AN) Pr(AN)


Bayes’ Theorem

Your firm purchases steel bolts from two suppliers: #1 and #2.

65% of the units come from supplier #1; the remaining 35% come from supplier

#2.

Inspecting the bolts for quality is costly, so your firm only inspects periodically.

Historical data indicate that 2% of supplier #1’s units fail, and 5% of supplier #2’s

units fail.

During production, a bolt fails causing a production line shutdown. What is the

probability that the defective bolt came from supplier #1?

The naïve answer is that there is a 65% chance that the bolt came from supplier

#1 since 65% of the bolts come from supplier #1.

The naïve answer ignores the fact that the bolt failed. We want to know not

Pr(bolt came from #1), but Pr(bolt came from #1 | bolt failed).


Bayes’ Theorem

Define the following events:

S1 = bolt came from supplier #1, S2 = bolt came from supplier #2

F = bolt fails

Solution:

We know:

Pr(F | S1) = 2%, Pr(S1) = 65%, Pr(F | S2) = 5%

We want to know: Pr(S1 | F)

Bayes’ Theorem:

Pr(S1 | F) = Pr(F | S1) Pr(S1) / Pr(F)

Because S1 and S2 are mutually exclusive and jointly exhaustive:

Pr(F) = Pr(F | S1) Pr(S1) + Pr(F | S2) Pr(S2)

= (2%)(65%) + (5%)(35%) = 3.1%

Therefore:

Pr(S1 | F) = (2%) (65%) / (3.1%) = 42%
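The bolt calculation generalizes to any number of suppliers. The following is a minimal sketch, assuming the supplier shares (65%, 35%) and failure rates (2%, 5%) given in the problem; the function name `posterior` is illustrative.

```python
def posterior(priors, likelihoods):
    """Apply Bayes' theorem to mutually exclusive, jointly exhaustive causes.

    priors[k]      = Pr(cause k)
    likelihoods[k] = Pr(evidence | cause k)
    Returns (Pr(cause k | evidence) for each k, Pr(evidence)).
    """
    joint = {k: priors[k] * likelihoods[k] for k in priors}
    evidence = sum(joint.values())  # law of total probability
    return {k: v / evidence for k, v in joint.items()}, evidence

priors = {"S1": 0.65, "S2": 0.35}      # share of bolts from each supplier
fail_rates = {"S1": 0.02, "S2": 0.05}  # Pr(F | supplier)

post, pr_fail = posterior(priors, fail_rates)
print(f"Pr(F) = {pr_fail:.4f}")
print(f"Pr(S1 | F) = {post['S1']:.3f}, Pr(S2 | F) = {post['S2']:.3f}")
```

Without intermediate rounding, Pr(F) is 3.05% rather than 3.1%, and Pr(S1 | F) is about 42.6%, which agrees with the 42% above to the precision shown.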


Probability Measures: Summary

Pr(A and B) = Pr(A) Pr(B), where A and B are independent events

Pr(A or B) = Pr(A) + Pr(B) – Pr(A and B)

Pr(A|B) = Pr(A and B) / Pr(B) = Pr(B|A) Pr(A) / Pr(B)

Pr(A) = Pr(A|B1) Pr(B1) + Pr(A|B2) Pr(B2) + … + Pr(A|Bn) Pr(Bn), where B1 through Bn are mutually exclusive and jointly exhaustive

Probability Measures: Where We’re Going

Random events

- Probabilities Given
  - Simple Probability
  - Joint Probability
  - Disjoint Probability
  - Conditional Probability
- Probabilities not Given
  - Random event is discrete
    - Binomial
    - Hypergeometric
    - Poisson
    - Negative binomial
  - Random event is continuous
    - Exponential
    - Normal
    - t
    - Log-normal
    - Chi-square
    - F

Probability Distributions

So far, we have seen examples in which the probabilities of events are known

(e.g. probability of a bolt failing, probability of being male and promoted).

The behavior of a random event (or “random variable”) is summarized by the

variable’s probability distribution.

A probability distribution is a set of probabilities, each associated with a

different event for all possible events.

Example: A die is a random variable. There are 6 possible events that can

occur. The probability of each event occurring is the same (1/6) for all the

events. We call this distribution a uniform distribution.


Probability Distributions

Example:

Let X be the random variable defined as the roll of a die. (A random variable is a mechanism that selects one event out of all possible events.) There are six possible events: X = {1, 2, 3, 4, 5, 6}.

Pr(X = 1) = 1/6 = 16.7%

Pr(X = 2) = 1/6 = 16.7%

Pr(X = 3) = 1/6 = 16.7%

Pr(X = 4) = 1/6 = 16.7%

Pr(X = 5) = 1/6 = 16.7%

Pr(X = 6) = 1/6 = 16.7%

In general, we say that the probability distribution function for X (the function that gives the probability of each event occurring) is:

Pr(X = k) = 0.167

and the cumulative distribution function for X (the function that gives the probability of any one of a set of events occurring) is:

Pr(X ≤ k) = 0.167 k
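The relationship between the two functions for the die can be sketched in Python. This example uses exact fractions to avoid the rounding in 0.167; the names `pmf` and `cdf` are illustrative.

```python
from fractions import Fraction

# Probability distribution function of a fair die: each face has probability 1/6.
pmf = {face: Fraction(1, 6) for face in range(1, 7)}

def cdf(k):
    """Cumulative distribution function: Pr(X <= k), the sum of Pr(X = j) for j <= k."""
    return sum((p for face, p in pmf.items() if face <= k), Fraction(0))

for k in range(1, 7):
    print(f"Pr(X = {k}) = {pmf[k]},  Pr(X <= {k}) = {cdf(k)}")
```

The cumulative function is just a running total of the probability function, which is why it grows by 1/6 at each face and reaches 1 at k = 6.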


Discrete vs. Continuous Distributions


In discrete distributions, the random variable takes on specific values.

For example:

If X can take on the values {1, 2, 3, 4, 5, …}, then X is a discrete random

variable.

Number of profitable quarters is a discrete random variable.

If X can take on any value between 0 and 10, then X is a continuous random

variable.

P/E ratio is a continuous random variable.


Discrete Distributions

Terminology

Trial: An opportunity for an event to occur or not occur.

Success: The occurrence of an event.

Binomial Distribution

The binomial distribution gives the probability of an event occurring multiple times.

N = Number of trials

x = Number of successes

p = Probability of a single success

$$\Pr(x \text{ successes out of } N \text{ trials}) = \binom{N}{x} p^{x} (1-p)^{N-x}$$

where

$$\binom{N}{x} = \frac{N!}{x!\,(N-x)!}$$

mean = Np

variance = Np(1 – p)

Binomial Distribution

$$\Pr(x \text{ successes out of } N \text{ trials}) = \binom{N}{x} p^{x} (1-p)^{N-x}, \qquad \binom{N}{x} = \frac{N!}{x!\,(N-x)!}$$

Example

A CD manufacturer produces CD’s in batches of 10,000. On average, 2% of the CD’s are defective.

A retailer purchases CD’s in batches of 1,000. The retailer will return any shipment if 3 or more CD’s are found to be defective. For each batch received, the retailer inspects thirty CD’s. What is the probability that the retailer will return the batch?

N = 30 trials

x = 3 successes

p = 0.02

$$\Pr(3 \text{ successes out of } 30 \text{ trials}) = \binom{30}{3}\, 0.02^{3}\, (1-0.02)^{30-3} = 0.019 = 1.9\%$$

Binomial Distribution


Error

The formula gives us the probability of exactly 3 successes out of 30 trials. But,

the retailer will return the shipment if it finds at least 3 defective CD’s. What we

want is

Pr(3 out of 30) + Pr(4 out of 30) + … + Pr(30 out of 30)


Binomial Distribution

N = 30 trials, p = 0.02:

$$\Pr(3 \text{ successes out of } 30 \text{ trials}) = \binom{30}{3}\, 0.02^{3}\, (1-0.02)^{30-3} = 0.019 = 1.9\%$$

$$\Pr(4 \text{ successes out of } 30 \text{ trials}) = \binom{30}{4}\, 0.02^{4}\, (1-0.02)^{30-4} = 0.003 = 0.3\%$$

$$\Pr(5 \text{ successes out of } 30 \text{ trials}) = \binom{30}{5}\, 0.02^{5}\, (1-0.02)^{30-5} = 0.0003 = 0.03\%$$

Etc. out to x = 30 successes.

Alternatively

Because Pr(0 or more successes) = 1, we have an easier path to the answer:

Pr(3 or more successes) = 1 – Pr(2 or fewer successes)


Binomial Distribution

N = 30 trials, p = 0.02:

$$\Pr(0 \text{ successes out of } 30 \text{ trials}) = \binom{30}{0}\, 0.02^{0}\, (1-0.02)^{30-0} = 0.545$$

$$\Pr(1 \text{ success out of } 30 \text{ trials}) = \binom{30}{1}\, 0.02^{1}\, (1-0.02)^{30-1} = 0.334$$

$$\Pr(2 \text{ successes out of } 30 \text{ trials}) = \binom{30}{2}\, 0.02^{2}\, (1-0.02)^{30-2} = 0.099$$

Pr(2 or fewer successes) = 0.545 + 0.334 + 0.099 = 0.978

Pr(3 or more successes) = 1 – 0.978 = 0.022 = 2.2%
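The complement shortcut is easy to verify numerically. The sketch below implements the binomial formula with Python's standard library and assumes the example's values (N = 30, p = 0.02); `binom_pmf` and `binom_cdf` are illustrative helper names.

```python
from math import comb

def binom_pmf(x, n, p):
    """Pr(exactly x successes out of n trials)."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)

def binom_cdf(x, n, p):
    """Pr(x or fewer successes out of n trials)."""
    return sum(binom_pmf(k, n, p) for k in range(x + 1))

n, p = 30, 0.02

# Long way: add Pr(3) + Pr(4) + ... + Pr(30).
pr_return_long = sum(binom_pmf(k, n, p) for k in range(3, n + 1))

# Short way: 1 - Pr(2 or fewer).
pr_return = 1 - binom_cdf(2, n, p)

print(f"Pr(0) = {binom_pmf(0, n, p):.3f}")
print(f"Pr(1) = {binom_pmf(1, n, p):.3f}")
print(f"Pr(2) = {binom_pmf(2, n, p):.3f}")
print(f"Pr(3 or more) = {pr_return:.3f}")
```

Both routes give the same probability; the complement needs three terms instead of twenty-eight.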


Binomial Distribution

Using the Probabilities worksheet:

1. Find the section of the worksheet titled “Binomial Distribution.”
2. Enter the probability of a single success.
3. Enter the number of trials.
4. Enter the number of successes.
5. For “Cumulative?” enter FALSE to obtain Pr(x successes out of N trials); enter TRUE to obtain Pr(≤ x successes out of N trials).

Example:

| Binomial Distribution | |
| --- | --- |
| Prob of a Single Success | 0.02 |
| Number of Trials | 30 |
| Number of Successes | 2 |
| Cumulative? | TRUE |
| P(# of successes) | 0.978 |
| 1 - P(# of successes) | 0.022 |

TRUE yields Pr(x ≤ 2) instead of Pr(x = 2).

P(# of successes) = Pr(x ≤ 2)

1 - P(# of successes) = 1 – Pr(x ≤ 2) = Pr(x ≥ 3)

Binomial Distribution

Application:

Management proposes tightening quality control so as to reduce the defect rate from 2% to

1%. QA estimates that the resources required to implement the additional quality controls

will cost the firm an additional $70,000 per year.

Suppose the firm ships 10,000 batches of CD’s annually. It costs the firm $1,000 every time

a batch is returned. Is it worth it for the firm to implement the additional quality controls?

Low QA:

Defect rate = 2%

Pr(batch will be returned) = Pr(3 or more defects out of 30) = 2.2%

Expected annual cost of product returns

= (2.2%)($1,000 per batch)(10,000 batches shipped annually)

= $220,000

High QA:

Defect rate = 1%

Pr(batch will be returned) = Pr(3 or more defects out of 30) = 0.3%

Expected annual cost of product returns

= (0.3%)($1,000 per batch)(10,000 batches shipped annually)

= $30,000

Going with improved QA results in cost savings of $190,000 at a cost of $70,000 for a net gain of $120,000.
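The quality-control comparison can be sketched as a small cost model. It assumes the figures from the problem (30 CDs inspected, return at 3 or more defects, 10,000 batches per year, $1,000 per return, $70,000 for the extra controls); the function names are illustrative. Because the code does not round the return probabilities to 2.2% and 0.3%, its dollar figures differ slightly from the rounded ones above.

```python
from math import comb

def pr_return(defect_rate, sample=30, threshold=3):
    """Pr(at least `threshold` defects in a sample), via the binomial complement."""
    pr_below = sum(
        comb(sample, k) * defect_rate**k * (1 - defect_rate) ** (sample - k)
        for k in range(threshold)
    )
    return 1 - pr_below

def expected_return_cost(defect_rate, batches=10_000, cost_per_return=1_000):
    """Expected annual cost of returned batches."""
    return pr_return(defect_rate) * batches * cost_per_return

low_qa_cost = expected_return_cost(0.02)
high_qa_cost = expected_return_cost(0.01)
qa_upgrade_cost = 70_000

net_gain = (low_qa_cost - high_qa_cost) - qa_upgrade_cost
print(f"Low QA:  ${low_qa_cost:,.0f}")
print(f"High QA: ${high_qa_cost:,.0f}")
print(f"Net gain from improved QA: ${net_gain:,.0f}")
```

The conclusion is the same either way: the reduction in expected return costs exceeds the cost of the additional controls.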


Binomial Distribution

Application:

Ford suspects that the tread on Explorer tires will separate from the tire causing a fatal

accident. Tests indicate that this will happen on one set of (four) tires out of 5 million. As of

2000, Ford had sold 875,000 Explorers. Ford estimated the cost of a general recall to be

$30 million. Ford also estimated that every accident involving separated treads would cost

Ford $3 million to settle.

Should Ford recall the tires?

What we know:

Success = tread separation

Pr(a single success) = 1 / 5 million = 0.0000002

Number of trials = 875,000

Employing the pdf for the binomial distribution, we have:

Pr(0 successes) = 83.9%

Pr(1 success) = 14.7%

Pr(2 successes) = 1.3%

Pr(3 successes) = 0.1%

Binomial Distribution

Expectation:

An expectation is the sum, over all possible events, of the probability of each event multiplied by the outcome of that event.

Suppose there are three mutually exclusive and jointly exhaustive events: A, B, and C.

The costs to a firm of events A, B, and C occurring are, respectively, TC_A, TC_B, and TC_C.

The probabilities of events A, B, and C occurring are, respectively, p_A, p_B, and p_C.

The expected cost to the firm is:

E(cost) = (TC_A)(p_A) + (TC_B)(p_B) + (TC_C)(p_C)

Should Ford issue a recall?

Issue recall:

Cost = $30 million

Do not issue recall:

E(cost) = Pr(0 incidents)(Cost of 0 incidents) + Pr(1 incident)(Cost of 1 incident) + …

= (83.9%)($0 m) + (14.7%)($3 m) + (1.3%)($6 m) + (0.1%)($9 m)

= $528,000
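The expected-cost comparison can be reproduced with the binomial formula. This sketch assumes the figures stated in the problem (1 failure per 5 million sets, 875,000 vehicles, $3 million per incident, $30 million recall); variable names are illustrative. It uses unrounded probabilities, so the result is close to, but not exactly, the $528,000 obtained from the rounded percentages.

```python
from math import comb

p = 1 / 5_000_000          # Pr(tread separation on one vehicle)
n = 875_000                # vehicles sold
settlement = 3_000_000     # cost per incident
recall_cost = 30_000_000   # cost of a general recall

def binom_pmf(x):
    """Pr(exactly x incidents among n vehicles)."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)

# Expected cost without a recall, truncated at 3 incidents as on the slide.
expected_cost = sum(binom_pmf(x) * x * settlement for x in range(4))

for x in range(4):
    print(f"Pr({x} incidents) = {binom_pmf(x):.1%}")
print(f"E(cost without recall) = ${expected_cost:,.0f}")
print(f"Cost of recall         = ${recall_cost:,.0f}")
```

Truncating at 3 incidents loses very little, because the full expectation is N × p × $3 million = $525,000.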


Hypergeometric Distribution

The hypergeometric distribution gives the probability of an event occurring multiple times when the number of possible successes is fixed.

N = Number of possible trials

n = Number of actual trials

X = Number of possible successes

x = Number of actual successes

$$\Pr(x \text{ successes out of } n \text{ trials}) = \frac{\binom{X}{x}\binom{N-X}{n-x}}{\binom{N}{n}}$$

where

$$\binom{N}{x} = \frac{N!}{x!\,(N-x)!}$$

Hypergeometric Distribution

$$\Pr(x \text{ successes out of } n \text{ trials}) = \frac{\binom{X}{x}\binom{N-X}{n-x}}{\binom{N}{n}}$$

Example

A CD manufacturer ships a batch of 1,000 CD’s to a retailer. The manufacturer knows that 20 of the CD’s are defective. The retailer will return any shipment if 3 or more CD’s are found to be defective. For each batch received, the retailer inspects thirty CD’s. What is the probability that the retailer will return the batch?

N = 1,000 possible trials

n = 30 actual trials

X = 20 possible successes

x = 3 actual successes

$$\Pr(3 \text{ successes out of } 30 \text{ trials}) = \frac{\binom{20}{3}\binom{1000-20}{30-3}}{\binom{1000}{30}} = 0.017$$

Hypergeometric Distribution


Error

The formula gives us the probability of exactly 3 successes. The retailer will

return the shipment if there are 3 or more defects. Therefore, we want

Pr(return shipment) = Pr(3 defects) + Pr(4 defects) + … + Pr(20 defects)

Note: There are a maximum of 20 defects.


Hypergeometric Distribution

N = 1,000 possible trials, n = 30 actual trials, X = 20 possible successes:

$$\Pr(0 \text{ successes out of } 30 \text{ trials}) = \frac{\binom{20}{0}\binom{1000-20}{30-0}}{\binom{1000}{30}} = 0.541$$

$$\Pr(1 \text{ success out of } 30 \text{ trials}) = \frac{\binom{20}{1}\binom{1000-20}{30-1}}{\binom{1000}{30}} = 0.341$$

$$\Pr(2 \text{ successes out of } 30 \text{ trials}) = \frac{\binom{20}{2}\binom{1000-20}{30-2}}{\binom{1000}{30}} = 0.099$$

Pr(return shipment) = 1 – (0.541 + 0.341 + 0.099) = 0.019 = 1.9%
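The hypergeometric calculation can be checked with exact integer binomial coefficients. The sketch assumes the example's values (N = 1,000, X = 20, n = 30); `hypergeom_pmf` is an illustrative helper name. Because it does not round the three terms before subtracting, the final probability comes out marginally above the 1.9% shown.

```python
from math import comb

def hypergeom_pmf(x, N, X, n):
    """Pr(exactly x successes in n draws without replacement
    from a population of N that contains X successes)."""
    return comb(X, x) * comb(N - X, n - x) / comb(N, n)

N, X, n = 1_000, 20, 30

for x in range(4):
    print(f"Pr({x} defects in sample) = {hypergeom_pmf(x, N, X, n):.3f}")

# Return the shipment if 3 or more defects are found.
pr_return = 1 - sum(hypergeom_pmf(k, N, X, n) for k in range(3))
print(f"Pr(return shipment) = {pr_return:.4f}")
```

Python integers have arbitrary precision, so the very large coefficient in the denominator causes no overflow.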


Hypergeometric Distribution

Using the Probabilities worksheet:

1. Find the section of the worksheet titled “Hypergeometric Distribution.”
2. Enter the number of possible trials.
3. Enter the number of possible successes.
4. Enter the number of actual trials.
5. Enter the number of actual successes.

Note: Excel does not offer the option of calculating the cumulative distribution function. You must do this manually.

Example:

| Hypergeometric Distribution | |
| --- | --- |
| Number of Possible Trials | 1,000 |
| Number of Possible Successes | 20 |
| Number of Actual Trials | 30 |
| Number of Actual Successes | 3 |
| P(# of successes in sample) | 0.017 |
| 1 - P(# of successes in sample) | 0.983 |

P(# of successes in sample) = Pr(x = 3)

1 - P(# of successes in sample) = 1 – Pr(x = 3) = Pr(x ≠ 3)

Hypergeometric Distribution

If we erroneously use the binomial distribution, what is our estimate of the

probability that the retailer will return the batch?

Results using hypergeometric distribution

Possible Trials = 1,000

Actual Trials = 30

Possible Successes = 20

Actual Successes = 0, 1, 2

Pr(return shipment) = 1 – (0.541 + 0.341 + 0.099) = 0.019 = 1.9%

Results using binomial distribution

Trials = 30

Successes = 0, 1, 2

Probability of a single success = 20 / 1000 = 0.02

Pr(return shipment) = 2.2%


Hypergeometric Distribution

Using the incorrect distribution overestimates the probability of return by only 0.3 percentage points (2.2% vs. 1.9%). Who cares?

Suppose each return costs us $1,000 and we ship 10,000 cases per year.

Estimated cost of returns using hypergeometric distribution

($1,000)(10,000)(1.9%) = $190,000

Estimated cost of returns using binomial distribution

($1,000)(10,000)(2.2%) = $220,000

Using the incorrect distribution resulted in a $30,000 overestimation of costs.


Hypergeometric Distribution

How does hypergeometric distribution differ from binomial distribution?

With binomial distribution, the probability of a success does not change as trials are

realized.

With hypergeometric distribution, the probabilities of subsequent successes change as trials

are realized.

Binomial Example:

Suppose the probability of a given CD being defective is 50%. You have a shipment of 2

CD’s.

You inspect one of the CD’s. There is a 50% chance that it is defective.

You inspect the other CD. There is a 50% chance that it is defective.

On average, you expect 1 defective CD. However, it is possible that there are no defective

CD’s. It is also possible that both CD’s are defective.

Because the probability of defect is constant, this process is binomial.


Hypergeometric Distribution


Hypergeometric Example:

Suppose there is one defective CD in a shipment of two CD’s.

You inspect one of the CD’s. There is a 50% chance that it is defective. Before you inspect the second CD, you already know for certain whether it will be defective or not.

Because you know that one of the CD’s is defective, if the first one is not defective, then

the second one must be defective.

If the first one is defective, then the second one cannot be defective.

Because the probability of the second CD being defective depends on whether or not the

first CD was defective, the process is hypergeometric.


Hypergeometric Distribution

Example

Andrew Fastow, former CFO of Enron, was tried for securities fraud. As is usual in these

cases, if the prosecution requests documents, then the defense is obligated to surrender

those documents – even if the documents contain information that is damaging to the

defense. One tactic is for the defense to submit the requested documents along with many

other documents (called “decoys”) that are not damaging to the defense. The point is to

bury the prosecution under a blizzard of paperwork so that it becomes difficult for the

prosecution to find the few incriminating documents among the many decoys.

Suppose that the prosecutor requests all documents related to Enron’s financial status.

Fastow’s lawyers know that there are 10 incriminating documents among the set requested.

Fastow’s lawyers also know that the prosecution will be able to examine only 50 documents

between now and the trial date.

If the prosecution finds no incriminating documents, it is likely that Fastow will be found not

guilty. Assuming that each document requires the same amount of time to examine, and

assuming that the prosecution will randomly select 50 documents out of the total for

examination, how many documents (decoys plus the 10 incriminating documents) should

Fastow’s lawyers submit so that the probability of the prosecution finding no incriminating

documents is 90%?


Hypergeometric Distribution

Example

Success = an incriminating document

N = unknown

n = 50

X = 10

x = 0

N = 4,775 gives Pr(0 successes out of 50 trials) = 0.900

| Hypergeometric Distribution | |
| --- | --- |
| Number of Possible Trials | 4,775 |
| Number of Possible Successes | 10 |
| Number of Actual Trials | 50 |
| Number of Actual Successes | 0 |
| P(# of successes in sample) | 0.900 |
| 1 - P(# of successes in sample) | 0.100 |
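Finding the required number of documents is a search over N rather than a single formula evaluation. The sketch below assumes the problem's values (10 incriminating documents, 50 examined, 90% target) and uses a simple linear search; the function names are illustrative. Since the answer sits right at the 0.900 boundary, the smallest N found by exact arithmetic should land at, or within a document or two of, the 4,775 shown above.

```python
from math import comb

def pr_none_found(total_docs, incriminating=10, examined=50):
    """Hypergeometric Pr(0 incriminating documents among those examined)."""
    return comb(total_docs - incriminating, examined) / comb(total_docs, examined)

def smallest_total(target=0.90, incriminating=10, examined=50):
    """Smallest number of submitted documents with Pr(none found) >= target."""
    total = incriminating + examined  # fewer documents than this guarantees a find
    while pr_none_found(total, incriminating, examined) < target:
        total += 1
    return total

required = smallest_total()
print(f"Pr(none found | N = 4,775) = {pr_none_found(4775):.4f}")
print(f"Smallest N reaching the 90% target: {required:,}")
```

The probability of finding nothing rises steadily with N, so the first N that meets the target is the minimum number of documents to submit.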


Poisson Distribution

The Poisson distribution gives the probability of an event occurring multiple times within a given time interval.

δ = Average number of successes per unit time

x = Number of successes

e = 2.71828…

$$\Pr(x \text{ successes per unit time}) = \frac{e^{-\delta}\,\delta^{x}}{x!}$$

Poisson Distribution

$$\Pr(x \text{ successes per unit time}) = \frac{e^{-\delta}\,\delta^{x}}{x!}$$

Example

Over the course of a typical eight hour day, 100 customers come into a store.

Each customer remains in the store for 10 minutes (on average). One

salesperson can handle no more than three customers in 10 minutes. If it is likely

that more than three customers will show up in a single 10-minute interval, then

the store will have to hire another salesperson.

What is the probability that more than 3 customers will arrive in a single 10-minute interval?

Time interval = 10 minutes

There are 48 ten-minute intervals during an 8 hour work day.

100 customers per day / 48 ten-minute intervals = 2.08 customers per interval.

δ = 2.08 successes per interval (on average)

x = 4, 5, 6, … successes


Poisson Distribution

Time interval = 10 minutes

δ = 2.08 successes per interval

x = 4, 5, 6, … successes

$$\Pr(x \ge 4) = 1 - \Pr(x = 0) - \Pr(x = 1) - \Pr(x = 2) - \Pr(x = 3)$$

$$\Pr(0 \text{ successes}) = \frac{e^{-2.08}\, 2.08^{0}}{0!} = 0.125$$

$$\Pr(1 \text{ success}) = \frac{e^{-2.08}\, 2.08^{1}}{1!} = 0.260$$

$$\Pr(2 \text{ successes}) = \frac{e^{-2.08}\, 2.08^{2}}{2!} = 0.270$$

$$\Pr(3 \text{ successes}) = \frac{e^{-2.08}\, 2.08^{3}}{3!} = 0.187$$

Pr(x ≥ 4) = 1 – (0.125 + 0.260 + 0.270 + 0.187)

= 0.158 = 15.8%
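The customer-arrival calculation can be reproduced in a few lines. This sketch assumes the average of 2.08 arrivals per 10-minute interval from the example; `poisson_pmf` is an illustrative helper name.

```python
from math import exp, factorial

def poisson_pmf(x, mean):
    """Pr(exactly x successes in an interval with the given average)."""
    return exp(-mean) * mean**x / factorial(x)

mean = 2.08  # average customers per 10-minute interval

for x in range(4):
    print(f"Pr({x} customers) = {poisson_pmf(x, mean):.3f}")

# More than 3 customers = 1 - Pr(3 or fewer).
pr_more_than_3 = 1 - sum(poisson_pmf(k, mean) for k in range(4))
print(f"Pr(more than 3 customers) = {pr_more_than_3:.3f}")
```

As with the binomial case, the complement is used because the Poisson distribution has no upper limit on the number of successes.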


Poisson Distribution

Using the Probabilities worksheet:

1. Find the section of the worksheet titled “Poisson Distribution.”
2. Enter the average number of successes per time interval.
3. Enter the number of successes per time interval.
4. For “Cumulative?” enter FALSE to obtain Pr(x successes per time interval); enter TRUE to obtain Pr(≤ x successes per time interval).

Example:

| Poisson Distribution | |
| --- | --- |
| E(Successes / time interval) | 2.08 |
| Successes / time interval | 3 |
| Cumulative? | TRUE |
| P(# successes in a given interval) | 0.842 |
| 1 - P(# successes in a given interval) | 0.158 |

TRUE yields Pr(x ≤ 3) instead of Pr(x = 3).

P(# successes in a given interval) = Pr(x ≤ 3)

1 - P(# successes in a given interval) = 1 – Pr(x ≤ 3) = Pr(x ≥ 4)

Poisson Distribution

Suppose you want to hire an additional salesperson on a part-time basis. On average, for how many hours per week will you need this person? (Assume a 40-hour work week.)

There is a 15.8% probability that, in any given 10-minute interval, more than 3

customers will arrive. During these intervals, you will need another salesperson.

In one work day, there are 48 ten-minute intervals. In a 5-day work week, there

are (48)(5) = 240 ten-minute intervals.

On average, you need a part-time worker for 15.8% of these, or (0.158)(240) =

37.92 intervals.

37.92 ten-minute intervals = 379 minutes = 6.3 hours, or about 6 hours 20 minutes.

Note: An easier way to arrive at the same answer is: (40 hours)(0.158) = 6.3

hours.


Negative Binomial Distribution

The negative binomial distribution gives the probability of the xth occurrence of an event happening on the Nth trial.

N = Number of trials

x = Number of successes

p = Probability of a single success occurring

$$\Pr(x\text{th success occurring on the } N\text{th trial}) = \binom{N-1}{x-1}\, p^{x}\, (1-p)^{N-x}$$

where

$$\binom{N-1}{x-1} = \frac{(N-1)!}{(x-1)!\,(N-x)!}$$
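The negative binomial formula can be sketched as follows. The slides give no worked example for this distribution, so the scenario here (the 3rd defective CD turning up on exactly the 30th inspection, with a 2% defect rate) is a hypothetical illustration that borrows numbers from the earlier binomial example.

```python
from math import comb

def neg_binom_pmf(N, x, p):
    """Pr(the x-th success occurs on exactly the N-th trial)."""
    return comb(N - 1, x - 1) * p**x * (1 - p) ** (N - x)

# Hypothetical illustration: 3rd defective CD found on the 30th inspection.
print(f"Pr(3rd defect on 30th trial) = {neg_binom_pmf(30, 3, 0.02):.5f}")

# Sanity check: the x-th success must occur on some trial N >= x,
# so the probabilities over all N should sum to (nearly) 1.
total = sum(neg_binom_pmf(N, 2, 0.5) for N in range(2, 200))
print(f"Sum over N for x = 2, p = 0.5: {total:.6f}")
```

The coefficient counts the ways to place the first x – 1 successes among the first N – 1 trials; the Nth trial itself is required to be a success.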


Discrete Distributions: Summary

| Distribution | Pertinent Information |
| --- | --- |
| Binomial | Probability of a single success; number of trials; number of successes |
| Hypergeometric | Number of possible trials; number of actual trials; number of possible successes; number of actual successes |
| Poisson | Average successes per time interval; number of successes per time interval |

Continuous Distributions

While the discrete distributions are useful for describing phenomena in which the

random variable takes on discrete (e.g. integer) values, many random variables

are continuous and so are not adequately described by discrete distributions.

Example:

Income, Financial Ratios, Sales.

Technically, financial variables are discrete because they are measured in discrete units (cents). However, the size of the discrete units is so small relative to the typical values of the random variable that these variables behave like continuous random variables.

E.g. A firm that typically earns $10 million has an income level that is 1 billion times the size of the discrete units in which the income is measured.


Continuous Distributions

The continuous uniform distribution is a distribution in which the probability of

the random variable taking on a given range of values is equal for all ranges of

the same size.

Example:

X is a uniformly distributed random variable that can take on any value in the

range [1, 5].

Pr(1 < X < 2) = 1/4 = 0.25

Pr(2 < X < 3) = 1/4 = 0.25

Pr(3 < X < 4) = 1/4 = 0.25

Pr(4 < X < 5) = 1/4 = 0.25

Note: The probability of X taking on a specific value is zero.


Continuous Uniform Distribution

Example (continued): X is uniformly distributed over the range [1, 5], so Pr(1 < X < 2) = Pr(2 < X < 3) = Pr(3 < X < 4) = Pr(4 < X < 5) = 1/4 = 0.25.

In general, we say that the probability density function for X is:

pdf(k) = 1 / (b – a) = 1/4 = 0.25 for all k in [1, 5]

(note: Pr(X = k) = 0 for all k)

and the cumulative distribution function for X is:

Pr(X ≤ k) = (k – a) / (b – a) = (k – 1) / 4 for k in [1, 5]

mean = (a + b) / 2

variance = (b – a)² / 12

a = minimum value of the random variable

b = maximum value of the random variable
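The uniform formulas can be checked with a short Python sketch. The function and variable names are illustrative and not part of the slides; a = 1 and b = 5 come from the example.

```python
def uniform_cdf(k, a, b):
    """Pr(X <= k) for X uniformly distributed on [a, b]."""
    if k <= a:
        return 0.0
    if k >= b:
        return 1.0
    return (k - a) / (b - a)

a, b = 1, 5
density = 1 / (b - a)              # the pdf is constant on [a, b]
mean = (a + b) / 2
variance = (b - a) ** 2 / 12
pr_2_to_3 = uniform_cdf(3, a, b) - uniform_cdf(2, a, b)

print(density, mean, round(variance, 4), pr_2_to_3)
```

Any range of width 1 inside [1, 5] gives the same probability, which is what "equal for all ranges of the same size" means.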

Exponential Distribution

The exponential distribution gives the probability that the next occurrence of an event happens within a given amount of time.

λ = Average number of successes per time interval (so the average number of time intervals between successes is 1/λ).

x = Maximum number of time intervals until the next success occurs.

Pr(the next success occurring in x or fewer time intervals) = 1 – e^(–λx)

mean = 1/λ

variance = 1/λ²
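A minimal Python sketch of the exponential formulas, assuming λ is the rate of successes per time interval. The value λ = 0.5 is a hypothetical choice for illustration, not a figure from the slides.

```python
import math

def exponential_cdf(x, lam):
    """Pr(next success occurs within x time intervals), with rate lam per interval."""
    return 1 - math.exp(-lam * x)

lam = 0.5                    # hypothetical: one success every 2 intervals on average
mean = 1 / lam               # average number of intervals between successes
variance = 1 / lam ** 2
p_within_2 = exponential_cdf(2, lam)

print(mean, variance, round(p_within_2, 4))
```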

Normal Distribution

Many continuous random processes are approximately normally distributed. Among them are:

1. Proportions (provided that the proportion is not close to the extremes of 0 or 1).

2. Sample Means (provided that the means are computed based on a large enough sample size).

3. Differences in Sample Means (provided that the means are computed based on a large enough sample size).

4. Mean Differences (provided that the means are computed based on a large enough sample size).

5. Most natural processes (including many economic and financial processes).

Normal Distribution

There are an infinite number of normal distributions, each with a different mean

and variance.

We describe a normal distribution by its mean and variance:

µ = Population mean

σ² = Population variance

The normal distribution with a mean of zero and a variance of one is called the standard normal distribution.

µ = 0

σ² = 1

Normal Distribution

The pdf (probability density function) of every normal distribution is bell-shaped. This means that the random variable can take on any value over the range –∞ to +∞, but the probability of the random variable straying from its mean decreases as the distance from the mean increases.

Normal Distribution

For all normal distributions, approximately:

50% of the observations lie within µ ± (2/3)σ

68% of the observations lie within µ ± σ

95% of the observations lie within µ ± 2σ

99% of the observations lie within µ ± 3σ

Example:

Suppose the return on a firm’s stock price is normally distributed with a mean of 10%

and a standard deviation of 6%. We would expect that, at any given point in time:

1. There is a 50% probability that the return on the stock is between 6% and 14%.

2. There is a 68% probability that the return on the stock is between 4% and 16%.

3. There is a 95% probability that the return on the stock is between –2% and 22%.

4. There is a 99% probability that the return on the stock is between –8% and 28%.
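The four ranges in this example can be checked with Python's standard library. This is a sketch: `NormalDist` is the stdlib class, while the variable names are illustrative.

```python
from statistics import NormalDist

returns = NormalDist(mu=10, sigma=6)          # stock return, in percent
ranges = [(6, 14), (4, 16), (-2, 22), (-8, 28)]
probabilities = [returns.cdf(hi) - returns.cdf(lo) for lo, hi in ranges]

for (lo, hi), p in zip(ranges, probabilities):
    print(f"Pr({lo}% < return < {hi}%) = {p:.3f}")
```

The computed values are close to the rounded rules of thumb; the exact figure for three standard deviations is slightly above 99%.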

Normal Distribution

Population Measures (calculated using all possible observations):

µ = Population mean

σ² = Population variance

Sample Measures (estimates of population measures, calculated using a subset of all possible observations):

x̄ = Sample mean

s² = Sample variance

Variance measures the average squared dispersion of observations around a mean.

Sample Variance: s² = [1 / (N – 1)] Σ (xᵢ – x̄)², summed over i = 1, …, N

Population Variance: σ² = (1 / N) Σ (xᵢ – µ)², summed over i = 1, …, N
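The two variance formulas differ only in the divisor. A short Python sketch with hypothetical data (the eight observations below are made up for illustration) shows the difference.

```python
import statistics

observations = [2, 4, 4, 4, 5, 5, 7, 9]       # hypothetical data
n = len(observations)
mean = sum(observations) / n
sum_of_squares = sum((x - mean) ** 2 for x in observations)

population_variance = sum_of_squares / n       # divide by N
sample_variance = sum_of_squares / (n - 1)     # divide by N - 1

print(population_variance, round(sample_variance, 4))
```

The standard library functions `statistics.pvariance` and `statistics.variance` compute the same two quantities.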

Problem of Unknown Population Parameters

If we do not have all possible observations, then we cannot compute the

population mean and variance. What to do?

Take a sample of observations and use the sample mean and sample variance

as estimates of the population parameters.

Problem: If we use the sample mean and sample variance instead of the population mean and population variance, then we can no longer say that “50% of observations lie within µ ± (2/3)σ,” etc.

In fact, the normal distribution no longer describes the distribution of observations. We must use the t-distribution.

The t-distribution accounts for the fact that (1) the observations are normally distributed, and (2) we aren’t sure what the mean and variance of the distribution are.

t-Distribution

There are an infinite number of t-distributions, each with different degrees of freedom. Degrees of freedom is a function of the number of observations in a data set. The more degrees of freedom (i.e. observations) exist, the closer the t-distribution is to the standard normal distribution.

For most purposes, degrees of freedom = N – 1, where N is the number of observations in the sample.

t-Distribution

The standard normal distribution is the same as the t-distribution

with an infinite number of degrees of freedom.

t-Distribution

Percentage of observations lying within the given number of standard deviations of the mean:

Standard        Degrees of Freedom
Deviations      5       10      20      30      ∞
2/3             47%     48%     49%     49%     50%
1               64%     66%     67%     68%     68%
2               90%     93%     94%     95%     95%
3               97%     98%     99%     99%     99%
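The table can be approximated without a statistics package. The sketch below integrates the t density numerically with Simpson's rule; `t_cdf` and `within` are helper names introduced here for illustration (in practice a library routine such as `scipy.stats.t.cdf` would normally be used).

```python
import math
from statistics import NormalDist

def t_cdf(x, df, steps=2000):
    """Pr(t <= x) for Student's t with df degrees of freedom (Simpson's rule)."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def pdf(u):
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    h = abs(x) / steps
    total = pdf(0) + pdf(abs(x))
    total += sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, steps))
    return 0.5 + math.copysign(total * h / 3, x)

def within(k, df):
    """Pr(-k < t < k): share of observations within k standard deviations."""
    return 2 * t_cdf(k, df) - 1

for k in (2 / 3, 1, 2, 3):
    row = [within(k, df) for df in (5, 10, 20, 30)]
    row.append(2 * NormalDist().cdf(k) - 1)    # infinite degrees of freedom
    print(f"{k:4.2f}  " + "  ".join(f"{100 * p:5.1f}%" for p in row))
```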

t-Distribution

Example:

Consumer Reports tests the gas mileage of seven SUVs. They find that the sample of SUVs has a mean mileage of 15 mpg with a standard deviation of 3 mpg. Assuming that the population of gas mileages is normally distributed, based on this sample, what percentage of SUVs get more than 20 mpg?

[Figure: distribution with mean 15 mpg and s = 3 mpg; the area to the right of 20 mpg is marked “area = ?”.]

We don’t know the area indicated because we don’t know the properties of a t-distribution with a mean of 15 and a standard deviation of 3.

However, we can convert this distribution to a distribution whose properties we do know.

The formula for conversion is:

Test statistic = (Test value – mean) / standard deviation

“Test value” is the value we are examining (in this case, 20 mpg), “mean” is the mean of the sample observations (in this case, 15 mpg), and “standard deviation” is the standard deviation of the sample observations (in this case, 3 mpg).

t-Distribution

Example (continued):

Test statistic = (Test value – mean) / standard deviation = (20 – 15) / 3 = 1.67

[Figure: the area to the right of 20 mpg under the sample distribution (mean 15 mpg, s = 3 mpg) corresponds to the area to the right of 1.67 under the t6 distribution (mean 0, s = 1).]

We can look up the area to the right of 1.67 on a t6 distribution.

t-Distribution

Test statistic = (Test value – mean) / standard deviation = (20 – 15) / 3 = 1.67

[Figure: the area to the right of 1.67 under the t6 distribution is 0.073.]

t Distribution
Test statistic             1.670
Degrees of Freedom         6
Pr(t > Test statistic)     7.30%
Pr(t < Test statistic)     92.70%
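The SUV lookup can be reproduced in Python. This sketch assumes a numerically integrated t distribution; `t_cdf` is a helper written here for illustration and stands in for the probabilities spreadsheet.

```python
import math

def t_cdf(x, df, steps=2000):
    """Pr(t <= x) for Student's t with df degrees of freedom (Simpson's rule)."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def pdf(u):
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    h = abs(x) / steps
    total = pdf(0) + pdf(abs(x))
    total += sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, steps))
    return 0.5 + math.copysign(total * h / 3, x)

n_suvs, mean_mpg, stdev_mpg, test_value = 7, 15, 3, 20
test_statistic = round((test_value - mean_mpg) / stdev_mpg, 2)   # rounded as on the slide
p_more_than_20 = 1 - t_cdf(test_statistic, df=n_suvs - 1)

print(test_statistic, round(p_more_than_20, 3))
```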

t-Distribution

Example:

A light bulb manufacturer wants to monitor the quality of the bulbs it produces. To monitor product quality, inspectors test one bulb out of every thousand to find its burn-life. Since the production machinery was installed, inspectors have tested 30 bulbs and found an average burn-life of 1,500 hours with a standard deviation of 200. Management wants to recalibrate its machines anytime a particularly short-lived bulb is discovered. Management defines “short-lived” as a burn-life so short that 999 out of 1,000 bulbs burn longer. What is the minimum number of hours a test bulb must burn for production not to be recalibrated?

[Figure: the area to the left of X hrs under the sample distribution (mean 1,500 hrs, s = 200 hrs) is 0.001; it corresponds to the area to the left of –3.3963 under the t29 distribution (mean 0, s = 1). The remaining area is 1 – 0.001 = 0.999.]

t Distribution
Degrees of Freedom         29
Pr(t > Critical value)     99.90%
Critical Value             –3.3963

t-Distribution

Example (continued):

Test statistic = (Test value – mean) / standard deviation

–3.3963 = (X – 1,500) / 200, so X = 821 hrs

[Figure: the area to the left of 821 hrs under the sample distribution (mean 1,500 hrs, s = 200 hrs) is 0.001, matching the area to the left of –3.3963 under the t29 distribution.]
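Finding a critical value is the inverse of finding a probability. The sketch below inverts a numerically integrated t distribution by bisection; `t_cdf` and `t_critical` are illustrative helpers, not functions from the slides (a library routine such as `scipy.stats.t.ppf` would normally do this).

```python
import math

def t_cdf(x, df, steps=2000):
    """Pr(t <= x) for Student's t with df degrees of freedom (Simpson's rule)."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def pdf(u):
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    h = abs(x) / steps
    total = pdf(0) + pdf(abs(x))
    total += sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, steps))
    return 0.5 + math.copysign(total * h / 3, x)

def t_critical(p, df):
    """Value c with Pr(t <= c) = p, found by bisection on the cdf."""
    lo, hi = -50.0, 50.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

critical = t_critical(0.001, df=30 - 1)     # 30 bulbs tested
cutoff_hours = 1500 + critical * 200        # test value = mean + critical * stdev

print(round(critical, 4), round(cutoff_hours))
```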

t-Distribution

Example:

Continuing with the previous example, suppose we had used the normal distribution

instead of the t-distribution to answer the question.

The probabilities spreadsheet gives us the following results.

Standard Normal Distribution (Z)
Pr(Z > Critical value)     0.10%
Critical Value             3.0902

Test statistic = (Test value – mean) / standard deviation

–3.09 = (Test value – 1,500) / 200

Test value = (–3.09)(200) + 1,500 = 882
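The standard normal version of the same calculation needs only the standard library. The variable names in this sketch are illustrative.

```python
from statistics import NormalDist

critical = NormalDist().inv_cdf(0.001)      # Pr(Z < critical) = 0.1%
cutoff_hours = 1500 + critical * 200        # test value = mean + critical * stdev

print(round(critical, 4), round(cutoff_hours))
```

The normal cutoff is higher than the t29 cutoff because the normal distribution has thinner tails.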

t-Distribution vs. Normal Distribution

Correct distribution

Using the t-distribution, we recalibrate production whenever we observe a light bulb

with a life of 821 or fewer hours.

Incorrect distribution

Using the standard normal distribution, we recalibrate production whenever we

observe a light bulb with a life of 882 or fewer hours.

By incorrectly using the standard normal distribution, we would recalibrate

production too frequently.

When can we use the normal distribution?

As an approximation, when the number of observations is large enough that the

difference in results is negligible. The difference starts to become negligible at 30

or more degrees of freedom. For more accurate results, use the t-distribution.

Test Statistic vs. Critical Value

Terminology

We have been using the terms “test statistic” and “critical value” somewhat

interchangeably. Which term is appropriate depends on whether the number

described is being used to find an implied probability (test statistic), or represents a

known probability (critical value).

When we wanted to know the probability of an SUV getting more than 20 mpg, we constructed the test statistic and asked “what is the probability of observing the test statistic?”

When we wanted to know what cut-off to impose for recalibrating production of light

bulbs, we found the critical value that gave us the probability we wanted, and then

asked “what test value has the probability implied by the critical value?”

Test Statistic vs. Critical Value

Example

The return on IBM stock has averaged 19.3% over the past 10 years with a standard

deviation of 4.5%. Assuming that past performance is indicative of future results and

assuming that the population of rates of return is normally distributed, what is the

probability that the return on IBM next year will be between 10% and 20%?

1. Picture the problem with respect to the appropriate distribution.

2. Determine what area(s) represents the answer to the problem.

3. Determine what area(s) you must find (this depends on how the probability table or function is defined).

4. Perform computations to find desired area based on known areas.

[Figure: distribution with the central area marked “Question asks for this area” and the two tail areas marked “Look up these areas.”]

t-Distribution

Example (continued):

Convert the question to a form that can be analyzed.

t-Distribution

Example (continued):

Test statistic = (Test value – mean) / standard deviation

Left test statistic = (10% – 19.3%) / 4.5% = –2.07

Right test statistic = (20% – 19.3%) / 4.5% = 0.16

t-Distribution

Example (continued):

t Distribution             Left        Right
Test statistic             –2.070      0.160
Degrees of Freedom         9.0         9.0
Pr(t > Test statistic)     96.58%      43.82%
Pr(t > Critical value)     0.50%       0.50%
Critical Value             3.250       3.250

Area to the left of –2.07: 100% – 96.58% = 3.42%

Area to the right of 0.16: 43.82%

t-Distribution

Example (continued):

Area to the left of 10%: 3.42%

Area to the right of 20%: 43.82%

Total area outside the range: 3.42% + 43.82% = 47.24%

Area between 10% and 20%: 100% – 47.24% = 52.76%

There is a 53% chance that IBM will yield a return between 10% and 20% next year.
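The IBM calculation can be sketched in Python as the difference of two cumulative probabilities, which is equivalent to subtracting the two tail areas from 100%. `t_cdf` is an illustrative helper that integrates the t density numerically.

```python
import math

def t_cdf(x, df, steps=2000):
    """Pr(t <= x) for Student's t with df degrees of freedom (Simpson's rule)."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def pdf(u):
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    h = abs(x) / steps
    total = pdf(0) + pdf(abs(x))
    total += sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, steps))
    return 0.5 + math.copysign(total * h / 3, x)

mean, stdev, years = 19.3, 4.5, 10
left = round((10 - mean) / stdev, 2)        # rounded as on the slides
right = round((20 - mean) / stdev, 2)
df = years - 1

p_between = t_cdf(right, df) - t_cdf(left, df)
print(left, right, round(p_between, 4))
```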

t-Distribution

Example

Your firm has negotiated a labor contract that requires that the firm provide annual raises no

less than the rate of inflation. This year, the total cost of labor covered under the contract will

be $38 million. Your CFO has indicated that the firm’s current financing can support up to a

$2 million increase in labor costs. Based on the historical inflation numbers below, calculate

the probability of labor costs increasing by at least $2 million next year.

Year    Inflation Rate        Year    Inflation Rate
1982    6.2%                  1993    3.0%
1983    3.2%                  1994    2.6%
1984    4.3%                  1995    2.8%
1985    3.6%                  1996    3.0%
1986    1.9%                  1997    2.3%
1987    3.6%                  1998    1.6%
1988    4.1%                  1999    2.2%
1989    4.8%                  2000    3.4%
1990    5.4%                  2001    2.8%
1991    4.2%                  2002    1.6%
1992    3.0%                  2003    1.8%

Calculate the mean and standard deviation for inflation.

Sample mean = 3.2%

Sample stdev = 1.2%

t-Distribution

Example (continued):

A $2 million increase on a $38 million base is a 2/38 = 5.26% increase.

Sample mean = 3.2%

Sample stdev = 1.2%

N = 22

Test statistic = (5.26% – 3.2%) / 1.2% = 1.717

t Distribution (t21)
Test statistic             1.717
Degrees of Freedom         21
Pr(t > Test statistic)     5.03%
Pr(t < Test statistic)     94.97%
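The inflation example can be traced from the raw data in Python. The sketch rounds the mean and standard deviation to one decimal place, as the slides do, before forming the test statistic; `t_cdf` is an illustrative helper.

```python
import math
import statistics

def t_cdf(x, df, steps=2000):
    """Pr(t <= x) for Student's t with df degrees of freedom (Simpson's rule)."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def pdf(u):
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    h = abs(x) / steps
    total = pdf(0) + pdf(abs(x))
    total += sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, steps))
    return 0.5 + math.copysign(total * h / 3, x)

# Inflation rates (percent) for 1982 through 2003, from the table.
inflation = [6.2, 3.2, 4.3, 3.6, 1.9, 3.6, 4.1, 4.8, 5.4, 4.2, 3.0,
             3.0, 2.6, 2.8, 3.0, 2.3, 1.6, 2.2, 3.4, 2.8, 1.6, 1.8]

mean = round(statistics.mean(inflation), 1)
stdev = round(statistics.stdev(inflation), 1)      # sample standard deviation
threshold = round(100 * 2 / 38, 2)                 # $2 million on a $38 million base

test_statistic = (threshold - mean) / stdev
p_exceed = 1 - t_cdf(test_statistic, df=len(inflation) - 1)
print(mean, stdev, round(test_statistic, 3), round(p_exceed, 4))
```

Using the unrounded mean and standard deviation would give a slightly different test statistic and probability.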

t-Distribution

Example

Your firm has negotiated a labor contract that requires that the firm provide annual raises no less than the rate of inflation. This year, the total cost of labor covered under the contract will be $38 million. Your CFO has indicated that the firm’s current financing can support up to a $2 million increase in labor costs. The CFO wants to know the magnitude of a possible “worst-case” scenario. Answer the following: “There is a 90% chance that the increase in labor costs will be no more than what amount?”

t Distribution
Degrees of Freedom         21
Pr(t > Critical value)     10.00%
Critical Value             1.3232

1.3232 = (Test Value – 3.2%) / 1.2%, so Test Value = 4.79%

A 4.79% increase on a $38 million base is (4.79%)($38 million) = $1.82 million.

t-Distribution

The government has contracted a private firm to produce hand grenades. The specifications

call for the grenades to have 10 second fuses. The government has received a shipment of

100,000 grenades and will test a sample of 20 grenades. If, based on the sample, the

government determines that the probability of a grenade going off in less than 8 seconds

exceeds 1%, then the government will reject the entire shipment.

The test results are as follows.

Time to Detonation      Number of Grenades
8 seconds               2
9 seconds               3
10 seconds              10
11 seconds              3
12 seconds              1
13 seconds              1

In general, one would not expect time measures to be normally distributed (because time

cannot be negative). However, if the ratio of the mean to the standard deviation is large

enough, we can use the normal distribution as an approximation.

Should the government reject the shipment?

t-Distribution

First: What is the ratio of the mean to the standard deviation?

Mean = 10.05 seconds

Standard deviation = 1.20 seconds

Ratio is 8.375.

A ratio of greater than 8 is a decent heuristic.

This is not a rigorous test for the appropriateness of the normal distribution. But,

it is not too bad for a “quick and dirty assessment.”

t-Distribution

Should the government reject the shipment?

Naïve answer: Don’t reject the shipment because none of the grenades detonated in less than 8 seconds, so Pr(detonation in less than 8 seconds) = 0.

[Histogram: shows the number of observations according to type. Horizontal axis: Seconds to Detonation (1 to 19); vertical axis: Number of Grenades (0 to 12). Annotation: No grenades detonated in less than 8 seconds.]

t-Distribution

Should the government reject the shipment?

Correct answer:

We use the sample data to infer the shape of the population distribution.

[Figure: Inferred population distribution shows a positive probability of finding detonation times of less than 8 seconds.]

t-Distribution

Should the government reject the shipment?

Correct answer:

1. Find the test statistic that corresponds to 8 seconds.

Test statistic = (8 – 10.05) / 1.2 = –1.71

2. Find the area to the left of the test statistic.

t Distribution
Test statistic             –1.710
Degrees of Freedom         19
Pr(t > Test statistic)     94.82%
Pr(t < Test statistic)     5.18%

Pr(detonation < 8 seconds) = 5.2%

3. Reject the shipment because the probability of early detonation is too high.

Lognormal Distribution

In the previous example, we noted that the normal distribution may not properly

describe the behavior of random variables that are bounded.

A normally distributed random variable can take on any value from negative infinity to

positive infinity. If the random variable you are analyzing is bounded (i.e. it cannot

cover the full range from negative to positive infinity), then using the normal

distribution to predict the behavior of the random variable can lead to erroneous

results.

Example:

Using the data from the hand grenade example, the probability of a single grenade

detonating in less than zero seconds is 0.0001. That means that, on average, we can

expect one grenade out of every 10,000 to explode after a negative time interval.

Since this is logically impossible, we must conclude that the normal distribution is not

the appropriate distribution for describing time-to-detonation.

Lognormal Distribution

In instances in which a random variable must take on a positive value, it is often the

case that the random variable has a lognormal distribution.

A random variable is lognormally distributed when the natural logarithm of the

random variable is normally distributed.

Example: Return to the hand grenade example.

Time to Detonation      Log of Time to Detonation      Number of Grenades
8 seconds               2.0794                         2
9 seconds               2.1972                         3
10 seconds              2.3026                         10
11 seconds              2.3979                         3
12 seconds              2.4849                         1
13 seconds              2.5649                         1

As time approaches positive infinity, ln(time) approaches positive infinity.

As time approaches zero, ln(time) approaches negative infinity.

Lognormal Distribution

Assuming that the times-to-detonation were normally distributed, we found a 5.2% probability of detonation occurring in under 8 seconds.

Assuming that the times-to-detonation are lognormally distributed, what is the probability of detonation occurring in under 8 seconds?

Log of Time to Detonation      Number of Grenades
2.0794                         2
2.1972                         3
2.3026                         10
2.3979                         3
2.4849                         1
2.5649                         1

Mean = 2.3010

Standard deviation = 0.1175

Test statistic = (ln(8) – 2.3010) / 0.1175 = –1.8856

t Distribution
Test statistic             –1.886
Degrees of Freedom         19
Pr(t > Test statistic)     96.27%
Pr(t < Test statistic)     3.73%

Pr(detonation < 8 seconds) = 3.7%
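Both grenade calculations can be run from the frequency table in Python. This is a sketch: `t_cdf` and `prob_below` are illustrative helpers, and the code uses the unrounded sample standard deviation, so the normal-based figure lands close to, but not exactly on, the value obtained with the rounded 1.2 seconds.

```python
import math
import statistics

def t_cdf(x, df, steps=2000):
    """Pr(t <= x) for Student's t with df degrees of freedom (Simpson's rule)."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def pdf(u):
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    h = abs(x) / steps
    total = pdf(0) + pdf(abs(x))
    total += sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, steps))
    return 0.5 + math.copysign(total * h / 3, x)

counts = {8: 2, 9: 3, 10: 10, 11: 3, 12: 1, 13: 1}        # seconds: grenades
times = [t for t, n in counts.items() for _ in range(n)]   # expand to 20 observations
logs = [math.log(t) for t in times]

def prob_below(value, sample):
    """Pr(next observation < value), using the sample mean and standard deviation."""
    stat = (value - statistics.mean(sample)) / statistics.stdev(sample)
    return t_cdf(stat, df=len(sample) - 1)

p_normal = prob_below(8, times)                 # times treated as normal
p_lognormal = prob_below(math.log(8), logs)     # logs of times treated as normal
print(round(p_normal, 3), round(p_lognormal, 3))
```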

Lognormal Distribution

Example

You are considering buying stock in a small cap firm. The firm’s sales over the past

nine quarters are shown below. You expect your investment to appreciate in value

next quarter provided that the firm’s sales next quarter exceed $27 million. Based on

this assumption, what is the probability that your investment will appreciate in value?

Quarter      Sales (millions)
1            $25.2
2            $12.1
3            $27.9
4            $28.9
5            $32.0
6            $29.9
7            $34.4
8            $29.8
9            $23.2

Because sales cannot be negative, it may be more appropriate to model the firm’s sales as lognormal rather than normal.

Lognormal Distribution

Example

What is the probability that the firm’s sales will exceed $27 million next quarter?

Quarter      Sales (millions)      ln(Sales)
1            $25.2                 3.227
2            $12.1                 2.493
3            $27.9                 3.329
4            $28.9                 3.364
5            $32.0                 3.466
6            $29.9                 3.398
7            $34.4                 3.538
8            $29.8                 3.395
9            $23.2                 3.144

Mean = 3.261

Standard deviation = 0.311

Test statistic = (ln(27) – 3.261) / 0.311 = 0.1106

t Distribution
Test statistic             0.1106
Degrees of Freedom         8.0
Pr(t > Test statistic)     45.73%
Pr(t > Critical value)     1.00%
Critical Value             2.896

Pr(sales exceeding $27 million next quarter) = 46%

Odds are that the investment will decline in value.

Lognormal Distribution

Example

Suppose we, incorrectly, assumed that sales were normally distributed.

Quarter      Sales (millions)
1            $25.2
2            $12.1
3            $27.9
4            $28.9
5            $32.0
6            $29.9
7            $34.4
8            $29.8
9            $23.2

Mean = 27.044

Standard deviation = 6.520

Test statistic = (27 – 27.044) / 6.520 = –0.007

t Distribution
Test statistic             –0.007
Degrees of Freedom         8.0
Pr(t > Test statistic)     50.27%
Pr(t > Critical value)     1.00%
Critical Value             2.896

Pr(sales exceeding $27 million next quarter) > 50%

Odds are that the investment will increase in value.

Incorrect distribution yields opposite conclusion.
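The lognormal and normal treatments of the sales data differ only in whether logs are taken first. A Python sketch of both, with `t_cdf` and `prob_above` as illustrative helpers:

```python
import math
import statistics

def t_cdf(x, df, steps=2000):
    """Pr(t <= x) for Student's t with df degrees of freedom (Simpson's rule)."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def pdf(u):
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    h = abs(x) / steps
    total = pdf(0) + pdf(abs(x))
    total += sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, steps))
    return 0.5 + math.copysign(total * h / 3, x)

sales = [25.2, 12.1, 27.9, 28.9, 32.0, 29.9, 34.4, 29.8, 23.2]   # $ millions
log_sales = [math.log(s) for s in sales]

def prob_above(value, sample):
    """Pr(next observation > value), using the sample mean and standard deviation."""
    stat = (value - statistics.mean(sample)) / statistics.stdev(sample)
    return 1 - t_cdf(stat, df=len(sample) - 1)

p_lognormal = prob_above(math.log(27), log_sales)   # sales modeled as lognormal
p_normal = prob_above(27, sales)                    # sales modeled as normal
print(round(p_lognormal, 4), round(p_normal, 4))
```

The two probabilities fall on opposite sides of 50%, which is why the choice of distribution changes the conclusion.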

Lognormal Distribution

Warning:

The “mean of the logs” is not the same as the “log of the mean.”

Mean sales = 27.04

ln(27.044) = 3.298

But:

Mean of log sales = 3.261

The same is true for the standard deviation: the “standard deviation of the logs” is not the same as the “log of the standard deviation.”

In using the lognormal distribution, we need the “mean of the logs,” and the

“standard deviation of the logs.”
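The warning can be verified directly on the quarterly sales figures. The variable names in this Python sketch are illustrative.

```python
import math
import statistics

sales = [25.2, 12.1, 27.9, 28.9, 32.0, 29.9, 34.4, 29.8, 23.2]   # $ millions

log_of_mean = math.log(statistics.mean(sales))
mean_of_logs = statistics.mean([math.log(s) for s in sales])

print(round(log_of_mean, 3), round(mean_of_logs, 3))
```

For positive data the mean of the logs never exceeds the log of the mean, so substituting one for the other biases the test statistic.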

Lognormal Distribution

When should one use the lognormal distribution?

You should use the lognormal distribution if the random variable is either non-negative or non-positive

Can one use the normal distribution as an approximation of the lognormal distribution?

Yes, but only when the ratio of the mean to the standard deviation is large (e.g. greater than 8).

Note: If the random variable is only positive (or only negative), then you are always better off using the

lognormal distribution vs. the normal or t-distributions. The rules above give guidance for using the

normal or t-distributions as approximations.

Hand grenade example

Mean / Standard deviation = 10.05 / 1.20 = 8.38

Normal distribution overestimated probability of early detonation by 1.5 percentage points (3.7% for lognormal vs. 5.2% for t-distribution)

Quarterly sales example

Mean / Standard deviation = 27.04 / 6.52 = 4.15

Normal distribution overestimated probability of appreciation by 4.6 percentage points (45.7% for lognormal vs. 50.3% for t-distribution)

Distribution of Sample Means

So far, we have looked at the distribution of individual observations.

Gas mileage for a single SUV.

Burn life for a single light bulb.

Return on IBM stock next year.

Inflation rate next year.

Time to detonation for a single hand grenade.

Firm’s sales next quarter.

In each case, we had sample means and sample standard deviations and asked,

“What is the probability of the next observation lying within some range?”

Note: Although we drew on information contained in a sample of many observations,

the probability questions we asked always concerned a single observation.

In these cases, the random variable we analyzed was a “single draw” from the

population.

Distribution of Sample Means

We now want to ask probability questions about sample means.

Example:

EPA standards require that the mean gas mileage for a manufacturer’s cars be at least

20 mpg. Every year, the EPA takes a sampling of the gas mileages of a manufacturer’s

cars. If the mean of the sample is below 20 mpg, the manufacturer is fined.

In 2001, GM produced 145,000 cars. Suppose five EPA analysts each select 10 cars and measure their mileages. The analysts obtain the following results.

Analyst #1    Analyst #2    Analyst #3    Analyst #4    Analyst #5
17            22            16            21            24
16            22            20            20            24
19            19            17            22            20
21            22            17            20            22
19            25            23            18            17
21            18            23            22            23
16            16            19            19            22
16            24            22            23            15
19            18            20            17            19
22            15            15            21            15

Distribution of Sample Means

Notice that each analyst obtained a different sample mean. The sample means are:

Analyst #1: 18.6

Analyst #2: 20.1

Analyst #3: 19.2

Analyst #4: 20.3

Analyst #5: 20.1

The analysts obtain different sample means because their samples consist of

different observations. Which is correct?

Each sample mean is an estimate of the population mean.

The sample means vary depending on the observations picked.

The sample means are, themselves, random variables.
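The five sample means can be recomputed from the analysts' data. This Python sketch uses an illustrative dictionary layout.

```python
samples = {
    "Analyst #1": [17, 16, 19, 21, 19, 21, 16, 16, 19, 22],
    "Analyst #2": [22, 22, 19, 22, 25, 18, 16, 24, 18, 15],
    "Analyst #3": [16, 20, 17, 17, 23, 23, 19, 22, 20, 15],
    "Analyst #4": [21, 20, 22, 20, 18, 22, 19, 23, 17, 21],
    "Analyst #5": [24, 24, 20, 22, 17, 23, 22, 15, 19, 15],
}

# Each analyst's sample mean is a different estimate of the same population mean.
sample_means = {name: sum(mpg) / len(mpg) for name, mpg in samples.items()}

for name, mean in sample_means.items():
    print(f"{name}: {mean:.1f}")
```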

Distribution of Sample Means

Notice that we have identified two distinct random variables:

1. The process that generates the observations is one random variable (e.g. the mechanism that determines each car’s mpg).

2. The mean of a sample of observations is another random variable (e.g. the average mpg of a sample of cars).

The distribution of sample means is governed by the central limit theorem.

Central Limit Theorem

Regardless of the distribution of the random variable generating the observations, the sample means of the observations are approximately normally distributed, provided that the sample size is large enough. Because we estimate the standard deviation from the sample, we treat the sample means as t-distributed.

Example:

It doesn’t matter whether mileage is distributed normally, lognormally, or according to any other distribution; the sample means of gas mileages are (approximately) t-distributed.

Distribution of Sample Means

Example:

The following slides show sample means taken from a uniformly distributed random variable.

The random variable can take on any number over the range 0 through 1 with equal

probability.

For each slide, we see the mean of a sample of observations of this uniformly

distributed random variable.

Distribution of Sample Means

[Histogram: One Thousand Sample Means: Each Derived from 1 Observation. Horizontal axis: Value of Sample Mean (0.00 to 0.92); vertical axis: Number of Sample Means Observed (0 to 35).]

Distribution of Sample Means

[Histogram: One Thousand Sample Means: Each Derived from 2 Observations. Horizontal axis: Value of Sample Mean (0.00 to 0.92); vertical axis: Number of Sample Means Observed (0 to 60).]

Distribution of Sample Means

[Histogram: One Thousand Sample Means: Each Derived from 5 Observations. Horizontal axis: Value of Sample Mean (0.00 to 0.92); vertical axis: Number of Sample Means Observed (0 to 80).]

Distribution of Sample Means

[Histogram: One Thousand Sample Means: Each Derived from 20 Observations. Horizontal axis: Value of Sample Mean (0.00 to 0.92); vertical axis: Number of Sample Means Observed (0 to 160).]

Distribution of Sample Means

[Histogram: One Thousand Sample Means: Each Derived from 200 Observations. Horizontal axis: Value of Sample Mean (0.00 to 0.92); vertical axis: Number of Sample Means Observed (0 to 400).]

Distribution of Sample Means

Notice two things that occur as we increase the number of observations that feed into

each sample.

1. The distribution of sample means very quickly becomes “bell shaped.” This is the

result of the central limit theorem – basing a sample mean on more observations

causes the sample mean’s distribution to approach the normal distribution.

2. The variance of the distribution decreases. This is the result of our next topic – the

variance of a sample mean.

The variance of a sample mean decreases as the number of observations comprising the

sample increases.

Standard deviation of sample means = (Standard deviation of the observations) / √(Number of observations comprising the sample means)

The standard deviation of sample means is called the “standard error.”

Distribution of Sample Means

Example:

In the previous slides, we saw sample means of observations drawn from a uniformly

distributed random variable.

The variance of a uniformly distributed random variable that ranges from 0 to 1 is 1/12.

Therefore:

Variance of sample means based on 1 observation = (1/12) / 1 = 0.0833

Variance of sample means based on 2 observations = (1/12) / 2 = 0.0417

Variance of sample means based on 5 observations = (1/12) / 5 = 0.0167

Variance of sample means based on 20 observations = (1/12) / 20 = 0.0042

Variance of sample means based on 200 observations = (1/12) / 200 = 0.0004
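The theoretical variances can be compared with a simulation. The Python sketch below draws one thousand sample means for each sample size from a uniform random variable on 0 through 1; the seed and function name are arbitrary choices for illustration, and simulated values will differ slightly from theory.

```python
import random
import statistics

random.seed(0)   # fixed seed so the run is repeatable

def variance_of_sample_means(n, trials=1000):
    """Variance across `trials` sample means, each based on n uniform draws."""
    means = [sum(random.random() for _ in range(n)) / n for _ in range(trials)]
    return statistics.variance(means)

results = {n: variance_of_sample_means(n) for n in (1, 2, 5, 20, 200)}

for n, observed in results.items():
    theory = (1 / 12) / n
    print(f"n = {n:3d}   simulated = {observed:.4f}   theory = {theory:.4f}")
```

Taking the square root of each variance gives the standard error for that sample size.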

Distribution of Sample Means

Example:

Let us return to the EPA analysts.