Topic_9

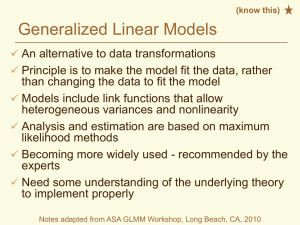

Topic 9: Remedies

Outline
• Review diagnostics for residuals
• Discuss remedies
  – Nonlinear relationship
  – Nonconstant variance
  – Non-Normal distribution
  – Outliers

Diagnostics for residuals
• Look at residuals to find serious violations of the model assumptions
  – nonlinear relationship
  – nonconstant variance
  – non-Normal errors
    • presence of outliers
    • a strongly skewed distribution

Recommendations for checking assumptions
• Plot Y vs X (is it a linear relationship?)
• Look at the distribution of the residuals
• Plot residuals vs X, time, or any other potential explanatory variable
• Use i=sm## in the symbol statement to get smoothed curves

Plots of Residuals
• Plot residuals vs
  – Time (order)
  – X or predicted value ($b_0 + b_1 X$)
• Look for
  – nonrandom patterns
  – outliers (unusual observations)

Residuals vs Order
• A pattern in the plot suggests dependent errors (lack of independence)
• The pattern is usually a linear or quadratic trend and/or cyclical
• If you are interested, read KNNL pp 108-110

Residuals vs X
• Can look for
  – nonconstant variance
  – nonlinear relationship
  – outliers
  – somewhat address Normality of residuals

Tests for Normality
• H0: data are an i.i.d. sample from a Normal population
• Ha: data are not an i.i.d. sample from a Normal population
• KNNL (p 115) suggest a correlation test that requires a table look-up
• We have several choices for a significance testing procedure
• Proc univariate with the normal option provides four tests

    proc univariate normal;

• Shapiro-Wilk is a common choice

    Test                  Statistic            p Value
    Shapiro-Wilk          W      0.978904      Pr < W      0.8626
    Kolmogorov-Smirnov    D      0.09572       Pr > D      >0.1500
    Cramer-von Mises      W-Sq   0.033263      Pr > W-Sq   >0.2500
    Anderson-Darling      A-Sq   0.207142      Pr > A-Sq   >0.2500

• All P-values > 0.05, so do not reject H0

Other tests for model assumptions
• Durbin-Watson test for serially correlated errors (KNNL p 114)
• Modified Levene test for homogeneity of variance (KNNL pp 116-118)
• Breusch-Pagan test for homogeneity of variance (KNNL p 118)
• For SAS commands see topic9.sas

Plots vs significance test
• Plots are more likely to suggest a remedy
• Significance test results depend heavily on the sample size; with sufficiently large samples we can reject most null hypotheses

Default graphics with SAS 9.3

    proc reg data=toluca;
      model hours=lotsize;
      id lotsize;
    run;

• Will discuss these diagnostics more in multiple regression
• Provides rule of thumb limits
• Questionable observation (30,273)

Additional summaries
• Rstudent: Studentized residual; almost all should be between ±2
• Leverage: "distance" of X from the center; helps determine outlying X values in a multivariable setting; outlying X values may be influential
• Cook's D: influence of the ith case on all predicted values

Lack of fit
• When we have repeat observations at different values of X, we can do a significance test for nonlinearity
• Browse through KNNL Section 3.7
• Details of the approach are discussed when we get to KNNL 17.9, p 762
• Basic idea is to compare two models
• Gplot with a smooth is a better (i.e., simpler) approach

SAS code and output

    proc reg data=toluca;
      model hours=lotsize / lackfit;
    run;

    Analysis of Variance
    Source             DF   Sum of Squares   Mean Square   F Value   Pr > F
    Model               1           252378        252378    105.88   <.0001
    Error              23            54825    2383.71562
      Lack of Fit       9            17245    1916.06954      0.71   0.6893
      Pure Error       14            37581    2684.34524
    Corrected Total    24           307203

Nonlinear relationships
• We can model many nonlinear relationships with linear models; some have several explanatory variables (i.e., multiple linear regression)
  – $Y = \beta_0 + \beta_1 X + \beta_2 X^2 + e$ (quadratic)
  – $Y = \beta_0 + \beta_1 \log(X) + e$
• Sometimes we can transform a nonlinear equation into a linear equation
• Consider $Y = \beta_0 \exp(\beta_1 X) + e$
• Can form a linear model using the log: $\log(Y) = \log(\beta_0) + \beta_1 X + \log(e)$
• Note that we have changed our assumption about the error: the log form corresponds to a multiplicative error, $Y = \beta_0 \exp(\beta_1 X) \cdot e$, not the additive error in the equation above
• We can also perform a nonlinear regression analysis
  – KNNL Chapter 13
  – SAS PROC NLIN

Nonconstant variance
• Sometimes we model the way in which the error variance changes
  – may be linearly related to X
• We can then use a weighted analysis
• KNNL 11.1
• Use a weight statement in PROC REG

Non-Normal errors
• Transformations often help
• Use a procedure that allows different distributions for the error term
  – SAS PROC GENMOD

Generalized Linear Model
• Possible distributions of Y:
  – Binomial (Y/N or percentage data)
  – Poisson (count data)
  – Gamma (exponential)
  – Inverse Gaussian
  – Negative binomial
  – Multinomial
• Specify a link function for E(Y)

Ladder of Reexpression (transformations)
• Transformation is $x^p$
• Rungs of the ladder: p = 1.5, 1.0, 0.5, 0.0, -0.5, -1.0

Circle of Transformations
[Figure: X and Y axes with four quadrants labeled "X down, Y up" (upper left), "X up, Y up" (upper right), "X down, Y down" (lower left), "X up, Y down" (lower right)]

Box-Cox Transformations
• Also called power transformations
• These transformations adjust for non-Normality and nonconstant variance
• $Y' = Y^{\lambda}$ or $Y' = (Y^{\lambda} - 1)/\lambda$
• In the second form, the limit as $\lambda$ approaches zero is the (natural) log

Important Special Cases
• $\lambda = 1$: $Y' = Y^{1}$, no transformation
• $\lambda = .5$: $Y' = Y^{1/2}$, square root
• $\lambda = -.5$: $Y' = Y^{-1/2}$, one over square root
• $\lambda = -1$: $Y' = Y^{-1} = 1/Y$, inverse
• $\lambda = 0$: $Y' = $ (natural) log of Y

Box-Cox Details
• We can estimate $\lambda$ by including it as a parameter in a nonlinear model, $Y^{\lambda} = \beta_0 + \beta_1 X + e$, and using the method of maximum likelihood
• Details are in KNNL pp 134-137
• SAS code is in boxcox.sas

Box-Cox Solution
• Standardized transformed Y is
  – $K_1 (Y^{\lambda} - 1)$ if $\lambda \ne 0$
  – $K_2 \log(Y)$ if $\lambda = 0$
  where $K_2 = (\prod_i Y_i)^{1/n}$ (the geometric mean) and $K_1 = 1/(\lambda K_2^{\lambda - 1})$
• Run regressions with X as explanatory variable
• The estimated $\lambda$ minimizes SSE

Example

    data a1;
      input age plasma @@;
      cards;
    0 13.44 0 12.84 0 11.91 0 20.09 0 15.60
    1 10.11 1 11.38 1 10.28 1  8.96 1  8.59
    2  9.83 2  9.00 2  8.65 2  7.85 2  8.88
    3  7.94 3  6.01 3  5.14 3  6.90 3  6.77
    4  4.86 4  5.10 4  5.67 4  5.75 4  6.23
    ;

Box Cox Procedure

    *Procedure that will automatically find the Box-Cox transformation;
    proc transreg data=a1;
      model boxcox(plasma)=identity(age);
    run;

    Transformation Information for BoxCox(plasma)
    Lambda     R-Square    Log Like
     -2.50         0.76    -17.0444
     -2.00         0.80    -12.3665
     -1.50         0.83     -8.1127
     -1.00         0.86     -4.8523 *
     -0.50         0.87     -3.5523 <
      0.00 +       0.85     -5.0754 *
      0.50         0.82     -9.2925
      1.00         0.75    -15.2625
      1.50         0.67    -22.1378
      2.00         0.59    -29.4720
      2.50         0.50    -37.0844

    < - Best Lambda
    * - Confidence Interval
    + - Convenient Lambda

    *The first part of the program gets the geometric mean;
    data a2; set a1;
      lplasma=log(plasma);
    proc univariate data=a2 noprint;
      var lplasma;
      output out=a3 mean=meanl;

    *Standardized Box-Cox transform of plasma for each lambda (l) on a grid;
    data a4; set a2;
      if _n_ eq 1 then set a3;
      keep age yl l;
      k2=exp(meanl);                 *geometric mean of plasma;
      do l = -1.0 to 1.0 by .1;
        if abs(l) < 1E-8 then yl=k2*log(plasma);   *lambda = 0 case;
        else do;
          k1=1/(l*k2**(l-1));
          yl=k1*(plasma**l -1);
        end;
        output;
      end;

    *One regression per lambda, then recover SSE from the root MSE;
    proc sort data=a4 out=a4; by l;
    proc reg data=a4 noprint outest=a5;
      model yl=age;
      by l;
    data a5; set a5;
      n=25; p=2;
      sse=(n-p)*(_rmse_)**2;
    proc print data=a5;
      var l sse;
    run;

    Obs      l        sse
      1    -1.0    33.9089
      2    -0.9    32.7044
      3    -0.8    31.7645
      4    -0.7    31.0907
      5    -0.6    30.6868
      6    -0.5    30.5596
      7    -0.4    30.7186
      8    -0.3    31.1763
      9    -0.2    31.9487
     10    -0.1    33.0552

    *Plot SSE against lambda;
    symbol1 v=none i=join;
    proc gplot data=a5;
      plot sse*l;
    run;

    *Apply the chosen transformation and check the fit with a smooth;
    data a1; set a1;
      tplasma = plasma**(-.5);
      tage = (age+.5)**(-.5);
    symbol1 v=circle i=sm50;
    proc gplot;
      plot tplasma*age;
    proc sort; by tage;
    proc gplot;
      plot tplasma*tage;
    run;

Background Reading
• Sections 3.4 - 3.7 describe significance tests for assumptions (read them if you are interested)
• Box-Cox transformation is in nknw132.sas
• Read sections 4.1, 4.2, 4.4, 4.5, and 4.6
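
Supplementary note on the Box-Cox slides: they state that the second form of the transformation tends to the natural log as the power parameter approaches zero, and that the standardized transform switches to the geometric mean times log(Y) in that case. The short derivation below is a sketch of why both statements hold. It writes λ for the power parameter and assumes Y > 0; the use of L'Hôpital's rule is this note's choice of argument, not something taken from the slides or from KNNL.

```latex
\begin{align*}
\lim_{\lambda \to 0} \frac{Y^{\lambda} - 1}{\lambda}
  &= \lim_{\lambda \to 0} \frac{e^{\lambda \log Y} - 1}{\lambda}
     && \text{(since } Y^{\lambda} = e^{\lambda \log Y} \text{ for } Y > 0\text{)} \\
  &= \lim_{\lambda \to 0} \frac{(\log Y)\, e^{\lambda \log Y}}{1}
     && \text{(L'H\^opital's rule, differentiating in } \lambda\text{)} \\
  &= \log Y . \\[6pt]
K_1 \left( Y^{\lambda} - 1 \right)
  &= \frac{Y^{\lambda} - 1}{\lambda \, K_2^{\lambda - 1}}
   = K_2^{\,1 - \lambda} \cdot \frac{Y^{\lambda} - 1}{\lambda}
   \;\longrightarrow\; K_2 \log Y
   \quad \text{as } \lambda \to 0 .
\end{align*}
```

So the λ = 0 branch of the standardized transform is the continuous limit of the λ ≠ 0 branch, which is why SSE values computed on a grid of λ that includes zero can be compared on the same scale.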