Slides PDF - Spark Summit

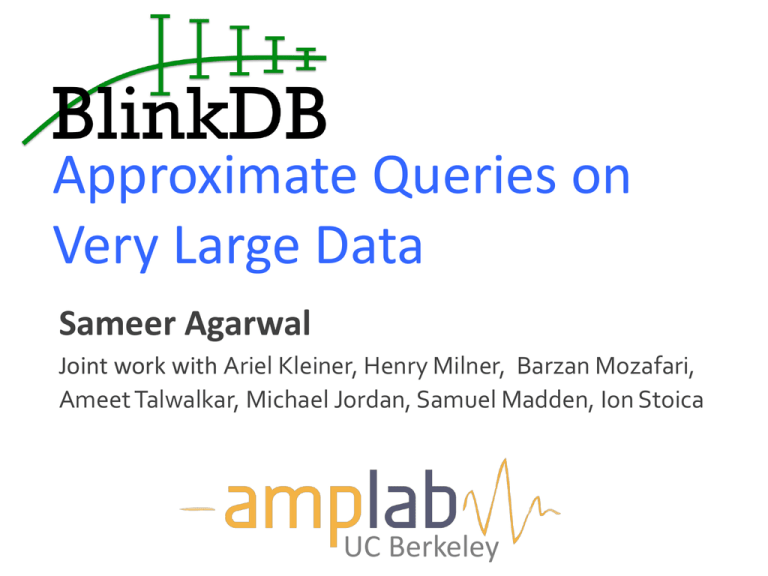

Approximate Queries on Very Large Data

Sameer Agarwal

Joint work with Ariel Kleiner, Henry Milner, Barzan Mozafari, Ameet Talwalkar, Michael Jordan, Samuel Madden, Ion Stoica

UC Berkeley

Our Goal

Support interactive SQL-like aggregate queries over massive sets of data.

AVG, COUNT, SUM, STDEV, PERCENTILE etc.:

    blinkdb> SELECT AVG(jobtime) FROM very_big_log

Filters, GROUP BY clauses:

    blinkdb> SELECT AVG(jobtime) FROM very_big_log WHERE src = 'hadoop'

Joins, nested queries etc.:

    blinkdb> SELECT AVG(jobtime) FROM very_big_log LEFT OUTER JOIN logs2 ON very_big_log.id = logs2.id WHERE src = 'hadoop'

ML primitives, user-defined functions:

    blinkdb> SELECT my_function(jobtime) FROM very_big_log LEFT OUTER JOIN logs2 ON very_big_log.id = logs2.id WHERE src = 'hadoop'

100 TB on 1000 machines

 - ½ - 1 hour (hard disks)
 - 1 - 5 minutes (memory)
 - 1 second (?)

Query Execution on Samples

| ID | City | Buff Ratio |
|----|----------|------|
| 1 | NYC | 0.78 |
| 2 | NYC | 0.13 |
| 3 | Berkeley | 0.25 |
| 4 | NYC | 0.19 |
| 5 | NYC | 0.11 |
| 6 | Berkeley | 0.09 |
| 7 | NYC | 0.18 |
| 8 | NYC | 0.15 |
| 9 | Berkeley | 0.13 |
| 10 | Berkeley | 0.49 |
| 11 | NYC | 0.19 |
| 12 | Berkeley | 0.10 |

What is the average buffering ratio in the table? Over all 12 rows the answer is 0.2325.
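To make the running example concrete, here is a minimal Python sketch that answers the question above twice: exactly, by scanning all 12 rows, and approximately, from a uniform sample in which each row is kept independently with probability `rate`. The function names, the seed, and the choice of Python are illustrative assumptions; nothing here is BlinkDB's own API.

```python
import random

# The 12-row example table from the slides: (id, city, buffering ratio).
ROWS = [
    (1, "NYC", 0.78), (2, "NYC", 0.13), (3, "Berkeley", 0.25),
    (4, "NYC", 0.19), (5, "NYC", 0.11), (6, "Berkeley", 0.09),
    (7, "NYC", 0.18), (8, "NYC", 0.15), (9, "Berkeley", 0.13),
    (10, "Berkeley", 0.49), (11, "NYC", 0.19), (12, "Berkeley", 0.10),
]


def exact_avg(rows):
    """Average buffering ratio over every row (the full scan)."""
    return sum(r[2] for r in rows) / len(rows)


def uniform_sample(rows, rate, rng):
    """Keep each row independently with probability `rate`."""
    return [r for r in rows if rng.random() < rate]


def sampled_avg(rows, rate, seed=0):
    """Estimate the average from a uniform sample; None if the sample is empty."""
    sample = uniform_sample(rows, rate, random.Random(seed))
    if not sample:
        return None
    return sum(r[2] for r in sample) / len(sample)


print(round(exact_avg(ROWS), 4))   # 0.2325
print(sampled_avg(ROWS, 0.5))      # an estimate; the value depends on the seed
```

On a table this small the estimate can be far from 0.2325; the approach only pays off when the full scan is expensive and the sample is still large in absolute terms.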
Uniform Sample (sampling rate 1/4)

| ID | City | Buff Ratio | Sampling Rate |
|----|----------|------|-----|
| 2 | NYC | 0.13 | 1/4 |
| 6 | Berkeley | 0.25 | 1/4 |
| 8 | NYC | 0.19 | 1/4 |

Full table: 0.2325. Sample: 0.19, reported with error bars as 0.19 +/- 0.05.

Uniform Sample (sampling rate 1/2)

| ID | City | Buff Ratio | Sampling Rate |
|----|----------|------|-----|
| 2 | NYC | 0.13 | 1/2 |
| 3 | Berkeley | 0.25 | 1/2 |
| 5 | NYC | 0.19 | 1/2 |
| 6 | Berkeley | 0.09 | 1/2 |
| 8 | NYC | 0.18 | 1/2 |
| 12 | Berkeley | 0.49 | 1/2 |

Full table: 0.2325. Rate 1/4: 0.19 +/- 0.05. Rate 1/2: 0.22 +/- 0.02.

Speed/Accuracy Trade-off

[Figure: error versus execution time (sample size), with the time axis running from 2 sec to 30 mins. Labels on the plot: "Interactive Queries", "Time to Execute on Entire Dataset", "Pre-Existing Noise".]

Sampling Vs. No Sampling

| Fraction of full data | Query response time (seconds) | Error bars |
|-------|------|---------|
| 1 | 1020 | |
| 10^-1 | 103 | (0.02%) |
| 10^-2 | 18 | (0.07%) |
| 10^-3 | 13 | (1.1%) |
| 10^-4 | 10 | (3.4%) |
| 10^-5 | 8 | (11%) |

The first step is 10x, as response time is dominated by I/O.

What is BlinkDB?

A framework built on Shark and Spark that …
 - creates and maintains a variety of uniform and stratified samples from underlying data
 - returns fast, approximate answers with error bars by executing queries on samples of data
 - verifies the correctness of the error bars that it returns at runtime
Uniform Samples

Input: the 12-row example table (ID, City, Data), where Data is the buffering ratio. The uniform sampling operator U:

 1. FILTER rand() < 1/3
 2. Adds per-row weights
 3. (Optional) ORDER BY rand()

| ID | City | Data | Weight |
|----|----------|------|-----|
| 2 | NYC | 0.13 | 1/3 |
| 8 | NYC | 0.25 | 1/3 |
| 6 | Berkeley | 0.09 | 1/3 |
| 11 | NYC | 0.19 | 1/3 |

Doesn't change Shark RDD semantics.

Stratified Samples

Input: the same 12-row table. The stratified sampling operator S:

 1. SPLIT: the input feeds two branches, S1 and S2.
 2. GROUP (S2): count the rows per city.
 3. Add a per-city sampling ratio.

| City | Count | Ratio |
|----------|---|-----|
| NYC | 7 | 2/7 |
| Berkeley | 5 | 2/5 |

 4. JOIN: S2 is joined back to S1, attaching the ratio to every row.
 5. U: uniform sampling with the per-row ratio.

| ID | City | Data | Weight |
|----|----------|------|-----|
| 2 | NYC | 0.13 | 2/7 |
| 8 | NYC | 0.25 | 2/7 |
| 6 | Berkeley | 0.09 | 2/5 |
| 12 | Berkeley | 0.49 | 2/5 |

Doesn't change Shark RDD semantics.

Error Estimation

Closed Form Aggregate Functions
 - Central Limit Theorem
 - Applicable to AVG, COUNT, SUM, VARIANCE and STDEV

[Diagram: an aggregate A (AVG, SUM, COUNT, STDEV, VARIANCE) evaluated on the sample yields an answer ±ε.]

Generalized Aggregate Functions
 - Statistical Bootstrap
 - Applicable to complex and nested queries, UDFs, joins etc.

[Diagram: the query A is run on resamples 1, 2, …, 100 of the sample, and the bootstrap operator B turns the results into ±ε.]
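The closed-form case can be sketched in a few lines of Python. The sketch below applies the Central Limit Theorem to AVG: the error bar is `z` times the standard error of the sample mean. The function name, the 95% level (`z = 1.96`), and the use of the rate-1/2 example sample as input are assumptions made for illustration; this is not BlinkDB's implementation, and it is not meant to reproduce the illustrative ± figures on the slides.

```python
import math


def avg_with_clt_error(values, z=1.96):
    """Return (mean, half_width) for a CLT-based confidence interval on AVG.

    half_width = z * s / sqrt(n), where s is the sample standard deviation.
    z = 1.96 corresponds to an approximate 95% interval (an assumed default).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values to estimate the variance")
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / (n - 1)
    return mean, z * math.sqrt(variance / n)


# Buffering ratios of the six rows in the rate-1/2 uniform sample.
sample = [0.13, 0.25, 0.19, 0.09, 0.18, 0.49]
mean, half_width = avg_with_clt_error(sample)
print(f"{mean:.2f} +/- {half_width:.2f}")
```

With only six rows the normal approximation is rough; the closed form becomes trustworthy as the sample grows, which is the regime the slides target.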
Kleiner's Diagnostics

[Figure: error versus sample size; more data gives higher accuracy. 300 data points, 97% accuracy [KDD 13].]

Single Pass Execution

A is the approximate query, run on a sample:

| State | Age | Metric | Weight |
|----|----|------|-----|
| CA | 20 | 1971 | 1/4 |
| CA | 22 | 2819 | 1/4 |
| MA | 22 | 3819 | 1/4 |
| MA | 30 | 3091 | 1/4 |

A resampling operator R sits between the sample and A. After sample "pushdown", R sees only:

| Metric | Weight |
|------|-----|
| 1971 | 1/4 |
| 3819 | 1/4 |

R emits resamples of these rows, and the query runs once per resample (A1, …, An):

| Resample 1 | Resample 2 | Resample 3 |
|------------|------------|------------|
| 1971 (1/4) | 1971 (1/4) | 3819 (1/4) |
| 3819 (1/4) | 1971 (1/4) | 3819 (1/4) |

Leverage Poissonized resampling to generate samples with replacement: for each row, sample a count from a Poisson(1) distribution.

| Metric | Weight | S1 |
|------|-----|---|
| 1971 | 1/4 | 2 |
| 3819 | 1/4 | 1 |
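The Poissonized resampling step can be sketched as follows. Each row draws an independent Poisson(1) count per resample, which says how many times that row appears in the resample, so all resamples are accumulated in one pass over the sample. The function names, the fixed seed, the Knuth-style Poisson sampler, and the use of the spread of per-resample averages as the error estimate are assumptions chosen for illustration, not BlinkDB's code.

```python
import math
import random


def poisson1(rng):
    """Draw one value from Poisson(1) using Knuth's multiplication method."""
    limit = math.exp(-1.0)
    k, p = 0, rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k


def poissonized_bootstrap_avg(values, num_resamples=100, seed=0):
    """Return (avg, bootstrap_std_error) using a single pass over `values`."""
    rng = random.Random(seed)
    sums = [0.0] * num_resamples
    counts = [0] * num_resamples
    for v in values:                      # single pass over the sample
        for b in range(num_resamples):    # one Poisson(1) count per resample
            c = poisson1(rng)
            sums[b] += c * v
            counts[b] += c
    # A resample can come out empty; skip it rather than divide by zero.
    estimates = [s / c for s, c in zip(sums, counts) if c > 0]
    if len(estimates) < 2:
        raise ValueError("too few non-empty resamples")
    center = sum(estimates) / len(estimates)
    variance = sum((e - center) ** 2 for e in estimates) / (len(estimates) - 1)
    return sum(values) / len(values), math.sqrt(variance)


# Metric column of the four-row example sample.
avg, err = poissonized_bootstrap_avg([1971, 2819, 3819, 3091])
print(avg, err)
```

Because the counts for different rows are independent, the inner loop needs no coordination between rows, which is what makes the scheme easy to run in parallel.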
Construct all resamples in a single pass: every row carries one count per resample, and the query runs once per resample (A1, …, Ak).

| Metric | Weight | S1 | … | Sk |
|------|-----|---|---|---|
| 1971 | 1/4 | 2 | … | 1 |
| 3819 | 1/4 | 1 | … | 0 |

Columns attached to each row of the sample:
 - S: SAMPLE
 - S1 … S100: BOOTSTRAP WEIGHTS
 - Da1 … Da100, Db1 … Db100, Dc1 … Dc100: DIAGNOSTICS WEIGHTS

Additional overhead: 200 bytes/row. Embarrassingly parallel.

[Figures: response time (s) for single pass execution, broken down into query execution, error estimation overhead, and diagnostics overhead.]

BlinkDB Architecture

[Diagram components: Command-line Shell; Thrift/JDBC; Driver; SQL Parser; Query Optimizer; Physical Plan; SerDes, UDFs; Execution; Meta store; Hadoop/Spark/Presto; Hadoop Storage (e.g., HDFS, HBase, Presto).]

Getting Started

 1. Alpha 0.1.1 released and available at http://blinkdb.org
 2. Allows you to create random and stratified samples on native tables and materialized views
 3. Adds approximate aggregate functions with statistical closed forms to HiveQL
 4. Compatible with Apache Hive, AMP Lab's Shark and Facebook's Presto (storage, serdes, UDFs, types, metadata)

Feature Roadmap

 1. Support a majority of Hive aggregates and user-defined functions
 2. Runtime correctness tests
 3. Integrating BlinkDB with Shark and Facebook's Presto as an experimental feature
 4. Automatic sample management
 5. Streaming support

Summary

 1. Approximate querying is an important means of achieving interactivity when processing large datasets
 2. BlinkDB:
   - returns approximate answers with error bars by executing queries on small samples of data
   - supports existing Hive/Shark/Presto queries
 3. For more information, please check out our EuroSys 2013 (http://bit.ly/blinkdb-1) and KDD 2014 (http://bit.ly/blinkdb-2) papers

Thanks!