lecture_06

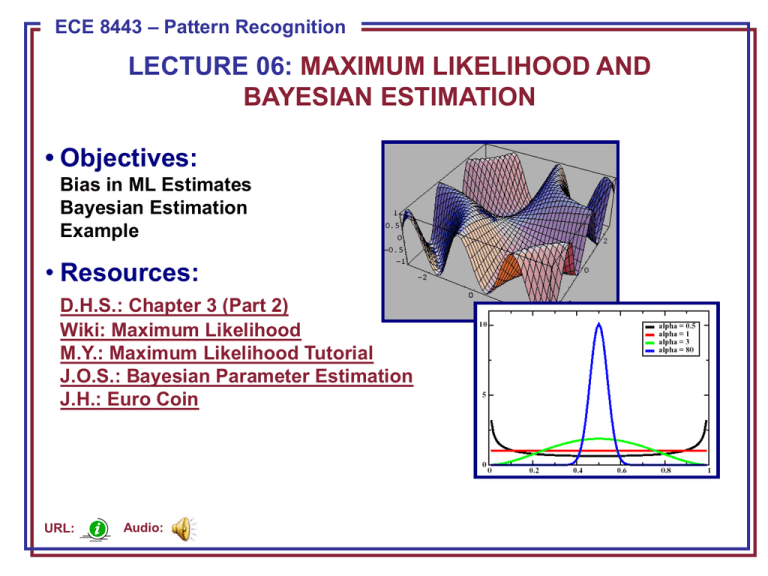

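ECE 8443 – Pattern Recognition
LECTURE 06: MAXIMUM LIKELIHOOD AND BAYESIAN ESTIMATION

• Objectives: Bias in ML Estimates; Bayesian Estimation; Example
• Resources: D.H.S.: Chapter 3 (Part 2); Wiki: Maximum Likelihood; M.Y.: Maximum Likelihood Tutorial; J.O.S.: Bayesian Parameter Estimation; J.H.: Euro Coin

Gaussian Case: Unknown Mean (Review)

• Consider the case where only the mean, $\boldsymbol{\theta} = \boldsymbol{\mu}$, is unknown:

$$\sum_{k=1}^{n} \nabla_{\boldsymbol{\mu}} \ln p(\mathbf{x}_k \mid \boldsymbol{\mu}) = 0$$

$$\ln p(\mathbf{x}_k \mid \boldsymbol{\mu}) = \ln\left[\frac{1}{(2\pi)^{d/2}\,|\boldsymbol{\Sigma}|^{1/2}} \exp\left[-\frac{1}{2}(\mathbf{x}_k - \boldsymbol{\mu})^t \boldsymbol{\Sigma}^{-1} (\mathbf{x}_k - \boldsymbol{\mu})\right]\right] = -\frac{1}{2}\ln\left[(2\pi)^d\,|\boldsymbol{\Sigma}|\right] - \frac{1}{2}(\mathbf{x}_k - \boldsymbol{\mu})^t \boldsymbol{\Sigma}^{-1} (\mathbf{x}_k - \boldsymbol{\mu})$$

which implies:

$$\nabla_{\boldsymbol{\mu}} \ln p(\mathbf{x}_k \mid \boldsymbol{\mu}) = \boldsymbol{\Sigma}^{-1}(\mathbf{x}_k - \boldsymbol{\mu})$$

because:

$$\nabla_{\boldsymbol{\mu}}\left[-\frac{1}{2}\ln\left[(2\pi)^d\,|\boldsymbol{\Sigma}|\right] - \frac{1}{2}(\mathbf{x}_k - \boldsymbol{\mu})^t \boldsymbol{\Sigma}^{-1} (\mathbf{x}_k - \boldsymbol{\mu})\right] = \nabla_{\boldsymbol{\mu}}\left[-\frac{1}{2}\ln\left[(2\pi)^d\,|\boldsymbol{\Sigma}|\right]\right] + \nabla_{\boldsymbol{\mu}}\left[-\frac{1}{2}(\mathbf{x}_k - \boldsymbol{\mu})^t \boldsymbol{\Sigma}^{-1} (\mathbf{x}_k - \boldsymbol{\mu})\right] = \boldsymbol{\Sigma}^{-1}(\mathbf{x}_k - \boldsymbol{\mu})$$

Gaussian Case: Unknown Mean (Review)

• Substituting into the expression for the total likelihood:

$$\nabla_{\boldsymbol{\mu}}\, l = \sum_{k=1}^{n} \nabla_{\boldsymbol{\mu}} \ln p(\mathbf{x}_k \mid \boldsymbol{\mu}) = \sum_{k=1}^{n} \boldsymbol{\Sigma}^{-1}(\mathbf{x}_k - \boldsymbol{\mu}) = 0$$

• Rearranging terms:

$$\sum_{k=1}^{n} \boldsymbol{\Sigma}^{-1}(\mathbf{x}_k - \hat{\boldsymbol{\mu}}) = 0$$

$$\sum_{k=1}^{n} (\mathbf{x}_k - \hat{\boldsymbol{\mu}}) = 0$$

$$\sum_{k=1}^{n} \mathbf{x}_k - \sum_{k=1}^{n} \hat{\boldsymbol{\mu}} = 0$$

$$\sum_{k=1}^{n} \mathbf{x}_k - n\hat{\boldsymbol{\mu}} = 0$$

$$\hat{\boldsymbol{\mu}} = \frac{1}{n}\sum_{k=1}^{n} \mathbf{x}_k$$

• Significance? The ML estimate of the mean is the sample mean, the arithmetic average of the training samples.

Gaussian Case: Unknown Mean and Variance (Review)

• Let $\boldsymbol{\theta} = [\theta_1, \theta_2]^t = [\mu, \sigma^2]^t$. The log likelihood of a SINGLE point is:

$$\ln p(x_k \mid \boldsymbol{\theta}) = -\frac{1}{2}\ln\left[2\pi\theta_2\right] - \frac{1}{2\theta_2}(x_k - \theta_1)^2$$

$$\nabla_{\boldsymbol{\theta}}\, l = \nabla_{\boldsymbol{\theta}} \ln p(x_k \mid \boldsymbol{\theta}) = \begin{bmatrix} \dfrac{1}{\theta_2}(x_k - \theta_1) \\[2ex] -\dfrac{1}{2\theta_2} + \dfrac{(x_k - \theta_1)^2}{2\theta_2^2} \end{bmatrix}$$

• The full likelihood leads to:

$$\sum_{k=1}^{n} \frac{1}{\hat{\theta}_2}(x_k - \hat{\theta}_1) = 0$$

$$-\sum_{k=1}^{n} \frac{1}{2\hat{\theta}_2} + \sum_{k=1}^{n} \frac{(x_k - \hat{\theta}_1)^2}{2\hat{\theta}_2^2} = 0 \quad\Rightarrow\quad \sum_{k=1}^{n} \hat{\theta}_2 = \sum_{k=1}^{n} (x_k - \hat{\theta}_1)^2$$

Gaussian Case: Unknown Mean and Variance (Review)

• This leads to these equations:

$$\hat{\theta}_1 = \hat{\mu} = \frac{1}{n}\sum_{k=1}^{n} x_k \qquad \hat{\theta}_2 = \hat{\sigma}^2 = \frac{1}{n}\sum_{k=1}^{n} (x_k - \hat{\mu})^2$$

• In the multivariate case:

$$\hat{\boldsymbol{\mu}} = \frac{1}{n}\sum_{k=1}^{n} \mathbf{x}_k \qquad \hat{\boldsymbol{\Sigma}} = \frac{1}{n}\sum_{k=1}^{n} (\mathbf{x}_k - \hat{\boldsymbol{\mu}})(\mathbf{x}_k - \hat{\boldsymbol{\mu}})^t$$

• The true covariance is the expected value of the matrix $(\mathbf{x} - \boldsymbol{\mu})(\mathbf{x} - \boldsymbol{\mu})^t$, so its ML estimate, the sample average of $(\mathbf{x}_k - \hat{\boldsymbol{\mu}})(\mathbf{x}_k - \hat{\boldsymbol{\mu}})^t$, is a familiar result.

Convergence of the Mean (Review)

• Does the maximum likelihood estimate of the variance converge to the true value of the variance? Let's start with a few simple results we will need later.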
• Expected value of the ML estimate of the mean:

$$E[\hat{\mu}] = E\left[\frac{1}{n}\sum_{i=1}^{n} x_i\right] = \frac{1}{n}\sum_{i=1}^{n} E[x_i] = \frac{1}{n}\sum_{i=1}^{n} \mu = \mu$$

• Variance of the ML estimate of the mean:

$$\mathrm{var}[\hat{\mu}] = E[\hat{\mu}^2] - (E[\hat{\mu}])^2 = E[\hat{\mu}^2] - \mu^2 = E\left[\left(\frac{1}{n}\sum_{i=1}^{n} x_i\right)\left(\frac{1}{n}\sum_{j=1}^{n} x_j\right)\right] - \mu^2 = \frac{1}{n^2}\sum_{i=1}^{n}\sum_{j=1}^{n} E[x_i x_j] - \mu^2$$

Variance of the ML Estimate of the Mean (Review)

• The expected value of $x_i x_j$ will be $\mu^2$ for $i \neq j$ since the two random variables are independent.
• The expected value of $x_i^2$ will be $\mu^2 + \sigma^2$.
• Hence, in the summation above, we have $n^2 - n$ terms with expected value $\mu^2$ and $n$ terms with expected value $\mu^2 + \sigma^2$.
• Thus,

$$\mathrm{var}[\hat{\mu}] = \frac{1}{n^2}\left[(n^2 - n)\mu^2 + n(\mu^2 + \sigma^2)\right] - \mu^2 = \frac{\sigma^2}{n}$$

which implies:

$$E[\hat{\mu}^2] = \mathrm{var}[\hat{\mu}] + (E[\hat{\mu}])^2 = \frac{\sigma^2}{n} + \mu^2$$

• We see that the variance of the estimate goes to zero as n goes to infinity, and our estimate converges to the true value of the mean (the error goes to zero).

Variance Relationships

• We will need one more result:

$$\sigma^2 = E[(x - \mu)^2] = E[x^2] - 2E[x]\mu + E[\mu^2] = E[x^2] - 2\mu^2 + \mu^2 = E[x^2] - \mu^2$$

Note that this implies:

$$E[x_i^2] = \mu^2 + \sigma^2$$

• Now we can combine these results.
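Recall our expression for the ML estimate of the variance, and take its expected value:

$$E[\hat{\sigma}^2] = E\left[\frac{1}{n}\sum_{i=1}^{n} (x_i - \hat{\mu})^2\right]$$

Covariance Expansion

• Expand the covariance and simplify:

$$E[\hat{\sigma}^2] = E\left[\frac{1}{n}\sum_{i=1}^{n} (x_i - \hat{\mu})^2\right] = E\left[\frac{1}{n}\sum_{i=1}^{n} (x_i^2 - 2x_i\hat{\mu} + \hat{\mu}^2)\right]$$

$$= \frac{1}{n}\sum_{i=1}^{n} \left(E[x_i^2] - 2E[x_i\hat{\mu}] + E[\hat{\mu}^2]\right)$$

$$= \frac{1}{n}\sum_{i=1}^{n} \left((\sigma^2 + \mu^2) - 2E[x_i\hat{\mu}] + (\mu^2 + \sigma^2/n)\right)$$

• One more intermediate term to derive:

$$E[x_i\hat{\mu}] = E\left[x_i \frac{1}{n}\sum_{j=1}^{n} x_j\right] = \frac{1}{n}\sum_{j=1}^{n} E[x_i x_j] = \frac{1}{n}\left(\sum_{j \neq i} E[x_i x_j] + E[x_i x_i]\right)$$

$$= \frac{1}{n}\left((n - 1)\mu^2 + (\mu^2 + \sigma^2)\right) = \frac{1}{n}\left(n\mu^2 + \sigma^2\right) = \mu^2 + \frac{\sigma^2}{n}$$

Biased Variance Estimate

• Substitute our previously derived expression for the second term:

$$E[\hat{\sigma}^2] = \frac{1}{n}\sum_{i=1}^{n} \left((\sigma^2 + \mu^2) - 2E[x_i\hat{\mu}] + (\mu^2 + \sigma^2/n)\right)$$

$$= \frac{1}{n}\sum_{i=1}^{n} \left((\sigma^2 + \mu^2) - 2(\mu^2 + \sigma^2/n) + (\mu^2 + \sigma^2/n)\right)$$

$$= \frac{1}{n}\sum_{i=1}^{n} \left(\sigma^2 + \mu^2 - 2\mu^2 - 2\sigma^2/n + \mu^2 + \sigma^2/n\right)$$

$$= \frac{1}{n}\sum_{i=1}^{n} \left(\sigma^2 - \sigma^2/n\right) = \frac{1}{n}\sum_{i=1}^{n} \sigma^2(1 - 1/n) = \frac{1}{n}\sum_{i=1}^{n} \sigma^2\frac{(n - 1)}{n}$$

$$= \frac{(n - 1)}{n}\sigma^2$$

Expectation Simplification

• Therefore, the ML estimate is biased:

$$E[\hat{\sigma}^2] = E\left[\frac{1}{n}\sum_{i=1}^{n} (x_i - \hat{\mu})^2\right] = \frac{n - 1}{n}\sigma^2 \neq \sigma^2$$

However, the ML estimate converges (in the mean-squared error, MSE, sense).
• An unbiased estimator is:

$$\mathbf{C} = \frac{1}{n - 1}\sum_{i=1}^{n} (\mathbf{x}_i - \hat{\boldsymbol{\mu}})(\mathbf{x}_i - \hat{\boldsymbol{\mu}})^t$$

• These are related by:

$$\hat{\boldsymbol{\Sigma}} = \frac{(n - 1)}{n}\mathbf{C}$$

which is asymptotically unbiased. See Burl, AJWills and AWM for excellent examples and explanations of the details of this derivation.

Introduction to Bayesian Parameter Estimation

• In Chapter 2, we learned how to design an optimal classifier if we knew the prior probabilities, $P(\omega_i)$, and class-conditional densities, $p(\mathbf{x} \mid \omega_i)$.
• Bayes: treat the parameters as random variables having some known prior distribution. Observation of samples converts this to a posterior.
• Bayesian learning: sharpen the a posteriori density, causing it to peak near the true value.
• Supervised vs. unsupervised: do we know the class assignments of the training data?
• Bayesian estimation and ML estimation produce very similar results in many cases.
• Reduces statistical inference (prior knowledge or beliefs about the world) to probabilities.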
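Returning briefly to the bias result from the ML review, $E[\hat{\sigma}^2] = \frac{n-1}{n}\sigma^2$, a small Monte Carlo experiment offers a quick sanity check. The sketch below is an illustration and not part of the original slides: it assumes NumPy, and the function name `simulate_variance_estimates`, the sample size, the true mean and variance, the trial count, and the seed are all arbitrary choices made for the demonstration.

```python
import numpy as np

def simulate_variance_estimates(n, sigma2=4.0, mu=1.0, trials=200_000, seed=0):
    """Average the ML (1/n) and unbiased (1/(n-1)) variance estimates over many trials."""
    rng = np.random.default_rng(seed)
    # Each row is one training set of n i.i.d. Gaussian samples (assumed setup).
    x = rng.normal(loc=mu, scale=np.sqrt(sigma2), size=(trials, n))
    mu_hat = x.mean(axis=1, keepdims=True)   # ML estimate of the mean, per trial
    ss = ((x - mu_hat) ** 2).sum(axis=1)     # sum of squared deviations, per trial
    ml_mean = (ss / n).mean()                # approximates E[sigma_hat^2]
    unbiased_mean = (ss / (n - 1)).mean()    # approximates E[C] in the scalar case
    return ml_mean, unbiased_mean

if __name__ == "__main__":
    n, sigma2 = 5, 4.0
    ml_mean, unbiased_mean = simulate_variance_estimates(n, sigma2)
    print("theory (n-1)/n * sigma^2: ", (n - 1) / n * sigma2)
    print("average ML estimate:      ", ml_mean)
    print("average unbiased estimate:", unbiased_mean)
```

With a small n the gap between the two averages is easy to see; increasing n should shrink it, consistent with the ML estimate being asymptotically unbiased.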
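
Class-Conditional Densities

• Posterior probabilities, $P(\omega_i \mid \mathbf{x})$, are central to Bayesian classification.
• Bayes formula allows us to compute $P(\omega_i \mid \mathbf{x})$ from the priors, $P(\omega_i)$, and the likelihood, $p(\mathbf{x} \mid \omega_i)$.
• But what if the priors and class-conditional densities are unknown?
• The answer is that we can compute the posterior, $P(\omega_i \mid \mathbf{x})$, using all of the information at our disposal (e.g., training data).
• For a training set, D, Bayes formula becomes:

$$P(\omega_i \mid \mathbf{x}, D) = \frac{\text{likelihood} \times \text{prior}}{\text{evidence}} = \frac{p(\mathbf{x} \mid \omega_i, D)\,P(\omega_i \mid D)}{\sum_{j=1}^{c} p(\mathbf{x} \mid \omega_j, D)\,P(\omega_j \mid D)}$$

• We assume priors are known: $P(\omega_i \mid D) = P(\omega_i)$.
• Also, assume functional independence: $D_i$ have no influence on $p(\mathbf{x} \mid \omega_j, D)$ if $i \neq j$. This gives:

$$P(\omega_i \mid \mathbf{x}, D) = \frac{p(\mathbf{x} \mid \omega_i, D_i)\,P(\omega_i)}{\sum_{j=1}^{c} p(\mathbf{x} \mid \omega_j, D_j)\,P(\omega_j)}$$

The Parameter Distribution

• Assume the parametric form of the evidence, $p(\mathbf{x})$, is known: $p(\mathbf{x} \mid \boldsymbol{\theta})$.
• Any information we have about $\boldsymbol{\theta}$ prior to collecting samples is contained in a known prior density $p(\boldsymbol{\theta})$.
• Observation of samples converts this to a posterior, $p(\boldsymbol{\theta} \mid D)$, which we hope is peaked around the true value of $\boldsymbol{\theta}$.
• Our goal is to compute the density $p(\mathbf{x} \mid D)$, which we obtain by integrating over the parameter vector $\boldsymbol{\theta}$:

$$p(\mathbf{x} \mid D) = \int p(\mathbf{x}, \boldsymbol{\theta} \mid D)\,d\boldsymbol{\theta}$$

• We can write the joint distribution as a product:

$$p(\mathbf{x} \mid D) = \int p(\mathbf{x} \mid \boldsymbol{\theta}, D)\,p(\boldsymbol{\theta} \mid D)\,d\boldsymbol{\theta} = \int p(\mathbf{x} \mid \boldsymbol{\theta})\,p(\boldsymbol{\theta} \mid D)\,d\boldsymbol{\theta}$$

because the samples are drawn independently.
• This equation links the class-conditional density $p(\mathbf{x} \mid D)$ to the posterior, $p(\boldsymbol{\theta} \mid D)$. But numerical solutions are typically required!

Univariate Gaussian Case

• Case: only mean unknown: $p(x \mid \mu) \sim N(\mu, \sigma^2)$
• Known prior density: $p(\mu) \sim N(\mu_0, \sigma_0^2)$
• Using Bayes formula:

$$p(\mu \mid D) = \frac{p(D \mid \mu)\,p(\mu)}{p(D)} = \frac{p(D \mid \mu)\,p(\mu)}{\int p(D \mid \mu)\,p(\mu)\,d\mu} = \alpha\left[p(D \mid \mu)\,p(\mu)\right] = \alpha \prod_{k=1}^{n} p(x_k \mid \mu)\,p(\mu)$$

• Rationale: Once a value of $\mu$ is known, the density for x is completely known. $\alpha$ is a normalization factor that depends on the data, D.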
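Since these integrals typically call for numerical solutions, here is a minimal sketch of one way to evaluate them on a grid for the univariate case with unknown mean. It is an illustration under stated assumptions, not the method used in the lecture: NumPy is assumed, and the function names `gaussian_pdf`, `grid_posterior`, and `predictive_density`, the grid limits, and the example data are all hypothetical.

```python
import numpy as np

def gaussian_pdf(x, mean, var):
    """Univariate normal density N(mean, var) evaluated at x."""
    return np.exp(-0.5 * (x - mean) ** 2 / var) / np.sqrt(2.0 * np.pi * var)

def grid_posterior(data, sigma2, mu0, sigma0_2, grid):
    """Evaluate p(mu | D) = alpha * prod_k p(x_k | mu) * p(mu) on a uniform grid of mu values."""
    data = np.asarray(data, dtype=float)
    # Work in the log domain so the product over samples does not underflow.
    # Constant factors are dropped here; they are absorbed into alpha below.
    log_like = np.sum(-0.5 * (data[:, None] - grid[None, :]) ** 2 / sigma2, axis=0)
    log_prior = -0.5 * (grid - mu0) ** 2 / sigma0_2
    log_post = log_like + log_prior
    unnorm = np.exp(log_post - np.max(log_post))
    d_mu = grid[1] - grid[0]
    # alpha is chosen so that the grid density integrates (Riemann sum) to 1.
    return unnorm / (unnorm.sum() * d_mu)

def predictive_density(x, sigma2, grid, posterior):
    """Approximate p(x | D) = integral of p(x | mu) p(mu | D) d mu with a Riemann sum."""
    d_mu = grid[1] - grid[0]
    return np.sum(gaussian_pdf(x, grid, sigma2) * posterior) * d_mu

if __name__ == "__main__":
    grid = np.linspace(-10.0, 10.0, 4001)   # assumed range wide enough to cover the posterior
    data = [1.2, 0.7, 1.9, 1.1]             # made-up observations
    post = grid_posterior(data, sigma2=1.0, mu0=0.0, sigma0_2=4.0, grid=grid)
    print("posterior integrates to:", post.sum() * (grid[1] - grid[0]))
    print("p(x=1.0 | D) is roughly:", predictive_density(1.0, 1.0, grid, post))
```

A grid is workable here only because the parameter is a scalar; for higher-dimensional parameter vectors the same integral usually needs sampling or an analytic shortcut.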
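
Univariate Gaussian Case

• Applying our Gaussian assumptions:

$$p(\mu \mid D) = \alpha \prod_{k=1}^{n} p(x_k \mid \mu)\,p(\mu) = \alpha \prod_{k=1}^{n} \left[\frac{1}{\sqrt{2\pi}\,\sigma}\exp\left[-\frac{1}{2}\left(\frac{x_k - \mu}{\sigma}\right)^2\right]\right] \frac{1}{\sqrt{2\pi}\,\sigma_0}\exp\left[-\frac{1}{2}\left(\frac{\mu - \mu_0}{\sigma_0}\right)^2\right]$$

$$= \alpha' \exp\left[-\frac{1}{2}\left(\left(\frac{\mu - \mu_0}{\sigma_0}\right)^2 + \sum_{k=1}^{n}\left(\frac{x_k - \mu}{\sigma}\right)^2\right)\right]$$

$$= \alpha' \exp\left[-\frac{1}{2}\left(\frac{\mu^2 - 2\mu\mu_0 + \mu_0^2}{\sigma_0^2} + \sum_{k=1}^{n}\frac{x_k^2 - 2x_k\mu + \mu^2}{\sigma^2}\right)\right]$$

$$= \alpha' \exp\left[-\frac{1}{2}\left(\frac{\mu_0^2}{\sigma_0^2} + \sum_{k=1}^{n}\frac{x_k^2}{\sigma^2}\right)\right] \exp\left[-\frac{1}{2}\left(\frac{\mu^2 - 2\mu\mu_0}{\sigma_0^2} + \sum_{k=1}^{n}\frac{\mu^2 - 2x_k\mu}{\sigma^2}\right)\right]$$

$$= \alpha'' \exp\left[-\frac{1}{2}\left(\frac{\mu^2 - 2\mu\mu_0}{\sigma_0^2} + \sum_{k=1}^{n}\frac{\mu^2 - 2x_k\mu}{\sigma^2}\right)\right]$$

Univariate Gaussian Case (Cont.)

• Now we need to work this into a simpler form:

$$p(\mu \mid D) = \alpha'' \exp\left[-\frac{1}{2}\left(\frac{\mu^2 - 2\mu\mu_0}{\sigma_0^2} + \sum_{k=1}^{n}\frac{\mu^2 - 2x_k\mu}{\sigma^2}\right)\right]$$

$$= \alpha'' \exp\left[-\frac{1}{2}\left(\sum_{k=1}^{n}\frac{\mu^2}{\sigma^2} - 2\sum_{k=1}^{n}\frac{x_k\mu}{\sigma^2} + \frac{\mu^2}{\sigma_0^2} - \frac{2\mu\mu_0}{\sigma_0^2}\right)\right]$$

$$= \alpha'' \exp\left[-\frac{1}{2}\left(\left(\frac{n}{\sigma^2} + \frac{1}{\sigma_0^2}\right)\mu^2 - 2\left(\frac{1}{\sigma^2}\sum_{k=1}^{n} x_k + \frac{\mu_0}{\sigma_0^2}\right)\mu\right)\right]$$

$$= \alpha'' \exp\left[-\frac{1}{2}\left(\left(\frac{n}{\sigma^2} + \frac{1}{\sigma_0^2}\right)\mu^2 - 2\left(\frac{1}{\sigma^2}(n\hat{\mu}_n) + \frac{\mu_0}{\sigma_0^2}\right)\mu\right)\right]$$

where $\hat{\mu}_n = \frac{1}{n}\sum_{k=1}^{n} x_k$.

Univariate Gaussian Case (Cont.)

• $p(\mu \mid D)$ is an exponential of a quadratic function of $\mu$, which makes it a normal distribution. Because this is true for any n, it is referred to as a reproducing density.
• $p(\mu)$ is referred to as a conjugate prior.
• Write $p(\mu \mid D) \sim N(\mu_n, \sigma_n^2)$:

$$p(\mu \mid D) = \frac{1}{\sqrt{2\pi}\,\sigma_n}\exp\left[-\frac{1}{2}\left(\frac{\mu - \mu_n}{\sigma_n}\right)^2\right]$$

• Expand the quadratic term:

$$p(\mu \mid D) = \frac{1}{\sqrt{2\pi}\,\sigma_n}\exp\left[-\frac{1}{2}\left(\frac{\mu - \mu_n}{\sigma_n}\right)^2\right] = \frac{1}{\sqrt{2\pi}\,\sigma_n}\exp\left[-\frac{1}{2}\left(\frac{\mu^2 - 2\mu\mu_n + \mu_n^2}{\sigma_n^2}\right)\right]$$

• Equate coefficients of our two functions:

$$\frac{1}{\sqrt{2\pi}\,\sigma_n}\exp\left[-\frac{1}{2}\left(\frac{\mu^2 - 2\mu\mu_n + \mu_n^2}{\sigma_n^2}\right)\right] = \alpha'' \exp\left[-\frac{1}{2}\left(\left(\frac{n}{\sigma^2} + \frac{1}{\sigma_0^2}\right)\mu^2 - 2\left(\frac{1}{\sigma^2}(n\hat{\mu}_n) + \frac{\mu_0}{\sigma_0^2}\right)\mu\right)\right]$$

Univariate Gaussian Case (Cont.)

• Rearrange terms so that the dependencies on $\mu$ are clear:

$$\frac{1}{\sqrt{2\pi}\,\sigma_n}\exp\left[-\frac{1}{2}\frac{\mu_n^2}{\sigma_n^2}\right]\exp\left[-\frac{1}{2}\left(\frac{1}{\sigma_n^2}\mu^2 - 2\frac{\mu_n}{\sigma_n^2}\mu\right)\right] = \alpha'' \exp\left[-\frac{1}{2}\left(\left(\frac{n}{\sigma^2} + \frac{1}{\sigma_0^2}\right)\mu^2 - 2\left(\frac{1}{\sigma^2}(n\hat{\mu}_n) + \frac{\mu_0}{\sigma_0^2}\right)\mu\right)\right]$$

• Associate terms related to $\mu^2$ and $\mu$:

$$\mu^2: \quad \frac{1}{\sigma_n^2} = \frac{n}{\sigma^2} + \frac{1}{\sigma_0^2}$$

$$\mu: \quad \frac{\mu_n}{\sigma_n^2} = \frac{n}{\sigma^2}\hat{\mu}_n + \frac{\mu_0}{\sigma_0^2}$$

• There is actually a third equation involving terms not related to $\mu$:

$$\frac{1}{\sqrt{2\pi}\,\sigma_n}\exp\left[-\frac{1}{2}\frac{\mu_n^2}{\sigma_n^2}\right] = \alpha''$$

but we can ignore this since it is not a function of $\mu$ and is a complicated equation to solve.

Univariate Gaussian Case (Cont.)

• Two equations and two unknowns. Solve for $\mu_n$ and $\sigma_n^2$.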
First, solve for $\sigma_n^2$:

$$\sigma_n^2 = \frac{1}{\dfrac{n}{\sigma^2} + \dfrac{1}{\sigma_0^2}} = \frac{\sigma_0^2\sigma^2}{n\sigma_0^2 + \sigma^2}$$

• Next, solve for $\mu_n$:

$$\mu_n = \sigma_n^2\left(\frac{n}{\sigma^2}\hat{\mu}_n + \frac{\mu_0}{\sigma_0^2}\right) = \frac{n\sigma_n^2}{\sigma^2}\hat{\mu}_n + \frac{\sigma_n^2}{\sigma_0^2}\mu_0$$

$$= \frac{n}{\sigma^2}\left(\frac{\sigma_0^2\sigma^2}{n\sigma_0^2 + \sigma^2}\right)\hat{\mu}_n + \frac{1}{\sigma_0^2}\left(\frac{\sigma_0^2\sigma^2}{n\sigma_0^2 + \sigma^2}\right)\mu_0$$

$$= \left(\frac{n\sigma_0^2}{n\sigma_0^2 + \sigma^2}\right)\hat{\mu}_n + \left(\frac{\sigma^2}{n\sigma_0^2 + \sigma^2}\right)\mu_0$$

• Summarizing:

$$\mu_n = \left(\frac{n\sigma_0^2}{n\sigma_0^2 + \sigma^2}\right)\hat{\mu}_n + \left(\frac{\sigma^2}{n\sigma_0^2 + \sigma^2}\right)\mu_0 \qquad \sigma_n^2 = \frac{\sigma_0^2\sigma^2}{n\sigma_0^2 + \sigma^2}$$

Bayesian Learning

• $\mu_n$ represents our best guess after n samples.
• $\sigma_n^2$ represents our uncertainty about this guess.
• $\sigma_n^2$ approaches $\sigma^2/n$ for large n, so each additional observation decreases our uncertainty.
• The posterior, $p(\mu \mid D)$, becomes more sharply peaked as n grows large. This is known as Bayesian learning.

"The Euro Coin"

• Getting ahead a bit, let's see how we can put these ideas to work on a simple example due to David MacKay, and explained by Jon Hamaker.

Summary

• Review of maximum likelihood parameter estimation in the Gaussian case, with an emphasis on convergence and bias of the estimates.
• Introduction of Bayesian parameter estimation.
• The role of the class-conditional distribution in a Bayesian estimate.
• Estimation of the posterior and probability density function assuming the only unknown parameter is the mean, and the conditional density of the "features" given the mean, $p(x \mid \mu)$, can be modeled as a Gaussian distribution.
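The closed-form posterior parameters $\mu_n$ and $\sigma_n^2$ from the univariate Gaussian case translate directly into a few lines of code. The following is a minimal sketch, assuming NumPy; the function name `posterior_mean_var` and the synthetic data (true mean, seed, sample sizes) are hypothetical choices made only for illustration.

```python
import numpy as np

def posterior_mean_var(data, sigma2, mu0, sigma0_2):
    """Return (mu_n, sigma_n^2) of p(mu | D) for known variance sigma2 and prior N(mu0, sigma0_2)."""
    data = np.asarray(data, dtype=float)
    n = data.size
    # Sample mean; with no data its weight below is zero, so any finite placeholder works.
    mu_hat = data.mean() if n > 0 else 0.0
    denom = n * sigma0_2 + sigma2
    mu_n = (n * sigma0_2 / denom) * mu_hat + (sigma2 / denom) * mu0
    sigma_n2 = sigma0_2 * sigma2 / denom
    return mu_n, sigma_n2

if __name__ == "__main__":
    rng = np.random.default_rng(1)               # arbitrary seed
    sigma2, mu0, sigma0_2 = 1.0, 0.0, 4.0        # assumed known variance and prior
    samples = rng.normal(loc=2.0, scale=np.sqrt(sigma2), size=1000)
    for n in (0, 1, 10, 100, 1000):
        mu_n, sigma_n2 = posterior_mean_var(samples[:n], sigma2, mu0, sigma0_2)
        print(f"n={n:4d}  mu_n={mu_n:.4f}  sigma_n^2={sigma_n2:.5f}")
```

With no data the function returns the prior parameters, and as n grows the posterior mean moves from $\mu_0$ toward the sample mean while $\sigma_n^2$ shrinks toward $\sigma^2/n$, which is the Bayesian learning behaviour described in the slides.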