006_arizona_count_categorical


Moving further → Categorical count data

- Word counts
- Speech error counts
- Metaphor counts
- Active construction counts

Hissing Koreans

Winter & Grawunder (2012)

No. of Cases

Bentz & Winter (2013)

[Figure: No. of Speech Errors by Alcohol Level, shown in three panels; the last panel adds the Poisson Model fit]

The Poisson Distribution

Siméon Poisson

1898: Ladislaus Bortkiewicz

- Army Corps with few Horses: few deaths, low variability
- Army Corps with lots of Horses: many deaths, high variability
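The link between the mean and the variability of a count can be checked by simulation. The sketch below is only an illustration: the rates 2 and 20 and the variable names are assumptions chosen for the example, not values from the horse-kick data.

```r
# Simulated counts under two hypothetical mean rates (not the original data)
set.seed(1898)
few_horses  <- rpois(100000, lambda = 2)   # low mean rate
many_horses <- rpois(100000, lambda = 20)  # high mean rate

# In a Poisson distribution the variance equals the mean,
# so a higher mean rate comes with higher variability
summary_few  <- c(mean = mean(few_horses),  variance = var(few_horses))
summary_many <- c(mean = mean(many_horses), variance = var(many_horses))

print(summary_few)
print(summary_many)
```

Both the mean and the variance of each sample should land close to the rate used to generate it.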

Poisson Regression

= generalized linear model

with Poisson error structure

and log link function

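The Poisson Model

$\log(\lambda) = b_0 + b_1 X_1 + b_2 X_2$, with $Y \sim \mathrm{Poisson}(\lambda)$

Equivalently, $\lambda = \exp(b_0 + b_1 X_1 + b_2 X_2)$: the log link applies to the mean rate $\lambda$, not to the linear predictor.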

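In R (lme4; generalized mixed models are fit with glmer(), since passing family to lmer() is deprecated):

glmer(my_counts ~ my_predictors + (1|subject), data = mydataset, family = "poisson")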

Poisson model output

log values → exponentiate ($e^x$) → predicted mean rate
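A minimal sketch of this back-transformation, assuming simulated data: the variables `alcohol` and `errors` and the coefficients used to generate them are made up for illustration, and a plain `glm()` without random effects stands in for the mixed model.

```r
# Simulated data (hypothetical): speech errors as a function of alcohol level
set.seed(42)
alcohol <- runif(200, min = 0, max = 1)
true_b0 <- 0.5   # intercept on the log scale (assumed for the simulation)
true_b1 <- 2.0   # slope on the log scale (assumed for the simulation)
errors  <- rpois(200, lambda = exp(true_b0 + true_b1 * alcohol))

# Poisson regression: Poisson error structure, log link
fit <- glm(errors ~ alcohol, family = "poisson")

# The coefficients are reported on the log scale
log_coefs <- coef(fit)

# Predicted mean rate at alcohol = 0.5: exponentiate the linear predictor
log_rate  <- log_coefs[["(Intercept)"]] + log_coefs[["alcohol"]] * 0.5
mean_rate <- exp(log_rate)

# The same value obtained from predict() on the response scale
mean_rate_check <- predict(fit, newdata = data.frame(alcohol = 0.5),
                           type = "response")
```

Exponentiating by hand and asking `predict()` for `type = "response"` should give the same predicted mean rate.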

[Figure: No. of Speech Errors by Alcohol Level, with the Poisson Model fit]

Moving further → Binary categorical data

- Focus vs. no-focus
- Yes vs. No
- Dative vs. genitive
- Correct vs. incorrect

Case yes vs. no ~ Percent L2 speakers

Bentz & Winter (2013)

[Figure: Accuracy (0 or 1) by Noise level (dB), shown in four panels]

Logistic Regression

= generalized linear model

with binomial error structure

and logit link function

The Logistic Model

$p(Y) = \mathrm{logit}^{-1}(b_0 + b_1 X_1 + b_2 X_2)$

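In R (lme4; generalized mixed models are fit with glmer(), since passing family to lmer() is deprecated):

glmer(binary_variable ~ my_predictors + (1|subject), data = mydataset, family = "binomial")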

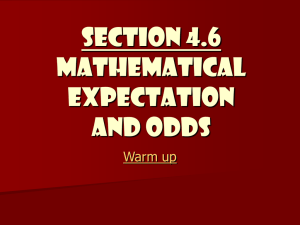

Probabilities and Odds

Probability of an Event: $p(x)$

Odds of an Event: $\frac{p(x)}{1 - p(x)}$

Intuition about Odds

What are the odds that I pick a blue marble? (N = 12)

Answer: 2/10
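The arithmetic behind this answer can be spelled out as follows. The counts are an inference, not stated on the slide: with N = 12 and an answer of 2/10, the picture presumably showed 2 blue marbles and 10 others.

```r
# Counts inferred from N = 12 and the answer 2/10 (assumption)
n_blue  <- 2
n_other <- 10

# Odds compare the event against its complement
odds_blue <- n_blue / n_other

# Probability compares the event against all outcomes
prob_blue <- n_blue / (n_blue + n_other)

# Converting the probability to odds gives the same number
odds_from_prob <- prob_blue / (1 - prob_blue)
```

The odds (2/10) are larger than the probability (2/12) because the denominator leaves out the blue marbles.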

Log odds

$\log\left(\frac{p(x)}{1 - p(x)}\right)$ = logit function

Representative values

| Probability | Odds | Log odds (= “logits”) |
|---|---|---|
| 0.1 | 0.111 | -2.197 |
| 0.2 | 0.25 | -1.386 |
| 0.3 | 0.428 | -0.847 |
| 0.4 | 0.667 | -0.405 |
| 0.5 | 1 | 0 |
| 0.6 | 1.5 | 0.405 |
| 0.7 | 2.33 | 0.847 |
| 0.8 | 4 | 1.386 |
| 0.9 | 9 | 2.197 |

Snijders & Bosker (1999: 212)
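These values can be recomputed directly from the definitions of odds and log odds. The sketch below uses illustrative variable names (`p`, `odds`, `log_odds`, `values`).

```r
# Probabilities from 0.1 to 0.9
p <- seq(0.1, 0.9, by = 0.1)

# Odds: probability of the event over probability of its complement
odds <- p / (1 - p)

# Log odds (logits): natural log of the odds
log_odds <- log(odds)

values <- data.frame(probability = p,
                     odds        = round(odds, 3),
                     log_odds    = round(log_odds, 3))
print(values)
```

Note the symmetry around a probability of 0.5: the log odds for 0.1 and 0.9 differ only in sign. Rounding gives 0.429 for the odds at a probability of 0.3, where the table lists 0.428.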

Bentz & Winter (2013)

Case yes vs. no ~ Percent L2 speakers

| | Estimate | Std. Error | z value | Pr(>\|z\|) |
|---|---|---|---|---|
| (Intercept) | 1.4576 | 0.6831 | 2.134 | 0.03286 |
| Percent.L2 | -6.5728 | 2.0335 | -3.232 | 0.00123 |

(Intercept) estimate: log odds when Percent.L2 = 0

Percent.L2 estimate (-6.5728): for each increase in Percent.L2 by one unit, how much the log odds decrease (= the slope). The worked prediction for this model plugs in 0.3 for Percent.L2, so the predictor appears to be coded as a proportion rather than in percentage points.

Logits or “log odds” → exponentiate → Odds

Logits or “log odds” → transform by inverse logit → Probabilities


exp(-6.5728) = 0.001397878 (odds scale: the factor by which the odds are multiplied for each one-unit increase in Percent.L2)

Odds

$\frac{p(x)}{1 - p(x)} > 1$: numerator more likely = event happens more often than not

$\frac{p(x)}{1 - p(x)} < 1$: denominator more likely = event is more likely not to happen


Logits or “log odds” of the Bentz & Winter (2013) model, transformed by the inverse logit:

logit.inv(1.4576) = 0.81

About 80% (makes sense)

logit.inv(1.4576 + -6.5728*0.3) = 0.37
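The same transformations can be applied to several predictor values at once. This is a sketch built only from the two reported estimates; the chosen Percent.L2 values (0, 0.1, 0.3, 0.5) are illustrative, and treating Percent.L2 as a proportion is an assumption based on the 0.3 used in the prediction above.

```r
# Inverse logit, as defined in this deck
logit.inv <- function(x) exp(x) / (1 + exp(x))

# Reported estimates (Bentz & Winter 2013 output shown in this deck)
b0 <- 1.4576    # intercept, in log odds
b1 <- -6.5728   # slope for Percent.L2, in log odds

# Illustrative predictor values (assumed to be proportions)
percent_l2 <- c(0, 0.1, 0.3, 0.5)

# Linear predictor in log odds, then the two back-transformations
log_odds <- b0 + b1 * percent_l2
odds     <- exp(log_odds)
prob     <- logit.inv(log_odds)

predictions <- round(data.frame(percent_l2, log_odds, odds, prob), 3)
print(predictions)
```

The first and third rows correspond to the two probabilities given above (about 0.81 and 0.37).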

$\log\left(\frac{p(x)}{1 - p(x)}\right)$ = logit function

$\mathrm{logit}^{-1}(x) = \frac{e^x}{1 + e^x}$ = inverse logit function

This is the famous “logistic function”

Inverse logit function

logit.inv = function(x){exp(x)/(1+exp(x))}

(this defines the

function in R)

(transforms back to

probabilities)
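As a cross-check, base R already ships these two functions in the stats package: `plogis()` is the inverse logit and `qlogis()` is the logit. The sketch below compares them with the hand-written version; the helper `logit` and the test values in `x` are added here for illustration.

```r
# Hand-written versions
logit.inv <- function(x) { exp(x) / (1 + exp(x)) }
logit     <- function(p) { log(p / (1 - p)) }

# Some log odds to transform (illustrative values)
x <- c(-2.197, 0, 1.4576)

p_manual  <- logit.inv(x)  # hand-written inverse logit
p_builtin <- plogis(x)     # built-in inverse logit

# Going back from probabilities to log odds recovers the input
round_trip <- logit(p_manual)

# For very large inputs exp(x) overflows to Inf, so the hand-written
# version returns NaN while plogis() returns 1
overflow_manual  <- logit.inv(1000)
overflow_builtin <- plogis(1000)
```

For the range of values that occurs in typical model output the two versions agree; the built-in is the safer choice for extreme inputs.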

General Linear Model

Generalized Linear Model

Generalized Linear Mixed Model

Generalized Linear Model

= “Generalizing” the General Linear Model to cases that don’t include continuous response variables (in particular categorical ones)

= Consists of two things: (1) an error distribution, (2) a link function

(1) Error distribution

- Logistic regression: Binomial distribution
- Poisson regression: Poisson distribution

(2) Link function

- Logistic regression: Logit link function
- Poisson regression: Log link function

In R:

lm(response ~ predictor)

glm(response ~ predictor, family="binomial")

glm(response ~ predictor, family="poisson")
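A runnable sketch of the three calls, assuming simulated data: the variable names and the coefficients used to generate the responses are invented for the example.

```r
# Simulated data (hypothetical): one predictor, three kinds of response
set.seed(1)
n <- 300
predictor <- rnorm(n)

continuous_response <- 1 + 0.5 * predictor + rnorm(n)
binary_response     <- rbinom(n, size = 1,
                              prob = plogis(-0.5 + 1.2 * predictor))
count_response      <- rpois(n, lambda = exp(0.3 + 0.6 * predictor))

# General linear model
fit_linear <- lm(continuous_response ~ predictor)

# Generalized linear models: same formula syntax, plus a family
fit_logistic <- glm(binary_response ~ predictor, family = "binomial")
fit_poisson  <- glm(count_response ~ predictor, family = "poisson")

# Each family comes with a default link function
link_logistic <- family(fit_logistic)$link  # "logit"
link_poisson  <- family(fit_poisson)$link   # "log"
```

Only the `family` argument changes between the two `glm()` calls; the error distribution and its default link function follow from it.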

Categorical Data

- Dichotomous/Binary → Logistic Regression
- Count → Poisson Regression

General structure

Linear Model: continuous ~ any type of variable

Logistic Regression: dichotomous ~ any type of variable

Poisson Regression: count ~ any type of variable

For the generalized linear mixed model…

… you only have to specify the family (and, in current versions of lme4, call glmer() instead of lmer()).

lmer(…)

glmer(…, family="poisson")

glmer(…, family="binomial")

That’s it

(for now)