Presenter 03 - Correlation

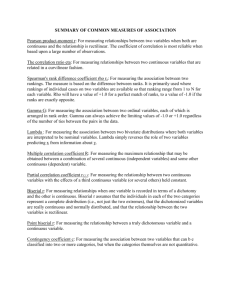

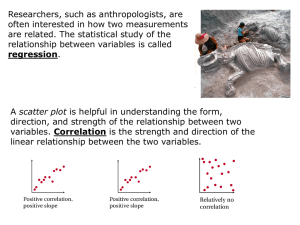

2013/12/10

Kendall's tau is another nonparametric correlation coefficient.

Let $x_1, \dots, x_n$ be a sample for random variable x and let $y_1, \dots, y_n$ be a sample for random variable y of the same size n. There are C(n, 2) possible ways of selecting distinct pairs $(x_i, y_i)$ and $(x_j, y_j)$. Each such selection is classified as concordant, discordant or neither, as follows:

- concordant if ($x_i > x_j$ and $y_i > y_j$) or ($x_i < x_j$ and $y_i < y_j$)
- discordant if ($x_i > x_j$ and $y_i < y_j$) or ($x_i < x_j$ and $y_i > y_j$)
- neither if $x_i = x_j$ or $y_i = y_j$ (i.e. ties are not counted)

Now let C = the number of concordant pairs and D = the number of discordant pairs. Then define tau as

$$\tau = \frac{C - D}{C(n, 2)}$$

To calculate C - D easily, it is best to first put all the x data elements in ascending order. If x and y are perfectly correlated, then all the values of y would be in ascending order too. Otherwise, there will be some inversions. For each i, count the number of j > i for which $y_j < y_i$. The sum of these counts is D. If there are no ties, then C = C(n, 2) - D.

The value of τ satisfies

$$-1 \le \tau \le 1$$

This is a result of the fact that there are C(n, 2) pairings in total, so neither C nor D can exceed C(n, 2).

If there are a large number of ties, then C(n, 2) should be replaced by

$$\sqrt{\left[C(n, 2) - n_x\right]\left[C(n, 2) - n_y\right]}$$

where $n_x$ is the number of ties involving x and $n_y$ is the number of ties involving y. The calculation of $n_y$ is similar to that of D given above: for each i, count the number of j > i for which $y_i = y_j$. The sum of these counts is $n_y$. Calculating $n_x$ is similar, although easier since the $x_i$ are in ascending order. Once D, $n_x$ and $n_y$ are determined, C = C(n, 2) - D - $n_x$ - $n_y$. This works well provided there are no values of i and j for which both $x_i = x_j$ and $y_i = y_j$ (such a pair would be counted in both $n_x$ and $n_y$).

There is a commonly accepted measure of standard error for Kendall's tau, namely

$$s_\tau = \frac{1}{3}\sqrt{\frac{2n + 5}{C(n, 2)}}$$

For sufficiently large n (generally n ≥ 10), the following statistic has approximately a standard normal distribution and so can be used for testing the null hypothesis of zero correlation:
$$z = \frac{\tau}{s_\tau} = 3\tau\sqrt{\frac{C(n, 2)}{2n + 5}}$$

For smaller values of n the table of critical values found in Kendall's Tau Table can be used.

A study is designed to check the relationship between smoking and longevity. A sample of 15 men fifty years and older was taken and the average number of cigarettes smoked per day and the age at death was recorded, as summarized in the table in Figure. Can we conclude from the sample that longevity is independent of smoking?

We begin by sorting the original data in ascending order by longevity and then creating entries for inversions and ties as described above. Take a look at how we calculate the value in cell C8, i.e. the number of inversions for the data element in row 8. Since the number of cigarettes smoked by that person is 14 (the value in cell B8), we count the entries in column B below B8 that have a value smaller than 14. This is 5, since only the entries in cells B10, B14, B15, B16 and B18 have smaller values. We carry out the same calculation for each of the rows and sum the results to get 76 (the value in cell C19). This calculation is carried out by putting the array formula =COUNTIF(B5:B18,"<"&B4) in cell C4. Ties are handled in a similar way, using, for example, the array formula =COUNTIF(B5:B18,"="&B4) in cell E4.

Since p-value < α, the null hypothesis is rejected, and so we conclude there is a negative correlation between smoking and longevity.

We can also establish a 95% confidence interval for tau as follows:

$$\tau \pm z_{crit} \cdot s_\tau = -0.471 \pm (1.96)(0.192) = (-0.848, -0.094)$$

Two-sample comparison of means testing can be turned into a correlation problem by combining the two samples into one (random variable x) and setting the random variable y (the dichotomous variable) to 0 for elements in one sample and to 1 for elements in the other sample. It turns out that the two-sample analysis using the t-test is equivalent to the analysis of the correlation coefficient using the t-test.
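The equivalence stated above can be checked numerically. The following is a minimal sketch, assuming the pooled-variance (equal variances) form of the two-sample t-test and the usual t statistic for testing ρ = 0, t = r·sqrt((n - 2)/(1 - r²)). The scores are hypothetical values invented for illustration, not the data from the figure, and the function names are placeholders.

```python
import math
from statistics import mean


def pooled_t(sample_1, sample_0):
    """Two-sample t statistic (pooled variance) and its degrees of freedom."""
    n1, n0 = len(sample_1), len(sample_0)
    m1, m0 = mean(sample_1), mean(sample_0)
    ss = sum((v - m1) ** 2 for v in sample_1) + sum((v - m0) ** 2 for v in sample_0)
    df = n1 + n0 - 2
    s_pooled = math.sqrt(ss / df)
    t = (m1 - m0) / (s_pooled * math.sqrt(1 / n1 + 1 / n0))
    return t, df


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_t(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# Hypothetical scores, invented for illustration only.
control = [62, 70, 55, 81, 68, 74]
drug = [65, 59, 77, 72, 80, 66]

# Combine the samples into x and code group membership in y (0 = control, 1 = drug).
x = control + drug
y = [0] * len(control) + [1] * len(drug)

t_two_sample, df = pooled_t(drug, control)
r = pearson_r(x, y)
t_correlation = correlation_t(r, len(x))

print(f"two-sample t  = {t_two_sample:.6f} (df = {df})")
print(f"correlation t = {t_correlation:.6f}")
```

Both statistics share the same degrees of freedom (n - 2), so identical t values imply identical p-values.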
To investigate the effect of a new hay fever drug on driving skills, a researcher studies 24 individuals with hay fever: 12 who have been taking the drug and 12 who have not. All participants then entered a simulator and were given a driving test which assigned a score to each driver, as summarized in Figure.

Calculate the correlation coefficient r for x and y, and then test the null hypothesis H0: ρ = 0, which is equivalent to H0: μcontrol = μdrug.

Since t = 0.1 < 2.07 = tcrit (p-value = 0.921 > α = 0.05), we retain the null hypothesis; i.e. at the 5% significance level the data give no evidence of a difference between the two groups. The values for p-value and t are exactly the same as those that result from the t-test in Example, so again we conclude that the hay fever drug did not offer any significant improvement in driving results as compared to the control.

| correlation coefficient r | correlation degree |
|---|---|
| above 0.8 | very high |
| 0.6 - 0.8 | high |
| 0.4 - 0.6 | moderate |
| 0.2 - 0.4 | low |
| below 0.2 | very low |

A variable is dichotomous if it only takes two values (usually set to 0 and 1). The point-biserial correlation coefficient is simply the Pearson's product-moment correlation coefficient where one or both of the variables are dichotomous.

$$r^2 = \frac{t^2}{t^2 + df}$$

where t is the test statistic for two means hypothesis testing of variables x1 and x2 with t ~ T(df), x is a combination of x1 and x2, and y is the dichotomous variable. For two samples x and y with pooled standard deviation s,

$$t = \frac{(\bar{x} - \bar{y}) - (\mu_x - \mu_y)}{s\sqrt{\dfrac{1}{n_x} + \dfrac{1}{n_y}}}$$

The effect size for the comparison of two means is given by

$$d = \frac{\bar{x}_1 - \bar{x}_2}{s} = r\sqrt{\frac{df\,(n_1 + n_2)}{n_1 n_2 (1 - r^2)}} = 0.041$$

This means that the difference between the average memory recall score of the control group and that of the sleep-deprived group is only about 4.1% of a standard deviation. Note that this is the same effect size that was calculated in Example.

Alternatively, we can use φ (phi) as a measure of effect size. Phi is nothing more than r. For this example φ = r = 0.0214.
Since r² = 0.00046, we know that about 0.046% of the variation in the memory recall scores is explained by the amount of sleep. As a rough guide to effect size, r = 0.5 represents a large effect size (explains 25% of the variance), r = 0.3 represents a medium effect size (explains 9% of the variance), and r = 0.1 represents a small effect size (explains 1% of the variance).

In Independence Testing we used the chi-square test to determine whether two variables were independent. We now look at the Example using dichotomous variables.

A researcher wants to know whether there is a significant difference between two therapies for curing patients of cocaine dependence (defined as not taking cocaine for at least 6 months). She tests 132 patients and obtains the results in the figure. Calculate the point-biserial correlation coefficient for the data using dichotomous variables.

The point-biserial correlation coefficient is defined in terms of the following quantities:

- $\bar{X}_A$: the average of group A
- $S_t$: the standard deviation of groups A and B combined
- the proportion of observations that belong to group A

Chi-square tests for independence

This time let x = 1 if the patient is cured and x = 0 if the patient is not cured, and let y = 1 if therapy 1 is used and y = 0 if therapy 2 is used. Thus for 28 patients x = 1 and y = 1, for 10 patients x = 0 and y = 1, for 48 patients x = 1 and y = 0, and for 46 patients x = 0 and y = 0. If we list all 132 pairs of x and y in a column, we can calculate the correlation coefficient to get r = 0.207.

Property 1: If ρ = 0 (the null hypothesis), then

$$n r^2 \sim \chi^2(1)$$

This property provides an alternative method for carrying out chi-square tests such as the one we did.

Using Property 1 in Example 1, determine whether there is a significant difference between the two therapies for curing patients of cocaine dependence based on the data in Figure. With nr² = 5.67, the p-value = CHIDIST(5.67, 1) = 0.017 < α = 0.05, so we again reject the null hypothesis and conclude there is a significant difference between the two therapies.
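The relationship between r and the chi-square statistic can be checked directly from the four cell counts quoted above. This is a minimal sketch, assuming the Pearson chi-square statistic without continuity correction; it uses the closed-form 2 × 2 expression for r (φ) and the identity P(χ²(1) > v) = erfc(sqrt(v/2)) for the one-degree-of-freedom p-value. The function names are placeholders, not part of the original example.

```python
import math


def phi_from_counts(n11, n01, n10, n00):
    """Correlation of two 0/1 variables from cell counts.

    n11: x=1, y=1   n01: x=0, y=1
    n10: x=1, y=0   n00: x=0, y=0
    """
    numerator = n11 * n00 - n01 * n10
    denominator = math.sqrt(
        (n11 + n01) * (n10 + n00) * (n11 + n10) * (n01 + n00)
    )
    return numerator / denominator


def pearson_chi_square(observed):
    """Pearson chi-square statistic for a table given as a list of rows."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(observed):
        for j, obs in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (obs - expected) ** 2 / expected
    return chi2


def chi_square_1df_p_value(value):
    """Right-tail probability of a chi-square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(value / 2))


# Counts from the text: rows are therapy 1 and therapy 2, columns are cured / not cured.
observed = [[28, 10], [48, 46]]
n = sum(sum(row) for row in observed)

r = phi_from_counts(28, 10, 48, 46)
chi2 = pearson_chi_square(observed)
p_value = chi_square_1df_p_value(n * r * r)

print(f"r = {r:.3f}")                    # about 0.207
print(f"n * r^2 = {n * r * r:.2f}")      # about 5.67
print(f"chi-square = {chi2:.2f}")        # same value as n * r^2
print(f"p-value = {p_value:.3f}")        # about 0.017
```

The chi-square statistic computed from expected frequencies and the product n·r² agree up to floating-point rounding.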
If we calculate the value of χ² for independence as in Independence Testing, from the previous observation we conclude that

$$r^2 = \frac{\chi^2}{n}$$

This gives us a way to measure the effect size of the chi-square test of independence. There is clearly an important difference between the two therapies (not just a significant difference), but if you look at r² we see that only 4.3% of the variance is explained by the choice of therapy.

We calculated the correlation coefficient of x with y by listing all 132 values and then using Excel's correlation function CORREL. The following is an alternative approach for calculating r, which is especially useful if n is very large.

[Figure: data needed for calculating r]

First we repeat the data from Figure in Example 1 using the dummy variables x and y (in range F4:H7). Essentially this is a frequency table. We then calculate the means of x and y. E.g. the mean of x (in cell F10) is calculated by the formula =SUMPRODUCT(F4:F7,H4:H7)/H8.

Next we calculate $\sum (x_i - \bar{x})(y_i - \bar{y})$, $\sum (x_i - \bar{x})^2$ and $\sum (y_i - \bar{y})^2$ (in cells L8, M8 and N8), with each term weighted by its frequency. E.g. the first of these terms is calculated by the formula =SUMPRODUCT(L4:L7,O4:O7).

Now the point-biserial correlation coefficient is the first of these terms divided by the square root of the product of the other two, i.e.

$$r = \frac{\sum (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum (x_i - \bar{x})^2 \sum (y_i - \bar{y})^2}} = \frac{L8}{\sqrt{M8 \cdot N8}}$$
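The frequency-table approach above can be sketched outside the spreadsheet as well. The following is a minimal illustration, assuming each table row holds an (x, y, frequency) triple with the counts from the therapy example; the function name `weighted_correlation` is a placeholder and not part of the original worksheet.

```python
import math


def weighted_correlation(rows):
    """Pearson correlation from a frequency table of (x, y, frequency) rows."""
    n = sum(freq for _, _, freq in rows)

    # Frequency-weighted means, the counterpart of SUMPRODUCT(values, freq) / n.
    mean_x = sum(x * freq for x, _, freq in rows) / n
    mean_y = sum(y * freq for _, y, freq in rows) / n

    # Frequency-weighted sums of cross products and squared deviations.
    sxy = sum(freq * (x - mean_x) * (y - mean_y) for x, y, freq in rows)
    sxx = sum(freq * (x - mean_x) ** 2 for x, _, freq in rows)
    syy = sum(freq * (y - mean_y) ** 2 for _, y, freq in rows)

    return sxy / math.sqrt(sxx * syy)


# x = 1 if cured, y = 1 if therapy 1; frequencies as quoted in the example.
frequency_table = [
    (1, 1, 28),
    (0, 1, 10),
    (1, 0, 48),
    (0, 0, 46),
]

r = weighted_correlation(frequency_table)
print(f"r = {r:.3f}")          # about 0.207
print(f"r^2 = {r * r:.3f}")    # about 0.043
```

Only four rows are processed regardless of n, which is why this form is convenient when the number of observations is very large.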