Keynote 3: Zhang Bo (张钹)


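New Challenges: Content-Based Information Processing

Zhang Bo (张钹)

Tsinghua National Laboratory for Information Science and Technology

State Key Laboratory of Intelligent Technology and Systems

School of Information Science and Technology and Department of Computer Science, Tsinghua University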

I. Classical Information Theory

Semantics vs. Information Processing

• Frequently the messages have meaning; that is, they refer to or are correlated according to some system with certain physical or conceptual entities.

• These semantic aspects of communication are irrelevant to the engineering problem.

C. E. Shannon, A mathematical theory of communication, 1948

Classical Communication Model

• Communication Model:

Sender

Receiver

$P(x)$, $P(y \mid x)$, $P(x \mid y)$

(Markov) Stochastic Process

-C. E. Shannon
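A minimal sketch of how the three distributions in the communication model relate, assuming a binary symmetric channel. The prior, the flip probability 0.1, and the function names are illustrative assumptions, not taken from the talk. It computes the posterior $P(x \mid y)$ by Bayes' rule and the receiver's remaining uncertainty $H(X \mid Y)$ (Shannon's equivocation).

```python
import math

def posterior(prior, channel):
    """Return P(x | y) as post[y][x], from P(x) and channel[x][y] = P(y | x)."""
    n_x, n_y = len(prior), len(channel[0])
    post = []
    for y in range(n_y):
        joint = [prior[x] * channel[x][y] for x in range(n_x)]
        p_y = sum(joint)
        post.append([j / p_y if p_y > 0 else 0.0 for j in joint])
    return post

def equivocation(prior, channel):
    """Return H(X | Y) in bits: the uncertainty about X left after seeing Y."""
    post = posterior(prior, channel)
    h = 0.0
    for y, row in enumerate(post):
        p_y = sum(prior[x] * channel[x][y] for x in range(len(prior)))
        h -= p_y * sum(p * math.log2(p) for p in row if p > 0)
    return h

# Assumed example: uniform binary source, each bit flipped with probability 0.1.
prior = [0.5, 0.5]
channel = [[0.9, 0.1], [0.1, 0.9]]
post = posterior(prior, channel)
h_x_given_y = equivocation(prior, channel)
```

With these assumed numbers the receiver is 90% sure of the sent bit, and about 0.47 bits of uncertainty remain per symbol. Nothing in the calculation depends on what the symbols mean.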

Turing's Theory of Computation

Deterministic Turing Machine

(1937)

Finite

State

Controller

Computability

Algorithms

Computational

Complexity

P

tape

Q

Read-write Head

-4 -3 -2 -1 0 +1
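The components named on this slide (finite state controller, tape, read-write head) can be sketched as a tiny simulator. The rule table below, a machine that inverts every bit and halts at the first blank, is an assumed example, and the names `run_turing_machine` and `rules` are hypothetical.

```python
def run_turing_machine(rules, tape, state="q0", head=0, blank="_", max_steps=1000):
    """Simulate a deterministic Turing machine.

    rules maps (state, symbol) -> (next_state, symbol_to_write, move),
    where move is "L", "R", or "N" (no move).
    """
    cells = dict(enumerate(tape))              # sparse tape: position -> symbol
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)        # read under the head
        state, write, move = rules[(state, symbol)]
        cells[head] = write                    # write, then move the head
        head += {"L": -1, "R": 1, "N": 0}[move]
    lo, hi = min(cells), max(cells)
    content = "".join(cells.get(i, blank) for i in range(lo, hi + 1))
    return content.strip(blank), state

# Assumed example machine: flip each bit while moving right, halt on blank.
rules = {
    ("q0", "0"): ("q0", "1", "R"),
    ("q0", "1"): ("q0", "0", "R"),
    ("q0", "_"): ("halt", "_", "N"),
}
result, final_state = run_turing_machine(rules, "10110")
```

The controller is only the `rules` dictionary: all behavior is a lookup on the current state and the symbol under the head.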

Information Processing under Classical Information Theory

The conversion of latent information into

manifest information

- C. E. Shannon

$X$: $p(x)$

Dissipation

$Y$: $p(x \mid y)$

Equivocation

Transformation = equivocation - dissipation

Uncertainty (error, noise, deformation, ...)

Applications of Classical Information Theory in Text and Image Processing

The central paradigm of classical information

theory is the engineering problem of the

transmission of information over a noisy channel

• Communication: coding, data compression, noisy-channel coding, ...

• Text: editing, compression, spelling and syntax correction, ...

• Image: editing, lossless compression, noise suppression, enhancement, ...

II. Content-Based (Semantic) Information Processing

Information (Web) Age

• Complex (varied) information: text, image, speech, video, ...

• A huge amount of data

• Man-Machine Interaction

Content (Semantics) based Information Processing

Information Retrieval, Classification, Summarization, Recognition, Understanding, ...

From Processing Form to Processing Related to Content (Semantics)

Natural Man-Machine Interaction

Users

Input

(Encoding)

Codes

Form

Content

Computer

Computer

Network

Output

(Decoding)

III. Syntactic Analysis

Holistic Coding (Gestalt)

?

Semantics S

The basic properties among different quotient

spaces such as the falsity preserving, the truth

preserving properties are discussed. There are

three quotient-space model construction

approaches.

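Digital video coding technology has a history of half a century and has made great progress: from differential predictive coding in the 1950s, to transform coding and block-based motion-predictive coding in the 1970s, to the now-emerging distributed coding, stereoscopic coding, multi-view coding, visual coding, and so on.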

11001001010100100010101010

01111100001010100000100011

11110111100100100010001000

00010010101000100111110100

Code (Form) X

00001001010100111110101010

00001110101011010001000111

01010001100101101110100100

11001000100111100100000111

Rule-based, Knowledge-based,

Top-down, Parsing (text)

Advantages:

Deliberative behaviors (AI expert systems:

decision making, diagnosis, design,…)

Specific domain, Small-size

Disadvantages:

Perception, Common sense, Natural language, ...

Uncertainty (exception, ambiguity, vagueness, ...)

Detector

Semantically meaningful primitives

Text: word-sentence-chapter

Image: subpart-part-object

There is no clear boundary among parts

Segmentation Problem

Descriptor

Structural uncertainty

K. S. Fu, Syntactic pattern recognition, New York: Prentice-Hall,

1974

D. Marr, Vision, New York: Freeman, 1982

Image Segmentation (Word Segmentation)

Where is

the object ?

What is the

object ?

Chicken or

Egg ?

Structural Analysis

Structural analysis, Rule-based, Syntax

The basic properties among different quotient

spaces such as the falsity preserving, the truth

preserving properties are discussed. There are

three quotient-space model construction

approaches.

11001001010100100010101010

01111100001010100000100011

11110111100100100010001000

00010010101000100111110100

Image: Part, Object, … Text: Words, Sentences, …

• Uncertainty problem

• Scalability

Syntactic analysis faces difficulties!

IV. Probabilistic and Statistical Models

Image (text) Classification:

Categories

Low level and local features (words)

Easily detectable by computer but less semantically meaningful

Colors, Textures, Bag of words

Uncertainty Management

• How to deal with uncertainty ?

• A probabilistic information processing model

Regularity:

Probabilistic distribution

Examples:

Caltech 101, 25 objects


R. O. Duda & P. E. Hart, Pattern Classification and Scene Analysis,

New York: John Wiley & Sons, 1973

Bag of (Visual) Words

• Defined in image patches (2005-06)

• Descriptors extracted around interest points

(2002-2004)

• Edge contours (2005-06)

• Regions (2005-06)

Detector & Descriptor

Kadir saliency region (points)

Histograms of Oriented Gradients (HOG): 72 dimensions

Zuo Yuanyuan (2010-)

$$CB(w) = \frac{1}{n} \sum_{i=1}^{n} \begin{cases} 1 & \text{if } w = \arg\min_{v \in V} D(v, r_i) \\ 0 & \text{otherwise} \end{cases}$$

CB-Codebook (Histograms)

n-the number of regions in an image

ri-an image region i

D(w,ri)-the distance between a codeword w

and region ri

V-vocabulary
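The codebook histogram CB(w) defined above can be sketched in a few lines, assuming Euclidean distance for D and two-dimensional toy descriptors. Both are assumptions for illustration (the slide mentions 72-dimensional HOG descriptors), and `codebook_histogram` is a hypothetical name.

```python
import math

def codebook_histogram(regions, vocabulary):
    """Return CB(w) for every codeword w in the vocabulary.

    Each region votes for its nearest codeword (hard assignment), and the
    votes are divided by n, the number of regions in the image.
    """
    counts = [0] * len(vocabulary)
    for r in regions:
        nearest = min(range(len(vocabulary)),
                      key=lambda k: math.dist(vocabulary[k], r))
        counts[nearest] += 1
    n = len(regions)
    return [c / n for c in counts]

# Toy data (assumed): three codewords and four region descriptors.
vocabulary = [(0.0, 0.0), (1.0, 1.0), (4.0, 0.0)]
regions = [(0.1, 0.2), (0.9, 1.2), (1.1, 0.8), (3.7, 0.1)]
hist = codebook_histogram(regions, vocabulary)
```

The resulting vector is the image's bag-of-visual-words representation. It records how often each codeword occurs and discards where the regions were.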

Sparse Structure of the Data Space (Sparsity)

Low-dimensional structure in high-dimensional space

Data Structure

Objects          Precision

Airplane         0.947

Car-side         0.987

Dalmatian        0.733

Faces            0.760

Leopard          0.827

Pagoda           0.760

Stop-sign        0.800

Windsor-chair    0.787

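Optimization

Sparse representation in sample space ($L_1$ norm)

$$\hat{x}_1 = \arg\min \|x\|_1 \quad \text{subject to} \quad Ax = y$$

$$\hat{x}_1 = \arg\min \|x\|_1 \quad \text{subject to} \quad \|Ax - y\|_2 \le \varepsilon$$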

Image Database

Experimental Results

Spatial Structure of Data (Non-sparsity)

Data-driven Approach: learning from web data

German

Speech

Recognition

English

Learning from Data

(probabilistic models)

Speech

Synthesis

Annotation

Text1

Translation

Service

Microsoft Research Asia

Text2

Fundamental Shortcomings of Probabilistic Methods

• The semantic gap between low-level local features

and high-level global concepts

Less semantically meaningful features: colors or

their distribution (histogram), gray-values or their

distribution, visual words (descriptors from interest

points), image patches, image regions, edge, …

• Lack of structural knowledge

Generalization capacity

Information processing without understanding

V. New Research Directions

Sender

Reader

X

X

S

Uncertainty

F (W,D)

The Revival of Syntactic Analysis

Holistic (Gestalt), Probability, Inference

Information Structure Analysis

• Syntactic + Probabilistic

• Data-driven + Knowledge-driven

• Bottom-up + Top-down

• Part-based (Shape-based)

Information Structure

?

Semantics

The basic properties among different quotient

spaces such as the falsity preserving, the truth

preserving properties are discussed. There are

three quotient-space model construction

approaches.

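Digital video coding technology has a history of half a century and has made great progress: from differential predictive coding in the 1950s, to transform coding and block-based motion-predictive coding in the 1970s, to the now-emerging distributed coding, stereoscopic coding, multi-view coding, visual coding, and so on.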

11001001010100100010101010

01111100001010100000100011

11110111100100100010001000

00010010101000100111110100

Code (Holistic)

00001001010100111110101010

00001110101011010001000111

01010001100101101110100100

11001000100111100100000111

Information Structure Analysis

History (Structural Analysis)

(1) Linguistics

Information Structure-Syntactic representation

Information packaging, Building the semantics

Focus, Topic, background, comment,…

• M. A. K. Halliday (1967) Notes on transitivity and theme in English, Part II,

Journal of Linguistics 3:199-244

• W. L. Chafe, Language and consciousness, Language 50:111-133

(2) Psychology

Structural Information Theory

• Coding: descriptive complexities rather than frequencies of occurrence (Shannon)

• Information Structure:

Formalization of visual regularity: iteration,

symmetry, and alternation

E. L. J. Leeuwenberg (1968) Structural information of visual

patterns: an efficient coding system in perception, The Hague:

Mouton

E. L. J. Leeuwenberg (1969), Quantitative specification of

information in sequential patterns, Psychological Review, 76,

216-220

(3) Information Science

Algorithmic Information Theory

Kolmogorov Complexity

16 bits

1111111111111111

0101101001011010

1001110111010111

1100010001001011

R. J. Solomonoff (1964), A formal theory of inductive inference,

Information & Control, V.7, No.1, pp1-22, No.2, pp. 224-254

A. N. Kolmogorov (1965), Three approaches to the definition of

the quantity of information, Problems of Information

Transmission, No. 1, pp. 3-11

Mining and Exploiting Information Structure

• Kolmogorov complexity

• Related data structures

low-dimensional structure in high-dimensional

data space

• Under the probabilistic (structure) framework

• Learning from human beings

(1) Kolmogorov Complexity

The absolute measure of information content

in an individual finite object

Algorithmic information theory

The minimum description length

$$K(x) = \begin{cases} \min\{|p| : f(p) = x\} & \text{if } x \in \operatorname{ran} f \\ \infty & \text{otherwise} \end{cases}$$

Information Distance

$$d_{\max}(x, y) = \frac{\max\{K(x \mid y), K(y \mid x)\}}{\max\{K(x), K(y)\}}$$

$$d_{\min}(x, y) = \frac{\min\{K(x \mid y), K(y \mid x)\}}{\min\{K(x), K(y)\}}$$

$K(\cdot)$ is non-computable

C. Bennett , et al, Information distance, IEEE Trans. on Information

Theory, vol.44, no.4, 1998, pp.1407-1423

Approximate Computation Methods

A statistical approach to Kolmogorov complexity

(information distance)

f(x)-frequency

N-total number

$$d(x, y) \approx \mathrm{NSD}(x, y) = \frac{\max\{\log f(x), \log f(y)\} - \log f(x, y)}{\log N - \min\{\log f(x), \log f(y)\}}$$
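A minimal sketch of the normalized statistical distance, assuming f(x) and f(y) are occurrence counts of the two terms, f(x, y) is their co-occurrence count, and all counts are positive. The formula follows from the max-form information distance when K(x) is approximated by log N - log f(x). The counts in the example are made up.

```python
import math

def nsd(f_x, f_y, f_xy, n_total):
    """Normalized statistical distance from occurrence counts.

    f_x, f_y : number of items containing x, containing y
    f_xy     : number of items containing both (must be > 0)
    n_total  : total number of items N
    """
    lx, ly, lxy = math.log(f_x), math.log(f_y), math.log(f_xy)
    return (max(lx, ly) - lxy) / (math.log(n_total) - min(lx, ly))

# Made-up counts: two terms that always co-occur, and two that rarely do.
same = nsd(1000, 1000, 1000, 10**6)
apart = nsd(1000, 100, 10, 10**6)
```

Terms that always appear together get distance 0, and the distance grows as co-occurrence becomes rarer relative to the individual frequencies.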

Semantic & Formal Distance

$$\mathrm{CDM}(x, y) = \frac{\max\{C(x \mid y), C(y \mid x)\}}{\max\{C(x), C(y)\}}$$

CDM-Compressed Distance Measure (ASCII)

NSD-Normalized Statistical Distance

Xian Zhang, Yu Hao, et al, New information distance measure

and its application in question answering system, J. Comput.

Sci. & Tech. 23(4), 557-572, 2008
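A compression-based distance of this form can be sketched with a general-purpose compressor standing in for C. The choice of `zlib` and the approximation C(x | y) ≈ C(yx) - C(y) are assumptions made for illustration, not necessarily the procedure used in the cited paper.

```python
import random
import zlib

def c(data: bytes) -> int:
    """Compressed length in bytes, used as a computable stand-in for K."""
    return len(zlib.compress(data, 9))

def c_cond(x: bytes, y: bytes) -> int:
    """Approximate C(x | y) as the extra bytes needed for x once y is known."""
    return max(0, c(y + x) - c(y))

def cdm(x: bytes, y: bytes) -> float:
    """max{C(x|y), C(y|x)} / max{C(x), C(y)}."""
    return max(c_cond(x, y), c_cond(y, x)) / max(c(x), c(y))

text = b"the quick brown fox jumps over the lazy dog " * 20
rng = random.Random(0)                      # fixed seed for repeatability
noise = bytes(rng.randrange(256) for _ in range(len(text)))

near = cdm(text, text)    # a string compared with itself
far = cdm(text, noise)    # a string compared with unrelated bytes
```

This measure compares the form of the byte strings only: two texts that say the same thing in different words can still be far apart.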

Information Distance Between Two Texts

Text representation: a bag of words

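$$T_1 \rightarrow W_1 = \{w_{11}, w_{12}, \ldots, w_{1n}\}$$

$$T_2 \rightarrow W_2 = \{w_{21}, w_{22}, \ldots, w_{2m}\}$$

$$D_{\max}(T_1, T_2) = \max\{K(T_1 \mid T_2), K(T_2 \mid T_1)\}$$

$$K(T_1 \mid T_2) = K\Big(\bigcup_i w_{1i} \setminus \bigcup_j w_{2j}\Big)$$

$$K(T_2 \mid T_1) = K\Big(\bigcup_i w_{2i} \setminus \bigcup_j w_{1j}\Big)$$

$$K(W) = \sum_{w \in W} K(w), \qquad K(w) = -\log f(w)$$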
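The bag-of-words distance above can be sketched as follows, assuming f(w) is a relative word frequency in (0, 1] so that K(w) = -log2 f(w) is non-negative. The frequency table is made up, and the function names are hypothetical.

```python
import math

def k_word(word, freq):
    """K(w) approximated as -log2 f(w): rarer words carry more information."""
    return -math.log2(freq[word])

def k_cond(words_a, words_b, freq):
    """K(T_a | T_b): total cost of the words in T_a that T_b does not contain."""
    return sum(k_word(w, freq) for w in set(words_a) - set(words_b))

def d_max(words_1, words_2, freq):
    """D_max(T1, T2) = max{K(T1 | T2), K(T2 | T1)}."""
    return max(k_cond(words_1, words_2, freq), k_cond(words_2, words_1, freq))

# Made-up relative frequencies for a four-word vocabulary.
freq = {"the": 0.5, "cat": 0.125, "dog": 0.125, "sleeps": 0.25}
t1 = ["the", "cat", "sleeps"]
t2 = ["the", "dog"]
distance = d_max(t1, t2, freq)
```

Here T1 needs "cat" and "sleeps" beyond T2 (3 + 2 bits), while T2 needs only "dog" beyond T1 (3 bits), so the distance is 5 bits. The shared word "the" contributes nothing.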

Text (Image) Retrieval

Image-Text

information distance

Web

[Figure: web data as text items t1, t2, t3, ..., tn linked to images i1, i2, ..., in]

(2) Data Structure: low-dimensional structure in high-dimensional space

Sparse Representation

$$\hat{x}_1 = \arg\min \|x\|_1 \quad \text{subject to} \quad Ax = y \ \text{ or } \ BAx = z$$

A: $m \times n$ matrix

x: sample space, n-dimension

y: pixel, m-dimension

z: feature space, d-dimension

Yi Ma, UIUC, USA
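As a rough illustration of L1-based sparse representation, the sketch below uses iterative soft-thresholding (ISTA) on the penalized form, minimizing 0.5·||Ax - y||² + λ·||x||₁. This is a swapped-in, simpler relative of the constrained problem on the slide, not the solver used in the cited work. The matrix, the value of λ, and the function names are assumptions.

```python
def soft_threshold(v, t):
    """Shrink v toward zero by t; the proximal step for the L1 norm."""
    if abs(v) <= t:
        return 0.0
    return v - t if v > 0 else v + t

def objective(A, x, y, lam):
    """0.5 * ||Ax - y||^2 + lam * ||x||_1."""
    residual = [sum(a * xi for a, xi in zip(row, x)) - yi for row, yi in zip(A, y)]
    return 0.5 * sum(r * r for r in residual) + lam * sum(abs(xi) for xi in x)

def ista(A, y, lam=0.1, iters=500):
    m, n = len(A), len(A[0])
    # The squared Frobenius norm upper-bounds the largest eigenvalue of A^T A,
    # so 1 / lipschitz is a safe (conservative) step size.
    lipschitz = sum(a * a for row in A for a in row)
    x = [0.0] * n
    for _ in range(iters):
        residual = [sum(A[i][j] * x[j] for j in range(n)) - y[i] for i in range(m)]
        grad = [sum(A[i][j] * residual[i] for i in range(m)) for j in range(n)]
        x = [soft_threshold(x[j] - grad[j] / lipschitz, lam / lipschitz)
             for j in range(n)]
    return x

# Assumed toy problem: y equals the third column of A, so one atom suffices.
A = [[1.0, 0.0, 1.0, 0.5],
     [0.0, 1.0, 1.0, -0.5]]
y = [1.0, 1.0]
x_hat = ista(A, y)
```

The solution puts all its weight on the third column and none on the first two, even though columns one and two together also reproduce y. The penalty shrinks the nonzero coefficient slightly below 1.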

(3) Flat Structural Models

Image Region Annotation: horse, sky, mountain, grass, tree

Yuan Jinhui (2008-)

Region-adaptive Grid Partition

$$z^{*} = \arg\max_{z} p(z \mid I) = \arg\max_{z} p(I \mid z)\, p(z)$$

$$p(I, z) = \frac{1}{Z} \exp\Big\{ \sum_{i} \lambda_i f(I(x_i), z_i) + \sum_{i,j} \lambda_{i,j}\, g(z_i, z_j) \Big\}$$

$$p(I, z) = p(I \mid z)\, p(z)$$

I-data

xi-image position

z-state (feature)

g(zi,zj)-the probabilistic

constraints (co-occurrence)
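To make the roles of f and g concrete, here is a minimal sketch that finds the most probable labeling of three grid cells by brute-force enumeration. It assumes all weights equal 1, a chain of three cells, and made-up scores. Exhaustive search is only feasible for toy sizes and stands in for real inference.

```python
import itertools

def log_score(labels, unary, pairwise, edges):
    """Unnormalized log p(I, z): unary terms f plus pairwise terms g."""
    score = sum(unary[i][z] for i, z in enumerate(labels))
    score += sum(pairwise[(labels[i], labels[j])] for i, j in edges)
    return score

def map_labels(unary, pairwise, edges, states):
    """Return the labeling z* with the highest score (exhaustive search)."""
    return max(itertools.product(states, repeat=len(unary)),
               key=lambda z: log_score(z, unary, pairwise, edges))

states = ("sky", "grass")
# unary[i][z] plays the role of f(I(x_i), z_i): how well cell i looks like z.
unary = [{"sky": 2.0, "grass": 0.0},
         {"sky": 0.9, "grass": 1.0},     # ambiguous middle cell
         {"sky": 2.0, "grass": 0.0}]
# pairwise plays the role of g(z_i, z_j): neighbors prefer equal labels.
pairwise = {(a, b): (0.5 if a == b else 0.0) for a in states for b in states}
edges = [(0, 1), (1, 2)]

independent = tuple(max(states, key=lambda s: u[s]) for u in unary)
joint = map_labels(unary, pairwise, edges, states)
```

Labeling each cell on its own calls the middle cell "grass". Adding the co-occurrence term flips it to "sky", which is the effect structural knowledge is meant to have.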

region-based

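Different Flat Structural Models

Model Learning

n images; each image has $m_i = H \times V$ grids

$$(x, y) = \{(x^{i}, y^{i})\}, \quad i = 1, 2, \ldots, n$$

$$(x^{i}, y^{i}) = \{(x^{i}_{j}, y^{i}_{j})\}, \quad j = 1, 2, \ldots, m_i$$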

(a) i.i.d generative model

(b) i.i.d. discriminative model

(c) 2-dimensional hidden Markov (2D HMM)

(d) Markov Random Field (MRF)

(e) Conditional Random Field (CRF)

Label Configuration

Given training data $(x^{i}, y^{i}),\ i = 1, 2, \ldots, N$,

MAP (maximum a posteriori) label configuration:

$$y^{*} = \arg\max_{y^{1:m}} P(y^{1:m} \mid x^{1:m})$$

For 2D HMM, MRF, and CRF: using the path-limited Viterbi algorithm
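For orientation, the sketch below is the standard one-dimensional Viterbi algorithm for an HMM, working in log probabilities. It is not the path-limited two-dimensional variant mentioned on the slide, whose details the slides do not give. The states, observations, and probabilities are made-up examples.

```python
import math

def viterbi(obs, states, log_start, log_trans, log_emit):
    """Return the most probable state sequence for the observations."""
    best = {s: log_start[s] + log_emit[s][obs[0]] for s in states}
    back = []                                   # back-pointers, one dict per step
    for o in obs[1:]:
        prev, best, pointers = best, {}, {}
        for s in states:
            p = max(states, key=lambda q: prev[q] + log_trans[q][s])
            best[s] = prev[p] + log_trans[p][s] + log_emit[s][o]
            pointers[s] = p
        back.append(pointers)
    path = [max(states, key=lambda s: best[s])]
    for pointers in reversed(back):             # follow pointers backwards
        path.append(pointers[path[-1]])
    return path[::-1]

def logs(table):
    """Convert a nested dict of probabilities to log probabilities."""
    return {k: {j: math.log(p) for j, p in row.items()} for k, row in table.items()}

states = ("sky", "grass")
log_start = {"sky": math.log(0.5), "grass": math.log(0.5)}
log_trans = logs({"sky": {"sky": 0.8, "grass": 0.2},
                  "grass": {"sky": 0.2, "grass": 0.8}})
log_emit = logs({"sky": {"blue": 0.9, "green": 0.1},
                 "grass": {"blue": 0.2, "green": 0.8}})

labels = viterbi(["blue", "blue", "green", "green"],
                 states, log_start, log_trans, log_emit)
```

Dynamic programming keeps only the best path into each state at every step, so the cost grows linearly with sequence length rather than exponentially.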

Markov Random Field (MRF) Model

Probabilistic distribution P

Cs: labeling clique, C0: labeling and feature

clique

y* the optimal label configuration

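$$P(x^{1:m}, y^{1:m}) = \frac{1}{Z} \prod_{(y^{i}, y^{j}) \in C_s} \psi(y^{i}, y^{j}) \prod_{(y^{k}, x^{k}) \in C_0} \phi(y^{k}, x^{k})$$

$$y^{*} = \arg\max_{y^{1:m}} \prod_{(y^{i}, y^{j}) \in C_s} \psi(y^{i}, y^{j}) \prod_{(y^{k}, x^{k}) \in C_0} \phi(y^{k}, x^{k})$$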

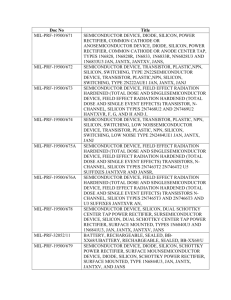

Learning Algorithms for Structured Prediction

Learning Rules | Classification | Structural Prediction

Maximal Joint Likelihood Estimation | Naïve Bayesian Network | Hidden Markov Model (1966) [1]

Maximal Conditional Likelihood Estimation | Logistic Regression | Conditional Random Field (2001) [2]

Maximal Margin Learning | SVM | Maximal Margin Markov Net (2003) [3]

Maximal Entropy Discrimination Learning | Maximal Entropy Discrimination Model | Maximal Entropy Discrimination Markov Net (2008) [4]

References

[1] L. E. Baum and T. Petrie. Statistical Inference for Probabilistic

Functions of Finite State Markov Chains. The Annals of Mathematical

Statistics, Vol. 37, No. 6, pp.1554-1563, 1966

[2] J. Lafferty et al. Conditional Random Fields: Probabilistic Models

for Segmenting and Labeling Sequence Data. In Proc. of

International Conference on Machine Learning (ICML), 2001

[3] B. Taskar et al., Max-Margin Markov Networks. Advances in

Neural Information Processing Systems (NIPS), 2003

[4] J. Zhu et al., Laplace Maximum Margin Markov Networks, In Proc.

of International Conference on Machine Learning (ICML), 2008

Experimental Setup

• 4002 Corel images (384×256 or 256×384)

• 11 basic (region) concepts

• Features: color moment + wavelet

• 5 models: 2 without structural knowledge

(GMM, SVM)

3 with structural knowledge

(HMM*, MRF*, CRF*)

Image Region Annotation Categories

Comparison of Different Models

GMM: Gaussian Mixture Model (30 components)

SVM: Support Vector Machine

Gaussian kernel, one-against-one

HMM: Hidden Markov Model

MRF: Markov Random Field

CRF: Conditional Random Field

Path-limited Viterbi algorithm

Experimental Results

(4) Latent Hierarchical Model

Web Page Extraction

Name, Image, Price, Description,

etc.

Given Data Record

[Figure: page hierarchy. Web Page: {Head}, {Info Block}, {Repeat block}, {Note}, {Tail}. Each Data Record groups leaf blocks such as {image}, {name}, {price}, {name, price}, {desc}, and {Note}, labeled Image, Name, Price, Desc, Note.]

Computational efficiency

Long-range dependency

Joint extraction

Zhu Jun (2008- )

Experiment

Web page extraction

Name, Photo, Price ,

Description

Models

Multi-layer CRFs, Multi-layer M^3N, PoMEN, Partially observed HCRFs

Data set: 37 templates

Training: 185 pages (5 per template), or 1585 data records

Testing: 370 pages (10 per template), or 3391 data records

[Figure: web page hierarchy with Data Record nodes and Image, Name, Desc, Price, Note leaves]

Results

Performance measures:

• Avg F1: average F1 over all attributes

• Block instance accuracy: % of records whose Name, Image, and Price are correct

Performance per Attribute

2015/4/13

PhD dissertation pre-defense


(5) Visual Mechanisms of the Human Brain

Bottom-up and Top-down

Top-down feedback

High-level

Top-down feedback

Prior knowledge

Local connection

Data-driven

V1

V2 …

IT

Visual Primitive Elements

• 114 basis functions

Images: 12×12 pixels

• HOG

Sparse Coding

$$I(x, y) = \sum_{i} a_i \phi_i(x, y)$$

$$E = \sum_{x,y} \Big[ I(x, y) - \sum_{i} a_i \phi_i(x, y) \Big]^2 + \sum_{i} S(a_i)$$

$I(x, y)$: images

$\phi_i(x, y)$: basis functions

$a_i$: coefficients

$S(x) = \log(1 + x^2)$ or $|x|$: a non-linear function
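The sparse coding energy can be evaluated directly for a toy case. The sketch below assumes images and basis functions are flattened to lists of pixel values and uses S(a) = log(1 + a²). The four-pixel "image", the three basis functions, and the function names are made up for illustration.

```python
import math

def reconstruct(coeffs, basis):
    """Sum_i a_i * phi_i, evaluated at every (flattened) pixel position."""
    return [sum(a * phi[p] for a, phi in zip(coeffs, basis))
            for p in range(len(basis[0]))]

def energy(image, coeffs, basis, sparsity=lambda a: math.log(1.0 + a * a)):
    """Reconstruction error plus the sparsity penalty sum_i S(a_i)."""
    recon = reconstruct(coeffs, basis)
    error = sum((i - r) ** 2 for i, r in zip(image, recon))
    return error + sum(sparsity(a) for a in coeffs)

# Toy setup (assumed): 4-pixel images and 3 basis functions.
basis = [[1.0, 0.0, 0.0, 0.0],
         [0.0, 1.0, 0.0, 0.0],
         [0.5, 0.5, 0.5, 0.5]]
image = [2.0, 0.0, 0.0, 0.0]

e_sparse = energy(image, [2.0, 0.0, 0.0], basis)    # one active coefficient
e_dense = energy(image, [1.0, -1.0, 2.0], basis)    # three active coefficients
```

The single-coefficient code reconstructs the image exactly and pays only one penalty term, so its energy is lower than that of the dense code. Minimizing E over the coefficients favors such codes.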

Object Recognition

with sparse,

localized

features

MIT-CSAIL-TR-2006-028 T. Serre

HMAX-sum + max

Interdisciplinary Research

Center for Cognitive and Neural Computation

Tsinghua University, Beijing

• Computational Neuroscience

• System Neuroscience

• Intelligent Technology and Systems

• Neural Information and Brain-computer interface

• Learning and Memory

• Cognitive Psychology

Thank you!