PVTOL Overview


Parallel Vector Tile-Optimized Library (PVTOL) Architecture

Jeremy Kepner, Nadya Bliss, Bob Bond, James Daly, Ryan Haney, Hahn Kim, Matthew Marzilli, Sanjeev Mohindra, Edward Rutledge, Sharon Sacco, Glenn Schrader

MIT Lincoln Laboratory

May 2007

This work is sponsored by the Department of the Air Force under Air Force contract FA8721-05C-0002. Opinions, interpretations, conclusions and recommendations are those of the author and are not necessarily endorsed by the United States Government.

MIT Lincoln Laboratory

PVTOL-1

4/13/2015

Outline

• Introduction
• PVTOL Machine Independent Architecture
– Machine Model
– Hierarchical Data Objects
– Data Parallel API
– Task & Conduit API
– pMapper
• PVTOL on Cell
– The Cell Testbed
– Cell CPU Architecture
– PVTOL Implementation Architecture on Cell
– PVTOL on Cell Example
– Performance Results
• Summary

PVTOL Effort Overview

Goal: Prototype advanced software technologies to exploit novel processors for DoD sensors
• Have demonstrated 10x performance benefit of tiled processors
• Novel storage should provide 10x more IO
[Figure labels: Tiled Processors; CPU in disk drive]

Approach: Develop Parallel Vector Tile Optimizing Library (PVTOL) for high performance and ease-of-use
[Figure labels: Automated Parallel Mapper (arrays A, B, C and FFTs on processors P0, P1, P2); PVTOL with two ~1 TByte RAID disks; Hierarchical Arrays]

DoD Relevance: Essential for flexible, programmable sensors with large IO and processing requirements
[Figure labels: Wideband Digital Arrays; Massive Storage]
• Wide area data
• Collected over many time scales

Mission Impact:
• Enabler for next-generation synoptic, multi-temporal sensor systems

Technology Transition Plan
• Coordinate development with sensor programs
• Work with DoD and Industry standards bodies
[Figure label: DoD Software Standards]

Embedded Processor Evolution

[Figure: High Performance Embedded Processors. MFLOPS / Watt (log scale, 10 to 10000) versus Year (1990 to 2010). Points: i860 XR, SHARC, 603e, 750, MPC7400, MPC7410, MPC7447A, Cell (estimated). Legend: i860, SHARC, PowerPC, PowerPC with AltiVec, Cell.]

• 20 years of exponential growth in FLOPS / Watt
• Requires switching architectures every ~5 years
• Cell processor is current high performance architecture

Cell Broadband Engine

• Cell was designed by IBM, Sony and Toshiba
• Asymmetric multicore processor
– 1 PowerPC core + 8 SIMD cores
• Playstation 3 uses Cell as main processor
• Provides Cell-based computer systems for high-performance applications

Multicore Programming Challenge

Past Programming Model: Von Neumann
• Great success of Moore’s Law era
– Simple model: load, op, store
– Many transistors devoted to delivering this model
• Moore’s Law is ending
– Need transistors for performance

Future Programming Model: ???
• Processor topology includes:
– Registers, cache, local memory, remote memory, disk
• Cell has multiple programming models

Increased performance at the cost of exposing complexity to the programmer

Parallel Vector Tile-Optimized Library (PVTOL)

• PVTOL is a portable and scalable middleware library for multicore processors
• Enables incremental development
1. Develop serial code (Desktop)
2. Parallelize code (Cluster)
3. Deploy code (Embedded Computer)
4. Automatically parallelize code

Make parallel programming as easy as serial programming

PVTOL Development Process

Serial PVTOL code

int main(int argc, char *argv[]) {
  // Initialize PVTOL
  process pvtol(argc, argv);

  // Create input, weights, and output matrices
  typedef Dense<2, float, tuple<0, 1> > dense_block_t;
  typedef Matrix<float, dense_block_t, LocalMap> matrix_t;
  matrix_t input(num_vects, len_vect),
           filts(num_vects, len_vect),
           output(num_vects, len_vect);

  // Initialize arrays
  ...

  // Perform TDFIR filter
  output = tdfir(input, filts);
}

PVTOL Development Process

Parallel PVTOL code

int main(int argc, char *argv[]) {
  // Initialize PVTOL
  process pvtol(argc, argv);

  // Add parallel map
  RuntimeMap map1(...);

  // Create input, weights, and output matrices
  typedef Dense<2, float, tuple<0, 1> > dense_block_t;
  typedef Matrix<float, dense_block_t, RuntimeMap> matrix_t;
  matrix_t input(num_vects, len_vect, map1),
           filts(num_vects, len_vect, map1),
           output(num_vects, len_vect, map1);

  // Initialize arrays
  ...

  // Perform TDFIR filter
  output = tdfir(input, filts);
}

PVTOL Development Process

Embedded PVTOL code

int main(int argc, char *argv[]) {
  // Initialize PVTOL
  process pvtol(argc, argv);

  // Add hierarchical map
  RuntimeMap map2(...);

  // Add parallel map
  RuntimeMap map1(..., map2);

  // Create input, weights, and output matrices
  typedef Dense<2, float, tuple<0, 1> > dense_block_t;
  typedef Matrix<float, dense_block_t, RuntimeMap> matrix_t;
  matrix_t input(num_vects, len_vect, map1),
           filts(num_vects, len_vect, map1),
           output(num_vects, len_vect, map1);

  // Initialize arrays
  ...

  // Perform TDFIR filter
  output = tdfir(input, filts);
}

PVTOL Development Process

Automapped PVTOL code

int main(int argc, char *argv[]) {
  // Initialize PVTOL
  process pvtol(argc, argv);

  // Create input, weights, and output matrices
  // (no explicit map object is declared; AutoMap selects the mapping)
  typedef Dense<2, float, tuple<0, 1> > dense_block_t;
  typedef Matrix<float, dense_block_t, AutoMap> matrix_t;
  matrix_t input(num_vects, len_vect),
           filts(num_vects, len_vect),
           output(num_vects, len_vect);

  // Initialize arrays
  ...

  // Perform TDFIR filter
  output = tdfir(input, filts);
}

PVTOL Components

• Performance
– Achieves high performance
• Portability
– Built on standards, e.g. VSIPL++
• Productivity
– Minimizes effort at user level

PVTOL Architecture

Portability: Runs on a range of architectures
Performance: Achieves high performance
Productivity: Minimizes effort at user level

PVTOL preserves the simple load-store programming model in software


Machine Model - Why?

• Provides description of underlying hardware
• pMapper: Allows for simulation without the hardware
• PVTOL: Provides information necessary to specify map hierarchies

[Figure: Hardware is described by a Machine Model, a list of parameter assignments: size_of_double, cpu_latency, cpu_rate, mem_latency, mem_rate, net_latency, net_rate, …]
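As a rough illustration of how a mapper could use such parameters to simulate without the hardware, the sketch below stores them in a plain struct and estimates the time to move and process a block of data. The struct name, the units and the latency-plus-size-over-rate formula are assumptions made for this example; they are not taken from PVTOL or pMapper.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical container for the parameters named on the slide.
// Units are assumed: seconds, bytes per second, FLOP per second.
struct MachineParams {
    std::size_t size_of_double; // bytes
    double cpu_latency;         // seconds
    double cpu_rate;            // FLOP per second
    double mem_latency;         // seconds
    double mem_rate;            // bytes per second
    double net_latency;         // seconds
    double net_rate;            // bytes per second
};

// Assumed cost model: time = latency + amount / rate.
inline double transfer_time(double latency, double rate, double amount) {
    return latency + amount / rate;
}

// Estimated time to send n doubles over the network and then apply
// flops_per_elem floating-point operations to each element.
inline double estimate_time(const MachineParams& m, std::size_t n,
                            double flops_per_elem) {
    const double bytes = static_cast<double>(n * m.size_of_double);
    const double comm = transfer_time(m.net_latency, m.net_rate, bytes);
    const double comp = transfer_time(m.cpu_latency, m.cpu_rate,
                                      static_cast<double>(n) * flops_per_elem);
    return comm + comp;
}
```

A real model would also account for memory traffic (mem_latency, mem_rate) and for overlap of communication with computation; both are left out here to keep the sketch short.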

PVTOL Machine Model

• Requirements
– Provide hierarchical machine model
– Provide heterogeneous machine model
• Design
– Specify a machine model as a tree of machine models
– Each sub tree or a node can be a machine model in its own right

[Figure: machine model tree. Entire Network → Cluster of Clusters → CELL Cluster, Dell Cluster 2 GHz, Dell Cluster 3 GHz. The CELL Cluster contains CELL nodes, each with SPE 0 … SPE 7, each SPE with a local store (LS). The Dell clusters contain nodes Dell 0 … Dell 15 and Dell 0 … Dell 32.]

Machine Model UML Diagram

A machine model constructor can consist of just node information (flat) or additional children information (hierarchical).

A machine model can take a single machine model description (homogeneous) or an array of descriptions (heterogeneous).

[UML: a Machine Model has 0..* child Machine Models and 1 Node Model. A Node Model has 0..1 CPU Model, 0..1 Memory Model, 0..1 Disk Model and 0..1 Comm Model.]

PVTOL machine model is different from PVL machine model in that it separates the Node (flat) and Machine (hierarchical) information.

Machine Models and Maps

Machine model is tightly coupled to the maps in the application.
• Machine model defines layers in the tree
• Maps provide mapping between layers

[Figure: the machine model tree (Entire Network → Cluster of Clusters → CELL Cluster, Dell Cluster 2 GHz, Dell Cluster 3 GHz; CELL → SPE 0 … SPE 7 → LS; Dell 0 … Dell 15 and Dell 0 … Dell 32) with a map (grid:, dist:, policy:, ...) attached between adjacent layers.]

*Cell node includes main memory

Example: Dell Cluster

[Figure: array A* mapped onto a Dell Cluster (Dell 0, Dell 1, Dell 2, ...), each Dell node with a Cache]

clusterMap =
  grid: 1x8
  dist: block
  policy: default
  nodes: 0:7
  map: dellMap

dellMap =
  grid: 4x1
  dist: block
  policy: default

NodeModel nodeModelCluster, nodeModelDell, nodeModelCache;

// Flat machine model constructor (declared first so the models below can refer to it)
MachineModel machineModelCache
    = MachineModel(nodeModelCache);

// Hierarchical machine model constructors
MachineModel machineModelDell
    = MachineModel(nodeModelDell, 1, machineModelCache);
MachineModel machineModelMyCluster
    = MachineModel(nodeModelCluster, 32, machineModelDell);

*Assumption: each block of A fits into cache of each Dell node.

Example: 2-Cell Cluster

[Figure: array A* mapped onto CLUSTER → CELL 0, CELL 1 → SPE 0 … SPE 7 (per CELL) → LS (per SPE)]

clusterMap =
  grid: 1x2
  dist: block
  policy: default
  nodes: 0:1
  map: cellMap

cellMap =
  grid: 1x4
  dist: block
  policy: default
  nodes: 0:3
  map: speMap

speMap =
  grid: 4x1
  dist: block
  policy: default

NodeModel nmCluster, nmCell, nmSPE, nmLS;

// Declared leaf first so each model exists before it is used as a child
MachineModel mmLS = MachineModel(nmLS);
MachineModel mmSPE = MachineModel(nmSPE, 1, mmLS);
MachineModel mmCell = MachineModel(nmCell, 8, mmSPE);
MachineModel mmCellCluster = MachineModel(nmCluster, 2, mmCell);

*Assumption: each block of A fits into the local store (LS) of the SPE.
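The constructor forms used in the two examples (flat, hierarchical with a repeated child, and heterogeneous with an array of children) can be sketched as a small tree class. This is a minimal stand-in written for illustration, not PVTOL's implementation: the member names, `leafCount()`, `depth()` and `makeTwoCellCluster()` are all hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for NodeModel: flat, per-node information.
struct NodeModel {
    std::string name;
};

// Hypothetical stand-in for MachineModel: a node plus child models.
class MachineModel {
public:
    // Flat constructor: node information only.
    explicit MachineModel(NodeModel node) : node_(std::move(node)) {}

    // Hierarchical, homogeneous constructor: `count` copies of one child.
    MachineModel(NodeModel node, std::size_t count, const MachineModel& child)
        : node_(std::move(node)), children_(count, child) {}

    // Hierarchical, heterogeneous constructor: an array of different children.
    MachineModel(NodeModel node, std::vector<MachineModel> children)
        : node_(std::move(node)), children_(std::move(children)) {}

    const std::string& name() const { return node_.name; }

    // Number of leaf models (models without children) in this subtree.
    std::size_t leafCount() const {
        if (children_.empty()) return 1;
        std::size_t total = 0;
        for (const MachineModel& c : children_) total += c.leafCount();
        return total;
    }

    // Number of layers in the subtree, counting this one.
    std::size_t depth() const {
        std::size_t deepest = 0;
        for (const MachineModel& c : children_) {
            const std::size_t d = c.depth();
            if (d > deepest) deepest = d;
        }
        return 1 + deepest;
    }

private:
    NodeModel node_;
    std::vector<MachineModel> children_;
};

// Builds the 2-Cell cluster described above, leaf first.
inline MachineModel makeTwoCellCluster() {
    MachineModel mmLS(NodeModel{"LS"});
    MachineModel mmSPE(NodeModel{"SPE"}, 1, mmLS);
    MachineModel mmCell(NodeModel{"CELL"}, 8, mmSPE);
    return MachineModel(NodeModel{"CLUSTER"}, 2, mmCell);
}
```

Because every subtree is itself a `MachineModel`, the same functions work on a single SPE, a Cell, or the whole cluster, which is the property the design slide asks for.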

Machine Model Design Benefits

Simplest case (mapping an array onto a cluster of nodes) can be defined in a familiar fashion (PVL, pMatlab).

[Figure: array A* mapped onto a Dell Cluster (Dell 0, Dell 1, Dell 2, ...)]

clusterMap =
  grid: 1x8
  dist: block
  nodes: 0:7

Ability to define heterogeneous models allows execution of different tasks on very different systems.

[Figure: CLUSTER containing CELL 0 ... and a Dell Cluster ...]

taskMap =
  nodes: [Cell Dell]


Hierarchical Arrays UML

[UML diagram. Classes shown: View (Vector, Matrix, Tensor), Iterator, Block, Map (RuntimeMap, LocalMap), LayerManager (DistributedManager, DiskLayerManager, NodeLayerManager, OocLayerManager, HwLayerManager, SwLayerManager, TileLayerManager), and the corresponding mappings (DistributedMapping, DiskLayerMapping, NodeLayerMapping, OocLayerMapping, HwLayerMapping, SwLayerMapping, TileLayerMapping). Multiplicities of 0..* appear on three associations.]

Isomorphism

Layer 1: CELL Cluster → CELL 0, CELL 1
  Map: grid: 1x2, dist: block, nodes: 0:1, map: cellMap
  Manager: NodeLayerManager (upperIf: , lowerIf: heap)

Layer 2: CELL → SPE 0 … SPE 7
  Map: grid: 1x4, dist: block, policy: default, nodes: 0:3, map: speMap
  Manager: SwLayerManager (upperIf: heap, lowerIf: heap)

Layer 3: SPE → LS
  Map: grid: 4x1, dist: block, policy: default
  Manager: TileLayerManager (upperIf: heap, lowerIf: tile)

Machine model, maps, and layer managers are isomorphic

Hierarchical Array Mapping

Machine Model
  CELL Cluster → CELL 0, CELL 1 → SPE 0 … SPE 7 (per CELL) → LS (per SPE)

Hierarchical Map
clusterMap =
  grid: 1x2
  dist: block
  nodes: 0:1
  map: cellMap

cellMap =
  grid: 1x4
  dist: block
  policy: default
  nodes: 0:3
  map: speMap

speMap =
  grid: 4x1
  dist: block
  policy: default

Hierarchical Array
[Figure: the array is split across CELL 0 and CELL 1, then across SPE 0 … SPE 7 of each CELL, then into blocks held in each SPE's LS]

*Assumption: each block fits into the local store (LS) of the SPE. CELL X implicitly includes main memory.
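To make the nesting concrete, the sketch below computes which rows and columns of a 2D array one tile covers under the three maps above (1x2 over cells, 1x4 over SPEs, 4x1 over tiles). It is an illustrative calculation only: `Range`, `blockPart` and `tileRegion` are hypothetical names, and the rule that leftover elements go to the first pieces is an assumed convention, not something the slides specify.

```cpp
#include <cassert>
#include <cstddef>

// Half-open index range [begin, end).
struct Range {
    std::size_t begin;
    std::size_t end;
};

// Block distribution: split `r` into `parts` contiguous pieces and return
// piece `idx`. Assumed convention: the first (size % parts) pieces get one
// extra element.
inline Range blockPart(Range r, std::size_t parts, std::size_t idx) {
    const std::size_t n = r.end - r.begin;
    const std::size_t base = n / parts;
    const std::size_t extra = n % parts;
    const std::size_t lo = r.begin + idx * base + (idx < extra ? idx : extra);
    const std::size_t len = base + (idx < extra ? 1 : 0);
    return Range{lo, lo + len};
}

// Rows and columns covered by one tile.
struct Region {
    Range rows;
    Range cols;
};

// clusterMap 1x2: columns over cells. cellMap 1x4: columns over SPEs.
// speMap 4x1: rows over tiles that are loaded one at a time.
inline Region tileRegion(std::size_t rows, std::size_t cols,
                         std::size_t cell, std::size_t spe, std::size_t tile) {
    const Range cellCols = blockPart(Range{0, cols}, 2, cell);
    const Range speCols = blockPart(cellCols, 4, spe);
    const Range tileRows = blockPart(Range{0, rows}, 4, tile);
    return Region{tileRows, speCols};
}
```

The first two levels are spatial (different processors own different columns), while the last level is temporal (one SPE steps through its row blocks in turn).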

Spatial vs. Temporal Maps

• Spatial Maps
– Distribute across multiple processors
– Physical
   – Distribute across multiple processors
– Logical
   – Assign ownership of array indices in main memory to tile processors
   – May have a deep or shallow copy of data
• Temporal Maps
– Partition data owned by a single storage unit into multiple blocks
– Storage unit loads one block at a time
– E.g. Out-of-core, caches

[Figure: CELL Cluster → CELL 0, CELL 1 → SPE 0 … SPE 3 … → LS, annotated with the maps (grid: 1x2, dist: block, nodes: 0:1, map: cellMap), (grid: 1x4, dist: block, policy: default, nodes: 0:3, map: speMap) and (grid: 4x1, dist: block, policy: default)]

Layer Managers

• Manage the data distributions between adjacent levels in the machine model

NodeLayerManager
  upperIf:
  lowerIf: heap
  Spatial distribution between nodes

DiskLayerManager
  upperIf:
  lowerIf: disk
  Spatial distribution between disks

OocLayerManager
  upperIf: disk
  lowerIf: heap
  Temporal distribution between a node’s disk and main memory (deep copy)

SwLayerManager
  upperIf: heap
  lowerIf: heap
  Spatial distribution between two layers in main memory (shallow/deep copy)

HwLayerManager
  upperIf: heap
  lowerIf: cache
  Temporal distribution between main memory and cache (deep/shallow copy)

TileLayerManager
  upperIf: heap
  lowerIf: tile
  Temporal distribution between main memory and tile processor memory (deep copy)

Note: These managers imply that there is main memory at the SPE level

Tile Iterators

• Iterators are used to access temporally distributed tiles
• Kernel iterators
– Used within kernel expressions
• User iterators
– Instantiated by the programmer
– Used for computation that cannot be expressed by kernels
– Row-, column-, or plane-order
• Data management policies specify how to access a tile
– Save data
– Load data
– Lazy allocation (pMappable)
– Double buffering (pMappable)

[Figure: Row-major Iterator visiting tiles 1, 2, 3, 4 of an array on a CELL Cluster (CELL 0, CELL 1; SPE 0, SPE 1)]
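A row-order user iterator can be pictured as a counter over the tile grid that converts its position into a (row, column) tile index. The class below is a minimal sketch under that assumption; `TileIndex` and `RowMajorTileIterator` are hypothetical names, and loading or saving the tile data (the data management policies) is not modelled.

```cpp
#include <cassert>
#include <cstddef>

// Position of one tile in the tile grid (hypothetical type).
struct TileIndex {
    std::size_t row;
    std::size_t col;
};

// Minimal row-major iterator over a tileRows x tileCols grid of tiles.
// Assumes tileCols > 0.
class RowMajorTileIterator {
public:
    RowMajorTileIterator(std::size_t tileRows, std::size_t tileCols,
                         std::size_t pos = 0)
        : rows_(tileRows), cols_(tileCols), pos_(pos) {}

    // Current tile; the column index varies fastest.
    TileIndex operator*() const {
        return TileIndex{pos_ / cols_, pos_ % cols_};
    }

    RowMajorTileIterator& operator++() {
        ++pos_;
        return *this;
    }

    bool operator!=(const RowMajorTileIterator& other) const {
        return pos_ != other.pos_;
    }

    // One-past-the-last position for the same grid.
    RowMajorTileIterator end() const {
        return RowMajorTileIterator(rows_, cols_, rows_ * cols_);
    }

private:
    std::size_t rows_;
    std::size_t cols_;
    std::size_t pos_;
};
```

A column-order iterator would only swap the division and remainder in `operator*`, which is why the traversal order can be a property of the iterator rather than of the array.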

Pulse Compression Example

[Figure: a stream of CPIs (CPI 0, CPI 1, CPI 2, …) passes through the stages DIT, DAT and DOT on a CELL Cluster with CELL 0, CELL 1 and CELL 2; each CELL has SPE 0, SPE 1, … with local stores (LS)]


API Requirements

• Support transitioning from serial to parallel to hierarchical code without significantly rewriting code

[Figure: transition paths.
PVL: Uniprocessor (fits in main memory), Parallel processor (fits in main memory), Embedded parallel processor (fits in main memory).
PVTOL: Uniprocessor (fits in main memory), Uniprocessor w/ cache optimizations (fits in cache), Parallel processor (fits in main memory), Parallel processor w/ cache optimizations (fits in cache), Parallel tiled processor (fits in tile memory).]

Data Types

• Block types
– Dense
• Element types
– int, long, short, char, float, double, long double
• Layout types
– Row-, column-, plane-major
• Dense<int Dims, class ElemType, class LayoutType>

• Views
– Vector, Matrix, Tensor
• Map types
– Local, Runtime, Auto
• Vector<class ElemType, class BlockType, class MapType>

Data Declaration Examples

Serial

// Create tensor
typedef Dense<3, float, tuple<0, 1, 2> > dense_block_t;
typedef Tensor<float, dense_block_t, LocalMap> tensor_t;
tensor_t cpi(Nchannels, Npulses, Nranges);

Parallel

// Node map information
Grid grid(Nprocs, 1, 1, Grid::ARRAY);     // Grid
DataDist dist(3);                         // Block distribution
Vector<int> procs(Nprocs);                // Processor ranks
procs(0) = 0; ...
ProcList procList(procs);                 // Processor list
RuntimeMap cpiMap(grid, dist, procList);  // Node map

// Create tensor
typedef Dense<3, float, tuple<0, 1, 2> > dense_block_t;
typedef Tensor<float, dense_block_t, RuntimeMap> tensor_t;
tensor_t cpi(Nchannels, Npulses, Nranges, cpiMap);

Data Declaration Examples

Hierarchical

// Tile map information
Grid tileGrid(1, NTiles, 1, Grid::ARRAY);                // Grid
DataDist tileDist(3);                                    // Block distribution
DataMgmtPolicy tilePolicy(DataMgmtPolicy::DEFAULT);      // Data mgmt policy
RuntimeMap tileMap(tileGrid, tileDist, tilePolicy);      // Tile map

// Tile processor map information
Grid tileProcGrid(NTileProcs, 1, 1, Grid::ARRAY);        // Grid
DataDist tileProcDist(3);                                // Block distribution
Vector<int> tileProcs(NTileProcs);                       // Processor ranks
tileProcs(0) = 0; ...
ProcList tileProcList(tileProcs);                        // Processor list
DataMgmtPolicy tileProcPolicy(DataMgmtPolicy::DEFAULT);  // Data mgmt policy
RuntimeMap tileProcMap(tileProcGrid, tileProcDist, tileProcList,
                       tileProcPolicy, tileMap);         // Tile processor map

// Node map information
Grid grid(Nprocs, 1, 1, Grid::ARRAY);                    // Grid
DataDist dist(3);                                        // Block distribution
Vector<int> procs(Nprocs);                               // Processor ranks
procs(0) = 0;
ProcList procList(procs);                                // Processor list
RuntimeMap cpiMap(grid, dist, procList,
                  tileProcMap);                          // Node map

// Create tensor
typedef Dense<3, float, tuple<0, 1, 2> > dense_block_t;
typedef Tensor<float, dense_block_t, RuntimeMap> tensor_t;
tensor_t cpi(Nchannels, Npulses, Nranges, cpiMap);

Pulse Compression Example

Untiled version

// Declare weights and cpi tensors
tensor_t cpi(Nchannels, Npulses, Nranges, cpiMap),
         weights(Nchannels, Npulses, Nranges, cpiMap);

// Declare FFT objects
Fftt<float, float, 2, fft_fwd> fftt;
Fftt<float, float, 2, fft_inv> ifftt;

// Iterate over CPI's
for (i = 0; i < Ncpis; i++) {
  // DIT: Load next CPI from disk
  ...

  // DAT: Pulse compress CPI
  output = ifftt(weights * fftt(cpi));

  // DOT: Save pulse compressed CPI to disk
  ...
}

Tiled version

// Declare weights and cpi tensors
tensor_t cpi(Nchannels, Npulses, Nranges, cpiMap),
         weights(Nchannels, Npulses, Nranges, cpiMap);

// Declare FFT objects
Fftt<float, float, 2, fft_fwd> fftt;
Fftt<float, float, 2, fft_inv> ifftt;

// Iterate over CPI's
for (i = 0; i < Ncpis; i++) {
  // DIT: Load next CPI from disk
  ...

  // DAT: Pulse compress CPI, one tile at a time
  dataIter = cpi.beginLinear(0, 1);
  weightsIter = weights.beginLinear(0, 1);
  outputIter = output.beginLinear(0, 1);
  while (dataIter != cpi.endLinear()) {
    *outputIter = ifftt(*weightsIter * fftt(*dataIter));
    dataIter++; weightsIter++; outputIter++;
  }

  // DOT: Save pulse compressed CPI to disk
  ...
}

Kernelized tiled version is identical to untiled version

Setup Assign API

• Library overhead can be reduced by an initialization time expression setup
– Store PITFALLS communication patterns
– Allocate storage for temporaries
– Create computation objects, such as FFTs

Assignment Setup Example

// Expressions stored in Equation object
Equation eq1(a, b*c + d);
Equation eq2(f, a / d);

for( ... ) {
  ...
  // Expressions invoked without re-stating expression
  eq1();
  eq2();
  ...
}

Expression objects can hold setup information without duplicating the equation
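The idea of binding an expression once and invoking it many times can be sketched with a small class that stores the destination and a callable. This is only an analogy for the pattern: the `Equation` below is a hypothetical stand-in that evaluates element by element, and it performs none of the setup work listed above (communication patterns, temporaries, FFT objects).

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

using Vec = std::vector<double>;

// Hypothetical Equation: binds a destination and an element-wise expression
// once, then re-evaluates it on every call without restating the expression.
class Equation {
public:
    Equation(Vec& dst, std::function<double(std::size_t)> expr)
        : dst_(dst), expr_(std::move(expr)) {}

    // Evaluate the stored expression into the destination.
    void operator()() {
        for (std::size_t i = 0; i < dst_.size(); ++i) dst_[i] = expr_(i);
    }

private:
    Vec& dst_;                                 // destination, held by reference
    std::function<double(std::size_t)> expr_;  // stored right-hand side
};
```

With vectors `a`, `b`, `c`, `d` of equal length, `Equation eq1(a, [&](std::size_t i) { return b[i] * c[i] + d[i]; });` plays the role of `Equation eq1(a, b*c + d);`, and each later `eq1();` picks up the current contents of the operands.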

Redistribution: Assignment

Programmer writes ‘A=B’
Corner turn dictated by maps, data ordering (row-major vs. column-major)

[Figure: arrays A and B, each mapped over the hierarchy Cell Cluster → Individual Cells (CELL 0, CELL 1) → Individual SPEs (SPE 0 … SPE 7) → SPE Local Stores (LS)]

• Main memory is the highest level where all of A and B are in physical memory. PVTOL performs the redistribution at this level. PVTOL also performs the data reordering during the redistribution.
• PVTOL ‘commits’ B’s local store memory blocks to main memory, ensuring memory coherency
• PVTOL ‘invalidates’ all of A’s local store blocks at the lower layers, causing the layer manager to re-load the blocks from main memory when they are accessed.

PVTOL A=B Redistribution Process:
1. PVTOL ‘commits’ B’s resident temporal memory blocks.
2. PVTOL descends the hierarchy, performing PITFALLS intersections.
3. PVTOL stops descending once it reaches the highest set of map nodes at which all of A and all of B are in physical memory.
4. PVTOL performs the redistribution at this level, reordering data and performing element-type conversion if necessary.
5. PVTOL ‘invalidates’ A’s temporal memory blocks.
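The commit and invalidate steps (1 and 5) can be illustrated with a toy two-level array in which one block at a time is resident in a lower layer. The sketch is an assumption-laden simplification: `TiledArray` and its members are hypothetical, both arrays are assumed to have the same size, tile offsets are assumed to stay in bounds, and steps 2 to 4 (hierarchy descent, PITFALLS intersections, reordering) are reduced to a plain copy at the main-memory level.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy two-level array: `main_` models main memory, `tile_` models the one
// block currently resident in a lower (temporal) layer such as a local store.
class TiledArray {
public:
    TiledArray(std::size_t n, std::size_t tileLen)
        : main_(n, 0.0), tile_(tileLen, 0.0) {}

    // Make the tile starting at `offset` resident, reusing it if still valid.
    void load(std::size_t offset) {
        if (valid_ && offset_ == offset) return;
        commit();
        offset_ = offset;
        for (std::size_t i = 0; i < tile_.size(); ++i)
            tile_[i] = main_[offset + i];
        valid_ = true;
        dirty_ = false;
    }

    // Writes touch only the resident tile, so main memory becomes stale.
    void writeTile(std::size_t i, double v) { tile_[i] = v; dirty_ = true; }
    double readTile(std::size_t i) const { return tile_[i]; }

    // 'Commit': flush the resident block back to main memory.
    void commit() {
        if (!valid_ || !dirty_) return;
        for (std::size_t i = 0; i < tile_.size(); ++i)
            main_[offset_ + i] = tile_[i];
        dirty_ = false;
    }

    // 'Invalidate': force the next load to re-read main memory.
    void invalidate() { valid_ = false; dirty_ = false; }

    // A = B: commit B, copy at the main-memory level, invalidate A.
    void assignFrom(TiledArray& b) {
        b.commit();
        main_ = b.main_;
        invalidate();
    }

private:
    std::vector<double> main_;
    std::vector<double> tile_;
    std::size_t offset_ = 0;
    bool valid_ = false;
    bool dirty_ = false;
};
```

Without the commit, A would miss values that B had written only to its resident block; without the invalidate, A could keep serving a block that predates the assignment.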

Redistribution: Copying

Programmer creates new view using copy constructor with a new hierarchical map

Deep copy
– A allocates a hierarchy based on its hierarchical map
– Commit B’s local store memory blocks to main memory
– A allocates its own memory and copies contents of B

Shallow copy
– A allocates a hierarchy based on its hierarchical map
– Commit B’s local store memory blocks to main memory
– A shares the memory allocated by B. No copying is performed

[Figure: A and B on the CELL Cluster (CELL 0, CELL 1; SPE 0 … SPE 7; LS), each with its own hierarchical map. Map entries shown: (grid: 1x2, dist: block, nodes: 0:1, map: cellMap); (grid: 4x1, dist: block, policy: default, nodes: 0:3, map: speMap); (grid: 1x4, dist: block, policy: default, nodes: 0:3, map: speMap); (grid: 1x4, dist: block, policy: default); (grid: 4x1, dist: block, policy: default)]

Pulse Compression + Doppler Filtering Example

[Figure: a stream of CPIs (CPI 0, CPI 1, CPI 2, …) on a CELL Cluster with CELL 0, CELL 1 and CELL 2; CELL SPEs shown as SPE 0, SPE 1, … and SPE 0 … SPE 3, … with local stores (LS); stage labels DIT, DIT, DOT]


Tasks & Conduits

A means of decomposing a problem into a set of asynchronously coupled sub-problems (a pipeline)

[Figure: Task 1, Task 2 and Task 3 connected by Conduit A, Conduit B and Conduit C]

• Each Task is SPMD
• Conduits transport distributed data objects (i.e. Vector, Matrix, Tensor) between Tasks
• Conduits provide multi-buffering
• Conduits allow easy task replication
• Tasks may be separate processes or may co-exist as different threads within a process

Tasks w/ Implicit Task Objects

[UML: Task with Task Function, Thread, Map and Communicator; 0..* Sub-Task, 0..* sub-Map, 0..* sub-Communicator]

• A PVTOL Task consists of a distributed set of Threads that use the same communicator
• Task Function: roughly equivalent to the “run” method of a PVL task
• Threads may be either preemptive or cooperative*

Task ≈ Parallel Thread

* PVL task state machines provide primitive cooperative multi-threading

Cooperative vs. Preemptive Threading

Cooperative User Space Threads (e.g. GNU Pth)
[Figure: Thread 1 and Thread 2 hand control to each other through a User Space Scheduler via yield( ) and return from yield( )]
• PVTOL calls yield( ) instead of blocking while waiting for I/O
• O/S support of multithreading not needed
• Underlying communication and computation libs need not be thread safe
• SMPs cannot execute tasks concurrently

Preemptive Threads (e.g. pthread)
[Figure: Thread 1 and Thread 2 are switched by the O/S Scheduler on interrupt or I/O wait, and resume on return from interrupt]
• SMPs can execute tasks concurrently
• Underlying communication and computation libs must be thread safe

PVTOL can support both threading styles via an internal thread wrapper layer

Task API

Support functions get values for current task SPMD
• length_type pvtol::num_processors();
• const_Vector<processor_type> pvtol::processor_set();

Task API
• template<class T>
  pvtol::tid pvtol::spawn(
      void (*TaskFunction)(T&),
      T& params,
      Map& map);
• int pvtol::tidwait(pvtol::tid);

Similar to typical thread API except for spawn map

Explicit Conduit UML (Parent Task Owns Conduit)

[UML: PVTOL Task, Thread, ThreadFunction; Application Parent Task (Parent) and Application Function (Child); PVTOL Conduit with Conduit Data Reader (0..*) and Conduit Data Writer (1); PVTOL Data Object]

• Parent task owns the conduits
• Application tasks own the endpoints (i.e. readers & writers)
• Multiple Readers are allowed
• Only one writer is allowed
• Reader & Writer objects manage a Data Object and provide a PVTOL view of the comm buffers

Implicit Conduit UML (Factory Owns Conduit)

[UML: PVTOL Task, Thread, ThreadFunction; Conduit Factory Function and Application Function; PVTOL Conduit with Conduit Data Reader (0..*) and Conduit Data Writer (1); PVTOL Data Object]

• Factory task owns the conduits
• Application tasks own the endpoints (i.e. readers & writers)
• Multiple Readers are allowed
• Only one writer is allowed
• Reader & Writer objects manage a Data Object and provide a PVTOL view of the comm buffers

Conduit API

Conduit Declaration API
• template<class T>
  class Conduit {
  public:
    Conduit( );
    Reader& getReader( );
    Writer& getWriter( );
  };

Conduit Reader API
• template<class T>
  class Reader {
  public:
    Reader( Domain<n> size, Map map, int depth );
    void setup( Domain<n> size, Map map, int depth );
    void connect( );             // block until conduit ready
    pvtolPtr<T> read( );         // block until data available
    T& data( );                  // return reader data object
  };

Conduit Writer API
• template<class T>
  class Writer {
  public:
    Writer( Domain<n> size, Map map, int depth );
    void setup( Domain<n> size, Map map, int depth );
    void connect( );             // block until conduit ready
    pvtolPtr<T> getBuffer( );    // block until buffer available
    void write( pvtolPtr<T> );   // write buffer to destination
    T& data( );                  // return writer data object
  };

Note: the Reader and Writer connect( ) methods block waiting for conduits to finish initializing and perform a function similar to PVL’s two phase initialization

Conceptually Similar to the PVL Conduit API
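The blocking behaviour described for read( ) and getBuffer( ) resembles a bounded producer/consumer queue whose capacity corresponds to the conduit depth. The class below is a minimal single-process sketch of that behaviour using the C++ standard library; `SimpleConduit` is a hypothetical name, it moves values rather than distributed data objects, and it supports one logical reader, whereas the slides allow several.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <utility>

// Hypothetical in-process conduit: a bounded queue of `depth` buffers.
// write() blocks while all buffers are full; read() blocks while none is ready.
template <class T>
class SimpleConduit {
public:
    explicit SimpleConduit(std::size_t depth) : depth_(depth) {}

    // Hand a filled buffer to the reader side.
    void write(T value) {
        std::unique_lock<std::mutex> lock(mutex_);
        notFull_.wait(lock, [this] { return queue_.size() < depth_; });
        queue_.push_back(std::move(value));
        notEmpty_.notify_one();
    }

    // Take the oldest filled buffer.
    T read() {
        std::unique_lock<std::mutex> lock(mutex_);
        notEmpty_.wait(lock, [this] { return !queue_.empty(); });
        T value = std::move(queue_.front());
        queue_.pop_front();
        notFull_.notify_one();
        return value;
    }

    // Number of filled buffers waiting to be read.
    std::size_t pending() const {
        std::lock_guard<std::mutex> lock(mutex_);
        return queue_.size();
    }

private:
    const std::size_t depth_;
    mutable std::mutex mutex_;
    std::condition_variable notFull_;
    std::condition_variable notEmpty_;
    std::deque<T> queue_;
};
```

With a depth of two, a writer can run one buffer ahead of the reader, which is the double-buffering case; larger depths give deeper multi-buffering.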

Task & Conduit API Example w/ Explicit Conduits

typedef struct { Domain<2> size; int depth; int numCpis; } DatParams;
int DataInputTask(const DitParams*);
int DataAnalysisTask(const DatParams*);
int DataOutputTask(const DotParams*);

int main( int argc, char* argv[])
{
  …
  // Conduits created in parent task
  Conduit<Matrix<Complex<Float>>> conduit1;
  Conduit<Matrix<Complex<Float>>> conduit2;

  // Pass Conduits to children via Task parameters
  DatParams datParams = …;
  datParams.inp = conduit1.getReader( );
  datParams.out = conduit2.getWriter( );

  // Spawn Tasks
  vsip::tid ditTid = vsip::spawn( DataInputTask, &ditParams, ditMap );
  vsip::tid datTid = vsip::spawn( DataAnalysisTask, &datParams, datMap );
  vsip::tid dotTid = vsip::spawn( DataOutputTask, &dotParams, dotMap );

  // Wait for Completion
  vsip::tidwait( ditTid );
  vsip::tidwait( datTid );
  vsip::tidwait( dotTid );
}

“Main Task” creates Conduits, passes to sub-tasks as parameters, and waits for them to terminate

DAT Task & Conduit Example w/ Explicit Conduits

int DataAnalysisTask(const DatParams* p)
{
  // Declare and Load Weights
  Vector<Complex<Float>> weights( p->cols, replicatedMap );
  ReadBinary (weights, "weights.bin" );

  // Complete conduit initialization
  Conduit<Matrix<Complex<Float>>>::Reader inp( p->inp );
  inp.setup(p->size, map, p->depth);
  Conduit<Matrix<Complex<Float>>>::Writer out( p->out );
  out.setup(p->size, map, p->depth);

  // connect( ) blocks until conduit is initialized
  inp.connect( );
  out.connect( );

  for(int i=0; i<p->numCpis; i++) {
    // Reader::read( ) blocks until data is received
    pvtolPtr<Matrix<Complex<Float>>> inpData( inp.read() );
    // Writer::getBuffer( ) blocks until output buffer is available
    pvtolPtr<Matrix<Complex<Float>>> outData( out.getBuffer() );
    (*outData) = ifftm( vmmul( weights, fftm( *inpData, VSIP_ROW ),
                               VSIP_ROW ) );
    // Writer::write( ) sends the data
    out.write(outData);
    // pvtolPtr destruction implies reader extract
  }
  return 0;
}

Sub-tasks are implemented as ordinary functions

DIT-DAT-DOT Task & Conduit API Example w/ Implicit Conduits

typedef struct { Domain<2> size; int depth; int numCpis; } TaskParams;
int DataInputTask(const InputTaskParams*);
int DataAnalysisTask(const AnalysisTaskParams*);
int DataOutputTask(const OutputTaskParams*);

int main( int argc, char* argv[ ])
{
  …
  // Conduits NOT created in parent task
  TaskParams params = …;

  // Spawn Tasks
  vsip::tid ditTid = vsip::spawn( DataInputTask, &params, ditMap );
  vsip::tid datTid = vsip::spawn( DataAnalysisTask, &params, datMap );
  vsip::tid dotTid = vsip::spawn( DataOutputTask, &params, dotMap );

  // Wait for Completion
  vsip::tidwait( ditTid );
  vsip::tidwait( datTid );
  vsip::tidwait( dotTid );
}

“Main Task” just spawns sub-tasks and waits for them to terminate

DAT Task & Conduit

Example/w Implicit Conduits

int DataAnalysisTask(const AnalysisTaskParams* p)
{
  Vector<Complex<Float>> weights( p->cols, replicatedMap );
  ReadBinary( weights, "weights.bin" );
  Conduit<Matrix<Complex<Float>>>::Reader inp( "inpName", p->size, map, p->depth );
  Conduit<Matrix<Complex<Float>>>::Writer out( "outName", p->size, map, p->depth );
  inp.connect( );
  out.connect( );
  for( int i = 0; i < p->numCpis; i++ ) {
    pvtolPtr<Matrix<Complex<Float>>> inpData( inp.read() );
    pvtolPtr<Matrix<Complex<Float>>> outData( out.getBuffer() );
    (*outData) = ifftm( vmmul( weights, fftm( *inpData, VSIP_ROW ) ), VSIP_ROW );
    out.write( outData );
  }
}

• Constructors communicate w/ factory to find other end based on name
• connect( ) blocks until conduit is initialized
• Reader::getHandle( ) blocks until data is received
• Writer::getHandle( ) blocks until output buffer is available
• Writer::write( ) sends the data
• pvtolPtr destruction implies reader extract

Implicit Conduits connect using a “conduit name”
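Name-based connection can be pictured as a lookup in a shared registry. The following is a minimal single-process sketch, not PVTOL's implementation: `Channel`, `findOrCreateChannel`, and the `int` payload are hypothetical stand-ins, and a real conduit would block rather than assert when no data is available.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <queue>
#include <string>

// Hypothetical stand-in for the shared state behind one named conduit.
struct Channel {
    std::queue<int> buffers;  // an int stands in for a Matrix buffer
};

// Hypothetical factory: returns the channel registered under `name`,
// creating it on first use so either end may be constructed first.
inline std::shared_ptr<Channel> findOrCreateChannel(const std::string& name) {
    static std::map<std::string, std::shared_ptr<Channel> > registry;
    std::shared_ptr<Channel>& slot = registry[name];
    if (!slot) {
        slot = std::make_shared<Channel>();
    }
    return slot;
}

class Writer {
public:
    explicit Writer(const std::string& name) : channel_(findOrCreateChannel(name)) {}
    void write(int value) { channel_->buffers.push(value); }
private:
    std::shared_ptr<Channel> channel_;
};

class Reader {
public:
    explicit Reader(const std::string& name) : channel_(findOrCreateChannel(name)) {}
    bool hasData() const { return !channel_->buffers.empty(); }
    int read() {
        assert(hasData());  // a real conduit would block here instead
        const int value = channel_->buffers.front();
        channel_->buffers.pop();
        return value;
    }
private:
    std::shared_ptr<Channel> channel_;
};
```

A `Writer` and a `Reader` constructed with the same name share one channel, while a `Reader` constructed with a different name sees nothing. Neither task needs a handle created by the parent.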


Conduits and Hierarchical Data Objects

Conduit connections may be:
• Non-hierarchical to non-hierarchical
• Non-hierarchical to hierarchical
• Hierarchical to non-hierarchical
• Hierarchical to hierarchical

Example task function w/ hierarchical mappings on conduit input & output data

…
input.connect();
output.connect();
for(int i=0; i<nCpi; i++) {
  pvtolPtr<Matrix<Complex<Float>>> inp( input.getHandle( ) );
  pvtolPtr<Matrix<Complex<Float>>> oup( output.getHandle( ) );
  do {
    *oup = processing( *inp );
    // Per-time Conduit communication possible (implementation dependent)
    inp->getNext( );
    oup->getNext( );
  } while (more-to-do);
  output.write( oup );
}

Conduits insulate each end of the conduit from the other’s mapping


Replicated Task Mapping

• Replicated tasks allow conduits to abstract away round-robin parallel pipeline stages
• Good strategy for when tasks reach their scaling limits

[Figure: Task 1, a “Replicated” Task 2 (Rep #0, Rep #1, Rep #2), and Task 3, connected by Conduit A, Conduit B, and Conduit C.]

Replicated mapping can be based on a 2D task map (i.e. each row in the map is a replica mapping, and the number of rows is the number of replicas).
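The round-robin choice over a 2D task map can be sketched as follows. This is an illustration only: `TaskMap2D`, `replicaForCpi`, and `processorsForCpi` are hypothetical names, and the slides do not say how PVTOL selects a replica internally.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Each row of the 2D task map lists the processor ranks of one replica.
typedef std::vector<std::vector<int> > TaskMap2D;

// Round-robin choice: data set `cpiIndex` goes to replica (cpiIndex mod R),
// where R is the number of rows (replicas) in the map.
inline std::size_t replicaForCpi(const TaskMap2D& map, std::size_t cpiIndex) {
    assert(!map.empty());
    return cpiIndex % map.size();
}

// Processor ranks that will handle data set `cpiIndex`.
inline const std::vector<int>& processorsForCpi(const TaskMap2D& map,
                                                std::size_t cpiIndex) {
    return map[replicaForCpi(map, cpiIndex)];
}
```

With three rows in the map, consecutive data sets go to replicas 0, 1, 2, 0, 1, and so on, which is the rotation the conduit hides from the neighboring tasks.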


PVTOL and Map Types

PVTOL distributed arrays are templated on map type.

• LocalMap: The matrix is not distributed
• RuntimeMap: The matrix is distributed and all map information is specified at runtime
• AutoMap: The map is either fully defined, partially defined, or undefined (the focus of this section)

Notional matrix construction:

Matrix<float, Dense, AutoMap> mat1(rows, cols);

• float: Specifies the data type, i.e. double, complex, int, etc.
• Dense: Specifies the storage layout
• AutoMap: Specifies the map type

pMapper and Execution in PVTOL

pMapper is an automatic mapping system
• uses lazy evaluation
• constructs a signal flow graph
• maps the signal flow graph at data access

[Figure: two paths lead from APPLICATION to OUTPUT. One is labeled "All maps are of type LocalMap or RuntimeMap". The other is labeled "At least one map of type AutoMap (unspecified, partial) is present". Blocks shown: SIGNAL FLOW EXTRACTOR, SIGNAL FLOW GRAPH, EXPERT MAPPING SYSTEM, PERFORM. MODEL, ATLAS, EXECUTOR/SIMULATOR.]
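Lazy evaluation with mapping deferred to data access can be illustrated with a toy graph. This sketch assumes nothing about pMapper's real classes: `SfgNode` and `LazyGraph` are hypothetical, and "mapping" is reduced to setting a flag.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// One node of a toy signal flow graph: an operation and its input nodes.
struct SfgNode {
    std::string op;
    std::vector<std::size_t> inputs;
    bool mapped;
};

class LazyGraph {
public:
    LazyGraph() : mappingPasses_(0) {}

    // Recording an operation only appends a node; nothing is mapped or run.
    std::size_t record(const std::string& op,
                       const std::vector<std::size_t>& inputs) {
        for (std::size_t i = 0; i < inputs.size(); ++i) {
            assert(inputs[i] < nodes_.size());
        }
        SfgNode node;
        node.op = op;
        node.inputs = inputs;
        node.mapped = false;
        nodes_.push_back(node);
        return nodes_.size() - 1;
    }

    // Data access is the trigger: map every node recorded so far, once.
    void access(std::size_t id) {
        assert(id < nodes_.size());
        if (nodes_[id].mapped) {
            return;
        }
        for (std::size_t i = 0; i < nodes_.size(); ++i) {
            nodes_[i].mapped = true;
        }
        ++mappingPasses_;
    }

    std::size_t mappedCount() const {
        std::size_t count = 0;
        for (std::size_t i = 0; i < nodes_.size(); ++i) {
            if (nodes_[i].mapped) ++count;
        }
        return count;
    }

    std::size_t mappingPasses() const { return mappingPasses_; }

private:
    std::vector<SfgNode> nodes_;
    std::size_t mappingPasses_;
};
```

Deferring the decision lets the mapper see the whole chain of operations before choosing maps, instead of mapping each array in isolation as it is declared.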

Examples of Partial Maps

A partially specified map has one or more of the map attributes unspecified at one or more layers of the hierarchy.

Examples:

• Grid: 1x4, Dist: block, Procs: (unspecified)
  pMapper will figure out which 4 processors to use.

• Grid: 1x*, Dist: block, Procs: (unspecified)
  pMapper will figure out how many columns the grid should have and which processors to use; note that if the processor list was provided the map would become fully specified.

• Grid: 1x4, Dist: (unspecified), Procs: 0:3
  pMapper will figure out whether to use block, cyclic, or block-cyclic distribution.

• Grid: (unspecified), Dist: block, Procs: (unspecified)
  pMapper will figure out what grid to use and on how many processors; this map is very close to being completely unspecified.

pMapper
• will be responsible for determining attributes that influence performance
• will not discover whether a hierarchy should be present
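The examples above can be captured in a small data structure. This is a sketch under stated assumptions: `PartialMap`, `Dist`, and `isFullySpecified` are hypothetical names for a single level of hierarchy, a grid dimension of 0 stands for `*` or an empty entry, and a `*` dimension is treated as inferable from the processor count, in line with the note on the `1x*` example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum Dist { DIST_UNSPECIFIED, DIST_BLOCK, DIST_CYCLIC, DIST_BLOCK_CYCLIC };

// Hypothetical one-level map description.
struct PartialMap {
    int gridRows;            // 0 means unspecified ('*' or empty)
    int gridCols;            // 0 means unspecified ('*' or empty)
    Dist dist;
    std::vector<int> procs;  // empty means unspecified
};

// True when nothing is left for an automatic mapper to decide.
inline bool isFullySpecified(const PartialMap& m) {
    assert(m.gridRows >= 0 && m.gridCols >= 0);
    if (m.dist == DIST_UNSPECIFIED || m.procs.empty()) {
        return false;
    }
    const std::size_t n = m.procs.size();
    const std::size_t rows = static_cast<std::size_t>(m.gridRows);
    const std::size_t cols = static_cast<std::size_t>(m.gridCols);
    if (rows > 0 && cols > 0) {
        return n == rows * cols;
    }
    // Assumption: one '*' dimension can be inferred from the processor count.
    if (rows > 0) return n % rows == 0;
    if (cols > 0) return n % cols == 0;
    return false;
}
```

Under this model the first example (no processor list) is partial, and the second becomes fully specified once four processors are listed.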


pMapper UML Diagram

[Figure: UML class diagram; the legend distinguishes pMapper classes from non-pMapper classes.]

pMapper is only invoked when an AutoMap-templated PvtolView is created.

pMapper & Application

// Create input tensor (flat)
typedef Dense<3, float, tuple<0, 1, 2> > dense_block_t;
typedef Tensor<float, dense_block_t, AutoMap> tensor_t;
tensor_t input(Nchannels, Npulses, Nranges);

// Create input tensor (hierarchical)
AutoMap tileMap;
AutoMap tileProcMap(tileMap);
AutoMap cpiMap(grid, dist, procList, tileProcMap);
typedef Dense<3, float, tuple<0, 1, 2> > dense_block_t;
typedef Tensor<float, dense_block_t, AutoMap> tensor_t;
tensor_t input(Nchannels, Npulses, Nranges, cpiMap);

• For each Pvar in the Signal Flow Graph (SFG), pMapper checks if the map is fully specified
• If it is, pMapper will move on to the next Pvar
• pMapper will not attempt to remap a pre-defined map
• If the map is not fully specified, pMapper will map it
• When a map is being determined for a Pvar, the map returned has all the levels of hierarchy specified, i.e. all levels are mapped at the same time

[Figure: flowchart. For each Pvar: if the AutoMap is fully specified, get the next Pvar; if the AutoMap is partially specified or unspecified, map the Pvar, then get the next Pvar.]
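The per-Pvar decision loop can be sketched as below. `Pvar` and `mapSignalFlowGraph` are hypothetical, and the actual mapping decision is replaced by a placeholder that simply marks the map as specified.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical record for one parallel variable in the signal flow graph.
struct Pvar {
    std::string name;
    bool fullySpecified;   // true when every level of the map is defined
    bool mappedByPMapper;  // true when the mapper chose this map
};

// Walks the Pvars, leaves pre-defined maps untouched, and maps the rest.
// Returns the number of Pvars that were mapped in this pass.
inline std::size_t mapSignalFlowGraph(std::vector<Pvar>& pvars) {
    std::size_t mapped = 0;
    for (std::size_t i = 0; i < pvars.size(); ++i) {
        Pvar& v = pvars[i];
        if (v.fullySpecified) {
            continue;  // never remap a pre-defined map
        }
        // Placeholder for the real decision: all hierarchy levels are
        // assigned together, so the map is complete after this step.
        v.fullySpecified = true;
        v.mappedByPMapper = true;
        ++mapped;
    }
    assert(mapped <= pvars.size());
    return mapped;
}
```

Running the pass a second time maps nothing, since every map is fully specified after the first pass.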


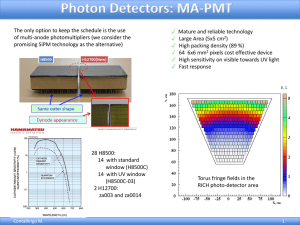

Mercury Cell Processor Test System

Mercury Cell Processor System
• Single Dual Cell Blade
– Native tool chain
– Two 2.4 GHz Cells running in SMP mode
– Terra Soft Yellow Dog Linux 2.6.14
• Received 03/21/06
– booted & running same day
– integrated w/ LL network < 1 wk
– Octave (Matlab clone) running
– Parallel VSIPL++ compiled
• Each Cell has 153.6 GFLOPS (single precision); 307.2 GFLOPS for the system @ 2.4 GHz (maximum)

Software includes:
• IBM Software Development Kit (SDK)
– Includes example programs
• Mercury Software Tools
– MultiCore Framework (MCF)
– Scientific Algorithms Library (SAL)
– Trace Analysis Tool and Library (TATL)
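The quoted peak figures follow from simple arithmetic. The breakdown below assumes 8 SPEs per Cell, 4 single-precision lanes per 128-bit SIMD instruction, and one fused multiply-add (2 floating-point operations) per lane per cycle; these per-SPE assumptions are not stated on the slide.

```latex
\begin{align*}
\text{per SPE}  &= 4\ \text{lanes} \times 2\ \tfrac{\text{flops}}{\text{lane}\cdot\text{cycle}} \times 2.4\ \text{GHz} = 19.2\ \text{GFLOPS} \\
\text{per Cell} &= 8\ \text{SPEs} \times 19.2\ \text{GFLOPS} = 153.6\ \text{GFLOPS} \\
\text{system}   &= 2\ \text{Cells} \times 153.6\ \text{GFLOPS} = 307.2\ \text{GFLOPS}
\end{align*}
```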


Cell Model

Element Interconnect Bus
• 4 ring buses
• Each ring 16 bytes wide
• ½ processor speed
• Max bandwidth 96 bytes / cycle (204.8 GB/s @ 3.2 GHz)

Synergistic Processing Element
• 128 SIMD Registers, 128 bits wide
• Dual issue instructions

Local Store
• 256 KB Flat memory

Memory Flow Controller
• Built in DMA Engine

64-bit PowerPC (AS)
• VMX, GPU, FPU, LS, …
• L1 and L2

• PPE and SPEs need different programming models
– SPEs MFC runs concurrently with program
– PPE cache loading is noticeable
– PPE has direct access to memory

Hard to use SPMD programs on PPE and SPE


Compiler Support

• GNU gcc
– gcc, g++ for PPU and SPU
– Supports SIMD C extensions
• IBM XLC
– C, C++ for PPU, C for SPU
– Supports SIMD C extensions
– Promises transparent SIMD code (vadd does not produce SIMD code in SDK)
• IBM Octopiler
– Promises automatic parallel code with DMA
– Based on OpenMP

• GNU provides familiar product
• IBM’s goal is easier programmability
• Will it be enough for high performance customers?


Mercury’s MultiCore Framework (MCF)

MCF provides a network across Cell’s coprocessor elements.
• Manager (PPE) distributes data to Workers (SPEs)
• Synchronization API for Manager and its workers
• Workers receive task and data in “channels”
• Worker teams can receive different pieces of data
• DMA transfers are abstracted away by “channels”
• Workers remain alive until network is shutdown

MCF’s API provides a Task Mechanism whereby workers can be passed any computational kernel.

Can be used in conjunction with Mercury’s SAL (Scientific Algorithm Library)

Mercury’s MultiCore Framework (MCF)

MCF provides API data distribution “channels” across processing elements that can be managed by PVTOL.

Sample MCF API functions

The slide groups the functions under Initialization/Shutdown, Channel Management, and Data Transfer.

Manager Functions
mcf_m_net_create( )
mcf_m_net_initialize( )
mcf_m_net_add_task( )
mcf_m_net_add_plugin( )
mcf_m_team_run_task( )
mcf_m_team_wait( )
mcf_m_net_destroy( )
mcf_m_mem_alloc( )
mcf_m_mem_free( )
mcf_m_mem_shared_alloc( )
mcf_m_tile_channel_create( )
mcf_m_tile_channel_destroy( )
mcf_m_tile_channel_connect( )
mcf_m_tile_channel_disconnect( )
mcf_m_tile_distribution_create_2d( )
mcf_m_tile_distribution_destroy( )
mcf_m_tile_channel_get_buffer( )
mcf_m_tile_channel_put_buffer( )
mcf_m_dma_pull( )
mcf_m_dma_push( )
mcf_m_dma_wait( )

Worker Functions
mcf_w_main( )
mcf_w_mem_alloc( )
mcf_w_mem_free( )
mcf_w_mem_shared_attach( )
mcf_w_tile_channel_create( )
mcf_w_tile_channel_destroy( )
mcf_w_tile_channel_connect( )
mcf_w_tile_channel_disconnect( )
mcf_w_tile_channel_is_end_of_channel( )
mcf_w_tile_channel_get_buffer( )
mcf_w_tile_channel_put_buffer( )
mcf_w_dma_pull_list( )
mcf_w_dma_push_list( )
mcf_w_dma_pull( )
mcf_w_dma_push( )
mcf_w_dma_wait( )


Cell PPE – SPE Manager / Worker Relationship

[Figure: PPE, Main Memory, and SPE, with the following sequence of steps.]

• PPE loads data into Main Memory
• PPE launches SPE kernel expression
• SPE loads data from Main Memory to & from its local store
• SPE writes results back to Main Memory
• SPE indicates that the task is complete

PPE (manager) “farms out” work to the SPEs (workers)
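The manager/worker sequence can be simulated in a single process. In this sketch a `std::vector` stands in for main memory, a small buffer stands in for the SPE local store, and `std::copy` stands in for DMA transfers; `workerScaleInPlace` and `managerRun` are hypothetical names, and the scaling kernel is a placeholder for real processing.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Worker side: stream `mainMemory` through a small local buffer tile by tile.
// `tileElems` stands in for the amount of data that fits in local store.
inline void workerScaleInPlace(std::vector<float>& mainMemory,
                               std::size_t tileElems, float gain) {
    assert(tileElems > 0);
    std::vector<float> localStore(tileElems);
    for (std::size_t start = 0; start < mainMemory.size(); start += tileElems) {
        const std::size_t count = std::min(tileElems, mainMemory.size() - start);
        // "DMA in": main memory to local store
        std::copy(mainMemory.begin() + start,
                  mainMemory.begin() + start + count, localStore.begin());
        // Compute on the local copy only
        for (std::size_t i = 0; i < count; ++i) {
            localStore[i] *= gain;
        }
        // "DMA out": local store back to main memory
        std::copy(localStore.begin(), localStore.begin() + count,
                  mainMemory.begin() + start);
    }
}

// Manager side: load data into main memory, launch the worker kernel,
// and return once the worker has finished.
inline std::vector<float> managerRun(std::size_t n, std::size_t tileElems) {
    std::vector<float> mainMemory(n, 1.0f);
    workerScaleInPlace(mainMemory, tileElems, 2.0f);
    return mainMemory;
}
```

Calling `managerRun(10, 4)` processes tiles of 4, 4, and 2 elements. The worker never holds more than one tile at a time, which is the constraint a 256 KB local store imposes.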


SPE Kernel Expressions

• PVTOL application
– Written by user
– Can use expression kernels to perform computation
• Expression kernels
– Built into PVTOL
– PVTOL will provide multiple kernels, e.g.
  Simple: +, -, *, FFT
  Complex: Pulse compression, Doppler filtering, STAP
• Expression kernel loader
– Built into PVTOL
– Launched onto tile processors when PVTOL is initialized
– Runs continuously in background

Kernel Expressions are effectively SPE overlays


SPE Kernel Proxy Mechanism

PVTOL Expression or pMapper SFG Executor (on PPE):

Matrix<Complex<Float>> inP(…);
Matrix<Complex<Float>> outP (…);
outP=ifftm(vmmul(fftm(inP)));

Check Signature, Match, Call

Pulse Compress SPE Proxy (on PPE):

pulseCompress(
  Vector<Complex<Float>>& wgt,
  Matrix<Complex<Float>>& inP,
  Matrix<Complex<Float>>& outP
);

struct PulseCompressParamSet ps;
ps.src=wgt.data,inP.data
ps.dst=outP.data
ps.mappings=wgt.map,inP.map,outP.map
MCF_spawn(SpeKernelHandle, ps);

Lightweight Spawn (Name, Parameter Set)

Pulse Compress Kernel (on SPE):

get mappings from input param;
set up data streams;
while(more to do) {
  get next tile;
  process;
  write tile;
}

Kernel Proxies map expressions or expression fragments to available SPE kernels
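The check-signature, match, and call sequence can be sketched as a lookup table keyed by an expression signature. Everything here is hypothetical: the string signature format, `KernelProxyTable`, and the integer stand-in kernels are illustrative, and the fallback to a direct implementation is an assumption about what happens when no kernel matches.

```cpp
#include <cassert>
#include <map>
#include <string>

typedef int (*KernelFn)(int);

// Stand-ins: a "kernel" that would run on an accelerator, and a direct
// implementation that runs on the main processor.
inline int pulseCompressKernel(int x) { return x + 100; }
inline int directImplementation(int x) { return x; }

class KernelProxyTable {
public:
    // Register a kernel under the expression signature it implements.
    void add(const std::string& signature, KernelFn kernel) {
        assert(kernel != 0);
        table_[signature] = kernel;
    }

    // Check the signature; on a match call the kernel, otherwise fall back.
    int evaluate(const std::string& signature, int input, bool* usedKernel) const {
        std::map<std::string, KernelFn>::const_iterator it = table_.find(signature);
        const bool match = (it != table_.end());
        if (usedKernel) {
            *usedKernel = match;
        }
        return match ? it->second(input) : directImplementation(input);
    }

private:
    std::map<std::string, KernelFn> table_;
};
```

Registering `pulseCompressKernel` under `"ifftm(vmmul(fftm))"` routes that expression to the kernel, while an unregistered signature such as `"fftm"` falls through to the direct path.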


Kernel Proxy UML Diagram

[Figure: UML diagram with the following elements.
User Code: Program Statement (0..), Expression (0..).
Library Code on the Manager/Main Processor: Direct Implementation, SPE Computation Kernel Proxy, FPGA Computation Kernel Proxy, Computation Library (FFTW, etc).
Worker: SPE Computation Kernel, FPGA Computation Kernel.]

This architecture is applicable to many types of accelerators (e.g. FPGAs, GPUs)


DIT-DAT-DOT on Cell Example

[Figure: timeline of CPI 1, CPI 2, and CPI 3 moving through steps 1 to 4 across PPE DIT, PPE DAT, PPE DOT, and the SPE Pulse Compression Kernel.]

Explicit Tasks

PPE DIT:
for (…) {
  read data;
  outcdt.write( );        // step 1
}

PPE DAT:
for (…) {
  incdt.read( );          // step 1
  pulse_comp ( );         // step 2
  outcdt.write( );        // steps 3, 4
}

PPE DOT:
for (…) {
  incdt.read( );
  write data;
}

SPE Pulse Compression Kernel:
for (each tile) {
  load from memory;
  out=ifftm(vmmul(fftm(inp)));
  write to memory;
}

Implicit Tasks

PPE:
for (…) {
  a=read data;            // DIT
  b=a;
  c=pulse_comp(b);        // DAT
  d=c;
  write_data(d);          // DOT
}

SPE Pulse Compression Kernel:
for (each tile) {
  load from memory;
  out=ifftm(vmmul(fftm(inp)));
  write to memory;
}


Benchmark Description

Benchmark Hardware
• Mercury Dual Cell Testbed
• PPEs
• SPEs (1 to 16 SPEs)

Benchmark Software
• Octave (Matlab clone)
• Simple FIR Proxy
• SPE FIR Kernel

Based on HPEC Challenge Time Domain FIR Benchmark


Time Domain FIR Algorithm

[Figure: a single filter (example size 4, taps 0 to 3) slides along the reference input data (points 0 to n-1) to form dot products; each dot product produces one output point (points 0 to M-1).]

• Number of Operations:
  k – Filter size
  n – Input size
  nf – Number of filters
  Total FLOPs: ~ 8 x nf x n x k
• Output Size: n + k - 1
• TDFIR uses complex data
• TDFIR uses a bank of filters
– Each filter is used in a tapered convolution
– A convolution is a series of dot products

HPEC Challenge Parameters TDFIR

Set   k     n      nf
1     128   4096   64
2     12    1024   20

FIR is one of the best ways to demonstrate FLOPS
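A direct time-domain FIR over complex data, together with the operation count quoted above, can be sketched as follows. The function names `tdfir` and `tdfirFlops` are illustrative and are not taken from the HPEC Challenge reference code; the factor of 8 is read here as 6 floating-point operations for a complex multiply plus 2 for the complex accumulate.

```cpp
#include <cassert>
#include <complex>
#include <cstddef>
#include <vector>

typedef std::complex<float> cfloat;

// Full convolution of one input vector (length n) with one filter (length k).
// The output has n + k - 1 points; each is a dot product of the filter with
// the overlapping part of the input.
inline std::vector<cfloat> tdfir(const std::vector<cfloat>& input,
                                 const std::vector<cfloat>& filter) {
    assert(!input.empty() && !filter.empty());
    std::vector<cfloat> output(input.size() + filter.size() - 1,
                               cfloat(0.0f, 0.0f));
    for (std::size_t i = 0; i < input.size(); ++i) {
        for (std::size_t j = 0; j < filter.size(); ++j) {
            output[i + j] += input[i] * filter[j];
        }
    }
    return output;
}

// Approximate operation count for a bank of nf filters:
// 8 real operations per complex multiply-accumulate.
inline unsigned long long tdfirFlops(unsigned long long nf,
                                     unsigned long long n,
                                     unsigned long long k) {
    return 8ULL * nf * n * k;
}
```

With these definitions, parameter set 1 (k = 128, n = 4096, nf = 64) comes to 268,435,456 operations per pass and set 2 (k = 12, n = 1024, nf = 20) to 1,966,080.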


Performance Time Domain FIR (Set 1)

[Figure: two plots on logarithmic axes, dated 04-Aug-2006, for Cell @ 2.4 GHz with 1, 2, 4, 8, and 16 SPEs. Left: TIME (seconds) vs. Number of Iterations (L). Right: GIGAFLOPS vs. Number of Iterations (L). Constants: M = 64, N = 4096, K = 128.]

Set 1 has a bank of 64 size 128 filters with size 4096 input vectors

• Octave runs TDFIR in a loop
– Averages out overhead
– Applications typically run convolutions many times

Maximum GFLOPS for TDFIR #1 @ 2.4 GHz

# SPE     1    2    4    8     16
GFLOPS    16   32   63   126   253
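Scaling can be read off the Set 1 table with two simple ratios. The helper names below are illustrative, and the 307.2 GFLOPS peak used in the note that follows is the system maximum quoted earlier for the dual-Cell testbed at 2.4 GHz.

```cpp
#include <cassert>

// Speedup of a P-SPE run relative to the 1-SPE run.
inline double speedup(double gflopsOnP, double gflopsOn1) {
    assert(gflopsOn1 > 0.0);
    return gflopsOnP / gflopsOn1;
}

// Parallel efficiency: speedup divided by the number of SPEs.
inline double parallelEfficiency(double gflopsOnP, double gflopsOn1, int p) {
    assert(p > 0);
    return speedup(gflopsOnP, gflopsOn1) / p;
}

// Fraction of the theoretical peak that was measured.
inline double fractionOfPeak(double measuredGflops, double peakGflops) {
    assert(peakGflops > 0.0);
    return measuredGflops / peakGflops;
}
```

For the 16-SPE entry, `speedup(253.0, 16.0)` is about 15.8 and `fractionOfPeak(253.0, 307.2)` is about 0.82. Because the table values are rounded, these ratios are approximate.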


Performance Time Domain FIR (Set 2)

[Figure: two plots on logarithmic axes, dated 25-Aug-2006, for Cell @ 2.4 GHz with 1, 2, 4, 8, and 16 SPEs. Left: TIME (seconds) vs. Number of Iterations (L). Right: GIGAFLOPS vs. Number of Iterations (L). Constants: M = 20, N = 1024, K = 12.]

Set 2 has a bank of 20 size 12 filters with size 1024 input vectors

• TDFIR set 2 scales well with the number of processors
– Runs are less stable than set 1

GFLOPS for TDFIR #2 @ 2.4 GHz

# SPE     1    2    4    8    16
GFLOPS    10   21   44   85   185


Summary

Goal: Prototype advanced software technologies to exploit novel processors for DoD sensors
• Have demonstrated 10x performance benefit of tiled processors
• Novel storage should provide 10x more IO

[Figure: Tiled Processors; CPU in disk drive.]

Approach: Develop Parallel Vector Tile Optimizing Library (PVTOL) for high performance and ease-of-use

[Figure: Automated Parallel Mapper (arrays A, B, C and FFT stages on processors P0, P1, P2); PVTOL; Hierarchical Arrays; two ~1 TByte RAID disks.]

DoD Relevance: Essential for flexible, programmable sensors with large IO and processing requirements

[Figure: Wideband Digital Arrays; Massive Storage.]
• Wide area data
• Collected over many time scales

Mission Impact:
• Enabler for next-generation synoptic, multi-temporal sensor systems

Technology Transition Plan
• Coordinate development with sensor programs
• Work with DoD and Industry standards bodies

[Figure: DoD Software Standards.]