Backpropagation


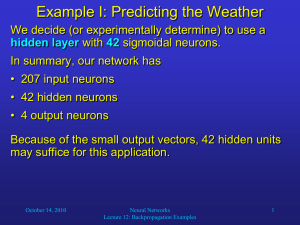

University of Belgrade
School of Electrical Engineering
Chair of Computer Engineering and Information Theory
Data Mining and Semantic Web

Neural Networks: Backpropagation algorithm
Miroslav Tišma (tisma.etf@gmail.com)
23.12.2011.

What is this?

You see this: [image]
But the camera sees this: [image]

Computer Vision: Car detection

Labelled examples: "Cars" and "Not a car".
Testing: What is this?

Raw image → Learning Algorithm → "Cars" / "Non"-Cars
50 x 50 pixel images → 2500 pixels (7500 if RGB)
$x = [\text{pixel 1 intensity}, \text{pixel 2 intensity}, \dots, \text{pixel 2500 intensity}]^T$
[Plot: "Cars" and "Non"-Cars plotted by pixel 1 intensity vs. pixel 2 intensity]
Quadratic features ($x_i \times x_j$): ≈3 million features, because 2500 pixel intensities give $2500 \cdot 2501 / 2 = 3{,}126{,}250$ products $x_i x_j$ with $i \le j$.

Neural Networks

• Origins: algorithms that try to mimic the brain.
• Were very widely used in the 1980s and early 1990s; popularity diminished in the late 1990s.
• Recent resurgence: state-of-the-art technique for many applications.

Neurons in the brain

Dendrites (Input), Axon (Output)

Neuron model: Logistic unit

Inputs ("input wires") $x_1, \dots, x_n$, plus $x_0 = 1$ ("bias unit"); "weights" = parameters $\Theta$.
"Output":
$$h_\Theta(x) = \frac{1}{1 + e^{-\Theta^T x}}$$
Sigmoid (logistic) activation function:
$$g(z) = \frac{1}{1 + e^{-z}}$$

Neural Network

Layer 1 ("input layer") → Layer 2 ("hidden layer") → Layer 3 ("output layer"); the input and hidden layers each include a "bias unit".

$a_i^{(j)}$ = "activation" of unit $i$ in layer $j$
$\Theta^{(j)}$ = matrix of weights controlling the function mapping from layer $j$ to layer $j+1$
If the network has $s_j$ units in layer $j$ and $s_{j+1}$ units in layer $j+1$, then $\Theta^{(j)}$ will be of dimension $s_{j+1} \times (s_j + 1)$; the extra column holds the weights of the bias unit.

Simple example: AND

$x_1, x_2 \in \{0, 1\}$; weights $-30$ (bias), $+20$, $+20$:
$$h_\Theta(x) = g(-30 + 20x_1 + 20x_2)$$

| $x_1$ | $x_2$ | $h_\Theta(x)$ |
|---|---|---|
| 0 | 0 | $g(-30) \approx 0$ |
| 0 | 1 | $g(-10) \approx 0$ |
| 1 | 0 | $g(-10) \approx 0$ |
| 1 | 1 | $g(10) \approx 1$ |

$h_\Theta(x) \approx x_1 \text{ AND } x_2$

Example: OR function

Weights $-10$ (bias), $+20$, $+20$:
$$h_\Theta(x) = g(-10 + 20x_1 + 20x_2)$$

| $x_1$ | $x_2$ | $h_\Theta(x)$ |
|---|---|---|
| 0 | 0 | $g(-10) \approx 0$ |
| 0 | 1 | $g(10) \approx 1$ |
| 1 | 0 | $g(10) \approx 1$ |
| 1 | 1 | $g(30) \approx 1$ |

$h_\Theta(x) \approx x_1 \text{ OR } x_2$

Multiple output units: One-vs-all

Classes: Pedestrian, Car, Motorcycle, Truck (one output unit per class, so $h_\Theta(x) \in \mathbb{R}^4$).
Want $h_\Theta(x) \approx [1, 0, 0, 0]^T$ when pedestrian, $h_\Theta(x) \approx [0, 1, 0, 0]^T$ when car, $h_\Theta(x) \approx [0, 0, 1, 0]^T$ when motorcycle, etc.
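The logistic unit and the AND/OR weight settings above can be checked numerically. The following is a minimal Python sketch, assuming plain Python lists for inputs and weights; the names `sigmoid`, `logistic_unit`, `AND_THETA` and `OR_THETA` are hypothetical, chosen for this illustration only.

```python
import math


def sigmoid(z: float) -> float:
    """Sigmoid (logistic) activation g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def logistic_unit(theta, x) -> float:
    """h_Theta(x) = g(Theta^T x), with the bias unit x_0 = 1 prepended to x.

    Hypothetical helper for illustration; theta = [bias weight, w1, ..., wn].
    """
    x_with_bias = [1.0] + [float(v) for v in x]
    z = sum(t * v for t, v in zip(theta, x_with_bias))
    return sigmoid(z)


# Weights [bias, w1, w2] taken from the AND and OR slides (hypothetical names).
AND_THETA = [-30.0, 20.0, 20.0]
OR_THETA = [-10.0, 20.0, 20.0]

if __name__ == "__main__":
    print("x1 x2 | AND  OR")
    for x1 in (0, 1):
        for x2 in (0, 1):
            h_and = logistic_unit(AND_THETA, [x1, x2])
            h_or = logistic_unit(OR_THETA, [x1, x2])
            # Rounding the sigmoid output recovers the Boolean value.
            print(f" {x1}  {x2} |  {round(h_and)}    {round(h_or)}")
```

Rounding each output reproduces the AND and OR truth tables. The large weights push $z$ far enough from 0 that $g(z)$ lies within about $5 \cdot 10^{-5}$ of 0 or 1 in every row.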
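Neural Network (Classification)

$L$ = total no. of layers in network
$s_l$ = no. of units (not counting bias unit) in layer $l$
(Example network: Layer 1, Layer 2, Layer 3, Layer 4, so $L = 4$.)

Binary classification: $y \in \{0, 1\}$; 1 output unit.
Multi-class classification ($K$ classes): $y \in \mathbb{R}^K$, e.g. $[1,0,0,0]^T$ (pedestrian), $[0,1,0,0]^T$ (car), $[0,0,1,0]^T$ (motorcycle), $[0,0,0,1]^T$ (truck); $K$ output units.

Cost function

Logistic regression:
$$J(\theta) = -\frac{1}{m}\sum_{i=1}^{m}\left[y^{(i)}\log h_\theta(x^{(i)}) + (1 - y^{(i)})\log\left(1 - h_\theta(x^{(i)})\right)\right] + \frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^2$$

Neural network: $h_\Theta(x) \in \mathbb{R}^K$, where $(h_\Theta(x))_k$ is the $k$-th output:
$$J(\Theta) = -\frac{1}{m}\sum_{i=1}^{m}\sum_{k=1}^{K}\left[y_k^{(i)}\log\left(h_\Theta(x^{(i)})\right)_k + (1 - y_k^{(i)})\log\left(1 - \left(h_\Theta(x^{(i)})\right)_k\right)\right] + \frac{\lambda}{2m}\sum_{l=1}^{L-1}\sum_{i=1}^{s_l}\sum_{j=1}^{s_{l+1}}\left(\Theta_{ji}^{(l)}\right)^2$$

Gradient computation

Our goal is to minimize the cost function: $\min_\Theta J(\Theta)$.
Need code to compute:
- $J(\Theta)$
- $\frac{\partial}{\partial \Theta_{ij}^{(l)}} J(\Theta)$

Backpropagation algorithm

Given one training example $(x, y)$, forward propagation through Layer 1 to Layer 4:
$$a^{(1)} = x \quad (\text{with } a_0^{(1)} = x_0 = 1)$$
$$z^{(2)} = \Theta^{(1)} a^{(1)}, \qquad a^{(2)} = g(z^{(2)}) \quad (\text{add } a_0^{(2)} = 1)$$
$$z^{(3)} = \Theta^{(2)} a^{(2)}, \qquad a^{(3)} = g(z^{(3)}) \quad (\text{add } a_0^{(3)} = 1)$$
$$z^{(4)} = \Theta^{(3)} a^{(3)}, \qquad a^{(4)} = h_\Theta(x) = g(z^{(4)})$$

Backpropagation algorithm

Intuition: $\delta_j^{(l)}$ = "error" of node $j$ in layer $l$.
For each output unit (layer $L = 4$):
$$\delta_j^{(4)} = a_j^{(4)} - y_j, \qquad a_j^{(4)} = (h_\Theta(x))_j$$
Then, moving backwards:
$$\delta^{(3)} = (\Theta^{(3)})^T \delta^{(4)} \mathbin{.*} g'(z^{(3)})$$
$$\delta^{(2)} = (\Theta^{(2)})^T \delta^{(3)} \mathbin{.*} g'(z^{(2)})$$
where $.*$ is the element-wise multiplication operator and the component of $(\Theta^{(l)})^T \delta^{(l+1)}$ belonging to the bias unit is dropped. There is no $\delta^{(1)}$, because the input layer has no error term.
The derivative of the activation function can be written as
$$g'(z^{(l)}) = a^{(l)} \mathbin{.*} (1 - a^{(l)})$$
For a single training example, ignoring regularization ($\lambda = 0$):
$$\frac{\partial}{\partial \Theta_{ij}^{(l)}} J(\Theta) = a_j^{(l)} \delta_i^{(l+1)}$$

Backpropagation algorithm

Training set $\{(x^{(1)}, y^{(1)}), \dots, (x^{(m)}, y^{(m)})\}$
Set $\Delta_{ij}^{(l)} = 0$ (for all $l, i, j$); these accumulators are used to compute $\frac{\partial}{\partial \Theta_{ij}^{(l)}} J(\Theta)$.
For $t = 1$ to $m$:
- Set $a^{(1)} = x^{(t)}$
- Perform forward propagation to compute $a^{(l)}$ for $l = 2, 3, \dots, L$
- Using $y^{(t)}$, compute $\delta^{(L)} = a^{(L)} - y^{(t)}$
- Compute $\delta^{(L-1)}, \delta^{(L-2)}, \dots, \delta^{(2)}$
- $\Delta_{ij}^{(l)} := \Delta_{ij}^{(l)} + a_j^{(l)} \delta_i^{(l+1)}$

Finally:
$$D_{ij}^{(l)} := \frac{1}{m}\Delta_{ij}^{(l)} + \frac{\lambda}{m}\Theta_{ij}^{(l)} \quad \text{if } j \neq 0$$
$$D_{ij}^{(l)} := \frac{1}{m}\Delta_{ij}^{(l)} \quad \text{if } j = 0$$
and $\frac{\partial}{\partial \Theta_{ij}^{(l)}} J(\Theta) = D_{ij}^{(l)}$.

Advantages:
- Relatively simple implementation
- Standard method that generally works well
- Many practical applications:
  * handwriting recognition, autonomous driving

Disadvantages:
- Training can be slow and computationally expensive
- Can get stuck in local minima, resulting in sub-optimal solutions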
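The batch backpropagation procedure from these slides can be sketched in dependency-free Python. This is a minimal sketch, not the author's implementation. It assumes sigmoid activations in every layer, the regularized cross-entropy cost with a $\frac{\lambda}{2m}$ penalty on the non-bias weights, and weights stored as nested lists. The helpers `init_weights` (small random initialization) and `numerical_gradient` (a finite-difference sanity check) are hypothetical additions that the slides do not cover.

```python
import math
import random

# Assumptions: sigmoid units everywhere, regularized cross-entropy cost.
# thetas[l] plays the role of Theta^(l+1): s_{l+2} rows, each holding
# s_{l+1} + 1 weights (column 0 is the bias weight).


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_weights(sizes, scale=0.5, seed=0):
    """Hypothetical helper: small random weights (not on the slides)."""
    rng = random.Random(seed)
    return [[[rng.uniform(-scale, scale) for _ in range(s_in + 1)] for _ in range(s_out)]
            for s_in, s_out in zip(sizes[:-1], sizes[1:])]


def forward(thetas, x):
    """Forward propagation: returns [a^(1), ..., a^(L)] without bias units."""
    acts = [list(x)]
    for theta in thetas:
        a_bias = [1.0] + acts[-1]  # add a_0 = 1
        z = [sum(w * a for w, a in zip(row, a_bias)) for row in theta]
        acts.append([sigmoid(v) for v in z])
    return acts


def cost(thetas, X, Y, lam):
    """Regularized cross-entropy J(Theta); bias weights are not regularized."""
    m = len(X)
    total = 0.0
    for x, y in zip(X, Y):
        h = forward(thetas, x)[-1]
        total += sum(yk * math.log(hk) + (1 - yk) * math.log(1 - hk) for hk, yk in zip(h, y))
    reg = sum(w * w for theta in thetas for row in theta for w in row[1:])
    return -total / m + lam / (2 * m) * reg


def backprop(thetas, X, Y, lam):
    """Returns D^(l) = dJ/dTheta^(l) for every layer (same shapes as thetas)."""
    m = len(X)
    Delta = [[[0.0] * len(row) for row in theta] for theta in thetas]  # Delta^(l) = 0
    for x, y in zip(X, Y):
        acts = forward(thetas, x)
        delta = [a - yk for a, yk in zip(acts[-1], y)]  # delta^(L) = a^(L) - y
        for l in range(len(thetas) - 1, -1, -1):
            a_bias = [1.0] + acts[l]
            for i, d in enumerate(delta):  # Delta += delta^(l+1) (a^(l))^T
                for j, a in enumerate(a_bias):
                    Delta[l][i][j] += d * a
            if l > 0:  # no delta for the input layer
                # (Theta^T delta) without the bias entry, times g'(z) = a .* (1 - a)
                delta = [sum(thetas[l][i][j + 1] * delta[i] for i in range(len(delta))) * a * (1 - a)
                         for j, a in enumerate(acts[l])]
    # D = Delta / m, plus (lambda / m) * Theta for every non-bias column j > 0
    return [[[Delta[l][i][j] / m + (lam / m * w if j > 0 else 0.0)
              for j, w in enumerate(row)]
             for i, row in enumerate(theta)]
            for l, theta in enumerate(thetas)]


def numerical_gradient(thetas, X, Y, lam, eps=1e-4):
    """Hypothetical sanity check (not on the slides): central differences of J."""
    grads = []
    for theta in thetas:
        g_theta = []
        for row in theta:
            g_row = []
            for j in range(len(row)):
                saved = row[j]
                row[j] = saved + eps
                plus = cost(thetas, X, Y, lam)
                row[j] = saved - eps
                minus = cost(thetas, X, Y, lam)
                row[j] = saved  # restore the weight
                g_row.append((plus - minus) / (2 * eps))
            g_theta.append(g_row)
        grads.append(g_theta)
    return grads


if __name__ == "__main__":
    sizes = [3, 5, 5, 4]  # 4 layers, K = 4 output units
    thetas = init_weights(sizes)
    X = [[0.2, -0.4, 0.7], [0.9, 0.1, -0.3]]
    Y = [[1, 0, 0, 0], [0, 1, 0, 0]]  # one-hot labels
    analytic = backprop(thetas, X, Y, lam=1.0)
    numeric = numerical_gradient(thetas, X, Y, lam=1.0)
    diff = max(abs(a - n) for ga, gn in zip(analytic, numeric)
               for ra, rn in zip(ga, gn) for a, n in zip(ra, rn))
    print("max |backprop - numerical| =", diff)
```

The script prints the largest absolute difference between the backpropagation gradients and the finite-difference estimates. If the implementation is correct, this difference should be far smaller than the gradients themselves. The nested loops mirror the per-element update $\Delta_{ij}^{(l)} := \Delta_{ij}^{(l)} + a_j^{(l)}\delta_i^{(l+1)}$ for readability. A practical implementation would vectorize them with a matrix library.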