Operation & Planning Control - Vel Tech Dr.RR & Dr.SR Technical


Operation and Planning Control

U7MEA37

Prepared by

Mr. Prabhu

Assistant Professor, Mechanical Department

VelTech Dr.RR & Dr.SR Technical University


Unit I

Linear programming


Introduction to Operations Research

• Operations research/management science

– Winston: “a scientific approach to decision making, which

seeks to determine how best to design and operate a

system, usually under conditions requiring the allocation

of scarce resources.”

– Kimball & Morse: “a scientific method of providing

executive departments with a quantitative basis for

decisions regarding the operations under their control.”

Introduction to Operations Research

• Provides rational basis for decision making

– Solves the type of complex problems that turn up in

the modern business environment

– Builds mathematical and computer models of

organizational systems composed of people, machines,

and procedures

– Uses analytical and numerical techniques to make

predictions and decisions based on these models

Introduction to Operations Research

• Draws upon

– engineering, management, mathematics

• Closely related to the "decision sciences"

– applied mathematics, computer science,

economics, industrial engineering and systems

engineering

Methodology of Operations Research*

The Seven Steps to a Good OR Analysis


•What are the

objectives?

•Is the proposed

problem too narrow?

•Is it too broad?

Methodology of Operations Research*

The Seven Steps to a Good OR Analysis

•What data should

be collected?

•How will data be

collected?

•How do different

components of the

system interact

with each other?

Methodology of Operations Research*

The Seven Steps to a Good OR Analysis

•What kind of model

should be used?

•Is the model accurate?

•Is the model too

complex?

Methodology of Operations Research*

The Seven Steps to a Good OR Analysis

•Do outputs match

current observations

for current inputs?

•Are outputs

reasonable?

•Could the model be

erroneous?

Methodology of Operations Research*

The Seven Steps to a Good OR Analysis

•What if there are

conflicting objectives?

•Inherently the most

difficult step.

•This is where software

tools will help us!

Methodology of Operations Research*

The Seven Steps to a Good OR Analysis

•Must communicate

results in layman’s

terms.

•System must be

user friendly!

Methodology of Operations Research*

The Seven Steps to a Good OR Analysis

•Users must be trained

on the new system.

•System must be

observed over time to

ensure it works

properly.

Linear Programming


Objectives

– Requirements for a linear programming model.

– Graphical representation of linear models.

– Linear programming results:

• Unique optimal solution

• Alternate optimal solutions

• Unbounded models

• Infeasible models

– Extreme point principle.


Objectives - continued

– Sensitivity analysis concepts:

• Reduced costs

• Range of optimality (covered lightly)

• Shadow prices

• Range of feasibility (covered lightly)

• Complementary slackness

• Added constraints / variables

– Computer solution of linear programming

models

• WINQSB

• EXCEL

• LINDO


3.1 Introduction to Linear

Programming

• A Linear Programming model seeks to

maximize or minimize a linear function,

subject to a set of linear constraints.

• The linear model consists of the following

components:

– A set of decision variables.

– An objective function.

– A set of constraints.

– SHOW FORMAT


• The Importance of Linear Programming

– Many real static problems lend themselves to linear

programming formulations.

– Many real problems can be approximated by linear

models.

– The output generated by linear programs provides

useful “what’s best” and “what-if” information.


Assumptions of Linear Programming

• The decision variables are continuous or divisible,

meaning that 3.333 eggs or 4.266 airplanes is an

acceptable solution

• The parameters are known with certainty

• The objective function and constraints exhibit

constant returns to scale (i.e., linearity)

• There are no interactions between decision variables


Methodology of Linear Programming

Determine and define the decision variables

Formulate an objective function

verbal characterization

Mathematical characterization

Formulate each constraint


MODEL FORMULATION

• Decision variables:

– X1 = Production level of Space Rays (in dozens per

week).

– X2 = Production level of Zappers (in dozens per

week).

• Objective Function:

– Weekly profit, to be maximized


The Objective Function

Each dozen Space Rays realizes $8 in profit.

Total profit from Space Rays is 8X1.

Each dozen Zappers realizes $5 in profit.

Total profit from Zappers is 5X2.

The total profit contributions of both is

8X1 + 5X2

(The profit contributions are additive because of

the linearity assumption)


The Linear Programming Model

Max 8X1 + 5X2 (Weekly profit)

subject to

2X1 + 1X2 <= 1200 (Plastic)

3X1 + 4X2 <= 2400 (Production Time)

X1 + X2 <= 800 (Total production)

X1 - X2 <= 450 (Mix)

Xj >= 0, j = 1,2 (Nonnegativity)
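The model above is small enough to check by enumerating corner points. The sketch below is not part of the original slides: it intersects every pair of constraint boundaries, keeps the feasible intersections, and picks the most profitable one. The names `CONSTRAINTS`, `intersection`, `is_feasible` and `best_extreme_point` are illustrative assumptions, and the approach only makes sense for two decision variables.

```python
from itertools import combinations

# Each constraint is (a1, a2, rhs), meaning a1*X1 + a2*X2 <= rhs.
# Nonnegativity is rewritten as -X1 <= 0 and -X2 <= 0.
CONSTRAINTS = [
    (2, 1, 1200),   # plastic
    (3, 4, 2400),   # production time
    (1, 1, 800),    # total production
    (1, -1, 450),   # mix
    (-1, 0, 0),     # X1 >= 0
    (0, -1, 0),     # X2 >= 0
]
PROFIT = (8, 5)     # objective coefficients: 8*X1 + 5*X2


def intersection(c1, c2):
    """Point where both constraints hold with equality (Cramer's rule)."""
    a1, b1, r1 = c1
    a2, b2, r2 = c2
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        return None  # parallel boundary lines never meet
    return ((r1 * b2 - r2 * b1) / det, (a1 * r2 - a2 * r1) / det)


def is_feasible(point, tol=1e-6):
    """True when the point satisfies every constraint, up to a small tolerance."""
    x1, x2 = point
    return all(a * x1 + b * x2 <= r + tol for a, b, r in CONSTRAINTS)


def best_extreme_point():
    """Return the feasible corner point with the largest profit."""
    corners = []
    for c1, c2 in combinations(CONSTRAINTS, 2):
        p = intersection(c1, c2)
        if p is not None and is_feasible(p):
            corners.append(p)
    return max(corners, key=lambda p: PROFIT[0] * p[0] + PROFIT[1] * p[1])


x1, x2 = best_extreme_point()
weekly_profit = PROFIT[0] * x1 + PROFIT[1] * x2
print(x1, x2, weekly_profit)
```

With the coefficients as written above, the best corner is where the plastic and production-time constraints meet: X1 = 480, X2 = 240, for a weekly profit of 5040.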


3.4 The Set of Feasible Solutions

for Linear Programs

The set of all points that satisfy all the

constraints of the model is called a

FEASIBLE REGION


Using a graphical presentation

we can represent all the constraints,

the objective function, and the three

types of feasible points.


[Figure: feasible region drawn on the X1 (horizontal) and X2 (vertical) axes, bounded by the plastic constraint 2X1+X2<=1200, the production time constraint 3X1+4X2<=2400, the total production constraint X1+X2<=800 and the production mix constraint X1-X2<=450. Points inside the region are feasible; points outside it are infeasible.]

• There are three types of feasible points: interior points, boundary points, and extreme points.


Linear Programming- Simplex method

• “...finding the maximum or minimum of linear

functions in which many variables are subject

to constraints.” (dictionary.com)

• A linear program is a “problem that requires

the minimization of a linear form subject to

linear constraints...” (Dantzig vii)

Important Note

• Linear programming requires linear

inequalities

• In other words, first degree inequalities only!

Good: ax + by + cz < 3

Bad: ax^2 + log_2(y) > 7

Let's look at an example...

• Farm that produces Apples (x) and Oranges (y)

• Each crop needs land, fertilizer, and time.

• 6 acres of land: 3x + y <= 6

• 6 tons of fertilizer: 2x + 3y <= 6

• 8 hour work day: x + 5y <= 8

• Apples sell for twice as much as oranges

• We want to maximize profit (z): 2x + y = z

• We can't produce negative: x >= 0, y >= 0

Traditional Method

x = 1.71

y = .86

z = 4.29

• Graph the inequalities

• Look at the line we're trying to maximize.

Problems...

• More variables?

• Cannot eyeball the answer?

Simplex Method

• George B. Dantzig (devised in 1947, published in 1951)

• Need to convert equations

• Slack variables

Performing the Conversion

• -z + 2x + y = 0 (Objective Equation)

• s1 + x + 5y = 8

• s2 + 2x + 3y = 6

• s3 + 3x + y = 6

• Initial feasible solution

More definitions

• Non-basic: x, y

• Basic variables: s1, s2, s3, z

• Current Solution: Set non-basic variables to 0

• -z + 2x + y = 0 => z = 0

• Valid, but not good!

Next step...

• Select a non-basic variable

– -z + 2x + 1y = 0

– x has the higher coefficient

• Select a basic variable

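– s1 + 1x + 5y = 8 (ratio 1/8)

– s2 + 2x + 3y = 6 (ratio 2/6)

– s3 + 3x + y = 6 (ratio 3/6)

• 3/6 is the highest, use equation with s3 (equivalently, s3 has the smallest ratio of right-hand side to coefficient, 6/3 = 2)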

New set of equations

• Solve for x

– x = 2 - (1/3)s3 -(1/3)y

• Substitute in to other equations to get...

– -z – (2/3)s3 +(1/3)y = -4

– s1– (1/3)s3 + (14/3)y = 6

– s2 – (2/3)s3 +(7/3)y = 2

– x + (1/3)s3 +(1/3)y = 2

Redefine everything...

• Update variables

• Non-Basic: s3 and y

• Basic: s1, s2, z, and x

• Current Solution:

– -z – (2/3)s3 + (1/3)y = -4 => z = 4

– x + (1/3)s3 + (1/3)y = 2 => x = 2

– y = 0

• Better, but not quite there.

Do it again!

• Repeat this process

• Stop repeating when no coefficient in the objective equation is positive (all are negative or zero).
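The pivoting steps above can be sketched in a few lines. This is a minimal illustration, not the slides' own code: it assumes a maximization problem with only <= constraints and nonnegative right-hand sides, so the slack variables give the initial feasible solution. The function name `simplex_max` and its arguments are hypothetical. Exact fractions are used so the intermediate equations match the hand calculation.

```python
from fractions import Fraction


def simplex_max(c, A, b):
    """Maximize c.x subject to A.x <= b, x >= 0, assuming every b[i] >= 0."""
    m, n = len(A), len(c)
    # Constraint rows [A | I | b]; the identity columns are the slack variables.
    rows = [
        [Fraction(v) for v in A[i]]
        + [Fraction(int(i == j)) for j in range(m)]
        + [Fraction(b[i])]
        for i in range(m)
    ]
    # Objective row stores the coefficients of "-z + c.x = rhs".
    obj = [Fraction(v) for v in c] + [Fraction(0)] * (m + 1)
    basis = [n + i for i in range(m)]  # the slacks start as basic variables

    while True:
        # Entering variable: the largest objective coefficient.
        col = max(range(n + m), key=lambda j: obj[j])
        if obj[col] <= 0:
            break  # no positive coefficient is left, so the solution is optimal
        # Leaving variable: smallest rhs/coefficient over positive coefficients.
        ratios = [(rows[i][-1] / rows[i][col], i)
                  for i in range(m) if rows[i][col] > 0]
        if not ratios:
            raise ValueError("problem is unbounded")
        _, r = min(ratios)
        # Pivot: scale the chosen row, then eliminate the column elsewhere.
        pivot = rows[r][col]
        rows[r] = [v / pivot for v in rows[r]]
        for i in range(m):
            if i != r and rows[i][col] != 0:
                f = rows[i][col]
                rows[i] = [v - f * p for v, p in zip(rows[i], rows[r])]
        f = obj[col]
        obj = [v - f * p for v, p in zip(obj, rows[r])]
        basis[r] = col

    x = [Fraction(0)] * n
    for i, var in enumerate(basis):
        if var < n:
            x[var] = rows[i][-1]
    return x, -obj[-1]  # the rhs of the objective row holds -z


# Farm example: maximize 2x + y with the rows ordered as s1, s2, s3.
solution, z = simplex_max([2, 1], [[1, 5], [2, 3], [3, 1]], [8, 6, 6])
print(solution, z)
```

On the farm example the first pivot brings x into the basis and removes s3, as in the hand calculation; the second brings in y. The result is x = 12/7, y = 6/7, z = 30/7, which agrees with the rounded graphical answer (1.71, 0.86, 4.29).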

Improvements

• Different kinds of inequalities

• Minimized instead of maximized

• L. G. Khachiyan's ellipsoid algorithm (1979) proved that linear programming can be solved in polynomial time

Artificial Variable Technique

(The Big-M Method)

Big-M Method of solving LPP

The Big-M method of handling instances with artificial variables is the “commonsense approach”. Essentially, the notion is to make the artificial variables, through their coefficients in the objective function, so costly or unprofitable that any feasible solution to the real problem would be preferred, unless the original instance possesses no feasible solution at all. This means that we need to assign, in the objective function, coefficients to the artificial variables that are either very large and negative (maximization problem) or very large and positive (minimization problem); whatever the magnitude of this value, let us call it Big M.

In fact, this notion is an old trick in optimization in general; we simply associate a penalty value with variables that we do not want to be part of an ultimate solution (unless such an outcome is unavoidable).

Indeed, the penalty is so costly that an artificial variable stays positive in the final solution only when the original problem has no feasible solution.

This method removes artificial variables from the basis. Here, we assign a large undesirable (unacceptable penalty) coefficient to each artificial variable in the objective function. If the objective function (Z) is to be minimized, then a very large positive price (penalty, M) is assigned to each artificial variable; if Z is to be maximized, then a very large negative price is assigned. The penalty is designated by +M for a minimization problem and by –M for a maximization problem, with M > 0.

Example: Minimize Z = 600X1 + 500X2

subject to constraints,

2X1 + X2 >= 80

X1 + 2X2 >= 60 and X1, X2 >= 0

Step1: Convert the LP problem into a system of linear

equations.

We do this by rewriting the constraint inequalities as equations: from each >= constraint we subtract a new “surplus variable” and add a new “artificial variable”, assigning them coefficients of zero and +M respectively in the objective function, as shown below.

So the Objective Function would be:

Z = 600X1 + 500X2 + 0.S1 + 0.S2 + M.A1 + M.A2

subject to constraints,

2X1 + X2 - S1 + A1 = 80

X1 + 2X2 - S2 + A2 = 60

X1, X2, S1, S2, A1, A2 >= 0

Step 2: Obtain a Basic Solution to the problem.

We do this by putting the decision variables X1=X2=S1=S2=0,

so that A1= 80 and A2=60.

These are the initial values of artificial variables.

Step 3: Form the Initial Tableau as shown.

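| CB | Basic variable (B) | Basic soln (XB) | X1 | X2 | S1 | S2 | A1 | A2 | Min. ratio (XB / pivotal col.) |
|---|---|---|---|---|---|---|---|---|---|
| | Cj | | 600 | 500 | 0 | 0 | M | M | |
| M | A1 | 80 | 2 | 1 | -1 | 0 | 1 | 0 | 80/1 = 80 |
| M | A2 | 60 | 1 | 2 | 0 | -1 | 0 | 1 | 60/2 = 30 |
| | Zj | | 3M | 3M | -M | -M | M | M | |
| | Cj - Zj | | 600-3M | 500-3M | M | M | 0 | 0 | |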

It is clear from the tableau that X2 will enter (500-3M is the most negative Cj - Zj) and A2 will leave the basis (smallest ratio). Hence 2 is the key element in the pivotal column. Now, the new row operations are as follows:

R2(New) = R2(Old)/2

R1(New) = R1(Old) - 1*R2(New)

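| CB | Basic variable (B) | Basic soln (XB) | X1 | X2 | S1 | S2 | A1 | Min. ratio (XB / pivotal col.) |
|---|---|---|---|---|---|---|---|---|
| | Cj | | 600 | 500 | 0 | 0 | M | |
| M | A1 | 50 | 3/2 | 0 | -1 | 1/2 | 1 | 100/3 |
| 500 | X2 | 30 | 1/2 | 1 | 0 | -1/2 | 0 | 60 |
| | Zj | | 3M/2+250 | 500 | -M | M/2-250 | M | |
| | Cj - Zj | | 350-3M/2 | 0 | M | 250-M/2 | 0 | |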

It is clear from the tableau that X1 will enter and A1 will leave the basis. Hence 3/2 is the key element in the pivotal column. Now, the new row operations are as follows:

R1(New) = R1(Old)*2/3

R2(New) = R2(Old) – (1/2)*R1(New)

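| CB | Basic variable (B) | Basic soln (XB) | X1 | X2 | S1 | S2 | Min. ratio (XB / pivotal col.) |
|---|---|---|---|---|---|---|---|
| | Cj | | 600 | 500 | 0 | 0 | |
| 600 | X1 | 100/3 | 1 | 0 | -2/3 | 1/3 | |
| 500 | X2 | 40/3 | 0 | 1 | 1/3 | -2/3 | |
| | Zj | | 600 | 500 | -700/3 | -400/3 | |
| | Cj - Zj | | 0 | 0 | 700/3 | 400/3 | |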

Since all the values of (Cj-Zj) are either zero or positive and also

both the artificial variables have been removed, an optimum

solution has been arrived at with X1=100/3 , X2=40/3 and

Z=80,000/3.
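As a cross-check on the tableau result, the sketch below evaluates Z at every feasible corner point of the original (two-variable) problem. It is not the Big-M method itself, only an independent verification; the names `constraints`, `corner` and `feasible` are illustrative assumptions.

```python
from fractions import Fraction
from itertools import combinations

# Constraints written as a1*X1 + a2*X2 >= rhs, including nonnegativity.
constraints = [(2, 1, 80), (1, 2, 60), (1, 0, 0), (0, 1, 0)]
cost = (600, 500)  # Z = 600*X1 + 500*X2, to be minimized


def corner(c1, c2):
    """Intersection of two constraint boundaries, or None if they are parallel."""
    (a1, b1, r1), (a2, b2, r2) = c1, c2
    det = a1 * b2 - a2 * b1
    if det == 0:
        return None
    return (Fraction(r1 * b2 - r2 * b1, det), Fraction(a1 * r2 - a2 * r1, det))


def feasible(p):
    """True when the point satisfies every >= constraint exactly."""
    return all(a * p[0] + b * p[1] >= r for a, b, r in constraints)


corners = []
for c1, c2 in combinations(constraints, 2):
    p = corner(c1, c2)
    if p is not None and feasible(p):
        corners.append(p)

best = min(corners, key=lambda p: cost[0] * p[0] + cost[1] * p[1])
z_min = cost[0] * best[0] + cost[1] * best[1]
print(best, z_min)
```

The feasible corners are (0, 80), (100/3, 40/3) and (60, 0), with Z = 40000, 80000/3 and 36000, so the minimum agrees with the final tableau.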

Unit II

Dynamic Programming

Characteristics and Examples


Overview

• What is dynamic programming?

• Examples

• Applications


What is Dynamic Programming?

• Design technique

– ‘optimization’ problems (sequence of related decisions)

– Programming does not mean ‘coding’ in this context; it means ‘solving by making a chart’ or ‘using an array to save intermediate steps’. Some books call this ‘memoization’ (see below)

– Similar to Divide and Conquer BUT subproblem solutions are SAVED and NEVER recomputed

– Principle of optimality: the optimal solution to the problem contains optimal solutions to the subproblems (Is this true for EVERYTHING?)


Characteristics

• Optimal substructure

– Unweighted shortest path?

– Unweighted longest simple path?

• Overlapping Subproblems

– What happens in recursion (D&C) when this happens?

• Memoization (not a typo!)

– Saving solutions of subproblems (like we did in Fibonacci)

to avoid recomputation
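A small sketch of what overlapping subproblems cost, and what memoization saves, using Fibonacci. The call counters and function names are illustrative additions; `functools.lru_cache` from the Python standard library does the saving of subproblem results.

```python
from functools import lru_cache

calls = {"plain": 0, "memo": 0}  # how many times each version is entered


def fib_plain(n):
    """Plain recursion: the same subproblems are recomputed many times."""
    calls["plain"] += 1
    return n if n < 2 else fib_plain(n - 1) + fib_plain(n - 2)


@lru_cache(maxsize=None)
def fib_memo(n):
    """Memoized recursion: each subproblem is computed once, then looked up."""
    calls["memo"] += 1
    return n if n < 2 else fib_memo(n - 1) + fib_memo(n - 2)


plain_result = fib_plain(20)
memo_result = fib_memo(20)
print(plain_result, calls["plain"])
print(memo_result, calls["memo"])
```

Both versions return 6765 for n = 20, but the plain recursion is entered 21891 times while the memoized one is entered only 21 times, once per distinct subproblem.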


Examples

• Matrix chain

• Longest common subsequence (called the “LCS problem”)


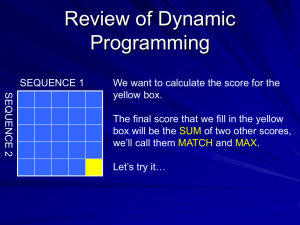

Review of Technique

• You have already applied dynamic programming and understand why it may result in a good algorithm

– Fibonacci

– Ackermann

– Combinations


Principle of Optimality

• Often called the “optimality condition”

• What it means in plain English: you apply the divide and conquer technique so the subproblems are SMALLER VERSIONS OF THE ORIGINAL PROBLEM. If you “optimize” the answers to the small problems, does that automatically mean that the solution to the big problem is also optimized? If the answer is yes, then DP applies to this problem


Example 1

• Assume your problem is to draw a straight line

between two points A and B. You solve this by

divide and conquer by drawing a line from A

to the midpoint and from the midpoint to B.

QUESTION: if you paste the two smaller lines

together will the RESULTING LINE FROM A TO

B BE THE SHORTEST DISTANCE FROM A to

B???


Example 2

• Say you want to buy Halloween candy – 100 candy bars. You plan to do this by divide and conquer – buying 10 sets of 10 bars. Is this necessarily less expensive per bar than just buying 2 packages of 50? Or perhaps 1 package of 100?


How To Apply DP

• There are TWO ways to apply dynamic

programming

– METHOD 1: solve the problem at hand recursively, notice where the same subproblem is being re-solved, and implement the algorithm as a TABLE (example: Fibonacci)

– METHOD 2: generate all feasible solutions to a

problem but prune (eliminate) the solutions that

cannot be optimal (example: shortest path)


Practice Is the Only Way to Learn This

Technique

• See class webpage for homework.

• Do problems in textbook not assigned but for

practice – even after term ends. It took me

two years after I took this course before I

could apply DP in the real world.


Dynamic programming

Design technique, like divide-and-conquer.

Example: Longest Common Subsequence (LCS)

• Given two sequences x[1 . . m] and y[1 . . n], find

a longest subsequence common to them both.

(“a” not “the”: there may be more than one longest common subsequence)

x: A B C B D A B

y: B D C A B A

BCBA = LCS(x, y) (functional notation, but not a function)


Review: Dynamic programming

• DP is a method for solving certain kinds of problems

• DP can be applied when the solution of a

problem includes solutions to subproblems

• We need to find a recursive formula for the

solution

• We can recursively solve subproblems,

starting from the trivial case, and save their

solutions in memory

• In the end we’ll get the solution of the

whole problem

4/9/2015


Properties of a problem that can be

solved with dynamic programming

• Simple Subproblems

– We should be able to break the original problem

to smaller subproblems that have the same

structure

• Optimal Substructure of the problems

– The solution to the problem must be a

composition of subproblem solutions

• Subproblem Overlap

– Different subproblems can contain smaller subproblems in common, so a shared subproblem is solved once and its solution reused


Review: Longest Common

Subsequence (LCS)

• Problem: how to find the longest pattern of

characters that is common to two text

strings X and Y

• Dynamic programming algorithm: solve

subproblems until we get the final solution

• Subproblem: first find the LCS of prefixes

of X and Y.

• this problem has optimal substructure: LCS

of two prefixes is always a part of LCS of

bigger strings


Review: Longest Common

Subsequence (LCS) continued

• Define Xi, Yj to be prefixes of X and Y of

length i and j; m = |X|, n = |Y|

• We store the length of LCS(Xi, Yj) in c[i,j]

• Trivial cases: LCS(X0 , Yj ) and LCS(Xi,

Y0) is empty (so c[0,j] = c[i,0] = 0 )

• Recursive formula for c[i,j]:

$$c[i,j] = \begin{cases} c[i-1,j-1] + 1 & \text{if } x[i] = y[j], \\ \max(c[i,j-1],\; c[i-1,j]) & \text{otherwise} \end{cases}$$

c[m,n] is the final solution
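The recurrence for c[i,j] translates directly into a table fill. The sketch below is one possible implementation, with the function name `lcs` and the traceback tie-breaking rule chosen for illustration; strings are 0-indexed in Python, so x[i] in the formula becomes `x[i - 1]` in the code.

```python
def lcs(x, y):
    """Return (length, one longest common subsequence) of strings x and y."""
    m, n = len(x), len(y)
    # c[i][j] = length of an LCS of the prefixes x[:i] and y[:j];
    # row 0 and column 0 are the trivial cases and stay 0.
    c = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            if x[i - 1] == y[j - 1]:
                c[i][j] = c[i - 1][j - 1] + 1
            else:
                c[i][j] = max(c[i][j - 1], c[i - 1][j])

    # Walk back from c[m][n] to recover one subsequence of that length.
    chars = []
    i, j = m, n
    while i > 0 and j > 0:
        if x[i - 1] == y[j - 1]:
            chars.append(x[i - 1])
            i, j = i - 1, j - 1
        elif c[i - 1][j] >= c[i][j - 1]:
            i -= 1
        else:
            j -= 1
    return c[m][n], "".join(reversed(chars))


length, subsequence = lcs("ABCBDAB", "BDCABA")
print(length, subsequence)
```

For the two example sequences the length is 4. Which particular subsequence of length 4 comes back depends on how ties are broken in the traceback, which is why the slides say “a” rather than “the” LCS.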


Review: Longest Common

Subsequence (LCS)

• After we have filled the array c[ ], we can

use this data to find the characters that

constitute the Longest Common

Subsequence

• Algorithm runs in O(m*n), which is much better than the brute-force algorithm: O(n * 2^m)


0-1 Knapsack problem

• Given a knapsack with maximum capacity W,

and a set S consisting of n items

• Each item i has some weight wi and benefit

value bi (all wi , bi and W are integer values)

• Problem: How to pack the knapsack to achieve

maximum total value of packed items?


0-1 Knapsack problem:

a picture

This is a knapsack. Max weight: W = 20

| Item | Weight wi | Benefit value bi |
|---|---|---|
| 1 | 2 | 3 |
| 2 | 3 | 4 |
| 3 | 4 | 5 |
| 4 | 5 | 8 |
| 5 | 9 | 10 |


0-1 Knapsack problem

• Problem, in other words, is to find

$$\max \sum_{i \in T} b_i \quad \text{subject to} \quad \sum_{i \in T} w_i \le W$$

The problem is called a “0-1” problem,

because each item must be entirely

accepted or rejected.

Just another version of this problem is the

“Fractional Knapsack Problem”, where we

can take fractions of items.


0-1 Knapsack problem: brute-force

approach

Let’s first solve this problem with a

straightforward algorithm

• Since there are n items, there are 2^n possible combinations of items.

• We go through all combinations and find the one with the most total value and with total weight less than or equal to W

• Running time will be O(2^n)


0-1 Knapsack problem: brute-force

approach

• Can we do better?

• Yes, with an algorithm based on dynamic

programming

• We need to carefully identify the

subproblems

Let’s try this:

If items are labeled 1..n, then a subproblem

would be to find an optimal solution for

Sk = {items labeled 1, 2, .. k}


Defining a Subproblem

If items are labeled 1..n, then a subproblem

would be to find an optimal solution for Sk

= {items labeled 1, 2, .. k}

• This is a valid subproblem definition.

• The question is: can we describe the final

solution (Sn ) in terms of subproblems (Sk)?

• Unfortunately, we can’t do that. Explanation

follows….


Defining a Subproblem

Max weight: W = 20

| Item # | Weight wi | Benefit bi |
|---|---|---|
| 1 | 2 | 3 |
| 2 | 3 | 4 |
| 3 | 4 | 5 |
| 4 | 5 | 8 |
| 5 | 9 | 10 |

For S4: the best choice is items 1, 2, 3 and 4. Total weight: 14; total benefit: 20

For S5: the best choice is items 1, 3, 4 and 5. Total weight: 20; total benefit: 26

Item 2 belongs to the solution for S4 but not to the solution for S5, so the solution for S4 is not part of the solution for S5!


Defining a Subproblem (continued)

• As we have seen, the solution for S4 is not

part of the solution for S5

• So our definition of a subproblem is flawed

and we need another one!

• Let’s add another parameter: w, which will

represent the exact weight for each subset

of items

• The subproblem then will be to compute

B[k,w]


Recursive Formula for subproblems

Recursive formula for subproblems:

$$B[k,w] = \begin{cases} B[k-1,w] & \text{if } w_k > w \\ \max\{B[k-1,w],\; B[k-1,w-w_k] + b_k\} & \text{else} \end{cases}$$

• It means, that the best subset of Sk that has

total weight w is one of the two:

1) the best subset of Sk-1 that has total weight

w, or

2) the best subset of Sk-1 that has total weight

w-wk plus the item k


Recursive Formula

$$B[k,w] = \begin{cases} B[k-1,w] & \text{if } w_k > w \\ \max\{B[k-1,w],\; B[k-1,w-w_k] + b_k\} & \text{else} \end{cases}$$

• The best subset of Sk that has the total

weight w, either contains item k or not.

• First case: wk>w. Item k can’t be part of the

solution, since if it was, the total weight

would be > w, which is unacceptable

• Second case: wk <=w. Then the item k can

be in the solution, and we choose the case

with greater value


0-1 Knapsack Algorithm

    for w = 0 to W
        B[0,w] = 0
    for i = 1 to n
        B[i,0] = 0
        for w = 1 to W
            if wi <= w // item i can be part of the solution
                if bi + B[i-1,w-wi] > B[i-1,w]
                    B[i,w] = bi + B[i-1,w-wi]
                else
                    B[i,w] = B[i-1,w]
            else B[i,w] = B[i-1,w] // wi > w
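A runnable version of the table fill, as a sketch rather than the slides' own code. The function name `knapsack` and the (weight, benefit) tuple layout are illustrative assumptions; the data are the four items and W = 5 used in the deck's worked example.

```python
def knapsack(items, capacity):
    """Fill the 0-1 knapsack table.

    items is a list of (weight, benefit) pairs; B[i][w] is the best benefit
    achievable with the first i items and weight limit w.
    """
    n = len(items)
    B = [[0] * (capacity + 1) for _ in range(n + 1)]  # row 0 and column 0 are 0
    for i in range(1, n + 1):
        wi, bi = items[i - 1]
        for w in range(1, capacity + 1):
            B[i][w] = B[i - 1][w]                   # default: leave item i out
            if wi <= w and bi + B[i - 1][w - wi] > B[i][w]:
                B[i][w] = bi + B[i - 1][w - wi]     # better: put item i in
    return B


items = [(2, 3), (3, 4), (4, 5), (5, 6)]
table = knapsack(items, 5)
print(table[len(items)][5])
```

The bottom-right cell, `table[4][5]`, is 7: the best load is items 1 and 2 (weight 2 + 3 = 5, benefit 3 + 4 = 7).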


Running time

    for w = 0 to W                  // O(W)
        B[0,w] = 0
    for i = 1 to n                  // repeat n times
        B[i,0] = 0
        for w = 1 to W              // O(W)
            < the rest of the code >

What is the running time of this algorithm?

O(n*W)

Remember that the brute-force algorithm takes O(2^n)


Example

Let’s run our algorithm on the

following data:

n = 4 (# of elements)

W = 5 (max weight)

Elements (weight, benefit):

(2,3), (3,4), (4,5), (5,6)


Example (continued): filling the table

Items (weight, benefit): 1: (2,3), 2: (3,4), 3: (4,5), 4: (5,6)

Initialisation: B[0,w] = 0 for w = 0..5 and B[i,0] = 0 for i = 0..4. Every other cell is filled, row by row, with the rule

    if wi <= w // item i can be part of the solution
        if bi + B[i-1,w-wi] > B[i-1,w]
            B[i,w] = bi + B[i-1,w-wi]
        else
            B[i,w] = B[i-1,w]
    else B[i,w] = B[i-1,w] // wi > w

Row i=1 (bi=3, wi=2)

– w=1: w-wi=-1, so wi > w and B[1,1] = B[0,1] = 0

– w=2: w-wi=0, B[1,2] = 3 + B[0,0] = 3

– w=3: w-wi=1, B[1,3] = 3 + B[0,1] = 3

– w=4: w-wi=2, B[1,4] = 3 + B[0,2] = 3

– w=5: w-wi=3, B[1,5] = 3 + B[0,3] = 3

Row i=2 (bi=4, wi=3)

– w=1: w-wi=-2, B[2,1] = B[1,1] = 0

– w=2: w-wi=-1, B[2,2] = B[1,2] = 3

– w=3: w-wi=0, 4 + B[1,0] = 4 > 3, so B[2,3] = 4

– w=4: w-wi=1, 4 + B[1,1] = 4 > 3, so B[2,4] = 4

– w=5: w-wi=2, 4 + B[1,2] = 7 > 3, so B[2,5] = 7

Row i=3 (bi=5, wi=4)

– w=1..3: wi > w, so the values are copied from row 2: 0, 3, 4

– w=4: w-wi=0, 5 + B[2,0] = 5 > 4, so B[3,4] = 5

– w=5: w-wi=1, 5 + B[2,1] = 5 < 7, so B[3,5] = B[2,5] = 7

Row i=4 (bi=6, wi=5)

– w=1..4: wi > w, so the values are copied from row 3: 0, 3, 4, 5

– w=5: w-wi=0, 6 + B[3,0] = 6 < 7, so B[4,5] = B[3,5] = 7

Completed table:

| W \ i | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0 | 0 |
| 1 | 0 | 0 | 0 | 0 | 0 |
| 2 | 0 | 3 | 3 | 3 | 3 |
| 3 | 0 | 3 | 4 | 4 | 4 |
| 4 | 0 | 3 | 4 | 5 | 5 |
| 5 | 0 | 3 | 7 | 7 | 7 |

The maximum benefit is B[4,5] = 7.

Comments

• This algorithm only finds the max possible

value that can be carried in the knapsack

• To know the items that make this maximum

value, an addition to this algorithm is

necessary

• Please see LCS algorithm from the previous

lecture for the example how to extract this

data from the table we built


Conclusion

• Dynamic programming is a useful technique for solving certain kinds of problems

• When the solution can be recursively

described in terms of partial solutions, we

can store these partial solutions and re-use

them as necessary

• Running time (Dynamic Programming

algorithm vs. naïve algorithm):

– LCS: O(m*n) vs. O(n * 2^m)

– 0-1 Knapsack problem: O(W*n) vs. O(2^n)


Unit III

Network Models

Chapter Outline

12.1 Introduction

12.2 Minimal-Spanning Tree Technique

12.3 Maximal-Flow Technique

12.4 Shortest-Route technique

Learning Objectives

Students will be able to

– Connect all points of a network while minimizing

total distance using the minimal-spanning tree

technique.

– Determine the maximum flow through a network

using the maximal-flow technique.

– Find the shortest path through a network using

the shortest-route technique.

– Understand the important role of software in

solving network problems.

Minimal-Spanning Tree Technique

• Determines the path through the network

that connects all the points while minimizing

total distance.

Minimal-Spanning Tree

Steps

1. Select any node in the network.

2. Connect this node to the nearest node that minimizes the

total distance.

3. Considering all of the nodes that are now connected, find

and connect the nearest node that is not connected.

4. Repeat the third step until all nodes are connected.

5. If there is a tie in the third step and two or more nodes that

are not connected are equally near, arbitrarily select one and

continue. A tie suggests that there might be more than one

optimal solution.
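The steps above (start anywhere, then keep connecting the nearest unconnected node) can be sketched with a priority queue. The network below is hypothetical, since the arcs of the Lauderdale Construction figure are not recoverable from the text, and the function name `minimal_spanning_tree` is an illustrative choice.

```python
import heapq


def minimal_spanning_tree(graph, start):
    """Grow a spanning tree from start by always adding the nearest new node.

    graph maps each node to a dict {neighbour: distance} and is undirected.
    Returns (list of (from, to, distance) arcs, total distance).
    """
    connected = {start}
    frontier = [(d, start, v) for v, d in graph[start].items()]
    heapq.heapify(frontier)
    tree = []
    while frontier and len(connected) < len(graph):
        d, u, v = heapq.heappop(frontier)   # nearest candidate arc
        if v in connected:
            continue                        # would close a loop, skip it
        connected.add(v)
        tree.append((u, v, d))
        for nxt, dist in graph[v].items():
            if nxt not in connected:
                heapq.heappush(frontier, (dist, v, nxt))
    return tree, sum(d for _, _, d in tree)


# Hypothetical five-node network (not the network from the slides).
arcs = [("A", "B", 3), ("A", "C", 2), ("B", "C", 4), ("B", "D", 5),
        ("C", "D", 1), ("C", "E", 6), ("D", "E", 7)]
graph = {}
for u, v, d in arcs:
    graph.setdefault(u, {})[v] = d
    graph.setdefault(v, {})[u] = d

tree, total = minimal_spanning_tree(graph, "A")
print(tree, total)
```

For this hypothetical network the tree uses arcs A-C, C-D, A-B and C-E, with a total distance of 12. Ties, when they occur, are broken by the heap ordering, which corresponds to the "arbitrarily select one" rule in step 5.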

Minimal-Spanning Tree

Lauderdale Construction

[Figure: Lauderdale Construction network of eight nodes, numbered 1 to 8, joined by arcs labelled with distances.]

[Figures: first through seventh iterations on the same network, each adding one arc to the tree.]

Seventh & final iteration: all nodes are connected.

Minimum Distance: 16

The Maximal-Flow Technique

1. Pick any path (streets from west to east) with

some flow.

2. Increase the flow (number of cars) as much as

possible.

3. Adjust the flow capacity numbers on the path

(streets).

4. Repeat the above steps until an increase in

flow is no longer possible.
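The four steps above can be sketched as a loop that finds a path with spare capacity, pushes as much flow as the path allows, and adjusts the remaining capacities. Two assumptions are made here that are not in the slides: the path is chosen by breadth-first search (the Edmonds-Karp variant) rather than "any path", and the example network is hypothetical with one-way capacities.

```python
from collections import deque


def max_flow(capacity, source, sink):
    """Return the maximal flow from source to sink.

    capacity maps each node to a dict {next_node: capacity}.
    """
    # residual[u][v] is the capacity still available from u to v.
    residual = {u: dict(adj) for u, adj in capacity.items()}
    for u, adj in capacity.items():
        for v in adj:
            residual.setdefault(v, {}).setdefault(u, 0)  # reverse arcs start at 0

    total = 0
    while True:
        # Step 1: look for a path that still has some capacity (BFS).
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, cap in residual[u].items():
                if cap > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return total  # Step 4: no further increase is possible

        # Step 2: the increase is limited by the smallest capacity on the path.
        path = []
        v = sink
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        push = min(residual[u][v] for u, v in path)

        # Step 3: subtract along the path, add in the reverse direction.
        for u, v in path:
            residual[u][v] -= push
            residual[v][u] += push
        total += push


# Hypothetical network (not the Waukesha road network from the slides).
network = {
    "west": {"a": 3, "b": 2},
    "a": {"b": 1, "east": 2},
    "b": {"east": 3},
}
flow = max_flow(network, "west", "east")
print(flow)
```

For this hypothetical network the maximal flow is 5 units. Adding capacity in the reverse direction is what lets a later iteration undo part of an earlier, poorly chosen path.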

Maximal-Flow

Road Network for Waukesha

[Figure: road network from West Point (node 1) to East Point (node 6); the flow capacity in each direction is written at the two ends of every arc.]

[Figures: first, second and third iterations, each showing the amount added and subtracted along the chosen path.]

| Path | Flow (Cars Per Hour) |
|---|---|
| 1-2-6 | 200 |
| 1-2-4-6 | 100 |
| 1-3-5-6 | 200 |
| Total | 500 |

The Shortest-Route Technique

• 1. Find the nearest node to the origin (plant). Put the

distance in a box by the node.

– In some cases, several paths will have to be checked to

find the nearest node.

• 2. Repeat this process until you have gone through the entire

network. The last distance at the ending node will be the

distance of the shortest route. You should note that the

distances placed in the boxes by each node are the shortest

route to this node. These distances are used as intermediate

results in finding the next nearest node.
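The labelling procedure above is what Dijkstra's algorithm does: repeatedly fix the nearest not-yet-labelled node and use its distance to label its neighbours. In the sketch below the arc list is an assumption: the figure for Ray Design, Inc. did not survive extraction, so the arcs were chosen to be consistent with the node labels shown on the slides (100, 150, 190 and 290). The function name `shortest_route` is also illustrative.

```python
import heapq


def shortest_route(graph, origin, destination):
    """Return (distance, list of nodes) for the shortest route."""
    dist = {origin: 0}      # best distance found so far to each node
    prev = {}               # previous node on the best route
    heap = [(0, origin)]
    fixed = set()           # nodes whose label is final
    while heap:
        d, u = heapq.heappop(heap)
        if u in fixed:
            continue        # stale heap entry
        fixed.add(u)
        if u == destination:
            break
        for v, w in graph[u].items():
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    route = [destination]
    while route[-1] != origin:
        route.append(prev[route[-1]])
    return dist[destination], route[::-1]


# ASSUMED arcs (node, node, distance); node 1 is the plant, node 6 the warehouse.
arcs = [(1, 2, 100), (1, 3, 200), (2, 3, 50), (2, 4, 200), (2, 5, 100),
        (3, 5, 40), (4, 5, 150), (4, 6, 100), (5, 6, 100)]
roads = {}
for u, v, d in arcs:
    roads.setdefault(u, {})[v] = d
    roads.setdefault(v, {})[u] = d

distance, route = shortest_route(roads, 1, 6)
print(distance, route)
```

Under these assumed arcs the route is 1-2-3-5-6 with a distance of 290, and the intermediate labels for nodes 2, 3 and 5 are 100, 150 and 190.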

Shortest-Route Problem

Ray Design, Inc.

Roads from Ray’s Plant to the Warehouse

[Figure: road network of six nodes from the plant (node 1) to the warehouse (node 6), with a distance on every arc.]

• First iteration: node 2 is labelled 100.

• Second iteration: node 3 is labelled 150.

• Third iteration: node 5 is labelled 190.

• Fourth iteration: node 6 (the warehouse) is labelled 290, the distance of the shortest route.

Project Management - CPM/PERT

Project Scheduling and Control Techniques

Gantt Chart

Critical Path Method (CPM)

Program Evaluation and Review Technique (PERT)


History of CPM/PERT

• Critical Path Method (CPM)

– E I Du Pont de Nemours & Co. (1957) for construction of new chemical plant and maintenance shut-down

– Deterministic task times

– Activity-on-node network construction

– Repetitive nature of jobs

• Program Evaluation and Review Technique (PERT)

– U S Navy (1958) for the POLARIS missile program

– Multiple task time estimates (probabilistic nature)

– Activity-on-arrow network construction

– Non-repetitive jobs (R & D work)

Project Network

• Network analysis is the general name given to certain specific techniques which can be used for the planning, management and control of projects

• Use of nodes and arrows

Arrows

An arrow leads from tail to head directionally

– Indicate ACTIVITY, a time-consuming effort that is required to perform a part of the work.

Nodes

A node is represented by a circle

– Indicate EVENT, a point in time where one or more activities start and/or finish.

• Activity

– A task or a certain amount of work required in the project

– Requires time to complete

– Represented by an arrow

• Dummy Activity

– Indicates only precedence relationships

– Does not require any time or effort

CPM calculation

• Path

– A connected sequence of activities leading from the starting event to the ending event

• Critical Path

– The longest path (time); determines the project duration

• Critical Activities

– All of the activities that make up the critical path

Forward Pass

• Earliest Start Time (ES)

– earliest time an activity can start

– ES = maximum EF of immediate predecessors

• Earliest Finish Time (EF)

– earliest time an activity can finish

– earliest start time plus activity time

EF = ES + t

Backward Pass

• Latest Start Time (LS)

– latest time an activity can start without delaying critical path time

LS = LF - t

• Latest Finish Time (LF)

– latest time an activity can be completed without delaying critical path time

LF = minimum LS of immediate successors

CPM analysis

• Draw the CPM network

• Analyze the paths through the network

• Determine the float for each activity

– Compute the activity’s float

float = LS - ES = LF - EF

– Float is the maximum amount of time by which the activity’s completion can be delayed before it becomes a critical activity, i.e., delays completion of the project

• Find the critical path: the sequence of activities and events where there is no “slack”, i.e., zero slack

– Longest path through a network

• Find the project duration, which is the minimum project completion time

Consider the table below, which summarizes the details of a project involving 10 activities.

Activity   Immediate predecessor(s)   Duration
a          -                          6
b          -                          8
c          -                          5
d          b                          13
e          c                          9
f          a                          15
g          a                          17
h          f                          9
i          g                          6
j          d, e                       12

Construct the CPM network. Determine the critical path and project completion time. Also compute the total floats and free floats for the non-critical activities.
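The forward and backward passes for this project can be sketched in Python as below. This is an illustrative sketch, not part of the original slides: the function name `cpm` and the dictionary layout are assumptions, and the code assumes activities are listed so that every predecessor appears before the activities that depend on it. Free float is taken here as the earliest start of the nearest successor minus the activity's own earliest finish.

```python
# name: (duration, immediate predecessors), predecessors listed first.
ACTIVITIES = {
    "a": (6, []), "b": (8, []), "c": (5, []),
    "d": (13, ["b"]), "e": (9, ["c"]),
    "f": (15, ["a"]), "g": (17, ["a"]),
    "h": (9, ["f"]), "i": (6, ["g"]),
    "j": (12, ["d", "e"]),
}

def cpm(activities):
    """Return (project duration, critical activities, total float, free float)."""
    # Forward pass: ES = max EF of immediate predecessors, EF = ES + t.
    es, ef = {}, {}
    for name, (t, preds) in activities.items():
        es[name] = max((ef[p] for p in preds), default=0)
        ef[name] = es[name] + t
    project = max(ef.values())

    successors = {name: [] for name in activities}
    for name, (_, preds) in activities.items():
        for p in preds:
            successors[p].append(name)

    # Backward pass: LF = min LS of immediate successors, LS = LF - t.
    ls, lf, total_float, free_float = {}, {}, {}, {}
    for name in reversed(list(activities)):
        t = activities[name][0]
        lf[name] = min((ls[s] for s in successors[name]), default=project)
        ls[name] = lf[name] - t
        total_float[name] = ls[name] - es[name]
        next_start = min((es[s] for s in successors[name]), default=project)
        free_float[name] = next_start - ef[name]

    critical = [n for n in activities if total_float[n] == 0]
    return project, critical, total_float, free_float

duration, critical, total_float, free_float = cpm(ACTIVITIES)
```

For the table above this gives a project duration of 33 with critical activities b, d, and j.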

CPM Example

• CPM Network

[Figure: CPM network for activities a to j, each labeled with its duration.]

CPM Example

• ES and EF Times, LS and LF Times, Float

Activity   Duration   ES   EF   LS   LF   Float
a          6          0    6    3    9    3
b          8          0    8    0    8    0
c          5          0    5    7    12   7
d          13         8    21   8    21   0
e          9          5    14   12   21   7
f          15         6    21   9    24   3
g          17         6    23   10   27   4
h          9          21   30   24   33   3
i          6          23   29   27   33   4
j          12         21   33   21   33   0

Project’s EF = 33

CPM Example

• Critical Path

[Figure: CPM network with the critical path highlighted. The activities with zero float are b, d, and j.]

PERT

• PERT is based on the assumption that an activity’s duration follows a probability distribution instead of being a single value

• Three time estimates are required to compute the parameters of an activity’s duration distribution:

– pessimistic time (tp) - the time the activity would take if things did not go well

– most likely time (tm) - the consensus best estimate of the activity’s duration

– optimistic time (to) - the time the activity would take if things did go well

Mean (expected time): $t_e = \dfrac{t_p + 4 t_m + t_o}{6}$

Variance: $V_t = \sigma^2 = \left(\dfrac{t_p - t_o}{6}\right)^2$

PERT analysis

• Draw the network.

• Analyze the paths through the network and find the critical path.

• The length of the critical path is the mean of the project duration probability distribution, which is assumed to be normal.

• The standard deviation of the project duration probability distribution is computed by adding the variances of the critical activities (all of the activities that make up the critical path) and taking the square root of that sum.

• Probability computations can now be made using the normal distribution table.

Probability computation

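Determine the probability that the project is completed within a specified time:

$Z = \dfrac{x - \mu}{\sigma}$

where $\mu = t_p$ = project mean time

$\sigma$ = project standard deviation

$x$ = (proposed) specified time

Normal Distribution of Project Time

[Figure: normal curve of probability against time, with the mean μ = tp and the specified time x marked; Z is the distance between them in standard deviations.]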

PERT Example

Activity   Immed. Predec.   Optimistic Time (Hr.)   Most Likely Time (Hr.)   Pessimistic Time (Hr.)
A          -                4                       6                        8
B          -                1                       4.5                      5
C          A                3                       3                        3
D          A                4                       5                        6
E          A                0.5                     1                        1.5
F          B,C              3                       4                        5
G          B,C              1                       1.5                      5
H          E,F              5                       6                        7
I          E,F              2                       5                        8
J          D,H              2.5                     2.75                     4.5
K          G,I              3                       5                        7
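As a quick check of the mean and variance formulas on this data, the following sketch computes the expected time and variance of every activity with exact fractions. The names `ESTIMATES`, `pert_estimate`, and `TABLE` are illustrative and not from the slides.

```python
from fractions import Fraction

# activity: (optimistic, most likely, pessimistic) times in hours
ESTIMATES = {
    "A": ("4", "6", "8"),     "B": ("1", "4.5", "5"),
    "C": ("3", "3", "3"),     "D": ("4", "5", "6"),
    "E": ("0.5", "1", "1.5"), "F": ("3", "4", "5"),
    "G": ("1", "1.5", "5"),   "H": ("5", "6", "7"),
    "I": ("2", "5", "8"),     "J": ("2.5", "2.75", "4.5"),
    "K": ("3", "5", "7"),
}

def pert_estimate(t_o, t_m, t_p):
    """Return (expected time, variance) from the three time estimates."""
    t_o, t_m, t_p = Fraction(t_o), Fraction(t_m), Fraction(t_p)
    expected = (t_p + 4 * t_m + t_o) / 6
    variance = ((t_p - t_o) / 6) ** 2
    return expected, variance

TABLE = {name: pert_estimate(*times) for name, times in ESTIMATES.items()}

for name, (expected, variance) in TABLE.items():
    print(name, expected, variance)
```

Using `Fraction` keeps values such as 4/9 and 1/36 exact, so they can be compared directly with a hand-computed table.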

PERT Example

PERT Network

[Figure: PERT network for activities A to K.]

PERT Example

Activity   Expected Time   Variance
A          6               4/9
B          4               4/9
C          3               0
D          5               1/9
E          1               1/36
F          4               1/9
G          2               4/9
H          6               1/9
I          5               1
J          3               1/9
K          5               4/9

PERT Example

Activity   ES   EF   LS   LF   Slack
A          0    6    0    6    0 *critical
B          0    4    5    9    5
C          6    9    6    9    0*
D          6    11   15   20   9
E          6    7    12   13   6
F          9    13   9    13   0*
G          9    11   16   18   7
H          13   19   14   20   1
I          13   18   13   18   0*
J          19   22   20   23   1
K          18   23   18   23   0*

PERT Example

Vpath = VA + VC + VF + VI + VK

= 4/9 + 0 + 1/9 + 1 + 4/9

= 2

σpath = 1.414

z = (24 - 23)/σpath = (24 - 23)/1.414 = .71

From the Standard Normal Distribution table:

P(z < .71) = .5 + .2612 = .7612
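The same probability can be computed without a printed table. The sketch below uses Python's standard-library `statistics.NormalDist`; the function name `completion_probability` is an illustrative assumption. Because it does not round z to two decimals first, the result differs slightly from the table lookup.

```python
from math import sqrt
from statistics import NormalDist

def completion_probability(specified_time, mean_time, path_variance):
    """Return (z, probability of finishing within specified_time)."""
    sigma = sqrt(path_variance)            # standard deviation of the path
    z = (specified_time - mean_time) / sigma
    return z, NormalDist().cdf(z)          # standard normal cumulative area

# Critical path A-C-F-I-K: mean 23 hours, variance 2; specified time 24 hours.
z, probability = completion_probability(24, 23, 2)
print(f"z = {z:.2f}, P = {probability:.4f}")
```

The computed probability is about 0.76, in line with the table-based value.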

PROJECT COST

Cost consideration in project

• Project managers may have the option or requirement to crash the project, or accelerate the completion of the project.

• This is accomplished by reducing the length of the critical path(s).

• The length of the critical path is reduced by reducing the duration of the activities on the critical path.

• If each activity requires the expenditure of an amount of money to reduce its duration by one unit of time, then the project manager selects the least cost critical activity, reduces it by one time unit, and traces that change through the remainder of the network.

• As a result of a reduction in an activity’s time, a new critical path may be created.

• When there is more than one critical path, each of the critical paths must be reduced.

• If the length of the project needs to be reduced further, the process is repeated.

Project Crashing

• Crashing

– reducing project time by expending additional resources

• Crash time

– an amount of time an activity is reduced

• Crash cost

– cost of reducing activity time

• Goal

– reduce project duration at minimum cost


Time-Cost Relationship

Crashing costs increase as project duration decreases

Indirect costs increase as project duration increases

Reduce project length as long as crashing costs are less than indirect costs

Time-Cost Tradeoff

[Figure: total project cost, indirect cost, and direct cost plotted against time; the minimum of the total cost curve marks the optimal project time.]

Benefits of CPM/PERT

• Useful at many stages of project management

• Mathematically simple

• Give critical path and slack time

• Provide project documentation

• Useful in monitoring costs

CPM/PERT can answer the following important questions:

• How long will the entire project take to be completed? What are the risks involved?

• Which are the critical activities or tasks in the project which could delay the entire project if they were not completed on time?

• Is the project on schedule, behind schedule or ahead of schedule?

• If the project has to be finished earlier than planned, what is the best way to do this at the least cost?

Limitations to CPM/PERT

• Clearly defined, independent and stable activities

• Specified precedence relationships

• Overemphasis on critical paths

• Deterministic CPM model

• Activity time estimates are subjective and depend on judgment

• PERT assumes a beta distribution for these time estimates, but the actual distribution may be different

• PERT consistently underestimates the expected project completion time due to alternate paths becoming critical

To overcome this limitation, Monte Carlo simulations can be performed on the network to eliminate the optimistic bias

PERT vs CPM

CPM: CPM uses activity oriented network.
PERT: PERT uses event oriented network.

CPM: Durations of activity may be estimated with a fair degree of accuracy.
PERT: Estimates of time for activities are not so accurate and definite.

CPM: It is used extensively in construction projects.
PERT: It is used mostly in research and development projects, particularly projects of non-repetitive nature.

CPM: Deterministic concept is used.
PERT: Probabilistic model concept is used.

CPM: CPM can control both time and cost when planning.
PERT: PERT is basically a tool for planning.

CPM: In CPM, cost optimization is given prime importance. The time for the completion of the project depends upon cost optimization. The cost is not directly proportional to time. Thus, cost is the controlling factor.
PERT: In PERT, it is assumed that cost varies directly with time. Attention is therefore given to minimizing the time so that minimum cost results. Thus in PERT, time is the controlling factor.

Unit IV

Inventory Management

The objective of inventory management is to strike a balance between inventory investment and customer service

Inventory control

It means stocking an adequate number and kind of stores, so that the materials are available whenever required and wherever required. Scientific inventory control results in optimal balance

What is inventory?

Inventory is the raw materials, component parts, work-in-process, or finished products that are held at a location in the supply chain.

Basic inventory model

[Figure: Input (material management department) → Inventory (money): goods in stores, work-in-progress, finished products, equipment etc. → Output (production department).]

Zero Inventory?

• Reducing amounts of raw materials and purchased parts and subassemblies by having suppliers deliver them directly.

• Reducing the amount of work-in-process by using just-in-time production.

• Reducing the amount of finished goods by shipping to markets as soon as possible.

Importance of Inventory

One of the most expensive assets of many companies, representing as much as 50% of total invested capital

Operations managers must balance inventory investment and customer service

FUNCTIONS OF INVENTORY

• To meet anticipated demand.

• To smoothen production requirements.

• To decouple operations.

[Figure: inventory shown as a buffer between supply, process, products, and demand.]

Functions Of Inventory (Cont’d)

• To protect against stock-outs.

• To take advantage of order cycles.

• To help hedge against price increases.

• To permit operations.

• To take advantage of quantity discounts.

Types of Inventory

• Raw material

– Purchased but not processed

• Work-in-process

– Undergone some change but not completed

– A function of cycle time for a product

• Maintenance/repair/operating (MRO)

– Necessary to keep machinery and processes productive

• Finished goods

– Completed product awaiting shipment

The Material Flow Cycle

[Figure: cycle time from input to output. About 95% of it is wait for inspection, wait to be moved, move time, wait in queue for operator, and setup time; about 5% is run time.]

Safety stock = (safety factor z)(std deviation in LT demand)

[Figure: normal distribution of lead-time demand showing the service level, the probability of stock-out, and the safety stock.]

Read z from Normal table for a given service level

Average Inventory = (Order Qty)/2 + Safety Stock

[Figure: inventory level against time, showing the order quantity, EOQ/2, safety stock (SS), the lead time between placing and receiving an order, and the average inventory.]
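The two formulas above can be sketched as follows. The numbers used (95% service level, a standard deviation of 20 units in lead-time demand, an order quantity of 200 units) are hypothetical values chosen only for illustration, as are the function names. Instead of a printed Normal table, z is read from `statistics.NormalDist().inv_cdf`.

```python
from statistics import NormalDist

def safety_stock(service_level, sigma_lt_demand):
    """Safety stock = z * (std deviation of lead-time demand)."""
    z = NormalDist().inv_cdf(service_level)   # safety factor for the service level
    return z * sigma_lt_demand

def average_inventory(order_qty, safety):
    """Average inventory = (order quantity)/2 + safety stock."""
    return order_qty / 2 + safety

# Hypothetical inputs, not taken from the slides.
ss = safety_stock(service_level=0.95, sigma_lt_demand=20)
avg = average_inventory(order_qty=200, safety=ss)
print(f"safety stock = {ss:.1f} units, average inventory = {avg:.1f} units")
```

A higher service level gives a larger z and therefore a larger safety stock, which raises the average inventory by the same amount.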

Managing Inventory

1. How inventory items can be classified

2. How accurate inventory records can be maintained

Inventory Models for Independent Demand

Need to determine when and how much to order

1. Basic economic order quantity

2. Production order quantity

3. Quantity discount model

Economic order quantity

EOQ = Average Monthly Consumption x Lead Time [in months] + Buffer Stock – Stock on hand

ECONOMIC ORDER QUANTITY (EOQ)

[Figure: purchasing cost and carrying cost curves.]

• Re-order level: stock level at which fresh order is placed.

• Re-order level = Average consumption per day x lead time + buffer stock

• Lead time: duration between placing an order and receipt of material

• Ideal – 2 to 6 weeks.

Basic EOQ Model

Important assumptions

1. Demand is known, constant, and independent

2. Lead time is known and constant

3. Receipt of inventory is instantaneous and complete

4. Quantity discounts are not possible

5. Only variable costs are setup and holding

6. Stockouts can be completely avoided

An EOQ Example

Determine optimal number of needles to order

D = 1,000 units

S = $10 per order

H = $.50 per unit per year

$Q^* = \sqrt{\dfrac{2DS}{H}} = \sqrt{\dfrac{2(1000)(10)}{0.50}} = \sqrt{40000} = 200$ units

Expected number of orders:

$N = \dfrac{\text{Demand}}{\text{Order quantity}} = \dfrac{D}{Q^*} = \dfrac{1000}{200} = 5$ orders per year

Expected time between orders:

$T = \dfrac{\text{Number of working days per year}}{N} = \dfrac{250}{5} = 50$ days between orders
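The three calculations of the needle example can be reproduced with a few lines of Python. This is a minimal sketch; the function and variable names are illustrative, and the 250 working days per year is the figure used in the example.

```python
from math import sqrt

def eoq(demand, order_cost, holding_cost):
    """Economic order quantity Q* = sqrt(2DS / H)."""
    return sqrt(2 * demand * order_cost / holding_cost)

D = 1000      # annual demand, units
S = 10        # cost per order, dollars
H = 0.50      # holding cost per unit per year, dollars
WORKING_DAYS = 250

q_star = eoq(D, S, H)                       # optimal order quantity
orders_per_year = D / q_star                # N = D / Q*
days_between = WORKING_DAYS / orders_per_year   # T = working days / N

print(q_star, orders_per_year, days_between)
```

At Q* the annual ordering cost (D/Q*)S and the annual holding cost (Q*/2)H are equal, which is what makes the total of the two a minimum.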

Classification of Materials for Inventory Control

Classification   Criteria
A-B-C            Annual value of consumption of the items
V-E-D            Critical nature of the components with respect to products
H-M-L            Unit price of material
F-S-N            Issue from stores
S-D-E            Purchasing problems in regard to availability
S-O-S            Seasonality
G-O-L-F          Channel for procuring the material
X-Y-Z            Inventory value of items stored

Relevant Inventory Costs

Holding costs - the costs of holding or “carrying” inventory over time

Ordering costs - the costs of placing an order and receiving goods

Setup costs - cost to prepare a machine or process for manufacturing an order

Ordering Costs

• Stationery

• Clerical and processing, salaries/rentals

• Postage

• Processing of bills

• Staff work in expediting/receiving/inspection and documentation

Holding/Carrying Costs

• Storage space (rent/depreciation)

• Property tax on warehousing

• Insurance

• Deterioration/Obsolescence

• Material handling and maintenance, equipment

• Stock taking, security and documentation

• Capital blocked (interest/opportunity cost)

• Quality control

Stock out Costs

• Loss of business/ profit/ market/ advise

• Additional expenditure due to urgency of purchases

a) telegraph / telephone charges

b) purchase at premium

c) air transport charges

• Loss of labor hours

Balancing Carrying against Ordering Costs

[Figure: annual cost ($) against order quantity. Annual carrying costs rise and annual ordering costs fall as the order quantity grows; total annual stocking costs reach their minimum at the EOQ.]

Unit V Queuing Theory

Outlines

Introduction

Single server model

Multi server model

Introduction

• Involves the mathematical study of queues or waiting lines.

• The formation of queues occurs whenever the demand for a service exceeds the capacity to provide that service.

• Decisions regarding the amount of capacity to provide must be made frequently in industry and elsewhere.

• Queuing theory provides a means for decision makers to study and analyze characteristics of the service facility for making better decisions.

Basic structure of queuing model

• Customers requiring service are generated over time by an input source.

• These customers enter the queuing system and join a queue.

• At certain times, a member of the queue is selected for service by some rule known as the service discipline.

• The required service is then performed for the customer by the service mechanism, after which the customer leaves the queuing system.

The basic queuing process

[Figure: Input source → Customers → Queuing system (Queue → Service mechanism) → Served customers.]

Characteristics of queuing models

• Input or arrival (interarrival) distribution

• Output or departure (service) distribution

• Service channels

• Service discipline

• Maximum number of customers allowed in the system

• Calling source

Kendall and Lee’s Notation

Kendall and Lee introduced a useful notation representing the 6 basic characteristics of a queuing model.

Notation: a/b/c/d/e/f

where

a = arrival (or interarrival) distribution

b = departure (or service time) distribution

c = number of parallel service channels in the system

d = service discipline

e = maximum number allowed in the system (service + waiting)

f = calling source

Conventional Symbols for a, b

M = Poisson arrival or departure distribution (or equivalently exponential interarrival or service time distribution)

D = Deterministic interarrival or service times

Ek = Erlangian or gamma interarrival or service time distribution with parameter k

GI = General independent distribution of arrivals (or interarrival times)

G = General distribution of departures (or service times)

Conventional Symbols for d

• FCFS = First come, first served

• LCFS = Last come, first served

• SIRO = Service in random order

• GD = General service discipline

Transient and Steady States

Transient state

• The system is in this state when its operating characteristics vary with time.

• Occurs at the early stages of the system’s operation where its behavior is dependent on the initial conditions.

Steady state

• The system is in this state when the behavior of the system becomes independent of time.

• Most attention in queuing theory analysis has been directed to the steady state results.

Queuing Model Symbols

n = Number of customers in the system

s = Number of servers

pn(t) = Transient state probabilities of exactly n customers in the system at time t

pn = Steady state probabilities of exactly n customers in the system

λ = Mean arrival rate (number of customers arriving per unit time)

μ = Mean service rate per busy server (number of customers served per unit time)

Queuing Model Symbols (Cont’d)

ρ = λ/μ = Traffic intensity

W = Expected waiting time per customer in the system

Wq = Expected waiting time per customer in the queue

L = Expected number of customers in the system

Lq = Expected number of customers in the queue

Relationship Between L and W

If λn is a constant λ for all n, it can be shown that

L = λW

Lq = λ Wq

If the λn are not constant, then λ can be replaced in the above equations by $\bar{\lambda}$, the average arrival rate over the long run.

If μn is a constant μ for all n, then

W = Wq + 1/μ

Relationship Between L and W (cont’d)

These relationships are important because:

• They enable all four of the fundamental quantities L, W, Lq and Wq to be determined as long as one of them is found analytically.

• The expected queue lengths are much easier to find than the expected waiting times when solving a queuing model from basic principles.

Single server queuing models

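• M/M/1/FCFS/∞/∞ Model

When the mean arrival rate λn and mean service rate μn are all constant (λn = λ, μn = μ), we have

$$c_n = \left(\frac{\lambda}{\mu}\right)^n = \rho^n, \quad \text{for } n = 1, 2, \ldots$$

Therefore

$$p_n = \rho^n p_0, \quad \text{for } n = 1, 2, \ldots$$

where, for ρ < 1,

$$p_0 = \left(1 + \sum_{n=1}^{\infty} \rho^n\right)^{-1} = \left(\sum_{n=0}^{\infty} \rho^n\right)^{-1} = \left(\frac{1}{1-\rho}\right)^{-1} = 1 - \rho$$

Thus

$$p_n = (1-\rho)\rho^n, \quad \text{for } n = 0, 1, 2, \ldots$$

Single server queuing models (cont’d)

Consequently

$$L = \sum_{n=0}^{\infty} n(1-\rho)\rho^n = (1-\rho)\rho \sum_{n=0}^{\infty} \frac{d}{d\rho}\left(\rho^n\right) = (1-\rho)\rho \frac{d}{d\rho}\left(\sum_{n=0}^{\infty} \rho^n\right) = (1-\rho)\rho \frac{d}{d\rho}\left(\frac{1}{1-\rho}\right) = \frac{\rho}{1-\rho} = \frac{\lambda}{\mu-\lambda}$$

Single server queuing models (cont’d)

Similarly

$$L_q = \sum_{n=1}^{\infty} (n-1)p_n = L - 1(1 - p_0) = \frac{\lambda^2}{\mu(\mu-\lambda)}$$

The expected waiting time is

$$W = \frac{L}{\lambda} = \frac{1}{\mu-\lambda}$$

$$W_q = \frac{L_q}{\lambda} = \frac{\lambda}{\mu(\mu-\lambda)}$$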
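The closed-form results for the single server model can be checked numerically with a short sketch. The function name `mm1_metrics` and the rates used (λ = 2 and μ = 3 customers per unit time) are hypothetical, chosen only so that the numbers come out simply.

```python
def mm1_metrics(lam, mu):
    """Steady-state measures of an M/M/1 queue; requires lam < mu."""
    if lam >= mu:
        raise ValueError("steady state requires lam < mu (rho < 1)")
    rho = lam / mu                       # traffic intensity
    return {
        "p0": 1 - rho,                   # probability of an empty system
        "L": lam / (mu - lam),           # expected number in the system
        "Lq": lam ** 2 / (mu * (mu - lam)),   # expected number in the queue
        "W": 1 / (mu - lam),             # expected time in the system
        "Wq": lam / (mu * (mu - lam)),   # expected time in the queue
    }

# Hypothetical rates, not taken from the slides.
m = mm1_metrics(lam=2, mu=3)
print(m)
```

The output can be used to confirm the relationships stated earlier: L = λW, Lq = λWq, and W = Wq + 1/μ.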

Multi server queuing models

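• M/M/s/FCFS/∞/∞ Model

When the mean arrival rate λn and mean service rate μn are all constant, we have the following rate diagram

[Figure: rate diagram for the M/M/s model.]

Multi server queuing models (cont’d)

In this case, with ρ = λ/μ, we have

$$c_n = \begin{cases} \dfrac{\rho^n}{n!}, & n = 1, 2, \ldots, s \\[2ex] \dfrac{\rho^n}{s!\, s^{\,n-s}}, & n = s, s+1, \ldots \end{cases}$$

Therefore

$$p_n = \begin{cases} \dfrac{\rho^n}{n!}\, p_0, & n = 1, 2, \ldots, s \\[2ex] \dfrac{\rho^n}{s!\, s^{\,n-s}}\, p_0, & n = s, s+1, \ldots \end{cases}$$

where, for ρ < s,

$$p_0 = \left[\sum_{n=0}^{s-1} \frac{\rho^n}{n!} + \frac{\rho^s}{s!\left(1 - \rho/s\right)}\right]^{-1}$$

Multi server queuing models (cont’d)

It follows that

$$L_q = \frac{\rho^{\,s+1}}{(s-1)!\,(s-\rho)^2}\, p_0$$

$$L = L_q + \rho$$

$$W_q = \frac{L_q}{\lambda}$$

$$W = W_q + \frac{1}{\mu}$$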
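A numerical sketch of the multi server formulas is given below. The function name `mms_metrics` and the example rates (λ = 2, μ = 3, s = 2) are hypothetical. With s = 1 the function reduces to the single server results, which offers a convenient sanity check.

```python
from math import factorial

def mms_metrics(lam, mu, s):
    """Steady-state measures of an M/M/s queue; requires lam < s * mu."""
    rho = lam / mu                       # traffic intensity as defined above
    if rho >= s:
        raise ValueError("steady state requires lam < s * mu")
    # p0 from the normalizing sum over n = 0..s-1 plus the tail term.
    head = sum(rho ** n / factorial(n) for n in range(s))
    tail = rho ** s / (factorial(s) * (1 - rho / s))
    p0 = 1 / (head + tail)
    lq = rho ** (s + 1) * p0 / (factorial(s - 1) * (s - rho) ** 2)
    wq = lq / lam
    return {"p0": p0, "Lq": lq, "L": lq + rho, "Wq": wq, "W": wq + 1 / mu}

# Hypothetical rates, not taken from the slides.
two_servers = mms_metrics(lam=2, mu=3, s=2)
one_server = mms_metrics(lam=2, mu=3, s=1)
print(two_servers)
```

Comparing the two results shows how sharply the expected queue length drops when a second server is added at the same arrival and service rates.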

Thank You
