Computational Methods and Programming M.Sc. II, Sem

advertisement

Computational Methods and

Programming

M.Sc. II, Sem-IV

Under Academic Flexibility (Credit) Scheme

Computation as a third pillar of science

Observation/

Experiment

Theory

Computation

Traditional

view

Modern view

What are we going to study ?

• Ordinary Differential equations

• Partial Differential Equations

• Matrix Algebra

• Monte Carlo Methods and Simulations

1. Ordinary Differential Equations

Types of differential equations

Numerical solutions of ordinary differential

equations

Euler method

Applications to non-linear and vector equations

Leap-Frog method

Runge-Kutta method

The predictor corrector method

The intrinsic method

2. Partial Differential Equations

Types of equations

Elliptic Equations-Laplace’s equations

Hyperbolic Equations-Wave Equation,

Eularian-Lagrangian Methods

Parabolic Equations-Diffusion,

Conservative Methods The equation of continuity

Maxwell’s Equations

Dispersion

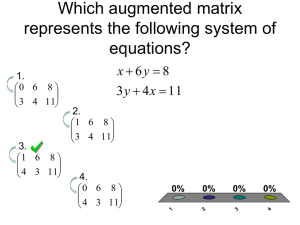

3. Matrix Algebra

Types of Matrices

Simple matrix problems

Elliptic equations-Poisson’s equation

System of equation and Matrix inversion

Iterative method- The Jacobi method

Matrix Eigen Value problems- Schrodinger’s

Equation

4. Monte Carlo methods and Simulation

Random number generators

Monte Carlo Integration

The Metropolis Algorithm

The Ising model

Quantum Monte Carlo

Reference Books

1. Potter D, Computational Physics, Wiley, Chichester, 1973

2. Press W H et. al., Numerical Recipes: The Art of Scientific

Computing, CUP, Cambridge, 1992

3. Wolfrom S, Mathematica- A System For Doing Mathematics By

Computer, Addison Wesley, 1991

4. Angus McKinnon, Computational Physics - 3rd/4th Year Option,

Notes in .pdf format

Some questions on Unit No 3 and 4

1. Discuss the types of matrices.

2. Explain the method of Elimination of unknowns, solve the following

equations by the same:

5x - 3y + 9z = 0.7

0.007x + 4.17y + 8.3z = 10.79

4x - 3.7y + 0.5z = 0.157

3. and explain Write note on Gauss–Seidel Method

4. Explain Elliptic equations as a Poisson’s equation

5. State Matrix Eigen Value problems- Schrodinger’s Equation

1.

2.

3.

4.

What is Random number generators?

Write note on Monte-Carlo integration.

What is Metropolis Algorithm ? Explain Ising model in detail.

Write note on Quantum Monte Carlo

Chapter 3

Matrix Algebra

Nearly every scientific problem which is solvable on a computer can be

represented by matrices. However the ease of solution can depend crucially on

the types of matrices involved. There are 3 main classes of problems which we

might want to solve:

1. Trivial Algebraic Manipulation such as addition, A + B or multiplication, AB, of

matrices.

2. Systems of equations: Ax = b where A and b are known and we have to find x.

This also includes the case of finding the inverse, A-1. The standard example of

such a problem is Poisson’s equation.

3. Eigenvalue Problems: Ax = x. This also includes the generalised eigenvalue

problem: Ax = B x. Here we will consider the time–independent Schrodinger

equation.

Matrices

A matrix is defined as a square or rectangular array of

numbers that obeys certain laws. This is a perfectly logical

extension of familiar mathematical concepts.

Use of Matrix

We can write the linear equations given below more

elegantly and compactly by collecting the coefficients in a 3×3

square array of numbers called a matrix:

3 x 2 y z 11

2 x 3 y z 13

x y 4 z 12

3

A 2

1

2

3

1

1

1

4

Row matrix

A row matrix consists of a single row. For example:

4

3

7

2

Column matrix

A column matrix consists of a single column. For

example:

Rank

6

3

8

The rank m of a matrix is the maximal number of linearly

independent row vectors it has; with 0 ≤ m ≤ n.

As the triple scalar product for above matrix is not zero,

they span a non zero volume, are linearly independent, and the

homogeneous linear equations have only the trivial solution. In

this case, the matrix is said to have rank 3.

Equality

Two matrices A=B are defined to be equal if and only if aij

=bij for all values of i and j.

If A [ a ij ] m n , B [ bij ] m n

Then A B if and only if

a ij b ij 1 i m , 1 j n

Example

1

A

3

2

4

a

B

c

b

d

If A B

Then

a 1, b 2 , c 3 , d 4

Special matrices

Square matrix

Unit matrix

Null matrix

Special matrices

Square matrix

A square matrix is of order m × m.

A square matrix is symmetric if

For example: 1 2 5

2

5

8

9

9

4

aij a ji.

A square matrix is skew-symmetric if aij a. ji

For example:

0

2

5

2

0

9

5

9

0

Special matrices

Unit matrix

A unit matrix is a diagonal matrix with all elements on the leading

diagonal being equal to unity.

For example:

1

0 0

I 0

1

0

0

0

1

The product of matrix A and the unit matrix is the matrix A:

A .I = A

Special matrices

Null matrix

A null matrix is one whose elements are all zero.

0

0

0

0 0

0

0

0

0

0

Notice that A .0 = 0

But that if A .B = 0

we cannot deduce that A = 0 or B = 0

Trace

In any square matrix the sum of the diagonal elements is called the trace.

For example,

T race

9

2

6

1

5

1 9 5 2 16

2

3

2

Clearly, the trace is a linear operation:

trace(A − B) = trace(A) − trace(B).

Matrix addition

We also need to add and subtract matrices according to rules that, again,

are suggested by and consistent with adding and subtracting linear

equations.

When we deal with two sets of linear equations in the same unknowns

that we can add or subtract, then we add or subtract individual

coefficients keeping the unknowns the same. This leads us to the

definition

A + B = C if and only if aij + bij = cij for all values of i and j,

with the elements combining according to the laws of ordinary algebra (or

arithmetic if they are simple numbers). This means that

A + B = B + A, commutation.

Two matrices of the same order are said to be

conformable for addition or subtraction.

Two matrices of different orders cannot be

added or subtracted, e.g.,

2

1

3

1

7

5

1

2

4

3

1

7

1

4

6

are NOT conformable for addition or subtraction.

Properties

Matrices A, B and C are conformable,

A + B = B + A

(commutative law)

A + (B +C) = (A + B) +C

(associative law)

l(A + B) = lA + lB, where l is a scalar

(distributive law)

Scalar multiplication:

If A [ a ij ] m n , c : scalar

Then cA [ ca ij ] m n

Matrix subtraction:

A B A ( 1) B

Example (Scalar multiplication and matrix subtraction)

1

A 3

2

2

0

1

4

1

2

2

B 1

1

Find (a) 3A, (b) –B, (c) 3A – B

0

4

3

0

3

2

Sol:

(a)

(b)

(c)

1

3 A 3 3

2

2

0

1

2

B 1 1

1

3

3 A B 9

6

4

3 1

1 3 3

2

3 2

0

4

3

6

0

3

32

30

3 1

0

2

3 1

1

2

12 2

3 1

6 1

0

4

3

0

4

3

3 4

3

3 1 9

3 2

6

6

0

3

0

3

2

1

0

3 10

7

2

6

4

0

12

6

4

12

3

6

Matrix Multiplication

We now write linear equations in terms of matrices because their

coefficients represent an array of numbers that we just defined as a matrix,

3 x1 2 x 2 x 3 11

2 x1 3 x 2 x 3 13

x1 x 2 4 x 3 12

The above inhomogeneous equations can be written similarly

Example : (Multiplication of Matrices)

1

A 4

5

3

2

0

3 2

3

B

4

2

1 2 2

Sol:

( 1)( 3 ) ( 3 )( 4 )

AB ( 4 )( 3 ) ( 2 )( 4 )

( 5 )( 3 ) ( 0 )( 4 )

9

4

15

1

6

10

( 1)( 2 ) ( 3 )(1)

( 4 )( 2 ) ( 2 )(1)

( 5 )( 2 ) ( 0 )(1)

1

A

0

1

0

2

1

2

1

2

3

0

c11 1 ( 1) 2 2 3 5 18

2

c12 1 2 2 3 3 0 8

3

c 21 0 ( 1) 1 2 4 5 22

0

c 0 2 1 3 4 0 3

22

1

3

2

4

5

1

C AB

0

3

4

1

B 2

5

2

1

1

3

2

4

5

2

18

3

22

0

8

3

Properties

Matrices A, B and C are conformable,

A(B + C) = AB + AC

(A + B)C = AC + BC

A(BC) = (AB) C

AB BA in general

AB = 0 NOT necessarily imply A = 0 or B = 0

AB = AC NOT necessarily imply B = C

Transpose of a matrix:

a 11

a

21

If A

a m1

Then

a 12

a 22

am2

a 11

a

12

T

A

a1 n

a1 n

a2n

M

a mn

a 21

a 22

a2n

mn

a m1

am2

M

a mn

n m

Properties of transposes:

(1) ( A ) A

T

T

(2) ( A B ) A B

T

T

( 3 ) ( cA ) c ( A )

T

T

( 4 ) ( AB ) B A

T

T

T

T

Matrix Inversion

We cannot define division of matrices, but for a square

matrix A with nonzero determinant we can find a matrix

A−1, called the inverse of A, so that

A−1A = AA−1 = I.

Theorem : (The inverse of a matrix is unique)

If B and C are both inverses of the matrix A, then B = C.

Proof:

AB I

C ( AB ) CI

( CA ) B C

IB C

B C

Consequently, the inverse of a matrix is unique.

Notes:

(1) The inverse of A is denoted by A 1

( 2 ) AA

1

1

A AI

Example:

1

A 1

1

2

3

2

3

3

4

6

B 1

1

2

1

0

Show B is the the inverse of matrix A.

Ans: Note that

1

AB BA 0

0

0

1

0

0

0

1

3

0

1

Inverse of a 33 matrix

1

A 0

1

3

5

6

2

4

0

Cofactor matrix of A

A11

C F M of A A21

A31

A12

A22

A32

The cofactor for each element of matrix A:

A11

4

5

0

6

A21

A31

2

3

0

6

2

3

4

5

24

12

2

A12

A22

0

5

1

6

1

3

1

6

A32

5

3

1

3

0

5

5

A13

0

4

1

0

A23

A33

4

1

2

1

0

1

2

0

4

2

4

33

A13

A23

A33

Cofactor matrix of

24

12

2

1

A 0

1

5

3

5

1

det 0

1

2

4

0

2

4

0

3

5

6

is then given by:

4

2

4

3

4

5 1

0

6

5

6

2

0

5

1

6

3

0

4

1

0

det 1(24 0) 2(0 5) 3(0 4) 24 10 12 22

34

Inverse matrix of

A

1

24

1

12

A

2

1

A 0

1

5

3

5

3

5

6

2

4

0

is given by:

T

4

24

1

2

5

22

4

4

12 11

5 22

2 11

12

3

2

2

5

4

6 11

3 22

1 11

1 11

5 22

2 11

35

Simple C Program

/* A first C Program*/

#include <stdio.h>

void main()

{

printf("Happy New Year 2014 \n");

}

Matrix multiplication in c language

1 Requirements

Design, develop and execute a program in C to read two matrices A (m x n) and

B (p x q) and compute the product of A and B if the matrices are compatible for

multiplication. The program must print the input matrices and the resultant matrix

with suitable headings and format if the matrices are compatible for multiplication,

otherwise the program must print a suitable message. (For the purpose of

demonstration, the array sizes m, n, p, and q can all be less than or equal to 3)

2 Analysis

c[i, j] = a[i,k] b[k,j]

3 Design

3.1 Algorithm

Start

Read matrix A[m,n]

Read matrix B[p,q]

if n is not equal to p, Print “Matrix Multiplication not possible”. stop

Write matrix A[m,n]

Write matrix B[p,q]

for i = 0 to m-1

for j = 0 to q-1

c[i][j]=0;

for k = 0 to n-1

c[i][j] += a[i][k]*b[k][j]

write the matrix C[m,n]

Stop

3.2 Flowchart

4 Code/ program

#include <stdio.h>

int main()

{

int m, n, p, q, c, d, k, sum = 0;

int first[10][10], second[10][10], multiply[10][10];

printf("Enter the number of rows and columns of

first matrix\n");

scanf("%d%d", &m, &n);

printf("Enter the elements of first matrix\n");

for ( c = 0 ; c < m ; c++ )

for ( d = 0 ; d < n ; d++ )

scanf("%d", &first[c][d]);

printf("Enter the number of rows and columns of

second matrix\n");

scanf("%d%d", &p, &q);

if ( n != p )

printf("Matrices with entered orders can't be

multiplied with each other.\n");

else

{

printf("Enter the elements of second matrix\n");

for ( c = 0 ; c < p ; c++ )

for ( d = 0 ; d < q ; d++ )

scanf("%d", &second[c][d]);

for ( c = 0 ; c < m ; c++ )

{

for ( d = 0 ; d < q ; d++ )

{

for ( k = 0 ; k < p ; k++ )

{

sum = sum + first[c][k]*second[k][d];

}

multiply[c][d] = sum;

sum = 0;

}

}

printf("Product of entered matrices:-\n");

for ( c = 0 ; c < m ; c++ )

{

for ( d = 0 ; d < q ; d++ )

printf("%d\t", multiply[c][d]);

printf("\n");

}

}

return 0;

}

=MINVERSE(A2:C4)

=MMULT(array1,array2)

=MDETERM(array)

=TRANSPOSE(A2:C2)

12 11

5 22

2 11

6 11

3 22

1 11

1 11

5 22

2 11

C code to find inverse of a matrix

C code to find inverse of a matrix

Inverse of a 3x3 matrix in c

#include<stdio.h>

int main(){

int a[3][3],i,j;

float determinant=0;

printf("Enter the 9 elements of matrix: ");

for(i=0;i<3;i++)

for(j=0;j<3;j++)

scanf("%d",&a[i][j]);

printf("\nThe matrix is\n");

for(i=0;i<3;i++){

printf("\n");

for(j=0;j<3;j++)

printf("%d\t",a[i][j]);

}

for(i=0;i<3;i++)

determinant = determinant + (a[0][i]*(a[1][(i+1)%3]*a[2][(i+2)%3] a[1][(i+2)%3]*a[2][(i+1)%3]));

printf("\nInverse of matrix is: \n\n");

for(i=0;i<3;i++){

for(j=0;j<3;j++)

printf("%.2f\t",((a[(i+1)%3][(j+1)%3] * a[(i+2)%3][(j+2)%3]) (a[(i+1)%3][(j+2)%3]*a[(i+2)%3][(j+1)%3]))/ determinant);

printf("\n");

}

return 0;

}

Example

Find (a) the eigenvalues and (b) the eigenvectors of the matrix

5

1

4

2

Solution: (a) The eigenvalues: The characteristic equation is

det( A l I )

5l

4

1

2l

0

( 5 l )( 2 l ) 4 0

10 5 l 2 l l 4 0

2

l 7l 6 0

2

( l 6 )( l 1) 0

which has two roots

l1 6

and

l2 1

(b) The eigenvectors: For l = l1 = 6 the system for eigen vectors assumes the

form: (A lI) X = 0 i.e. (A 6I) X = 0

( a 11 l ) x1 a 12 x 2 a 1 n x n 0

a 21 x1 ( a 22 l ) x 2 a 2 n x n 0

a n 1 x1 a n 2 x 2 ( a nn l ) x n 0

( 5 6 ) x1 4 x 2 0

x1 4 x 2 0

x1 ( 2 6 ) x 2 0

x1 4 x 2 0

Thus x1 = 4x2, and

4

X 1

1

For l = l2 = 1 the system for eigen vectors assumes the form

( 5 1) x1 4 x 2 0

4 x1 4 x 2 0

x1 ( 2 1) x 2 0

x1 x 2 0

1

X 2

1

Thus x1 = x2, and

4

S

1

S

1

S

1

1

det S 4 1 5 CfS

1

1

1

1 5

1

1

1

4

1

AS 1 5

1

5

A

1

1 5

4 1

1

4

4

2

44

21

1 6

1 0

0

1

CfS

T

1

1

1

4

Example

Find a matrix S that diagonalizes

3

A 2

0

2

3

0

0

0

5

Solution:

We have first to find the eigenvalues and the corresponding eigenvectors of matrix A. The characteristic equation of A is

3l

2

0

2

3l

0

0

0

5l

0

( l 1)( l 5 ) 0

2

so that the eigenvalues of A are l = 1 and l = 5.

If l = 5 the equation (lI A) X = 0 becomes

5 3

2

0

2

2

0

2

53

0

2

2

0

0 x1 0

0 x 2 0

5 5 x 3 0

0 x1 0

0 x 2 0

0 x 3 0

2 x1 2 x 2 0 x 3 0

2 x1 2 x 2 0 x 3 0

0x 0x 0x 0

2

3

1

Solving this system yields

x1 = s; x2 = s; x3 = t;

where s and t are arbitrary values.

Thus the eigenvectors of A corresponding to l = 5 are the non-zero vectors of the

form

s s 0

1

0

X s s 0 s 1 t 0

t 0 t

0

1

since

1

1

0

and

0

0

1

are linearly independent, they are eigenvectors corresponding to l = 5

for l = 1 we have

1 3

2

0

2

2

0

2

1 3

0

2

2

0

0 x1 0

0 x 2 0

1 5 x 3 0

0 x1 0

0 x 2 0

4 x 3 0

2 x1 2 x 2 0 x 3 0

2 x1 2 x 2 0 x 3 0

0x 0x 4x 0

1

2

3

Solving this system yields

x1 = t; x2 = t; x3 = 0;

where t is arbitrary.

Thus the eigenvectors of A corresponding to l = 1 are the non-zero vectors of the

form

t

1

X t t 1

0

0

It is easy to check that the three eigenvectors

1

0

1

X 1 1 , X 2 0 , X 3 1

0

1

0

are linearly independent. We now form the matrix S that has X1, X2, and X3 as its

column vectors:

1

S 1

0

0

0

1

1

1

0

The matrix S1AS is diagonal:

1/ 2

1

S AS 0

1/ 2

1/ 2

0

1/ 2

0 3

1 2

0 0

2

3

0

0 1

0 1

5 0

0

0

1

1 5

1 0

0 0

There is no preferred order for the columns of S. If had we written

1

S 1

0

1

1

0

0

0

1

then we would have obtained (verify)

5

1

S AS 0

0

0

5

0

0

0

1

0

5

0

0

0

1

Example

Find eigenvalues and eigenvectors of the following matrix and diagonalize it:

cos

T

sin

l1 e

i

~

X1 1/

sin

cos

, l2 e

i

1

2

i

1/ 2

U

i/ 2

i

e

†

U TU

0

1/

i/

~

X 2 1/

2

2

0

i

e

U

†

1

2

i

1 /

1 /

2

2

2

i / 2

i/

Chapter 3

Matrix Algebra

3.1 Introduction

3.2 Types of Matrices

3.3 Simple Matrix Problems

3.3.1 Addition and Subtraction

3.3.2 Multiplication of Matrices

3.4 Elliptic Equations —Poisson’s Equation

3.4.1 One Dimension

3.4.2 2 or more Dimensions

3.5 Systems of Equations and Matrix Inversion

3.5.1 Exact Methods

3.5.2 Iterative Methods

The Jacobi Method

The Gauss–Seidel Method

3.6 Matrix Eigenvalue Problems

3.6.1 Schrodinger’s equation

3.6.2 General Principles

3.6.3 Full Diagonalisation

3.6.4 The Generalised Eigenvalue Problem

3.6.5 Partial Diagonalisation

3.6.6 Sparse Matrices and the Lanczos Algorithm

Types of Matrices

There are several ways of

classifying matrices

depending on symmetry,

sparsity etc.

Hermitian Matrices

Here we provide a list of

types of matrices and the

situation in which they may

arise in physics.

Unitary Matrices

Real Symmetric Matrices

Positive Definite Matrices

Diagonal Matrices

Tridiagonal Matrices

Upper and Lower Triangular Matrices

Sparse Matrices

General Matrices

Complex Symmetric Matrices

Symplectic Matrices

Hermitian Matrices

Many Hamiltonians have this property especially those containing magnetic fields:

†

A ji Aij

where at least some elements are complex.

A Hermitian matrix (or self-adjoint matrix) is a square matrix with complex entries

that is equal to its own conjugate transpose-that is, the element in the i-th row and

j-th column is equal to the complex conjugate of the element in the j-th row and i-th

column, for all indices i and j:

2

2i

4

2i

4

i

1

3

i

Pauli matrices

Skew-Hermitian matrix

0

1 x

1

0

2 y

i

1

3 z

0

1

0

i

0

0

1

i

(2 i )

2 i

0

†

A ji Aij

Real Symmetric Matrices

These are the commonest matrices in physics as most Hamiltonians can be

represented this way: And all Aij are real. This is a special case of Hermitian

matrices.

A ji Aij

a symmetric matrix is a square matrix that is equal to its transpose. Formally,

matrix A is symmetric if A = AT.

1

7

3

7

4

5

3

5

6

Positive Definite Matrices

A special sort of Hermitian matrix in which all the eigenvalues are positive. The

overlap matrices used in tight–binding electronic structure calculations are like

this.

Sometimes matrices are non–negative definite and zero eigenvalues are also

allowed. An example is the dynamical matrix describing vibrations of the atoms of

a molecule or crystal, where 2 0.

2

M 1

0

1

2

1

0

1

2

A−1A = AA−1 = I

Unitary Matrices

A complex square matrix U is unitary if

U* U = U U* = I ,

where I is the identity matrix and U* is the conjugate transpose of U. The real

analogue of a unitary matrix is an orthonormal matrix.

Show that the following matrix is unitary.

1 1 i

A

2 1 i

1 1 i

AA*

2 1 i

1 i

1 i

1 i 1 1 i

1 i 2 1 i

1 i 1 4

1 i 4 0

0 1

4 0

We conclude that A* = A– 1 . Therefore, A is a unitary matrix.

0

I2

1

Diagonal Matrices

All matrix elements are zero except the diagonal elements,

The matrix of eigenvalues of a matrix has this form.

1

0

0

0

4

0

0

0

6

Aij 0

when

i j

.

Tridiagonal Matrices

All matrix elements are zero except the diagonal and first off diagonal elements,

Ai , i 0

Ai , i 1 0

Such matrices often occur in 1 dimensional problems and at an intermediate

stage in the process of diagonalisation.

1

3

0

0

4

0

4

1

2

3

0

1

0

0

4

3

Upper and Lower Triangular Matrices

In Upper Triangular Matrices all the matrix elements below the diagonal are zero,

Aij 0 i j . A Lower Triangular Matrix is the other way round, Aij 0 i j

These occur at an intermediate stage in solving systems of equations and

inverting matrices.

1

0

0

1

2

4

4

3

0

0

8

9

2

4

1

0

0

7

Sparse Matrices

Matrices in which most of the elements are zero according to some pattern. In

general sparsity is only useful if the number of non–zero matrix elements of an

N x N matrix is proportional to N rather than N2. In this case it may be possible to

write a function which will multiply a given vector x by the matrix A to give Ax

without ever having to store all the elements of A. Such matrices commonly occur

as a result of simple discretisation of partial differential equations, and in simple

models to describe many physical phenomena.

0

2

10

0

0

0

0

0

0

0

0

0

6

0

0

12

0

0

0

7

0

0

0

3

9

8

0

0

0

0

0

0

0

0

0

0

0

4

0

0

0

5

General Matrices

Any matrix which doesn’t fit into any of the above categories, especially non–

square matrices.

There are a few extra types which arise less often:

Complex Symmetric Matrices

Not generally a useful symmetry. There are however two related situations in

which these occur in theoretical physics: Green’s functions and scattering (S)

matrices.

In both these cases the real and imaginary parts commute with each other, but

this is not true for a general complex symmetric matrix.

2i

A

1

1

0

5

T i

1

i

2

i

1

i

3

Symplectic Matrices

a symplectic matrix is a 2n×2n matrix M with real entries that satisfies the

condition

MT M = .

where MT denotes the transpose of M and is a fixed 2n×2n nonsingular, skewsymmetric matrix. This definition can be extended to 2n×2n matrices with entries

in other fields, e.g. the complex numbers.

Typically Ω is chosen to be the block matrix.

Elliptic equations-Poisson’s equation

u

2

A

A second-order partial differential equation,

x

2

u

2

B

u

2

xy

C

y

2

D

u

x

E

u

y

F 0

A u xx 2 B u xy C u yy D u x E u y F 0

is called elliptic if the matrix

A

Z

B

B

C

is positive definite.

The basic example of an elliptic partial differential equation is Laplace's

equation 2 u 0

in n-dimensional Euclidean space, where the Laplacian is defined by

n

2

2

x

i 1

2

i

Other examples of elliptic equations include the

nonhomogeneous Poisson's equation 2

u f ( x)

and the non-linear minimal surface equation.

2

d V

dx

2

2

d V

dy

2

2

d V

dz

2

f ( x)

One Dimension

4

2

We start by considering the one dimensional Poisson's equation

2

d V

dx

2

f ( x)

The 2nd derivative may be discretised in the usual way to give

V n 1 2V n V n 1 x f n

2

where we define

f n f ( x x n n x )

The boundary conditions are usually of the form V ( x ) Vo at x x o and V ( x ) V N 1 at x

although sometimes the condition is on the first derivative. Since Vo and VN+1 are

both known the n = 1 and n = N above equations may be written as

2 V1 V 2 x f 1 V o

n 1

V1 2V 2 V 3 x f 2

n2

V 2 2V 3 V 4 x f 3

n3

V N 1 2V N x f N V N 1

n N

2

2

2

2

V n 1 2V n V n 1 x f n

2

x N 1 .

This may seem trivial but it maintains the convention that all the terms on the left

contain unknowns and everything on the right is known.

2 V1 V 2 x f 1 V o

2

V1 2V 2 V 3 x f 2

2

Tridiagonal Matrix

It also allows us to rewrite the above equations in matrix form as

2

1

V1 x f 1 V o

2

V2

x

f

2

2

V3

x f3

2

V

x fn

n

1 V N 1 x 2 f N 1

2

2 V N x f N f N 1

2

1

2

1

1

2

1

1

2

1

1

2

1

which is a simple matrix equation of the form

Ax=b

V 2 2V 3 V 4 x f 3

2

V N 1 2V N x f N V N 1

2

x = A–1 b

in which A is tridiagonal. Such equations can be solved by methods which we

shall consider below. For the moment it should suffice to note that the

tridiagonal form can be solved particularly efficiently and that functions for this

purpose can be found in most libraries of numerical functions.

We could only write the equation in this matrix form because the boundary

conditions allowed us to eliminate a term from the 1st and last lines.

Periodic boundary conditions, such as V (x + L) = V (x) can be implemented,

but they have the effect of adding a non-zero element to the top right and

bottom left of the matrix, A1N and AN1, so that the tridiagonal form is lost.

It is sometimes more efficient to solve Poisson's or Laplace's equation using

Fast Fourier Transformation (FFT). Again there are efficient library routines

available (Numerical Algorithms Group, n.d.). This is especially true in

machines with vector processors.

V n 1 2V n V n 1 x f n

2

2 or more Dimensions

Poisson's and Laplace's equations can be solved in 2 or more dimensions by

simple generalisations of the schemes discussed for 1D. However the resulting

matrix will not in general be tridiagonal. The discretised form of the equation takes

the form

2

V m , n 1 V m , n 1 V m 1, n V m 1, n 4V m , n x f m , n

The 2 dimensional index pairs {m, n} may be mapped on to one dimension for the

purpose of setting up the matrix. A common choice is so-called dictionary order,

(1,1), (1, 2), ......(1, N ), (2,1), (2, 2), ....( 2, N ), ...( N ,1), ( N , 2), ...( N , N )

Alternatively Fourier transformation can be used either for all dimensions or to

reduce the problem to tridiagonal form.

3.5 Systems of Equations and Matrix Inversion

3.5.1 Exact Methods

3.5.2 Iterative Methods

The Jacobi Method

The Gauss–Seidel Method

Systems of Equations and Matrix Inversion

We now move on to look for solutions of problems of the form

AX=B

Where A is an M M matrix and X and B are M N matrices. Matrix inversion

is simply the special case where B is an M M unit matrix.

"direct" versus "Iterative" solvers

Two classes of methods for solving an N × N linear algebraic system

AX=B

Direct (exact) methods:

Iterative methods:

Examples: Gaussian elimination,

LU factorizations, matrix inversion, etc.

Examples: GMRES, conjugate

gradients, Gauss-Seidel, etc.

Always give an answer. Deterministic.

Construct a sequence of vectors

u1, u2, u3, . . . that (hopefully!)

converge to the exact solution.

Robust. No convergence analysis.

Great for multiple right hand sides.

Have often been considered too slow

for high performance computing.

(Directly access elements or blocks of

A.)

(Exact except for rounding errors.)

Many iterative methods access A only

via its action on vectors.

Often require problem specific preconditioners.

High performance when they work

well.

O(N) solvers.

Exact Methods

Most library routines, for example those in the NAG (Numerical Algorithms Group,

n.d.) or LaPack (Lapack Numerical Library, n.d.) libraries are based on some

variation of Gaussian elimination. The standard procedure is to call a first function

which performs an LU decomposition of the matrix A ,

A = LU

where L and U are lower and upper triangular matrices respectively, followed by a

function which performs the operations

Y = L–1 B

X = U–1 Y

on each column of B.

The procedure is sometimes described as Gaussian Elimination. A common

variation on this procedure is partial pivoting. This is a trick to avoid numerical

instability in the Gaussian Elimination (or LU decomposition) by sorting the rows of

A to avoid dividing by small numbers.

There are several aspects of this procedure which should be noted:

The LU decomposition is usually done in place, so that the matrix A is

overwritten by the matrices L and U .

The matrix B is often overwritten by the solution X .

A well written LU decomposition routine should be able to spot when A is

singular and return a flag to tell you so.

Often the LU decomposition routine will also return the determinant of A .

Conventionally the lower triangular matrix L is defined such that all its

diagonal elements are unity. This is what makes it possible to replace A with

both L and U .

Some libraries provide separate routines for the Gaussian Elimination and the

Back-Substitution steps. Often the Back-Substitution must be run separately for

each column of B .

Routines are provided for a wide range of different types of matrices. The

symmetry of the matrix is not often used however.

The time taken to solve N equations in N unknowns is generally proportional

to N3 for the Gaussian-Elimination ( LU -decomposition) step. The BackSubstitution step goes as N2 for each column of B . As before this may be

modified by parallelism.

Sets of Linear Equations

Gaussian Elimination: Successive Elimination of Variables

Our problem is to solve a set of linear algebraic equations. For the time being we

will assume that a unique solution exists and that the number of equations equals

the number of variables.

Consider a set of three equations:

a1 1 x1 a1 2 x 2 a1 3 x 3 b1

a 2 1 x1 a 2 2 x 2 a 2 3 x 3 b 2

a 3 1 x1 a 3 2 x 2 a 3 3 x 3 b 3

The basic idea of the Gaussian elimination method is the transformation of this set

of equations into a staggered set:

a11 x1 a12 x 2 a13 x 3 b1

a 2 2 x 2 a 2 3 x 3 b 2

a 3 3 x 3 b3

Klaus Weltner · Wolfgang J. Weber · Jean Grosjean Peter Schuster

Mathematics for Physicists and Engineers pp441

All coefficients a’ij below the diagonal are zero. The solution in this case is

straightforward.

The last equation is solved for x3. Now, the second can be solved by inserting

the value of x3. This procedure can be repeated for the uppermost equation.

The question is how to transform the given set of equations into a staggered set.

This can be achieved by the method of successive elimination of variables. The

following steps are necessary:

1. We have to eliminate x1 in all but the first equation. This can be done by subtracting

a21/a11 times the first equation from the second equation and a31/a11

times the first equation from the third equation.

2. We have to eliminate x2 in all but the second equation. This can be done by

subtracting a32/a22 times the second equation from the third equation.

3. Determination of the variables. Starting with the last equation in the set and

proceeding upwards, we obtain first x3, then x2, and finally x1.

This procedure is called the Gaussian method of elimination. It can be extended to

sets of any number of linear equations.

Example We can solve the following set of equations according to the procedure

given:

6 x1 12 x 2 6 x 3 6

[1]

3 x1 5 x 2 5 x 3 13

[2]

2 x1 6 x 2 0 10

[3]

1. Elimination of x1. We multiply Eq. [1] by 3/6 and subtract it from Eq. [2]. Then

we multiply Eq. [1] by 2/6 and subtract it from Eq. [3]. The result is

6 x1 12 x 2 6 x 3 6

[1]

x 2 2 x 3 10

[2 ]

2 x 2 2 x 3 12

[3 ]

2. Elimination of x2. We multiply Eq. [2’] by 2 and add it to Eq. [3’]. The result is

6 x1 12 x 2 6 x 3 6

[1 ]

x 2 2 x 3 10

[ 2 ]

2 x3 8

[3 ]

3. Determination of the variables x1, x2, x3. Starting with the last equation in the set, we

obtain

x3

8

4

2

Now Eq. [2] can be solved for x2 by inserting the value of x3. Thus

x2 2

This procedure is repeated for Eq. [1] giving

x1 1

Flow chart & C program of Gauss Elimination Method

/* Gauss elimination method */

# include <studio.h)

# define N 4

main ( )

{

float a[N] [N+1] ,x[N] , t, s;

int i, j, k ;

print f (“enter the elements of the augmented matrix row wise

\n”) ;

for( i=0 ; i<N; i++ )

for (j=0; j<N+1 ; j++)

Scan f (“% f” ,& a [i] , [j] );

for (j=0; j<N-1; j++)

for (i= j+1; i<N ; i++)

{

t= a[i] [j] /a[j] [j];

for (k=0; k<N+1; k++)

a [i] [k] =a [j] [k] *t;

}

/* now printing the upper triangular matrix */

print f(“The upper triangular matrix is :- /n”)

for ( i=0 ; i<N ;i++)

{

for (j=0; j<N+1 ; j++)

print f (“%8 . 4f “ , a [i] [j] ) :

print f (“\n”);

}

/* now performing back substitution */

for (i=N-1 ; i>=0 ; i- -)

{

s= 0 ;

for (j=i+1 ; j<N; j++ )

s + = a[i] [j] *x[j] ;

x[i] = (a[i] [N –s )/a [i] [i] ;

}

/* now printing the results */

print f (“ The solution is :- /n”) ;

for (i=0 ; i<N; i++)

print f (“x[%3d] = %7 . 4f \n “ , i+1, x[i]) ;

}

Gauss–Jordan Elimination

Let us consider whether a set of n linear equations with n variables can be transformed

by successive elimination of the variables into the form

x1 0 0

0 C1

0 x2 0

0 C2

0 0 x3

0 C3

000

xn C n

The transformed set of equations gives the solution for all variables directly. The

transformation is achieved by the following method, which is basically an extension

of the Gaussian elimination method.

At each step, the elimination of xj has to be carried out not only for the coefficients

below the diagonal, but also for the coefficients above the diagonal. In addition, the

equation is divided by the coefficient ajj .

This method is called Gauss–Jordan elimination.

We show the procedure by using the previous example.

This is the set

6 x1 12 x 2 6 x 3 6

3 x1 5 x 2 5 x 3 13

2 x1 6 x 2 0 10

To facilitate the numerical calculation, we will begin each step by dividing the respective

equation by ajj .

1. We divide the first equation by a11 = 6 and eliminate x1 in the other two equations.

Second equation: we subtract 3× first equation

Third equation: we subtract 2× first equation

This gives

x1 2 x 2 x 3 1

[1]

0 x 2 2 x3 1 0

[2]

0 2 x 2 2 x3 1 2

[3]

2. We eliminate x2 above and below the diagonal.

Third equation: we add 2× second equation

First equation: we add 2× second equation

This gives

x1 0 5 x 3 2 1

[1 ]

0 x 2 2 x3 1 0

[2 ]

0 0 2 x3 8

[3 ]

3. We divide the third equation by a33 and eliminate x3 in the two equations above it.

Second equation: we subtract 2× third equation

First equation: we subtract 5× third equation

This gives

x1 0 0 1

[1]

0 x2 0 2

[2 ]

0 0 x3 4

[3 ]

This results in the final form which shows the solution.

Do by another example

4 x 3 y z 13

2 x y z 3

7 x y 3z 0

Form augmented coefficient matrix

4

2

7

3

1

1

1

1

3

3/4

1/ 4

1

1

1

3

13

3

0

Multiply ¼ to make upper left corner (a11) = 1 (First Equation)

1

2

7

Multiply first row by 2 and subtract from 2nd row

Multiply first row by 7 and subtract from 3rd row

Multiply 2nd row by (-2/5)

1

0

0

1

0

0

3/4

1/ 4

5 / 2

3 / 2

17 / 4

19 / 4

13 / 4

3

0

3/4

1/ 4

1

3/5

17 / 4

19 / 4

13 / 4

19 / 2

91 / 4

13 / 4

19 / 5

91 / 4

Multiply 2nd row by ¾ and subtract from 1st row

Multiply 2nd row by 17/4 and add to 3rd row

1

0

0

0

1 / 5

1

3/5

0

11 / 5

2/5

19 / 5

33 / 5

Multiply 3rd row by -5/11 to make a33 = 1

1

0

0

0

1 / 5

1

3/5

0

1

2/5

19 / 5

3

Multiply 3rd row by 3/5 and subtract from 2nd row

Multiply 3rd row by 1/5 and add to 1st row

1

0

0

0

0

1

0

0

1

1

2

3

Now rewrite equations as

1x 0 y 0 z 1

0x 1y 0z 2

0 x 0 y 1z 3

C program of Gauss Jordan method

/* Gauss Jordan method */

# include <studio.h>

#define N 3

main ( )

{

float a [N] [N+1] , t;

int

i, j, k;

print f (“Enter the elements of the “

“augmented matrix row wise\n”)

for (i=0; i<N; i++)

for (j=0 ;j<N+1;j++)

scan f (“%f “, &a[i] [j]) ;

/* now calculating the values of x1,x2, ……,xN */

for (j=0; j<N; j++)

for (i=0; i<N;i++)

if (i!= j)

{

t= a [i] [j] [j] ;

for ( k=0 ;k<N+1; k++)

a[i] [k]

-= a[j] [k]*t;

}

/* now printing the diagonal matrix */

print f (“The diagonal matrix is :- ‘n”) ;

{

for (i= 0; i<N; i++)

for (j=0 ;j<N+1; j++)

print f (“%9. 4f “ , a[i] [j] );

print f (“\n” ) ;

}

/* now printing the results */

print f (“The solution is :\n”) ;

for (i=0; i<N ; i++)

print f (“x[%3d] = %7.4f\n”,

i+1, a[i] [N]/a[i] [i]);

}

Iterative Methods

As an alternative to the above library routines, especially when large sparse

matrices are involved, it is possible to solve the equations iteratively.

The Jacobi Method

It is an algorithm for determining the solutions of a system of linear equations with

largest absolute values in each row and column dominated by the diagonal

element. Each diagonal element is solved for, and an approximate value plugged

in. The process is then iterated until it converges.

We first divide the matrix A into 3 parts A = D + L + U

1

3

4

7

4

5

4

1

2

3

3

1

3 1

0

4 0

3 0

6

0

0

4

0

0

3

0

0

0 0

0

0 0

3 0

0

4

5

0

1

0

0

0

0

3 0

3

4 4

0 7

6

0

0

0

0

2

0

3

1

0

0

0

0

Where D is a diagonal matrix (i.e. D 0 for i j ) and L and U are strict lower and

upper triangular matrices respectively (i.e.L U 0 for all i ). We now write the Jacobi

or Gauss-Jacobi iterative procedure to solve our system of equations as

Xn+1 = D 1 [B (L + U) Xn]

where the superscripts n refer to the iteration number. Note that in practice this

procedure requires the storage of the diagonal matrix, D, and a function to multiply

the vector, Xn by L + U .

ij

ii

AX=B

X = A–1B

ii

(D + L + U) X = B

D X + (L +U)X = B

D X = B – (L +U) X

X = D –1 [ B – (L +U) X ]

Simple C code to implement this for a 1D Poisson’s equation is given below.

int i;

const int N = ??; // incomplete code

double xa[N], xb[N], b[N];

while ( ... ) // incomplete code

{

for ( i = 0; i < N; i++ )

xa[i] = (b[i] - xb[i-1] - xb[i+1]) * 0.5;

for ( i = 0; i < N; i++ )

xb[i] = xa[i];

}

Gauss-Seidel Method

Any implementation of the Jacobi Method above will involve a loop over the matrix

elements in each column of Xn+1. Instead of calculating a completely new matrix

Xn+1 at each iteration and then replacing Xn with it before the next iteration, as in

the above code, it might seem sensible to replace the elements of Xn with those of

Xn+1 as soon as they have been calculated. Naïvely we would expect faster

convergence. This is equivalent to rewriting the Jacobi equation as

Xn+1 = (D + L)1 [B U Xn]

This Gauss-Seidel method has better convergence than the Jacobi method, but

only marginally so.

AX=B

X = A–1B

(D + L + U) X = B

(D + L) X + U X = B

(D + L) X = B – U X

X = (D + L) –1 [ B – U X ]

Gauss-Seidel Method

Elimination methods can be used for m = 25 - 50. Beyond this, round off errors,

computing time etc. kill you.

Instead we use Gauss-Seidel Method for larger m.

Guess a root=> iteration=> improve root.

Assume we have a set of equations

[A] [X] = [C]

x1

a11 x1 a12 x 2 a13 x 3

a1 n x n C 1

a 21 x1 a 22 x 2 a 23 x 3

a2 n xn C 2

a 31 x1 a 32 x 2 a 33 x 3

a3n xn C 3

a n 1 x1 a n 2 x 2 a n 3 x 3

x2

x3

a nn x n C n

xn

C 1 a12 x 2 a13 x 3

a1 n x n

a11

C 2 a 21 x1 a 23 x 3

a2 n xn

a 22

C 3 a 31 x1 a 32 x 2

a3n xn

a 33

C n a n 1 x1 a n 2 x 2 a n 3 x 3

a nn

a n , n 1 x n 1

Guess x2, x3, ....... xn = 0

Calculate x1

Substitute x1 into x2 equation, calculate x2

...

...

...

Calculate xm

Use calculated values to cycle again until process converges.

Example

3 x1 0 .1 x 2 0 .2 x 3 7 .8 5

0 .1 x1 7 x 2 0 .3 x 3 1 9 .3

0 .3 x1 0 .2 x 2 1 0 x 3 7 1 .4

Rewriting equations

x1

x2

x3

7.85 0.1 x 2 0.2 x 3

3

19.3 0.1 x1 0.3 x 3

7

71.4 0.3 x1 0.2 x 2

10

Answer: Put x2 = x3 = 0

x1

Substitute in x2

x2

Put into x3 equation

x3

Cycle over

x1

7.85

2.62

3

19.3 0.1 2.62 0

2.79

7

71.4 0.3 2.62 0.2 2.79

7.005

10

7.85 0.1 2.79 0.2 7.005

3

x1 3

2.99

x 2 2 .5

x3 7

#include<stdio.h>

20 x1 20 x 2 2 x 3 17

#include<conio.h>

#include<math.h>

3 x1 20 x 2 x 3 18

#define ESP 0.0001

#define X1(x2,x3) ((17 - 20*(x2) + 2*(x3))/20) 2 x1 3 x 2 20 x 3 25

#define X2(x1,x3) ((-18 - 3*(x1) + (x3))/20)

#define X3(x1,x2) ((25 - 2*(x1) + 3*(x2))/20)

void main()

{

double x1=0,x2=0,x3=0,y1,y2,y3;

int i=0;

clrscr();

printf("\n__________________________________________\n");

printf("\n

x1\t\t

x2\t\t

x3\n");

printf("\n__________________________________________\n");

printf("\n%f\t%f\t%f",x1,x2,x3);

do

{

y1=X1(x2,x3);

y2=X2(y1,x3);

y3=X3(y1,y2);

if(fabs(y1-x1)<ESP && fabs(y2-x2)<ESP && fabs(y3-x3)<ESP )

{

printf("\n__________________________________________\n");

printf("\n\nx1 = %.3lf",y1);

printf("\n\nx2 = %.3lf",y2);

printf("\n\nx3 = %.3lf",y3);

i = 1;

}

else

{

x1 = y1;

x2 = y2;

x3 = y3;

printf("\n%f\t%f\t%f",x1,x2,x3);

}

}while(i != 1);

getch();

}

OUT PUT

___________

__________________________________________

x1

x2

x3

__________________________________________

0.000000

0.000000

0.000000

0.850000

-1.027500

1.010875

1.978588

-1.146244

0.880205

2.084265

-1.168629

0.866279

2.105257

-1.172475

0.863603

2.108835

-1.173145

0.863145

2.109460

-1.173262

0.863065

2.109568

-1.173282

0.863051

__________________________________________

x1 = 2.110

x2 = -1.173

x3 = 0.863

#include<stdio.h>

#include<conio.h>

#include<math.h>

#define e 0.01

void main()

{

int i,j,k,n;

float a[10][10],x[10];

float sum,temp,error,big;

printf("Enter the number of

equations: ");

scanf("%d",&n) ;

printf("Enter the coefficients of

the equations: \n");

for(i=1;i<=n;i++)

{

for(j=1;j<=n+1;j++)

{

printf("a[%d][%d]= ",i,j);

scanf("%f",&a[i][j]);

}

}

for(i=1;i<=n;i++)

{

x[i]=0;

}

do

{

big=0;

for(i=1;i<=n;i++)

{

sum=0;

for(j=1;j<=n;j++)

{

if(j!=i)

{

sum=sum+a[i][j]*x[j];

}

}

temp=(a[i][n+1]-sum)/a[i][i];

error=fabs(x[i]-temp);

if(error>big)

{

big=error;

}

x[i]=temp;

printf("\nx[%d] =%f",i,x[i]);

}printf("\n");

}

while(big>=e);

printf("\n\nconverges to

solution");

for(i=1;i<=n;i++)

{

printf("\nx[%d]=%f",i,x[i]);

}

getch();

}

Enter the number of equations: 3

Enter the coefficients of the equations:

a[1][1]= 2

a[1][2]= 1

a[1][3]= 1

a[1][4]= 5

a[2][1]= 3

a[2][2]= 5

a[2][3]= 2

a[2][4]= 15

a[3][1]= 2

a[3][2]= 1

a[3][3]= 4

a[3][4]= 8

x[1] =2.500000

x[2] =1.500000

x[3] =0.375000

x[1] =1.562500

x[2] =1.912500

x[3] =0.740625

x[1] =1.173437

x[2] =1.999688

x[3] =0.913359

x[1] =1.043477

x[2] =2.008570

x[3] =0.976119

x[1] =1.007655

x[2] =2.004959

x[3] =0.994933

x[1] =1.000054

x[2] =2.001995

x[3] =0.999474

converges to solution

x[1]=1.000054

x[2]=2.001995

x[3]=0.999474

2 x1 x 2 x 3 5

3 x1 5 x 2 2 x 3 1 5

2 x1 x 2 4 x 3 8

x1 1

x2 2

x3 1

Matrix Eigenvalue Problems

Schrodinger’s equation

In dimensionless form the time–independent Schr¨odinger equation can be written

as

2

- Ñ y + V (r ) y = E y

(1)

The Laplacian,2, can be represented in discrete form as in the case of

Laplace’s or Poisson’s equations. For example, in 1D becomes

- y

j- 1

+ ( 2 + d x V j )y j - y

j+ 1

= Ey

j

(2)

which can in turn be written in terms of a tridiagonal matrix H as

H y = Ey

(3)

An alternative and more common procedure is to represent the eigenfunction in

terms of a linear combination of basis functions so that we have

y (r ) =

å

b

ab f b (r )

(4)

y (r ) =

å

b

ab f b (r )

The basis functions are usually chosen for convenience and as some approximate

analytical solution of the problem. Thus in chemistry it is common to choose the

to be known atomic orbitals. In solid state physics often plane waves are chosen.

Inserting (4) into (1) gives

å

2

a b (- Ñ + V ( r ))f b ( r ) = E å a b f b ( r )

b

(5)

b

Multiplying this by one of the ’s and integrating gives

å ò d rf

*

a

2

( r ) (- Ñ + V ( r )) f b ( r )a b = E å

b

ò

*

d r f a ( r )f b ( r )a b

(6)

b

We now define 2 matrices

ò

H º Hab =

S º Sa b =

ò

*

2

d r f a ( r ) (- Ñ + V ( r ) ) f b ( r )

*

d r f a ( r )f b ( r )

(7)

(8)

so that the whole problem can be written concisely as

å

H a b ab = E å Sa b ab

b

H a = ESa

(9)

b

(10)

which has the form of the generalised eigenvalue problem. Often the ’s are chosen

to be orthogonal so that S = and the matrix S is eliminated from the problem.

In a matrix form,

The eigenvalues are determined by the characteristic equation

General Principles

The usual form of the eigenvalue problem is written

The usual form of the eigenvalue problem is written A x = a x

where A is a square matrix, x is an eigenvector and is an eigenvalue. Sometimes the

eigenvalue and eigenvector are called latent root and latent vector respectively. An N N

matrix usually has N distinct eigenvalue/eigenvector pairs. The full solution of the

eigenvalue problem can then be written in the form A U = U a

r

r

U lA = a U l

where is a diagonal matrix of eigenvalues and Ur (Ul) are matrices whose columns

(rows) are the corresponding eigenvectors. Ul and Ur are the left and right handed

eigenvectors respectively, and Ul = Ur1.

For Hermitian matrices Ul and Ur are unitary and are therefore Hermitian transposes of

each other: Ul + = Ur..

For Real Symmetric matrices Ul and Ur are also real. Real unitary matrices are sometimes

called orthogonal.

Full Diagonalisation

Routines are available to diagonalise real symmetric, Hermitian, tridiagonal and

general matrices.

In the first 2 cases this is usually a 2 step process in which the matrix is first

tridiagonalised (transformed to tridiagonal form) and then passed to a routine for

diagonalising a tridiagonal matrix. Routines are available which find only the

eigenvalues or both eigenvalues and eigenvectors. The former are usually

much faster than the latter.

Usually the eigenvalues of a Hermitian matrix are returned sorted into ascending

order, but this is not always the case (check the description of the routine). Also

the eigenvectors are usually normalised to unity.

For non–Hermitian matrices only the right–handed eigenvectors are returned and

are not normalised.

The Generalised Eigenvalue Problem

A common generalisation of the simple eigenvalue problem involves 2 matrices

A x = a Bx

This can easily be transformed into a simple eigenvalue problem by multiplying both sides

by the inverse of either A or B. This has the disadvantage however that if both matrices

are Hermitian B1A is not, and the advantages of the symmetry are lost, together, possibly,

with some important physics. There is actually a more efficient way of handling the

transformation. Using Cholesky factorisation an LU decomposition of a positive definite

matrix can be carried out such that

†

B = LL

which can be interpreted as a sort of square root of B. Using this we can transform the

problem into the form

éL- 1 A (L† )- 1 ùéL† x ù= a éL† x ù

êë

úê

êë ú

ûë ú

û

û

A 'y = a y

Cholesky factorization algorithm

Partition matrices in A = LLT as

éa

ê 11

êA

ë 21

A21 ù

ú=

A22 ú

û

T

0 ùél11

úê

ê

L 22 ú

ûë0

él11

ê

êL

ë 21

é l2

11

= ê

êl L

ë 11 21

L 21 ù

ú

T ú

L 22 û

T

ù

ú

T

T ú

L 21 L 21 + L 22 L 22 û

T

l11 L 21

algorithm

1. determine l11 and L21:

2. compute L22 from

l11 =

a11

L 21 =

T

T

A22 - L 21 L 21 = L 22 L 22

this is a Cholesky factorization of order n − 1.

1

l11

A21

Example: Cholesky factorization

é2 5

ê

ê1 5

ê

ê- 5

ë

é2 5

ê

ê1 5

ê

ê- 5

ë

15

18

0

2

l1 1 = 2 5,

l1 1l1 2 = 1 5

l1 1l1 3 = 5

l 2 1l1 1 = 1 5

l 3 1 l1 1 = - 5

5 ù

ú

0 ú=

ú

1 1ú

û

15

18

0

él 2

ê1 1

êl l

ê 21 11

êl l

ê

ë 31 11

5ù

ú

0 ú=

ú

1 1ú

û

él1 1

ê

êl

ê 21

êl

ë 31

0 ùél1 1

úê

0 úê0

úê

l 3 3 úê

ûë0

0

l22

l3 2

l1 1l1 2

l1 2

l22

0

l3 1 ù

ú

l3 2 ú

ú

l3 3 ú

û

l1 1l 3 1

2

l 2 1l1 2 + l 2 2

l 2 1l1 3 + l 2 2 l 2 3

l 3 1l1 2 + l 3 2 l 2 2

l 3 1l1 3 + l 3 2 l 2 3

l1 1 = 5

l1 2 = 3

l1 3 = 1

l21 = 3

l3 1 = - 1

2

2

(3 )(3 ) + l 2 2 = 1 8

l 2 1l1 2 + l 2 2 = 1 8

l22 = 3

l 3 1 l1 2 + l 3 2 l 2 2 = 0

(- 1)(3 ) + l 3 2 (3 ) = 0

l3 2 = 1

l 2 1l1 3 + l 2 2 l 2 3 = 0

(3 )(1) + (3 ) l 2 3 = 0

l23 = - 1

2

l 3 1 l1 3 + l 3 2 l 2 3 + l 3 3 = 2

(- 1)(1) + (1)(- 1) + l 323 = 2

l3 3 = 2

ù

ú

ú

ú

2

+ l33 ú

ú

û

First column of L

é25

ê

ê15

ê

ê- 5

ë

15

18

0

5ù

ú

0 ú=

ú

11ú

û

é5

ê

ê3

ê

ê1

ë

0 ùé5

úê

0 úê0

úê

l 33 úê

ûë0

0

l 22

l 32

- 1ù

ú

l 32 ú

ú

l 33 ú

û

3

l 22

0

second column of L

é18

ê

ê0

ë

0ù

ú11ú

û

é9

ê

ê3

ë

él 22

- 1]= ê

êl

ë 32

é3 ù

ê ú[3

ê- 1ú

ë û

3ù

ú=

10 ú

û

é3

ê

ê1

ë

0 ùé3

úê

l 33 úê

ûë0

0 ùél 22

úê

l 33 úê

ûë 0

1ù

ú

l 33 ú

û

Third column of L: 10 - 1 = l233, i.e., l33 = 3 second

column of L

conclusion:

é25 15 5 ù é 5

0 0 ùé5

ê

ê15

ê

ê- 5

ë

18

0

ú

0 ú=

ú

11ú

û

ê

ê3

ê

ê- 1

ë

3

1

l 32 ù

ú

l 33 ú

û

úê

0 úê0

úê

3 úê

ûë0

3

3

0

- 1ù

ú

1 ú

ú

3 ú

û

Here is the Cholesky decomposition of a symmetric real matrix:

é 4

ê

ê 12

ê

ê- 16

ë

12

37

- 43

- 16 ù

ú

- 43 ú=

ú

98 úû

é2

ê

ê6

ê

ê- 8

ë

ùé2

úê

úê

úê

3 úê

ûë

1

5

6

1

- 8ù

ú

5 ú

ú

3 úû

And here is the LDLT decomposition of the same matrix:

é 4

ê

ê 12

ê

ê- 16

ë

éM 11

ê

êM

ê 21

êM

ë 31

M 12

M 22

M 32

12

37

- 43

M 13 ù

ú

M 23 ú=

ú

M 33 úû

- 16 ù

ú

- 43 ú=

ú

98 ú

û

éa11

ê

êa

ê 21

êa

ë 31

é1

ê

ê3

ê

ê- 4

ë

0

a 22

a 32

1

5

ùé4

úê

úê

úê

1 úê

ûë

0 ùéb11

úê

0 úê 0

úê

a 33 úê

ûë 0

1

0

b 22

0

ùé1

úê

úê

úê

9 úê

ûë

0 ùéc11

úê

0 úê 0

úê

b33 úê

ûë 0

3

1

- 4ù

ú

5 ú

ú

1 ú

û

c12

c 22

0

c13 ù

ú

c 23 ú

ú

c 33 úû

The following program computes the eigenvalues and eigenvectors of the 4-th order Hilbert matrix,

H(i,j) = 1/(i + j + 1).

#include <stdio.h>

#include <gsl/gsl_math.h>

#include <gsl/gsl_eigen.h>

int

main (void)

{

double data[] = { 1.0 ,

1/2.0,

1/3.0,

1/4.0,

1/2.0,

1/3.0,

1/4.0,

1/5.0,

1/3.0,

1/4.0,

1/5.0,

1/6.0,

1/4.0,

1/5.0,

1/6.0,

1/7.0 };

gsl_matrix_view m

= gsl_matrix_view_array (data, 4, 4);

gsl_vector *eval = gsl_vector_alloc (4);

gsl_matrix *evec = gsl_matrix_alloc (4, 4);

gsl_eigen_symmv_workspace * w =

gsl_eigen_symmv_alloc (4);

gsl_eigen_symmv (&m.matrix, eval, evec, w);

gsl_eigen_symmv_free (w);

gsl_eigen_symmv_sort (eval, evec,

GSL_EIGEN_SORT_ABS_ASC);

{

int i;

for (i = 0; i < 4; i++)

{

double eval_i

= gsl_vector_get (eval, i);

gsl_vector_view evec_i

= gsl_matrix_column (evec,

i);

printf ("eigenvalue =

%g\n", eval_i);

printf ("eigenvector =

\n");

gsl_vector_fprintf (stdout,

&evec_i.vector, "%g");

}

}

gsl_vector_free (eval);

gsl_matrix_free (evec);

return 0;

}

#include <stdlib.h>

#include <stdio.h>

DSYEV Example.

/* DSYEV prototype */

extern void dsyev( char* jobz, char* uplo, int* n, double* a, int* lda,

double* w, double* work, int* lwork, int* info );

/* Auxiliary routines prototypes */

extern void print_matrix( char* desc, int m, int n, double* a, int lda );

/* Parameters */

#define N 5

#define LDA N

/* Main program */

int main() {

/* Locals */

int n = N, lda = LDA, info, lwork;

double wkopt;

double* work;

/* Local arrays */

double w[N];

double a[LDA*N] = {

1.96, 0.00, 0.00, 0.00, 0.00,

-6.49, 3.80, 0.00, 0.00, 0.00,

-0.47, -6.39, 4.17, 0.00, 0.00,

-7.20, 1.50, -1.51, 5.70, 0.00,

-0.65, -6.34, 2.67, 1.80, -7.10

};

/* Executable statements */

printf( " DSYEV Example Program Results\n" );

/* Query and allocate the optimal workspace */

lwork = -1;

dsyev( "Vectors", "Upper", &n, a, &lda, w, &wkopt, &lwork, &info );

lwork = (int)wkopt;

work = (double*)malloc( lwork*sizeof(double) );

/* Solve eigenproblem */

dsyev( "Vectors", "Upper", &n, a, &lda, w, work, &lwork, &info );

/* Check for convergence */

if( info > 0 ) {

printf( "The algorithm failed to compute eigenvalues.\n" );

exit( 1 );

}

/* Print eigenvalues */

print_matrix( "Eigenvalues", 1, n, w, 1 );

/* Print eigenvectors */

print_matrix( "Eigenvectors (stored columnwise)", n, n, a, lda );

/* Free workspace */

free( (void*)work );

exit( 0 );

} /* End of DSYEV Example */

/* Auxiliary routine: printing a matrix */

void print_matrix( char* desc, int m, int n, double* a, int lda ) {

int i, j;

printf( "\n %s\n", desc );

for( i = 0; i < m; i++ ) {

for( j = 0; j < n; j++ ) printf( " %6.2f", a[i+j*lda] );

printf( "\n" );

}

}

DSYEV Example.

==============

Program computes all eigenvalues and eigenvectors of a real symmetric

matrix A:

1.96

-6.49

-0.47

-7.20

-0.65

-6.49

3.80

-6.39

1.50

-6.34

-0.47

-6.39

4.17

-1.51

2.67

-7.20

1.50

-1.51

5.70

1.80

-0.65

-6.34

2.67

1.80

-7.10

Description.

============

The routine computes all eigenvalues and, optionally, eigenvectors of an

n-by-n real symmetric matrix A. The eigenvector v(j) of A satisfies

A*v(j) = lambda(j)*v(j)

where lambda(j) is its eigenvalue. The computed eigenvectors are

orthonormal.

Example Program Results.

========================

DSYEV Example Program Results

Eigenvalues

-11.07 -6.23

0.86

8.87

16.09

Eigenvectors (stored columnwise)

-0.30 -0.61

0.40 -0.37

0.49

-0.51 -0.29 -0.41 -0.36 -0.61

-0.08 -0.38 -0.66

0.50

0.40

0.00 -0.45

0.46

0.62 -0.46

-0.80

0.45

0.17

0.31

0.16

*/

W Kinzel_Physics by Computer (using

Mathematica and C)

Monte Carlo simulation

http://www.cmth.ph.ic.ac.uk/people/a.mackinnon/Lectures/cp3/node54.html

http://oregonstate.edu/instruct/ch590/lessons/lesson13.htm