Project Meeting - Duke University


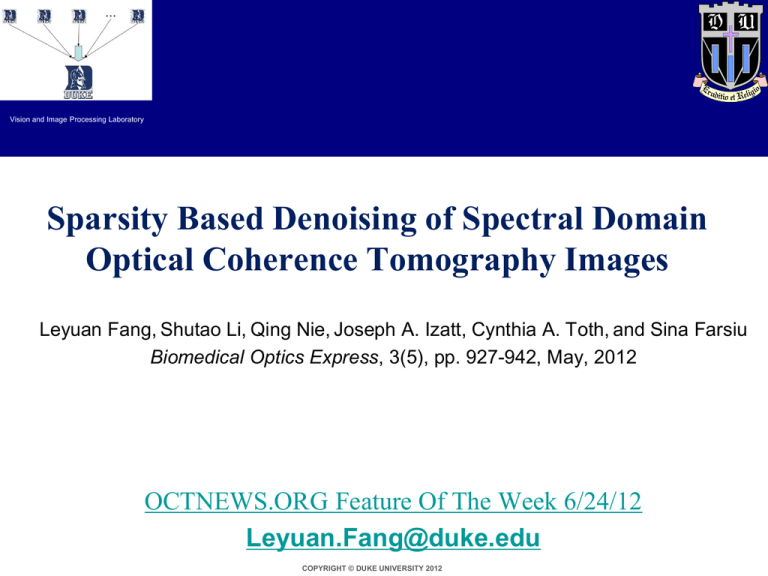

Vision and Image Processing Laboratory, Duke University
Sparsity Based Denoising of Spectral Domain Optical Coherence Tomography Images
Leyuan Fang, Shutao Li, Qing Nie, Joseph A. Izatt, Cynthia A. Toth, and Sina Farsiu
Biomedical Optics Express, 3(5), pp. 927-942, May 2012
OCTNEWS.ORG Feature of the Week, 6/24/12
Leyuan.Fang@duke.edu
COPYRIGHT © DUKE UNIVERSITY 2012

Content
1. Introduction
2. Multiscale structural dictionary
3. Non-local denoising
4. Results comparison
5. Software display

Introduction
Two classic denoising frameworks:
1. Multi-frame averaging: high-quality denoising result, but requires longer image acquisition time.
2. Model-based single-frame techniques (e.g., Wiener filtering, kernel regression, or wavelets): lower-quality denoising result.

Proposed Method Overview
• We introduce the Multiscale Sparsity Based Tomographic Denoising (MSBTD) framework.
• MSBTD is a hybrid, more efficient alternative to the two classic denoising frameworks above, applicable to virtually all tomographic imaging modalities.
• MSBTD uses a non-uniform scanning pattern in which a fraction of the B-scans are captured slowly, at a higher-than-nominal SNR.
• The rest of the B-scans are captured quickly, at the nominal SNR.
• Using compressive sensing principles, we learn a sparse representation dictionary for each of these high-SNR images and use these dictionaries to denoise the neighboring low-SNR B-scans.

Assumption
In common SDOCT volumes, neighboring B-scans have similar texture and noise patterns.
[Figure: summed-voxel projection (SVP) en face SDOCT image, with the B-scans acquired from the locations of the blue line and the yellow line.]

Sparse Representation
An SDOCT image, or its patches, $y$ is approximated as $y \approx D\alpha$, where $D$ is the dictionary used to represent the SDOCT image and $\alpha$ is the vector of sparse coefficients.
How to learn the dictionary?
• Classic paradigm: learn the dictionary directly from the noisy image (trained by K-SVD).
• Our paradigm: learn the dictionary from the neighboring high-SNR B-scan (trained by K-SVD or by PCA).

Multiscale structural dictionary
To better capture the properties of structures and textures of different sizes, we use a novel multiscale variation of the structural dictionary representation.
[Figure: multiscale structural dictionary learning. The training image is upsampled (finer scale) and downsampled (coarser scale); patches extracted from the images at different scales are clustered into structural clusters at different scales, followed by structural dictionary learning.]

Non-local strategy
To further improve performance, we search for similar patches in the SDOCT images and average them.
[Figure: the MSBTD denoising process: multiscale structural dictionary learning, similar-patch search, dictionary selection, sparse representation, and reconstruction with the selected dictionary.]

Results comparison
Quantitative measures:
1. Mean-to-standard-deviation ratio (MSR): $\mathrm{MSR} = \mu_f / \sigma_f$, where $\mu_f$ and $\sigma_f$ are the mean and the standard deviation of the foreground regions.
2. Contrast-to-noise ratio (CNR): $\mathrm{CNR} = \dfrac{|\mu_f - \mu_b|}{\sqrt{0.5(\sigma_f^2 + \sigma_b^2)}}$, where $\mu_b$ and $\sigma_b$ are the mean and the standard deviation of the background regions.
3. Peak signal-to-noise ratio (PSNR): $\mathrm{PSNR} = 20 \log_{10}\left( \dfrac{\mathrm{Max}_R}{\sqrt{\frac{1}{H}\sum_{h=1}^{H} (R_h - \hat{R}_h)^2}} \right)$, where $R_h$ is the $h$th pixel in the reference noiseless image $R$, $\hat{R}_h$ is the $h$th pixel of the denoised image $\hat{R}$, $H$ is the total number of pixels, and $\mathrm{Max}_R$ is the maximum intensity value of $R$.

Experiment 1: denoising a normal-subject image using a dictionary learned from a nearby high-SNR scan
• Averaged image: MSR = 10.64, CNR = 3.90
• Noisy image: MSR = 3.20, CNR = 1.17
• Tikhonov method [1]: MSR = 7.65, CNR = 3.25, PSNR = 23.35
• NEWSURE method [2]: MSR = 7.85, CNR = 2.87, PSNR = 24.51
• KSVD method [3]: MSR = 11.96, CNR = 4.72, PSNR = 28.35
• BM3D method [4]: MSR = 13.26, CNR = 5.19, PSNR = 28.48
• MSBTD method: MSR = 15.41, CNR = 5.98, PSNR = 28.83

Experiment 1: denoising a dry AMD subject image using a dictionary learned from a nearby high-SNR scan
• Averaged image: MSR = 10.20, CNR = 3.75
• Noisy image: MSR = 3.46, CNR = 1.42
• Tikhonov method [1]: MSR = 8.12, CNR = 3.92, PSNR = 21.76
• NEWSURE method [2]: MSR = 8.04, CNR = 3.39, PSNR = 23.87
• KSVD method [3]: MSR = 12.82, CNR = 5.62, PSNR = 26.07
• BM3D method [4]: MSR = 12.08, CNR = 5.31, PSNR = 25.68
• MSBTD method: MSR = 15.28, CNR = 6.45, PSNR = 26.11

Experiment 2: denoising using a dictionary learned from a distant high-SNR scan
[Figure: summed-voxel projection (SVP) en face image showing the B-scan locations.]
Noisy image acquired from location b:
• Noisy image: MSR = 3.10, CNR = 1.01
• KSVD method [3]: MSR = 13.93, CNR = 5.03
• BM3D method [4]: MSR = 14.93, CNR = 5.46
• MSBTD method: MSR = 18.57, CNR = 6.88
Noisy image acquired from location c:
• Noisy image: MSR = 3.30, CNR = 1.40
• KSVD method [3]: MSR = 10.30, CNR = 4.95
• BM3D method [4]: MSR = 9.91, CNR = 4.70
• MSBTD method: MSR = 11.71, CNR = 5.35

References
[1] G. T. Chong, et al., "Abnormal foveal morphology in ocular albinism imaged with spectral-domain optical coherence tomography," Arch. Ophthalmol. (2009).
[2] F. Luisier, et al., "A new SURE approach to image denoising: Interscale orthonormal wavelet thresholding," IEEE Trans. Image Process. (2007).
[3] M. Elad, et al., "Image denoising via sparse and redundant representations over learned dictionaries," IEEE Trans. Image Process. (2006).
[4] K. Dabov, et al., "Image denoising by sparse 3-D transform-domain collaborative filtering," IEEE Trans. Image Process. (2007).

Software display
MATLAB-based MSBTD software and dataset are freely available at http://www.duke.edu/~sf59/Fang_BOE_2012.htm
[Figure: MSBTD GUI with controls to input the test image, input the averaged image, set the MSBTD parameters, run Tikhonov, run MSBTD, select a foreground region and a background region from the test image, and save the results.]
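The Proposed Method Overview pairs each fast, low-SNR B-scan with a dictionary learned from a neighboring slow, high-SNR B-scan. The slides do not say how "neighboring" is decided, so the sketch below assumes, hypothetically, that each low-SNR B-scan uses the dictionary of the nearest high-SNR B-scan by slice index, with ties resolved toward the earlier scan. The names `nearest_high_snr` and `assign_dictionaries` are illustrative and are not part of the MSBTD software.

```python
from bisect import bisect_left


def nearest_high_snr(scan_index: int, high_snr_indices: list[int]) -> int:
    """Return the high-SNR B-scan index nearest to scan_index.

    Assumption (hypothetical rule): distance is measured in slice indices,
    high_snr_indices is sorted, and ties go to the lower (earlier) B-scan.
    """
    if not high_snr_indices:
        raise ValueError("need at least one high-SNR B-scan")
    pos = bisect_left(high_snr_indices, scan_index)
    candidates = []
    if pos > 0:
        candidates.append(high_snr_indices[pos - 1])
    if pos < len(high_snr_indices):
        candidates.append(high_snr_indices[pos])
    # Compare by (distance, index) so equal distances resolve to the lower index.
    return min(candidates, key=lambda h: (abs(h - scan_index), h))


def assign_dictionaries(num_scans: int, high_snr_indices: list[int]) -> dict[int, int]:
    """Map each low-SNR B-scan index to the high-SNR B-scan whose dictionary denoises it."""
    high = sorted(high_snr_indices)
    high_set = set(high)
    return {i: nearest_high_snr(i, high) for i in range(num_scans) if i not in high_set}


# Hypothetical volume: 10 B-scans, with B-scans 0, 5 and 9 acquired slowly at high SNR.
print(assign_dictionaries(10, [0, 5, 9]))  # {1: 0, 2: 0, 3: 5, 4: 5, 6: 5, 7: 5, 8: 9}
```

Using a binary search over the sorted high-SNR indices keeps each lookup logarithmic, which matters little for a single volume but keeps the rule explicit and easy to swap for a different neighborhood definition.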
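The sparse representation model $y \approx D\alpha$ needs a solver that finds a sparse coefficient vector $\alpha$ for a given dictionary $D$. The slides do not name the solver MSBTD uses, so this sketch swaps in Orthogonal Matching Pursuit (OMP), a standard greedy choice that is also used in K-SVD based denoising. It assumes unit-norm, linearly independent atoms and a fixed sparsity level; `omp` and `solve_least_squares` are hypothetical helpers written in pure Python for readability rather than speed.

```python
import math


def solve_least_squares(columns, y):
    """Solve min_c ||y - sum_k c[k] * columns[k]|| via the normal equations (A^T A) c = A^T y."""
    k = len(columns)
    gram = [[sum(a * b for a, b in zip(columns[i], columns[j])) for j in range(k)] for i in range(k)]
    rhs = [sum(a * b for a, b in zip(columns[i], y)) for i in range(k)]
    # Gaussian elimination with partial pivoting on the augmented matrix [G | b].
    aug = [gram[i] + [rhs[i]] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, k):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, k + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back substitution.
    coeffs = [0.0] * k
    for r in range(k - 1, -1, -1):
        tail = sum(aug[r][c] * coeffs[c] for c in range(r + 1, k))
        coeffs[r] = (aug[r][k] - tail) / aug[r][r]
    return coeffs


def omp(dictionary, y, sparsity, tol=1e-10):
    """Orthogonal Matching Pursuit for y ≈ D alpha.

    `dictionary` is a list of atoms (columns of D). Assumption: each atom has
    unit L2 norm and the atoms are linearly independent.
    Returns alpha with at most `sparsity` nonzero entries.
    """
    residual = list(y)
    support, coeffs = [], []
    for _ in range(sparsity):
        # Pick the atom most correlated with the current residual.
        scores = [abs(sum(d * r for d, r in zip(atom, residual))) for atom in dictionary]
        best = max(range(len(scores)), key=lambda j: scores[j])
        if best in support:
            break
        support.append(best)
        # Re-fit all selected atoms jointly (the "orthogonal" step), then update the residual.
        coeffs = solve_least_squares([dictionary[j] for j in support], y)
        approx = [sum(c * dictionary[j][i] for c, j in zip(coeffs, support)) for i in range(len(y))]
        residual = [yi - ai for yi, ai in zip(y, approx)]
        if math.sqrt(sum(r * r for r in residual)) < tol:
            break
    alpha = [0.0] * len(dictionary)
    for c, j in zip(coeffs, support):
        alpha[j] = c
    return alpha


# Toy dictionary: three unit basis vectors plus one diagonal unit atom.
s = 1 / math.sqrt(2)
D = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [s, s, 0.0]]
alpha = omp(D, [3.0, 0.0, 4.0], sparsity=2)
print(alpha)  # [3.0, 0.0, 4.0, 0.0]
```

In the "our paradigm" setting, `D` would hold atoms learned from the neighboring high-SNR B-scan and `y` would be a vectorized patch from the low-SNR B-scan; the denoised patch is then $D\alpha$.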
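The non-local strategy searches for patches similar to a reference patch and averages them. As a simplified illustration, assuming a sum-of-squared-differences patch distance, a square search window, and a fixed number `k` of neighbors (all hypothetical choices), the sketch below averages the raw pixel values of the `k` most similar patches. In MSBTD itself the similar patches feed dictionary selection and sparse representation before reconstruction, which this sketch omits.

```python
def extract_patch(image, top, left, size):
    """Return the size x size patch whose top-left corner is (top, left)."""
    return [row[left:left + size] for row in image[top:top + size]]


def patch_distance(a, b):
    """Sum of squared differences between two equally sized patches."""
    return sum((x - y) ** 2 for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


def nonlocal_average(image, top, left, size=3, window=2, k=4):
    """Average the k patches most similar to the reference patch at (top, left).

    Assumptions (hypothetical parameters): candidates are all size x size patches
    whose top-left corner lies within `window` rows/columns of the reference corner,
    and the reference patch itself is a candidate at distance 0. Raw pixels are
    averaged; MSBTD's sparse-coding step is not modeled here.
    """
    ref = extract_patch(image, top, left, size)
    rows, cols = len(image), len(image[0])
    candidates = []
    for t in range(max(0, top - window), min(rows - size, top + window) + 1):
        for c in range(max(0, left - window), min(cols - size, left + window) + 1):
            patch = extract_patch(image, t, c, size)
            candidates.append((patch_distance(ref, patch), t, c, patch))
    # Sort by distance, then position, so the patch lists themselves are never compared.
    candidates.sort(key=lambda item: item[:3])
    nearest = [patch for *_, patch in candidates[:k]]
    return [
        [sum(p[i][j] for p in nearest) / len(nearest) for j in range(size)]
        for i in range(size)
    ]
```

Restricting the search to a window around the reference patch reflects the slide's premise that similar structures recur nearby; a larger window finds more matches at a higher search cost.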
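The three quantitative measures in the results comparison (MSR, CNR, PSNR) translate directly into code. This sketch assumes regions and images are passed as flat lists of pixel intensities and uses the population standard deviation; the slides do not state which estimator was used, so that choice is an assumption.

```python
import math
from statistics import mean, pstdev, pvariance


def msr(foreground):
    """MSR = mu_f / sigma_f (assumption: population standard deviation)."""
    return mean(foreground) / pstdev(foreground)


def cnr(foreground, background):
    """CNR = |mu_f - mu_b| / sqrt(0.5 * (sigma_f^2 + sigma_b^2)), population variances."""
    contrast = abs(mean(foreground) - mean(background))
    return contrast / math.sqrt(0.5 * (pvariance(foreground) + pvariance(background)))


def psnr(reference, denoised):
    """PSNR = 20 log10(Max_R / RMSE) over the H pixels of two flat, equally sized images."""
    if len(reference) != len(denoised):
        raise ValueError("images must have the same number of pixels H")
    mse = sum((r - d) ** 2 for r, d in zip(reference, denoised)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 20 * math.log10(max(reference) / math.sqrt(mse))


print(msr([1, 3]))                                # 2.0
print(cnr([1, 3], [7, 9]))                        # 6.0
print(round(psnr([0, 255], [1, 254]), 2))         # 48.13
```

MSR and CNR need only the noisy or denoised image plus hand-selected foreground and background regions, which is why Experiment 2 reports them without PSNR; PSNR additionally requires a reference image such as the averaged B-scan.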