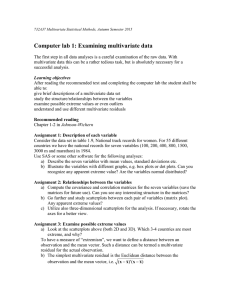

Kernel nearest means

Usman Roshan

Feature space transformation

• Let $\Phi(x)$ be a feature space transformation.
• For example, if we are in a two-dimensional vector space and $x = (x_1, x_2)$, then $\Phi(x) = (x_1^2, x_2^2)$.

Computing Euclidean distances in a different feature space

• The advantage of kernels is that we can compute Euclidean and other distances in different feature spaces without explicitly doing the feature space conversion.
• First note that the squared Euclidean distance between two vectors can be written as

$$\|x - y\|^2 = (x - y)^T (x - y) = x^T x + y^T y - 2 x^T y$$

• In feature space we have

$$
\begin{aligned}
\|\Phi(x) - \Phi(y)\|^2 &= (\Phi(x) - \Phi(y))^T (\Phi(x) - \Phi(y)) \\
&= \Phi(x)^T \Phi(x) + \Phi(y)^T \Phi(y) - 2\,\Phi(x)^T \Phi(y) \\
&= K(x, x) + K(y, y) - 2K(x, y)
\end{aligned}
$$

where $K$ is the kernel matrix, with entries $K(x, y) = \Phi(x)^T \Phi(y)$.

Computing distance to mean in feature space

• Recall that the mean of a class (say $C_1$) is given by

$$m_1 = \frac{1}{n_1}\left(x_1 + x_2 + \dots + x_{n_1}\right) = \frac{1}{n_1} \sum_{i=1}^{n_1} x_i$$

• In feature space the mean $\Phi_m$ would be

$$\Phi_m = \frac{1}{n_1}\left(\Phi(x_1) + \Phi(x_2) + \dots + \Phi(x_{n_1})\right) = \frac{1}{n_1} \sum_{i=1}^{n_1} \Phi(x_i)$$

• Writing $n$ for the class size, the kernel value of the mean with itself is

$$
\begin{aligned}
K(m, m) = \Phi_m^T \Phi_m &= \left(\frac{1}{n} \sum_{i=1}^{n} \Phi(x_i)\right)^T \left(\frac{1}{n} \sum_{j=1}^{n} \Phi(x_j)\right) \\
&= \frac{1}{n^2} \sum_{i=1}^{n} \sum_{j=1}^{n} \Phi(x_i)^T \Phi(x_j) \\
&= \frac{1}{n^2} \sum_{i=1}^{n} \sum_{j=1}^{n} K(x_i, x_j)
\end{aligned}
$$

• The kernel value between a point $x$ and the mean is

$$
\begin{aligned}
K(x, m) = \Phi(x)^T \Phi_m &= \Phi(x)^T \left(\frac{1}{n} \sum_{i=1}^{n} \Phi(x_i)\right) \\
&= \frac{1}{n} \sum_{i=1}^{n} \Phi(x)^T \Phi(x_i) \\
&= \frac{1}{n} \sum_{i=1}^{n} K(x, x_i)
\end{aligned}
$$

• Replace $K(m, m)$ and $K(m, x)$ with the calculations from the previous slides:

$$
\begin{aligned}
\|\Phi_m - \Phi(x)\|^2 &= K(m, m) + K(x, x) - 2K(m, x) \\
&= \frac{1}{n^2} \sum_{i=1}^{n} \sum_{j=1}^{n} K(x_i, x_j) + K(x, x) - \frac{2}{n} \sum_{i=1}^{n} K(x, x_i)
\end{aligned}
$$

Kernel nearest means algorithm

• Compute kernel
• Let $x_i$ ($i = 0..n-1$) be the training datapoints and $y_i$ ($i = 0..n'-1$) the test datapoints.
• For each mean $m_i$ compute $K(m_i, m_i)$
• For each datapoint $y_i$ in the test set do
  – For each mean $m_j$ do
    • $d_j = K(m_j, m_j) + K(y_i, y_i) - 2K(m_j, y_i)$
  – Assign $y_i$ to the class with the minimum $d_j$
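
The algorithm can be sketched in plain Python as follows. This is a minimal illustration rather than the author's implementation: the function names (`phi`, `kernel`, `kernel_nearest_means`), the toy data, and the choice of kernel are assumptions. The kernel used is the one induced by the example transformation $\Phi(x) = (x_1^2, x_2^2)$, so the kernel-based distances can be compared against distances computed explicitly in feature space.

```python
def phi(x):
    """Explicit feature map from the example: Phi(x) = (x1^2, x2^2).
    Used only to cross-check the kernel-based result."""
    return [v * v for v in x]


def kernel(x, y):
    """K(x, y) = Phi(x)^T Phi(y), computed without building Phi(x) or Phi(y)."""
    return sum((a * a) * (b * b) for a, b in zip(x, y))


def kernel_nearest_means(train, labels, test, k=kernel):
    """Return (predictions, distances), where distances[t][c] is the squared
    feature-space distance between test point t and the mean of class c."""
    classes = sorted(set(labels))
    groups = {c: [x for x, l in zip(train, labels) if l == c] for c in classes}

    # K(m, m) = (1/n^2) * sum_i sum_j K(x_i, x_j), computed once per class mean.
    k_mm = {}
    for c, pts in groups.items():
        n = len(pts)
        k_mm[c] = sum(k(a, b) for a in pts for b in pts) / (n * n)

    predictions, distances = [], []
    for y in test:
        d = {}
        for c, pts in groups.items():
            # K(m, y) = (1/n) * sum_i K(y, x_i)
            k_my = sum(k(y, x) for x in pts) / len(pts)
            # d = K(m, m) + K(y, y) - 2 K(m, y)
            d[c] = k_mm[c] + k(y, y) - 2.0 * k_my
        distances.append(d)
        predictions.append(min(d, key=d.get))  # class with the minimum distance
    return predictions, distances


# Hypothetical toy data: two classes lying along the two axes.
train = [(1.0, 0.0), (2.0, 0.0), (0.0, 1.0), (0.0, 2.0)]
labels = [0, 0, 1, 1]
test = [(3.0, 0.5), (0.5, 3.0)]

predictions, distances = kernel_nearest_means(train, labels, test)
print(predictions)  # [0, 1]
```

Any other valid kernel function could be passed as `k`. The explicit map `phi` is not needed by the algorithm itself and is included only so the result can be checked against a direct computation.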