Spectral Matting


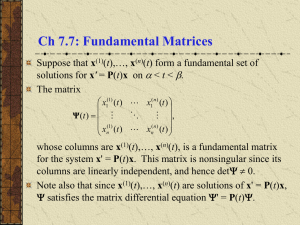

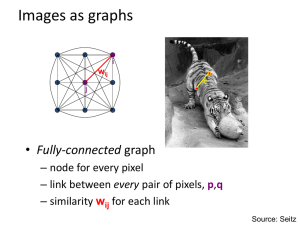

References
• A. Levin, D. Lischinski and Y. Weiss. A Closed Form Solution to Natural Image Matting. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), June 2006, New York.
• A. Levin, A. Rav-Acha, D. Lischinski. Spectral Matting. Best paper award runner up. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), Minneapolis, June 2007.
• A. Levin (1,2), A. Rav-Acha (1), D. Lischinski (1). Spectral Matting. IEEE Trans. Pattern Analysis and Machine Intelligence, Oct 2008.
(1) School of CS&Eng, The Hebrew University. (2) CSAIL, MIT.

Hard segmentation and matting
[Figure: source image; hard segmentation and its compositing result; matte and its compositing result]

Previous approaches to segmentation and matting
• Unsupervised, hard output: spectral segmentation (Shi and Malik 97; Yu and Shi 03; Weiss 99; Ng et al 01; Zelnik and Perona 05; Tolliver and Miller 06)
• Supervised, hard output: Jolly and Boykov 01; Rother et al 04; Li et al 04
• Supervised, matte output (values between 0 and 1): user-guided interfaces, listed next
• Unsupervised, matte output: ?

User-guided interface
• Trimap interface: Bayesian Matting (Chuang et al 01); Poisson Matting (Sun et al 04); Random Walk (Grady et al 05)
• Scribbles interface: Wang&Cohen 05; Levin et al 06; Easy matting (Guan et al 06)
[Figure: scribbles, trimap, matting result]

Generalized compositing equation
• 2 layers compositing: $I_i = \alpha_i F_i + (1 - \alpha_i) B_i$, that is, image = $\alpha \times L^1 + (1 - \alpha) \times L^2$
• K layers compositing: $I_i = \alpha_i^1 L_i^1 + \alpha_i^2 L_i^2 + \dots + \alpha_i^K L_i^K$
• Matting components: $0 \le \alpha_i^k \le 1$ and $\alpha_i^1 + \alpha_i^2 + \dots + \alpha_i^K = 1$
• "Sparse" layers: 0/1 for most image pixels

Unsupervised matting
[Figure: input image and eight automatically computed matting components, numbered 1 to 8]

Building foreground object by simple components addition
[Figure: component + component + component = foreground matte]

Spectral segmentation
Spectral segmentation: analyzing the smallest eigenvectors of a graph Laplacian L.
$$L = D - W, \qquad D(i,i) = \sum_j W(i,j), \qquad W(i,j) = e^{-\|C_i - C_j\|^2 / \sigma^2}$$
E.g.: Shi and Malik 97; Yu and Shi 03; Weiss 99; Ng et al 01; Meila and Shi 01; Zelnik and Perona 05; Tolliver and Miller 06

Problem formulation
• Image = $\alpha \times L^1 + (1 - \alpha) \times L^2$
• Assume a and b are constant in a small window

Derivation of the cost function
$$J(\alpha) = \alpha^T L \alpha$$

The matting Laplacian
$$J(\alpha) = \alpha^T L \alpha$$
• L: semidefinite sparse matrix
• L(i, j): local function of the image

The matting affinity
[Figure: input image and its color distribution]

Matting and spectral segmentation
[Figure: typical affinity function versus matting affinity function]

Eigenvectors of input image
[Figure: input image and its smallest eigenvectors]

Spectral segmentation
• Fully separated classes: class indicator vectors belong to the Laplacian nullspace
• General case: class indicators are approximated as linear combinations of the smallest eigenvectors
• The smallest eigenvectors give the class indicators only up to a linear transformation
[Figure: Laplacian matrix, its zero (smallest) eigenvectors, an $R^{3 \times 3}$ linear transformation, and the resulting binary indicating vectors]

From eigenvectors to matting components
• The matting components are obtained from the smallest eigenvectors by a linear transformation
• Sparsity of matting components: the transformation is chosen to minimize a sparsity cost
• Minimization: Newton's method with initialization
1) Initialization: projection of hard segments. Cluster the smallest eigenvectors with K-means, then project the indicator vector $m^{C^k}$ of each cluster $C^k$ into the eigenvector space: $\alpha^k = E E^T m^{C^k}$
2) Non-linear optimization for sparse components

Extracted matting components
[Figure: extracted matting components]

Brief summary
Construct matting Laplacian ($J(\alpha) = \alpha^T L \alpha$) → smallest eigenvectors → linear transformation → matting components

Grouping components
• Components are added together to form the complete foreground matte
• Two modes: unsupervised matting and user-guided matting

Unsupervised matting
• Matting cost function: $J(\alpha) = \alpha^T L \alpha$
• Hypothesis: generate indicating vector b

Unsupervised matting results
[Figure: results]

User-guided matting
• Graph cut method
• Energy function with a unary term (constrained components) and a pairwise term

Components with the scribble interface
[Figure: comparison of components (our approach), Wang&Cohen 05, Random Walk, Levin et al cvpr06, and Poisson]

Direct component picking interface
Building foreground object by simple components addition
[Figure: component + component + component = foreground matte]

Results
[Figure: results]

Quantitative evaluation
[Figure: quantitative evaluation]

Spectral matting versus obtaining trimaps from a hard segmentation
[Figure: comparison]

Limitations
• Number of eigenvectors [Figure: ground truth matte, matte from 70 eigenvectors, matte from 400 eigenvectors]
• Number of matting components

Conclusion
• Derived an analogy between hard spectral segmentation and image matting
• Automatically extract matting components from eigenvectors
• Automate the matte extraction process and suggest new modes of user interaction
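The spectral segmentation slides state that, for fully separated classes, the class indicator vectors lie in the nullspace of the graph Laplacian L = D − W, and that projecting an indicator onto the smallest eigenvectors (E Eᵀ m) recovers it. The following is a minimal Python sketch of that claim on a toy example. It is an illustration under stated assumptions, not the authors' implementation: it uses the typical color affinity W(i, j) = exp(−‖C_i − C_j‖² / σ²) rather than the matting affinity, treats the image as a 1-D chain of eight grayscale pixels, and the names `graph_laplacian` and `cost` as well as the value σ = 0.1 are hypothetical choices made for this example.

```python
import numpy as np


def graph_laplacian(colors, sigma=0.1):
    """Build L = D - W for a 1-D chain of pixels (assumed toy layout).

    W(i, j) = exp(-||C_i - C_j||^2 / sigma^2) for adjacent pixels, 0 otherwise.
    """
    colors = np.asarray(colors, dtype=float)
    n = colors.shape[0]
    colors = colors.reshape(n, -1)  # one row of color channels per pixel
    W = np.zeros((n, n))
    for i in range(n - 1):
        d2 = np.sum((colors[i] - colors[i + 1]) ** 2)
        W[i, i + 1] = W[i + 1, i] = np.exp(-d2 / sigma ** 2)
    D = np.diag(W.sum(axis=1))  # D(i, i) = sum_j W(i, j)
    return D - W


def cost(L, alpha):
    """Quadratic cost J(alpha) = alpha^T L alpha."""
    return float(alpha @ L @ alpha)


# Toy "image": four dark pixels followed by four bright pixels.
colors = np.array([0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0])
L = graph_laplacian(colors)

# eigh returns eigenvalues in ascending order for a symmetric matrix.
eigvals, eigvecs = np.linalg.eigh(L)

# Hard indicator of the dark class, and a smooth ramp for comparison.
indicator = (colors == 0.0).astype(float)
ramp = np.linspace(0.0, 1.0, len(colors))

# Project the indicator onto the two smallest eigenvectors: E E^T m.
E = eigvecs[:, :2]
projected = E @ (E.T @ indicator)

print("three smallest eigenvalues:", eigvals[:3])
print("J(indicator):", cost(L, indicator))
print("J(ramp):", cost(L, ramp))
print("projection recovers indicator:", np.allclose(projected, indicator, atol=1e-8))
```

Because the two classes are almost perfectly separated by the affinity, the Laplacian has two near-zero eigenvalues, the indicator has a cost close to zero, and a vector that varies inside a class (the ramp) is penalized. With real images the classes are not fully separated, which is why the slides move on to linear combinations of the smallest eigenvectors.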