Chapter 13

Dummy Dependent Variable Techniques

Copyright © 2011 Pearson Addison-Wesley.

All rights reserved.

Slides by Niels-Hugo Blunch

Washington and Lee University

The Linear Probability Model

• The linear probability model simply applies OLS to a regression in which the dependent variable is a dummy (i.e., binary) variable:

$D_i = \beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \epsilon_i$ (13.1)

where $D_i$ is a dummy variable, and the Xs, βs, and ε are typical independent variables, regression coefficients, and an error term, respectively

• The term linear probability model comes from the fact that the

right side of the equation is linear while the expected value of

the left side measures the probability that Di = 1
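A minimal sketch of the idea, assuming a single explanatory variable and a small made-up sample (the data and the helper name `ols_simple` are hypothetical, not from the text): the linear probability model is just the usual OLS line fitted to a 0/1 dependent variable, and each fitted value is read as an estimated probability that Di = 1.

```python
# Hypothetical sample: X is one explanatory variable, D is the 0/1 outcome.
X = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
D = [0, 0, 0, 1, 0, 1, 1, 1]

def ols_simple(x, y):
    """Return (intercept, slope) of the OLS line y = b0 + b1 * x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1

# The linear probability model: plain OLS with the dummy as the dependent variable.
b0, b1 = ols_simple(X, D)

# Each fitted value is interpreted as the estimated probability that D = 1.
D_hat = [b0 + b1 * xi for xi in X]
for xi, dh in zip(X, D_hat):
    print(f"X = {xi:.0f}  estimated P(D = 1) = {dh:.4f}")
```

With more than one explanatory variable the same logic applies, but the coefficients would come from a multiple-regression routine rather than these one-variable formulas.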

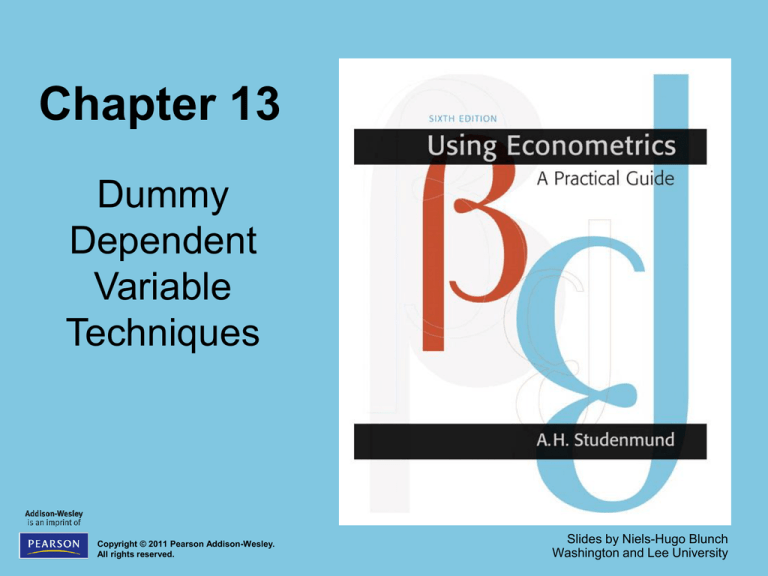

Problems with the Linear Probability Model

1. $R^2$ is not an accurate measure of overall fit:

– $D_i$ can equal only 1 or 0, but $\hat{D}_i$ must move in a continuous fashion from one extreme to the other (as also illustrated in Figure 13.1)

– Hence, $\hat{D}_i$ is likely to be quite different from $D_i$ for some range of $X_i$

– Thus, $R^2$ is likely to be much lower than 1 even if the model actually does an exceptional job of explaining the choices involved

– As an alternative, one can instead use $R_p^2$, a measure based on the percentage of the observations in the sample that a particular estimated equation explains correctly

– To use this approach, consider a $\hat{D}_i$ > .5 to predict that $D_i$ = 1 and a $\hat{D}_i$ < .5 to predict that $D_i$ = 0, and then simply compare these predictions with the actual $D_i$

2. $\hat{D}_i$ is not bounded by 0 and 1:

– The alternative binomial logit model, presented in Section 13.2, will address this issue
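Both problems can be seen in a small numerical sketch. The sample below is hypothetical, and the code follows the simple rule stated on this slide (share of observations predicted correctly); it is an illustration under those assumptions, not the textbook's exact computation. A fitted value of exactly .5 is treated as a prediction of 0, which is an arbitrary choice the slide does not address.

```python
# Hypothetical sample: X is one explanatory variable, D is the 0/1 outcome.
X = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
D = [0, 0, 0, 1, 0, 1, 1, 1]
n = len(X)

# Fit the linear probability model by simple OLS.
x_bar = sum(X) / n
d_bar = sum(D) / n
b1 = sum((x - x_bar) * (d - d_bar) for x, d in zip(X, D)) / sum((x - x_bar) ** 2 for x in X)
b0 = d_bar - b1 * x_bar
D_hat = [b0 + b1 * x for x in X]

# Conventional R^2: penalizes every gap between the continuous fitted values and the 0/1 outcomes.
ssr = sum((d - dh) ** 2 for d, dh in zip(D, D_hat))
sst = sum((d - d_bar) ** 2 for d in D)
r2 = 1.0 - ssr / sst

# R_p^2 as described above: predict D = 1 when D_hat > .5, else 0 (assumption for ties),
# then take the share of observations predicted correctly.
predicted = [1 if dh > 0.5 else 0 for dh in D_hat]
r2_p = sum(p == d for p, d in zip(predicted, D)) / n

# Problem 2: fitted "probabilities" that fall outside the interval from 0 to 1.
out_of_bounds = [dh for dh in D_hat if dh < 0.0 or dh > 1.0]

print(f"R^2 = {r2:.4f}, R_p^2 = {r2_p:.4f}")
print("fitted values outside [0, 1]:", [round(dh, 4) for dh in out_of_bounds])
```

In this sample the equation predicts six of the eight observations correctly even though the conventional $R^2$ is well below that share, and the fitted values at the smallest and largest X fall below 0 and above 1.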

Figure 13.1 A Linear Probability Model

The Binomial Logit Model

• The binomial logit is an estimation technique for equations with dummy dependent variables that avoids the unboundedness problem of the linear probability model

• It does so by using a variant of the cumulative logistic function:

$D_i = \frac{1}{1 + e^{-[\beta_0 + \beta_1 X_{1i} + \beta_2 X_{2i} + \epsilon_i]}}$ (13.7)

• Logits cannot be estimated using OLS but are instead estimated by maximum likelihood (ML), an iterative estimation technique that is especially useful for equations that are nonlinear in the coefficients

• Again, for the logit model $\hat{D}_i$ is bounded by 1 and 0

• This is illustrated by Figure 13.2

Figure 13.2 $\hat{D}_i$ Is Bounded by 0 and 1 in a Binomial Logit Model
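To make "iterative estimation" concrete, here is a rough sketch of maximum likelihood for a one-variable logit using Newton-Raphson steps. The sample, the function names (`logistic`, `score`, `fit_logit`), and the choice of Newton-Raphson are all assumptions made for illustration; statistical packages may use other iterative schemes. The sample is deliberately not perfectly separated by X, since otherwise the ML estimates would not be finite.

```python
import math

def logistic(z):
    """Cumulative logistic function 1 / (1 + e^(-z)), bounded by 0 and 1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # rewritten form avoids overflow for very negative z
    return e / (1.0 + e)

# Hypothetical sample: X is one explanatory variable, D is the 0/1 outcome.
X = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
D = [0, 0, 0, 1, 0, 1, 1, 1]

def score(b0, b1):
    """Gradient of the log-likelihood with respect to (b0, b1)."""
    resid = [d - logistic(b0 + b1 * x) for x, d in zip(X, D)]
    return sum(resid), sum(r * x for r, x in zip(resid, X))

def fit_logit(max_iter=50, tol=1e-10):
    """Maximize the log-likelihood by Newton-Raphson, starting from zero."""
    b0, b1 = 0.0, 0.0
    for _ in range(max_iter):
        g0, g1 = score(b0, b1)
        # The weights p(1 - p) build the negative Hessian of the log-likelihood.
        p = [logistic(b0 + b1 * x) for x in X]
        w = [pi * (1.0 - pi) for pi in p]
        h00 = sum(w)
        h01 = sum(wi * x for wi, x in zip(w, X))
        h11 = sum(wi * x * x for wi, x in zip(w, X))
        det = h00 * h11 - h01 * h01
        # Newton step: solve the 2x2 system H * step = gradient.
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0, b1 = b0 + step0, b1 + step1
        if abs(step0) < tol and abs(step1) < tol:
            break
    return b0, b1

b0_hat, b1_hat = fit_logit()
D_hat = [logistic(b0_hat + b1_hat * x) for x in X]
print(f"b0_hat = {b0_hat:.4f}, b1_hat = {b1_hat:.4f}")
print("fitted probabilities:", [round(p, 4) for p in D_hat])
```

Unlike the fitted values of a linear probability model, every fitted value here lies between 0 and 1, because the linear index is passed through the logistic function before it is interpreted as a probability.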

Interpreting Estimated Logit Coefficients

• The signs of the coefficients in the logit model have the same meaning as in the linear probability (i.e., OLS) model

• The interpretation of the magnitude of the coefficients differs, though, because the dependent variable has changed dramatically

• That the “marginal effects” are not constant can be seen from Figure 13.2: the slope (i.e., the change in probability) of the graph of the logit changes as $\hat{D}_i$ moves from 0 to 1

• We’ll consider three ways to interpret logit coefficients meaningfully:

Interpreting Estimated Logit Coefficients (cont.)

1. Change an average observation:

– Create an “average” observation by plugging the means of all the independent variables into the estimated logit equation and then calculating an “average” $\hat{D}_i$

– Then increase the independent variable of interest by one unit and recalculate the $\hat{D}_i$

– The difference between the two $\hat{D}_i$s then gives the marginal effect

2. Use a partial derivative:

– Taking a derivative of the logit yields the result that the change in the expected value of $\hat{D}_i$ caused by a one-unit increase in $X_{1i}$, holding constant the other independent variables in the equation, equals $\hat{\beta}_1 \hat{D}_i (1 - \hat{D}_i)$

– To use this formula, simply plug in your estimates $\hat{\beta}_1$ and $\hat{D}_i$

– From this, again, the marginal impact of X does indeed depend on the value of $\hat{D}_i$

3. Use a rough estimate of 0.25:

– Plugging $\hat{D}_i$ = 0.5 into the previous equation, we get the (more handy!) result that multiplying a logit coefficient by 0.25 (or dividing by 4) yields an equivalent linear probability model coefficient
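The three approaches above can be compared side by side in a short sketch. The coefficient values and the sample mean below are invented purely for illustration (they are not estimates from the text), and the one-variable setup is an assumption made to keep the arithmetic visible.

```python
import math

def logistic(z):
    """Cumulative logistic function 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical estimated logit: index = b0_hat + b1_hat * X, with X evaluated at its sample mean.
b0_hat = -2.0
b1_hat = 0.5
x_mean = 3.0

# 1. Change an average observation: compute D_hat at the mean, raise X by one unit, take the difference.
d_hat_avg = logistic(b0_hat + b1_hat * x_mean)
d_hat_plus_one = logistic(b0_hat + b1_hat * (x_mean + 1.0))
effect_average_obs = d_hat_plus_one - d_hat_avg

# 2. Use a partial derivative: b1_hat * D_hat * (1 - D_hat), evaluated at the average observation.
effect_derivative = b1_hat * d_hat_avg * (1.0 - d_hat_avg)

# 3. Use a rough estimate of 0.25: set D_hat = 0.5 in the derivative, which gives b1_hat / 4.
effect_rough = 0.25 * b1_hat

print(f"average observation: {effect_average_obs:.4f}")
print(f"partial derivative:  {effect_derivative:.4f}")
print(f"0.25 rule of thumb:  {effect_rough:.4f}")
```

The three numbers are close but not identical: the first is a discrete one-unit change, the second is a slope at a single point, and the third is that slope evaluated where it is steepest ($\hat{D}_i$ = 0.5).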

Other Dummy Dependent Variable Techniques

• The Binomial Probit Model:

– Similar to the logit model, this is an estimation technique for equations with dummy dependent variables that avoids the unboundedness problem of the linear probability model

– However, rather than the logistic function, this model uses a variant of the cumulative normal distribution

• The Multinomial Logit Model:

– Sometimes there are more than two qualitative choices available

– The sequential binary model estimates such choices as a series of binary decisions

– If the choice is made simultaneously, however, this is not appropriate

– The multinomial logit is developed specifically for the case in which there are more than two qualitative choices and the choice is made simultaneously
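The slides do not give functional forms for these two models, so the sketch below relies on their standard textbook definitions as an assumption: the probit passes the linear index through the standard normal cumulative distribution, and the multinomial logit assigns each alternative a probability proportional to the exponential of its index, with one base alternative normalized to an index of zero. All function names and index values are hypothetical.

```python
import math

def logistic(z):
    """Cumulative logistic function, used by the binomial logit."""
    return 1.0 / (1.0 + math.exp(-z))

def normal_cdf(z):
    """Standard normal cumulative distribution, used by the binomial probit."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def multinomial_logit_probs(indexes):
    """Choice probabilities for a base alternative (index 0) plus the given alternatives.

    `indexes` holds the linear index of each non-base alternative.
    """
    z = [0.0] + list(indexes)
    z_max = max(z)  # subtracting the maximum keeps exp() from overflowing
    exp_z = [math.exp(v - z_max) for v in z]
    total = sum(exp_z)
    return [e / total for e in exp_z]

# Both binary models map any index into the interval from 0 to 1.
for z in (-3.0, 0.0, 3.0):
    print(f"index {z:+.1f}: logit {logistic(z):.4f}, probit {normal_cdf(z):.4f}")

# Three simultaneous choices: a base alternative and two others with hypothetical indexes.
probs = multinomial_logit_probs([0.4, -1.2])
print("multinomial logit probabilities:", [round(p, 4) for p in probs])
```

With only one non-base alternative, the multinomial formula reduces to the binomial logit, which is one way to see the two models as members of the same family.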

Key Terms from Chapter 13

• Linear probability model

• $R_p^2$

• Binomial logit model

• The interpretation of an estimated logit coefficient

• Binomial probit model

• Sequential binary model

• Multinomial logit model
