Quadratic Forms, Characteristic Roots and Characteristic Vectors

Mohammed Nasser

Professor, Dept. of Statistics, RU, Bangladesh

Email: mnasser.ru@gmail.com

"The use of matrix theory is now widespread ... are essential in ... modern treatment of univariate and multivariate statistical methods."

C. R. Rao

Contents

Linear Map and Matrices

Quadratic Forms and Its Applications in MM

Classification of Quadratic Forms

Quadratic Forms and Inner Product

Definitions of Characteristic Roots and Characteristic Vectors

Geometric Interpretations

Properties of Grammian Matrices

Spectral Decomposition and Applications

Matrix Inequalities and Maximization

Computations


Relation between MM (ML) and Vector Space

| Statistical Concepts/Techniques | Concepts in Vector Space |
|---|---|
| Variance | Length of a vector, quadratic forms |
| Covariance | Dot product of two vectors |
| Correlation | Angle between two vectors |
| Regression and Classification | Mapping between two vector spaces |
| PCA/LDA/CCA | Orthogonal/oblique projection onto a lower dimension |

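Some Vector Concepts

• Dot product = scalar

$$x^T y = \begin{bmatrix} x_1 & x_2 & \cdots & x_n \end{bmatrix} \begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{bmatrix} = \sum_{i=1}^{n} x_i y_i$$

For $n = 3$:

$$x^T y = \begin{bmatrix} x_1 & x_2 & x_3 \end{bmatrix} \begin{bmatrix} y_1 \\ y_2 \\ y_3 \end{bmatrix} = x_1 y_1 + x_2 y_2 + x_3 y_3 = \sum_{i=1}^{3} x_i y_i$$

• Length of a vector

$$\|x\| = (x_1^2 + x_2^2 + x_3^2)^{1/2}$$

Inner product of a vector with itself = (vector length)$^2$:

$$x^T x = x_1^2 + x_2^2 + x_3^2 = \|x\|^2$$

Pythagoras' theorem (right-angle triangle with legs $x_1$, $x_2$ and hypotenuse $\|x\|$):

$$\|x\| = (x_1^2 + x_2^2)^{1/2}$$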

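Some Vector Concepts

• Angle between two vectors

Let $x = (x_1, x_2)$ make angle $\theta_1$ and $y = (y_1, y_2)$ make angle $\theta_2$ with the first axis, and let $\theta = \theta_2 - \theta_1$ be the angle between them. Then

$$\cos\theta_1 = \frac{x_1}{\|x\|}, \quad \sin\theta_1 = \frac{x_2}{\|x\|}, \quad \cos\theta_2 = \frac{y_1}{\|y\|}, \quad \sin\theta_2 = \frac{y_2}{\|y\|}$$

$$\cos\theta = \cos(\theta_2 - \theta_1) = \cos\theta_2\cos\theta_1 + \sin\theta_2\sin\theta_1 = \frac{y_1 x_1 + y_2 x_2}{\|x\|\,\|y\|} = \frac{x^T y}{\|x\|\,\|y\|}$$

$$x^T y = \|x\|\,\|y\|\cos\theta$$

Orthogonal vectors: $x^T y = 0$, i.e. $\theta = \pi/2$.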
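The three vector concepts above (dot product, length, angle) can be checked numerically. The following is a minimal R sketch; the vectors `x` and `y` are hypothetical values chosen for illustration and do not come from the slides.

```r
# Hypothetical example vectors (not from the slides)
x <- c(3, 4)
y <- c(4, -3)

dot   <- sum(x * y)          # dot product x^T y
len_x <- sqrt(sum(x^2))      # length ||x||
len_y <- sqrt(sum(y^2))      # length ||y||

cos_theta <- dot / (len_x * len_y)   # cos(theta) = x^T y / (||x|| ||y||)
theta     <- acos(cos_theta)         # angle in radians

# x and y are orthogonal here: the dot product is 0 and theta is pi/2
c(dot = dot, len_x = len_x, theta = theta)
```

Because `dot` is zero, this pair illustrates the orthogonality condition; replacing `y` by any other vector gives the general angle.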

Linear Map and Matrices

Linear mappings are almost omnipresent.

If both the domain and the co-domain are finite-dimensional vector spaces, each linear mapping can be uniquely represented by a matrix with respect to a specific pair of bases.

We intend to study the properties of a linear mapping through the properties of its matrix.

Linear map and Matrices

This isomorphism is basis dependent.

Linear map and Matrices

Let A be similar to B, i.e. $B = P^{-1}AP$ for some nonsingular P.

Similarity defines an equivalence relation on the vector space of square matrices of order n, i.e. it partitions the vector space into different equivalence classes.

Each equivalence class represents a unique linear operator.

How can we choose

i) the simplest matrix in each equivalence class, and

ii) the one of special interest?

Linear map and Matrices

Two matrices representing the same linear transformation

with respect to different bases must be similar.

A major concern of ours is to make the best choice of basis,

so that the linear operator with which we are working will

have a representing matrix in the chosen basis that is as

simple as possible.

A diagonal matrix is very useful. For example, if $D = P^{-1}AP$ is diagonal, then

$$D^n = P^{-1}A^nP,$$

so powers of A reduce to powers of the diagonal entries of D.

Linear map and Matrices

Each equivalence class represents a unique linear operator.

Can we characterize the class in a simpler way?

Yes, we can, under extra conditions.

The concept of characteristic roots plays an important role in this regard.

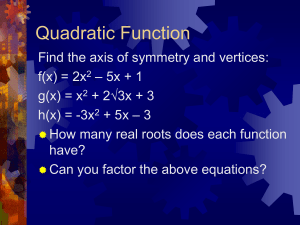

Quadratic Form

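Definition: The quadratic form in n variables $x_1, x_2, \dots, x_n$ is the general homogeneous function of second degree in the variables:

$$Y = f(x_1, x_2, \dots, x_n) = \sum_{i=1}^{n}\sum_{j=1}^{n} a_{ij} x_i x_j$$

In matrix notation, the quadratic form is given by

$$Y = \begin{bmatrix} x_1 & x_2 & \cdots & x_n \end{bmatrix} \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix} = x^T A x$$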

Examples of Some Quadratic Forms

1. $Y = x^T A x = 2x_1^2 + 6x_1x_2 + 7x_2^2$

2. $Y = x^T A x = 21x_1^2 + 11x_2^2 + 2x_3^2 + 30x_1x_2 + 8x_2x_3 + 12x_3x_1$

3. $Y = x^T A x = a_1x_1^2 + a_2x_2^2 + \dots + a_nx_n^2$ (standard form)

What are its uses?

Many different matrices A give the same quadratic form. To make A unique, it is customary to write A as a symmetric matrix.

In Fact Infinite A’s

• For example 1 we have to take $a_{12}$ and $a_{21}$ such that $a_{12} + a_{21} = 6$.

• We can do it in infinitely many ways.

• Symmetric A: the unique symmetric choice is $a_{12} = a_{21} = 3$.
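The non-uniqueness of A can be seen numerically. The sketch below is illustrative only: the helper `qf`, the diagonal entries 2 and 7, and the test point `x` are assumptions made for the example. It builds two non-symmetric matrices with $a_{12} + a_{21} = 6$ and the symmetric representative $(A + A^T)/2$, and shows that all three give the same value of $x^T A x$.

```r
# Hypothetical helper: evaluate the quadratic form x^T A x
qf <- function(A, x) drop(t(x) %*% A %*% x)

# matrix() fills column by column
A1 <- matrix(c(2, 1, 5, 7), nrow = 2)  # a12 = 5, a21 = 1
A2 <- matrix(c(2, 6, 0, 7), nrow = 2)  # a12 = 0, a21 = 6
As <- (A1 + t(A1)) / 2                 # symmetric version, a12 = a21 = 3

x <- c(1.5, -2)                        # arbitrary test point
vals <- c(qf(A1, x), qf(A2, x), qf(As, x))
vals                                   # all three values agree
```

Symmetrizing with `(A + t(A)) / 2` never changes the form, which is why the symmetric matrix can serve as the canonical representative.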

Its Importance in Statistics

Variance is a fundamental concept in statistics. It is nothing but a quadratic form with an idempotent matrix of rank (n-1):

$$\frac{1}{n}\sum_{i=1}^{n}(x_i - \bar{x})^2 = \frac{1}{n}\,x^T A x, \quad \text{where } A = I_n - \frac{1}{n}\mathbf{1}\mathbf{1}^T$$

Quadratic forms play a central role in multivariate statistical analysis, for example in principal component analysis, factor analysis and discriminant analysis.
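The identity between the variance and the quadratic form with the centering matrix can be verified directly. This is a small R sketch with hypothetical data `x`; it uses the divisor n, as in the formula above, rather than the n - 1 used by R's `var()`.

```r
x <- c(2, 4, 4, 5, 7, 9)               # hypothetical data
n <- length(x)

A <- diag(n) - matrix(1, n, n) / n     # centering matrix I_n - (1/n) 1 1^T

qf_var     <- drop(t(x) %*% A %*% x) / n   # (1/n) x^T A x
direct_var <- mean((x - mean(x))^2)        # (1/n) sum (x_i - xbar)^2

is_idempotent <- isTRUE(all.equal(A %*% A, A))
trace_A <- sum(diag(A))                # for an idempotent matrix, rank = trace = n - 1

c(qf_var = qf_var, direct_var = direct_var, trace_A = trace_A)
```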

Its Importance in Statistics

Multivariate Gaussian
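The formula on this slide did not survive extraction. As a reminder of where the quadratic form enters, the standard density of a p-variate normal distribution with mean $\mu$ and positive definite covariance matrix $\Sigma$ is given below; the notation is the usual textbook one and is an assumption about what the slide showed.

$$f(x) = (2\pi)^{-p/2}\,|\Sigma|^{-1/2}\exp\left\{-\tfrac{1}{2}(x - \mu)^T \Sigma^{-1} (x - \mu)\right\}$$

The exponent is a quadratic form in $x - \mu$ with matrix $\Sigma^{-1}$, so the contours of constant density are the sets where this quadratic form is constant.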


Its Importance in Statistics

Bivariate Gaussian


Spherical, diagonal, full covariance


Quadratic Form as Inner Product

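Length of $Y$: $\|Y\| = (Y^TY)^{1/2}$; $X^TY$ is the dot product of $X$ and $Y$.

$$X^TAX = (A^TX)^TX = X^T(AX) = X^TY, \quad \text{where } Y = AX$$

• Let $A = C^TC$. Then

$$X^TAX = X^TC^TCX = (CX)^TCX = Y^TY, \quad \text{where now } Y = CX$$

$$X^TAY = X^TC^TCY = (CX)^TCY = W^TZ, \quad \text{where } W = CX,\ Z = CY$$

What is its geometric meaning?

Different nonsingular matrices C represent different inner products.

Different inner products give different geometries.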

Euclidean Distance and Mathematical Distance

• The usual human concept of distance is Euclidean distance.

• Each coordinate contributes equally to the distance.

$$P = (x_1, x_2, \dots, x_p), \quad Q = (y_1, y_2, \dots, y_p)$$

$$d(P, Q) = \sqrt{(x_1 - y_1)^2 + (x_2 - y_2)^2 + \dots + (x_p - y_p)^2}$$

Mathematicians, generalizing its three properties,

1) $d(P,Q) = d(Q,P)$,

2) $d(P,Q) = 0$ if and only if $P = Q$, and

3) $d(P,Q) \le d(P,R) + d(R,Q)$ for all R,

define distance on any set.

Statistical Distance

• Weight coordinates subject to a great deal of variability

less heavily than those that are not highly variable

Which point is nearer to the data set, if the data set were a single point?

Statistical Distance for Uncorrelated Data

$$P = (x_1, x_2), \quad O = (0, 0)$$

$$x_1^* = x_1/\sqrt{s_{11}}, \quad x_2^* = x_2/\sqrt{s_{22}}$$

$$d(O, P) = \sqrt{(x_1^*)^2 + (x_2^*)^2} = \sqrt{\frac{x_1^2}{s_{11}} + \frac{x_2^2}{s_{22}}}$$

Ellipse of Constant Statistical Distance for

Uncorrelated Data

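[Figure: ellipse centered at 0 with axes along $x_1$ and $x_2$, crossing the $x_1$ axis at $\pm c\sqrt{s_{11}}$ and the $x_2$ axis at $\pm c\sqrt{s_{22}}$.]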

Scatter Plot for Correlated Measurements

Statistical Distance under Rotated

Coordinate System

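$$O = (0, 0), \quad P = (\tilde{x}_1, \tilde{x}_2)$$

$$d(O, P) = \sqrt{\frac{\tilde{x}_1^2}{\tilde{s}_{11}} + \frac{\tilde{x}_2^2}{\tilde{s}_{22}}}$$

$$\tilde{x}_1 = x_1\cos\theta + x_2\sin\theta, \quad \tilde{x}_2 = -x_1\sin\theta + x_2\cos\theta$$

$$d(O, P) = \sqrt{a_{11}x_1^2 + 2a_{12}x_1x_2 + a_{22}x_2^2}$$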

General Statistical Distance

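$$P = (x_1, x_2, \dots, x_p), \quad O = (0, 0, \dots, 0), \quad Q = (y_1, y_2, \dots, y_p)$$

$$d(O, P) = \sqrt{a_{11}x_1^2 + a_{22}x_2^2 + \dots + a_{pp}x_p^2 + 2a_{12}x_1x_2 + 2a_{13}x_1x_3 + \dots + 2a_{p-1,p}x_{p-1}x_p}$$

$$d(P, Q) = \sqrt{\begin{aligned} & a_{11}(x_1 - y_1)^2 + a_{22}(x_2 - y_2)^2 + \dots + a_{pp}(x_p - y_p)^2 \\ & + 2a_{12}(x_1 - y_1)(x_2 - y_2) + 2a_{13}(x_1 - y_1)(x_3 - y_3) + \dots + 2a_{p-1,p}(x_{p-1} - y_{p-1})(x_p - y_p) \end{aligned}}$$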

Necessity of Statistical Distance


Mahalanobis Distance

Population version:

$$MD(x, \mu, \Sigma) = \sqrt{(x - \mu)^T \Sigma^{-1} (x - \mu)}$$

Sample version:

$$MD(x, \bar{x}, S) = \sqrt{(x - \bar{x})^T S^{-1} (x - \bar{x})}$$

We can robustify it by using robust estimators of the location and scatter functionals.
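A short R sketch of the sample version follows. The data matrix `X` and the point `x0` are hypothetical. Base R's `mahalanobis()` returns the squared distance, so its square root is taken to match the definition written with a square root.

```r
# Hypothetical bivariate sample: 5 observations, 2 variables
X <- cbind(c(2, 4, 6, 8, 10), c(1, 3, 2, 5, 4))

xbar <- colMeans(X)   # sample mean vector
S    <- cov(X)        # sample covariance matrix (divisor n - 1)

x0 <- c(6, 5)         # point whose distance from the centre is wanted
d  <- x0 - xbar

md_manual  <- sqrt(drop(t(d) %*% solve(S) %*% d))               # quadratic form by hand
md_builtin <- sqrt(mahalanobis(x0, center = xbar, cov = S))     # stats::mahalanobis gives MD^2

c(md_manual, md_builtin)
```

A robust variant would replace `xbar` and `S` by robust estimates of location and scatter; which estimator to use is left open here.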

Classification of Quadratic Form

• Chart:

Quadratic Form

– Definite

  – Positive Definite

  – Positive Semi-definite

  – Negative Definite

  – Negative Semi-definite

– Indefinite

Classification of Quadratic Form

Definitions

1. Positive Definite: A quadratic form $Y = x^TAx$ is said to be positive definite iff $Y = x^TAx > 0$ for all $x \ne 0$. Then the matrix A is said to be a positive definite matrix.

2. Positive Semi-definite: A quadratic form $Y = x^TAx$ is said to be positive semi-definite iff $Y = x^TAx \ge 0$ for all $x \ne 0$ and there exists $x \ne 0$ such that $x^TAx = 0$. Then the matrix A is said to be a positive semi-definite matrix.

3. Negative Definite: A quadratic form $Y = x^TAx$ is said to be negative definite iff $Y = x^TAx < 0$ for all $x \ne 0$. Then the matrix A is said to be a negative definite matrix.

Classification of Quadratic Form

Definitions

4. Negative Semi-definite: A quadratic form $Y = x^TAx$ is said to be negative semi-definite iff $Y = x^TAx \le 0$ for all $x \ne 0$ and there exists $x \ne 0$ such that $x^TAx = 0$. The matrix A is said to be a negative semi-definite matrix.

Indefinite: Quadratic forms and their associated symmetric matrices need not be definite or semi-definite in any of the above senses. In this case the quadratic form is said to be indefinite; that is, it can be negative, zero or positive depending on the values of x.

Two Theorems On Quadratic Form

Theorem 1: A quadratic form can always be expressed with respect to a given coordinate system as

$$Y = x^TAx,$$

where A is a unique symmetric matrix.

Theorem 2: Two symmetric matrices A and B represent the same quadratic form (with respect to coordinate systems related by $x = Pz$) if and only if

$$B = P^TAP,$$

where P is a non-singular matrix.

Classification of Quadratic Form

Importance of Standard Form

From the standard form we can easily classify a quadratic form.

$$X^TAX = \sum_{i=1}^{n} a_i x_i^2$$

is

• positive definite if $a_i > 0$ for all i;

• positive semi-definite if $a_i > 0$ for some i and $a_i = 0$ for the others;

• negative definite if $a_i < 0$ for all i;

• negative semi-definite if $a_i < 0$ for some i and $a_i = 0$ for the others;

• indefinite if some $a_i$ are positive and some are negative.

Classification of Quadratic Form

Importance of Standard Form

That is why, using a suitable nonsingular transformation (why nonsingular?), we try to transform a general $X^TAX$ into a standard form.

If we can find a nonsingular matrix P such that

$$P^TAP = D, \quad D \text{ a diagonal matrix},$$

we can easily classify it. We can do it

i) by a congruent transformation, or

ii) using eigenvalues and eigenvectors.

Method ii) is the one mostly used in MM.

Classification of Quadratic Form

Importance of Determinant, Eigen Values and

Diagonal Element

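1. Positive Definite: (a) A quadratic form $Y = x^TAx$ is positive definite iff the nested principal minors of A satisfy

$$a_{11} > 0, \quad \begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix} > 0, \quad \begin{vmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{vmatrix} > 0, \ \dots, \ \begin{vmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{vmatrix} > 0$$

Evidently a matrix A is positive definite only if det(A) > 0.

(b) A quadratic form $Y = x^TAx$ is positive definite iff all the eigenvalues of A are positive.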

Classification of Quadratic Form

Importance of Determinant, Eigen Values and

Diagonal Element

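2. Positive Semi-definite: (a) A quadratic form $Y = x^TAx$ is positive semi-definite only if the nested principal minors of A satisfy

$$a_{11} \ge 0, \quad \begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix} \ge 0, \ \dots, \ \begin{vmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{vmatrix} \ge 0$$

Nonnegative nested minors alone are not sufficient: the full criterion requires every principal minor of A to be nonnegative. For example, $A = \mathrm{diag}(0, -1)$ has nested minors 0 and 0 but is not positive semi-definite.

(b) A quadratic form $Y = x^TAx$ is positive semi-definite iff all the eigenvalues of A are nonnegative and at least one of them is zero.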

Continued

3. Negative Definite: (a) A quadratic form $Y = x^TAx$ is negative definite iff the nested principal minors of A alternate in sign, starting with a negative one:

$$a_{11} < 0, \quad \begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix} > 0, \quad \begin{vmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{vmatrix} < 0, \ \dots$$

Evidently a matrix A is negative definite only if $(-1)^n \det(A) > 0$, where det(A) is either negative or positive depending on the order n of A.

(b) A quadratic form $Y = x^TAx$ is negative definite iff all the eigenvalues of A are negative.

Continued

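4. Negative Semi-definite: (a) A quadratic form $Y = x^TAx$ is negative semi-definite only if the nested principal minors of A satisfy

$$a_{11} \le 0, \quad \begin{vmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{vmatrix} \ge 0, \quad \begin{vmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{vmatrix} \le 0, \ \dots$$

Evidently a matrix A is negative semi-definite only if $(-1)^n |A| \ge 0$, that is, det(A) ≥ 0 (det(A) ≤ 0) when n is even (odd). As in the positive case, these conditions on the nested minors are necessary but not sufficient; a sufficient test must check all principal minors.

(b) A quadratic form $Y = x^TAx$ is negative semi-definite iff all the eigenvalues of A are nonpositive and at least one of them is zero.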
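The eigenvalue criteria (b) in items 1 to 4 can be turned into a small classifier. The function below is a sketch, not a library routine: the name `classify_qf` and the tolerance `tol` used to decide when an eigenvalue counts as zero are assumptions. It follows the definitions of this deck, in which "semi-definite" requires at least one zero eigenvalue.

```r
# Hypothetical helper: classify a symmetric matrix by the signs of its eigenvalues
classify_qf <- function(A, tol = 1e-8) {
  stopifnot(isSymmetric(A))
  ev <- eigen(A, symmetric = TRUE, only.values = TRUE)$values
  has_pos  <- any(ev >  tol)
  has_neg  <- any(ev < -tol)
  has_zero <- any(abs(ev) <= tol)

  if (has_pos && has_neg) return("indefinite")
  if (has_pos) return(if (has_zero) "positive semi-definite" else "positive definite")
  if (has_neg) return(if (has_zero) "negative semi-definite" else "negative definite")
  "zero form"   # all eigenvalues are (numerically) zero
}

classify_qf(matrix(c(2, 1, 1, 2), nrow = 2))   # eigenvalues 3 and 1
classify_qf(matrix(c(1, 2, 2, 4), nrow = 2))   # eigenvalues 5 and 0
classify_qf(diag(c(1, -1)))                    # eigenvalues 1 and -1
classify_qf(diag(c(0, -1)))                    # eigenvalues 0 and -1
```

The last call is the counterexample to the nested-minor test for semi-definiteness: both nested minors of `diag(c(0, -1))` are zero, yet the form is negative semi-definite, not positive semi-definite.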

Theorem on Quadratic Form

(Congruent Transformation)

If $Y = x^TAx$ is a real quadratic form of n variables $x_1, x_2, \dots, x_n$ and rank r, i.e. $\rho(A) = r$, then there exists a nonsingular matrix P of order n such that $x = Pz$ will convert Y to the canonical form

$$\lambda_1 z_1^2 + \lambda_2 z_2^2 + \dots + \lambda_r z_r^2,$$

where $\lambda_1, \lambda_2, \dots, \lambda_r$ are all different from zero.

That implies

$$X^TAX = Z^TP^TAPZ = Z^TDZ, \quad D = \mathrm{diag}\{\lambda_1, \dots, \lambda_r, 0, \dots, 0\}$$

Grammian (Gram) Matrix

If A is an m×n matrix, then the matrix $S = A^TA$ is called the Grammian matrix of A. S is a symmetric n-rowed matrix.

Properties

a. Every positive definite or positive semi-definite matrix can be represented as a Grammian matrix.

b. The Grammian matrix $A^TA$ is always positive definite or positive semi-definite according as the rank of A is equal to or less than the number of columns of A.

c. $\rho(A) = \rho(A^TA) = \rho(AA^T) = r$

d. If $A^TA = 0$ then $A = 0$.
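Properties b and c can be illustrated numerically. In this R sketch the matrices `A` (full column rank) and `B` (one dependent column) are hypothetical; `crossprod(A)` is base R's shorthand for `t(A) %*% A`.

```r
A <- matrix(c(1, 2, 3, 4, 5, 6), nrow = 3)   # 3 x 2, full column rank
S <- crossprod(A)                            # Gram matrix A^T A (2 x 2)

B <- cbind(A, A[, 1] + A[, 2])               # 3 x 3, third column is dependent
G <- crossprod(B)                            # Gram matrix B^T B (3 x 3)

min_eig_S <- min(eigen(S, symmetric = TRUE)$values)  # > 0: positive definite
min_eig_G <- min(eigen(G, symmetric = TRUE)$values)  # about 0: positive semi-definite

ranks <- c(A = qr(A)$rank, S = qr(S)$rank, B = qr(B)$rank, G = qr(G)$rank)
ranks
```

The rank of each Gram matrix matches the rank of the matrix it was built from, and the smallest eigenvalue is strictly positive only in the full column rank case.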

What are eigenvalues?

• Given a matrix A, x is an eigenvector and λ is the corresponding eigenvalue if Ax = λx

– A must be square and the determinant of A - λI must be equal to zero

Ax - λx = 0 ⇒ (A - λI) x = 0

• The trivial solution is x = 0

• A nontrivial solution occurs when det(A - λI) = 0

• Are eigenvectors unique?

– If x is an eigenvector, then βx (β ≠ 0) is also an eigenvector and λ is still the eigenvalue of A:

A(βx) = β(Ax) = β(λx) = λ(βx)

Calculating the Eigenvectors/values

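• Expand det(A - λI) = 0 for a 2 × 2 matrix

$$\det(A - \lambda I) = \det\left( \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix} - \lambda \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \right) = \det \begin{bmatrix} a_{11} - \lambda & a_{12} \\ a_{21} & a_{22} - \lambda \end{bmatrix} = 0$$

$$(a_{11} - \lambda)(a_{22} - \lambda) - a_{12}a_{21} = 0$$

$$\lambda^2 - (a_{11} + a_{22})\lambda + (a_{11}a_{22} - a_{12}a_{21}) = 0$$

• For a 2 × 2 matrix, this is a simple quadratic equation with two solutions (maybe complex)

$$\lambda = \frac{1}{2}\left\{ (a_{11} + a_{22}) \pm \sqrt{(a_{11} + a_{22})^2 - 4(a_{11}a_{22} - a_{12}a_{21})} \right\}$$

• This "characteristic equation" is solved for λ; each root is then substituted into (A - λI)x = 0 to solve for x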

Eigenvalue example

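• Consider

$$A = \begin{bmatrix} 1 & 2 \\ 2 & 4 \end{bmatrix}$$

$$\lambda^2 - (a_{11} + a_{22})\lambda + (a_{11}a_{22} - a_{12}a_{21}) = 0$$

$$\lambda^2 - (1 + 4)\lambda + (1 \cdot 4 - 2 \cdot 2) = 0 \ \Rightarrow\ \lambda^2 - 5\lambda = 0 \ \Rightarrow\ \lambda = 0,\ 5$$

• The corresponding eigenvectors can be computed as

$$\lambda = 0: \quad \begin{bmatrix} 1 & 2 \\ 2 & 4 \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix} \ \Rightarrow\ x + 2y = 0,\ \ 2x + 4y = 0$$

$$\lambda = 5: \quad \begin{bmatrix} -4 & 2 \\ 2 & -1 \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix} \ \Rightarrow\ -4x + 2y = 0,\ \ 2x - y = 0$$

– For λ = 0, one possible solution is x = (2, -1)

– For λ = 5, one possible solution is x = (1, 2)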
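The worked example can be checked in R with `eigen()`. This is only a verification sketch; note that `eigen()` returns unit-length eigenvectors, so they are scalar multiples of (1, 2) and (2, -1) rather than those vectors themselves.

```r
A <- matrix(c(1, 2, 2, 4), nrow = 2)

e <- eigen(A, symmetric = TRUE)
e$values                 # 5 and 0, up to rounding error

v0 <- c(2, -1)           # hand-computed eigenvector for lambda = 0
v5 <- c(1, 2)            # hand-computed eigenvector for lambda = 5

Av0 <- drop(A %*% v0)    # equals 0 * v0
Av5 <- drop(A %*% v5)    # equals 5 * v5
```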

Geometric Interpretation of eigen roots and

vectors

We know from the definition of eigen roots and vectors that

Ax = λx, (**)

where A is an m×m matrix, x is an m-tuple vector and λ is a scalar quantity.

From the right side of (**) we see that the vector is multiplied by a scalar. Hence x and λx lie on the same line.

The left side of (**) shows the effect of multiplying the vector x by the matrix A (the matrix operator). In general, a matrix operator may change both the direction and the magnitude of a vector.

Geometric Interpretation of eigen roots and

vectors

Hence our goal is to find those vectors that change in magnitude but remain on the same line after matrix multiplication.

Now the question arises: do these eigenvectors, along with their respective changes in magnitude, characterize the matrix?

The answer is given by the DECOMPOSITION THEOREMS.

Geometric Interpretation of eigen Roots and

Vectors

[Figure: two panels with axes X, Y, Z. The first shows vectors x1 and x2; the second shows their images Ax1 and Ax2 after applying the operator A.]

More to Notice

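$$\begin{bmatrix} a & 0 \\ 0 & a \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = a \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}$$

$$\begin{bmatrix} a & 0 \\ 0 & b \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} ax_1 \\ bx_2 \end{bmatrix}$$

$$\begin{bmatrix} a & 0 \\ 0 & b \end{bmatrix} \begin{bmatrix} x_1 \\ 0 \end{bmatrix} = a \begin{bmatrix} x_1 \\ 0 \end{bmatrix}, \quad \begin{bmatrix} a & 0 \\ 0 & b \end{bmatrix} \begin{bmatrix} 0 \\ x_2 \end{bmatrix} = b \begin{bmatrix} 0 \\ x_2 \end{bmatrix}$$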

Properties of Eigen Values and Vectors

• If $B = CAC^{-1}$, where A, B and C are all n×n, then A and B have the same eigen roots. If x is an eigenvector of A, then Cx is an eigenvector of B.

• The eigen roots of A and $A^T$ are the same.

• An eigenvector x ≠ 0 cannot be associated with more than one eigen root.

• The eigenvectors of a matrix A are linearly independent if they correspond to distinct roots.

• Let A be a square matrix of order m and suppose all its roots are distinct. Then A is similar to a diagonal matrix Λ, i.e. $P^{-1}AP = \Lambda$.

• For any real symmetric matrix A, the eigen roots are all real and the eigenvectors can be taken to be real.

• If $\lambda_i$ and $\lambda_j$ are two distinct roots of a real symmetric matrix A, then the corresponding vectors $x_i$ and $x_j$ are orthogonal.

Properties of Eigen Values and Vectors

• If $\lambda_1, \lambda_2, \dots, \lambda_m$ are the eigen roots of the non-singular matrix A, then $\lambda_1^{-1}, \lambda_2^{-1}, \dots, \lambda_m^{-1}$ are the eigen roots of $A^{-1}$.

• Let A, B be two square matrices of order m. Then the eigen roots of AB are exactly the eigen roots of BA.

• Let A, B be respectively m×n and n×m matrices, where m ≤ n. Then the eigen roots of $(BA)_{n \times n}$ consist of n-m zeros and the m eigen roots of $(AB)_{m \times m}$.

Properties of Eigen Values and Vectors

• Let A be a square matrix of order m and $\lambda_1, \lambda_2, \dots, \lambda_m$ be its eigen roots. Then $|A| = \prod_{i=1}^{m} \lambda_i$.

• Let A be an m×m matrix with eigen roots $\lambda_1, \lambda_2, \dots, \lambda_m$. Then tr(A) = tr(Λ) = $\lambda_1 + \lambda_2 + \dots + \lambda_m$.

• If A has eigen roots $\lambda_1, \lambda_2, \dots, \lambda_m$, then A-kI has eigen roots $\lambda_1 - k, \lambda_2 - k, \dots, \lambda_m - k$ and kA has the eigen roots $k\lambda_1, k\lambda_2, \dots, k\lambda_m$, where k is a scalar.

• If A is an orthogonal matrix, then all its eigen roots have absolute value 1.

• Let A be a square matrix of order m; suppose further that A is idempotent. Then its eigen roots are either 0 or 1.
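Several of the listed properties are easy to confirm numerically. The R sketch below uses a hypothetical symmetric matrix `A` and the shift `k = 2`; it checks the determinant, trace, inverse and shift properties.

```r
A <- matrix(c(4, 1, 0,
              1, 3, 1,
              0, 1, 2), nrow = 3)        # hypothetical symmetric, nonsingular matrix
lam <- eigen(A, symmetric = TRUE)$values # eigen roots, in decreasing order

det_ok   <- isTRUE(all.equal(det(A), prod(lam)))       # |A| = product of roots
trace_ok <- isTRUE(all.equal(sum(diag(A)), sum(lam)))  # tr(A) = sum of roots

lam_inv <- eigen(solve(A), symmetric = TRUE)$values    # roots of A^{-1}
inv_ok  <- isTRUE(all.equal(sort(lam_inv), sort(1 / lam)))

k <- 2
lam_shift <- eigen(A - k * diag(3), symmetric = TRUE)$values
shift_ok  <- isTRUE(all.equal(lam_shift, lam - k))     # roots of A - kI

c(det_ok, trace_ok, inv_ok, shift_ok)
```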

Eigen/diagonal Decomposition

• Let S be a square m×m matrix with m linearly independent eigenvectors (a "non-defective" matrix)

• Theorem: There exists an eigen decomposition

$$S = U \Lambda U^{-1}, \quad \Lambda \text{ diagonal}$$

(unique for distinct eigenvalues)

– (cf. matrix diagonalization theorem)

• Columns of U are eigenvectors of S

• Diagonal elements of Λ are eigenvalues of S

Diagonal decomposition: why/how

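Let U have the eigenvectors as columns: $U = \begin{bmatrix} v_1 & \cdots & v_m \end{bmatrix}$

Then SU can be written

$$SU = S \begin{bmatrix} v_1 & \cdots & v_m \end{bmatrix} = \begin{bmatrix} \lambda_1 v_1 & \cdots & \lambda_m v_m \end{bmatrix} = \begin{bmatrix} v_1 & \cdots & v_m \end{bmatrix} \begin{bmatrix} \lambda_1 & & \\ & \ddots & \\ & & \lambda_m \end{bmatrix}$$

Thus $SU = U\Lambda$, or $U^{-1}SU = \Lambda$.

And $S = U \Lambda U^{-1}$.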

Diagonal decomposition - example

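Recall $S = \begin{bmatrix} 2 & 1 \\ 1 & 2 \end{bmatrix}$; $\lambda_1 = 1$, $\lambda_2 = 3$.

The eigenvectors $\begin{bmatrix} 1 \\ -1 \end{bmatrix}$ and $\begin{bmatrix} 1 \\ 1 \end{bmatrix}$ form $U = \begin{bmatrix} 1 & 1 \\ -1 & 1 \end{bmatrix}$

Inverting, we have $U^{-1} = \begin{bmatrix} 1/2 & -1/2 \\ 1/2 & 1/2 \end{bmatrix}$ (recall $UU^{-1} = I$).

Then,

$$S = U \Lambda U^{-1} = \begin{bmatrix} 1 & 1 \\ -1 & 1 \end{bmatrix} \begin{bmatrix} 1 & 0 \\ 0 & 3 \end{bmatrix} \begin{bmatrix} 1/2 & -1/2 \\ 1/2 & 1/2 \end{bmatrix}$$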

Example continued

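Let's divide U (and multiply $U^{-1}$) by $\sqrt{2}$.

Then,

$$S = \begin{bmatrix} 1/\sqrt{2} & 1/\sqrt{2} \\ -1/\sqrt{2} & 1/\sqrt{2} \end{bmatrix} \begin{bmatrix} 1 & 0 \\ 0 & 3 \end{bmatrix} \begin{bmatrix} 1/\sqrt{2} & -1/\sqrt{2} \\ 1/\sqrt{2} & 1/\sqrt{2} \end{bmatrix} = Q \Lambda Q^T \quad (Q^{-1} = Q^T)$$

Why? …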

Symmetric Eigen Decomposition

• If S is a symmetric m×m matrix:

• Theorem: There exists a (unique) eigen decomposition

$$S = Q \Lambda Q^T$$

• where Q is orthogonal:

– $Q^{-1} = Q^T$

– Columns of Q are normalized eigenvectors

– Columns are orthogonal.

– (everything is real)

Spectral Decomposition theorem

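• If A is a symmetric m×m matrix with $\lambda_i$ and $e_i$, i = 1, …, m, being the m eigenvalue and eigenvector pairs, then

$$A = \lambda_1 e_1 e_1^T + \lambda_2 e_2 e_2^T + \dots + \lambda_m e_m e_m^T = \sum_{i=1}^{m} \lambda_i e_i e_i^T = P \Lambda P^T,$$

where A is m×m, each $e_i$ is m×1 (so each $e_i e_i^T$ is m×m), and

$$P = \begin{bmatrix} e_1 & e_2 & \cdots & e_m \end{bmatrix}, \quad \Lambda = \begin{bmatrix} \lambda_1 & 0 & \cdots & 0 \\ 0 & \lambda_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & \lambda_m \end{bmatrix}$$

– This is also called the eigen (spectral) decomposition theorem

• Any symmetric matrix can be reconstructed using its eigenvalues and eigenvectors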

Example for Spectral Decomposition

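• Let A be a symmetric, positive definite matrix

$$A = \begin{bmatrix} 2.2 & 0.4 \\ 0.4 & 2.8 \end{bmatrix}, \quad \det(A - \lambda I) = 0$$

$$\lambda^2 - 5\lambda + 6.16 - 0.16 = (\lambda - 3)(\lambda - 2) = 0$$

• The eigenvectors for the corresponding eigenvalues $\lambda_1 = 3$ and $\lambda_2 = 2$ are

$$e_1^T = \begin{bmatrix} \tfrac{1}{\sqrt{5}} & \tfrac{2}{\sqrt{5}} \end{bmatrix}, \quad e_2^T = \begin{bmatrix} \tfrac{2}{\sqrt{5}} & \tfrac{-1}{\sqrt{5}} \end{bmatrix}$$

• Consequently,

$$A = \begin{bmatrix} 2.2 & 0.4 \\ 0.4 & 2.8 \end{bmatrix} = 3 \begin{bmatrix} \tfrac{1}{\sqrt{5}} \\ \tfrac{2}{\sqrt{5}} \end{bmatrix} \begin{bmatrix} \tfrac{1}{\sqrt{5}} & \tfrac{2}{\sqrt{5}} \end{bmatrix} + 2 \begin{bmatrix} \tfrac{2}{\sqrt{5}} \\ \tfrac{-1}{\sqrt{5}} \end{bmatrix} \begin{bmatrix} \tfrac{2}{\sqrt{5}} & \tfrac{-1}{\sqrt{5}} \end{bmatrix} = \begin{bmatrix} 0.6 & 1.2 \\ 1.2 & 2.4 \end{bmatrix} + \begin{bmatrix} 1.6 & -0.8 \\ -0.8 & 0.4 \end{bmatrix}$$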
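The same example can be reproduced in R. This sketch rebuilds A both as $P \Lambda P^T$ and as the sum of rank-one terms $\lambda_i e_i e_i^T$; the variable names are illustrative. `eigen()` may return eigenvectors with the opposite sign to the hand-computed ones, which does not affect either reconstruction.

```r
A <- matrix(c(2.2, 0.4, 0.4, 2.8), nrow = 2)

e      <- eigen(A, symmetric = TRUE)
P      <- e$vectors            # columns are unit-length eigenvectors e_i
Lambda <- diag(e$values)       # diagonal matrix of eigenvalues (3 and 2)

A_from_P <- P %*% Lambda %*% t(P)      # P Lambda P^T

A_from_sum <- matrix(0, 2, 2)          # sum of lambda_i * e_i e_i^T
for (i in 1:2) {
  A_from_sum <- A_from_sum + e$values[i] * outer(P[, i], P[, i])
}

A_from_P
A_from_sum
```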

The Square Root of a Matrix

The spectral decomposition allows us to express a square symmetric matrix in terms of its eigenvalues and eigenvectors.

This expression enables us to conveniently create a square root matrix.

Let A be a p × p positive definite matrix with the spectral decomposition

$$A = \sum_{i=1}^{p} \lambda_i e_i e_i'$$

If $P = [\,e_1\ e_2\ e_3\ \dots\ e_p\,]$, then

$$A = \sum_{i=1}^{p} \lambda_i e_i e_i' = P \Lambda P',$$

where $P'P = PP' = I$ and $\Lambda = \mathrm{diag}(\lambda_i)$.

The Square Root of a Matrix

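Let

$$\Lambda^{1/2} = \begin{bmatrix} \sqrt{\lambda_1} & 0 & \cdots & 0 \\ 0 & \sqrt{\lambda_2} & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & \sqrt{\lambda_p} \end{bmatrix}$$

This implies

$$(P\Lambda^{1/2}P')(P\Lambda^{1/2}P') = P\Lambda^{1/2}\Lambda^{1/2}P' = P\Lambda P' = A$$

The matrix

$$P\Lambda^{1/2}P' = \sum_{i=1}^{p} \sqrt{\lambda_i}\, e_i e_i' = A^{1/2}$$

is called the square root of A.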

Matrix Square Root Properties

The square root of A has the following properties (prove them):

$$(A^{1/2})' = A^{1/2}$$

$$A^{1/2}A^{1/2} = A$$

$$A^{1/2}A^{-1/2} = A^{-1/2}A^{1/2} = I$$

$$A^{-1/2}A^{-1/2} = A^{-1}, \quad \text{where } A^{-1/2} = (A^{1/2})^{-1}$$
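A minimal R sketch of the construction follows, using a hypothetical positive definite matrix (the same 2 × 2 matrix as in the spectral decomposition example). It builds $A^{1/2}$ and $A^{-1/2}$ from the eigen decomposition and evaluates the four properties numerically.

```r
A <- matrix(c(2.2, 0.4, 0.4, 2.8), nrow = 2)   # positive definite

e <- eigen(A, symmetric = TRUE)
P <- e$vectors

A_half     <- P %*% diag(sqrt(e$values))     %*% t(P)   # A^{1/2}  = P Lambda^{1/2} P'
A_neg_half <- P %*% diag(1 / sqrt(e$values)) %*% t(P)   # A^{-1/2} = P Lambda^{-1/2} P'

prop_symmetric <- isTRUE(all.equal(A_half, t(A_half)))
prop_square    <- isTRUE(all.equal(A_half %*% A_half, A))
prop_identity  <- isTRUE(all.equal(A_half %*% A_neg_half, diag(2)))
prop_inverse   <- isTRUE(all.equal(A_neg_half %*% A_neg_half, solve(A)))

c(prop_symmetric, prop_square, prop_identity, prop_inverse)
```

This symmetric square root differs from the Cholesky factor returned by `chol()`, which is triangular rather than symmetric.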

Physical Interpretation of SPD

(Spectral Decomposition)

Suppose $x^TAx = c^2$. For p = 2, all x that satisfy this equation form an ellipse, i.e., $c^2 = \lambda_1(x^Te_1)^2 + \lambda_2(x^Te_2)^2$ (using the SPD of the positive definite matrix A). The axes of the ellipse lie along $e_1$ and $e_2$.

All points on the ellipse are at the same distance.

What will be the case if we replace A by $A^{-1}$?

$\mathrm{Var}(e_i^Tx) = e_i^T\,\mathrm{Var}(X)\,e_i = \lambda_i$, the ith eigenvalue of Var(X).

Let $x_1 = c\,\lambda_1^{-1/2} e_1$ and $x_2 = c\,\lambda_2^{-1/2} e_2$. Both satisfy the above equation and lie in the direction of an eigenvector. Note that the length of $x_1$ is $c\,\lambda_1^{-1/2}$: ||x|| is inversely proportional to the square root of the eigenvalues of A.

Matrix Inequalities

and Maximization

- Extended Cauchy-Schwarz Inequality – Let b and d be any two p x 1 vectors and B be a p x p positive definite matrix. Then

$$(b'd)^2 \le (b'Bb)(d'B^{-1}d)$$

with equality iff $b = cB^{-1}d$ (or $d = cBb$) for some constant c.

- Maximization Lemma – Let d be a given p x 1 vector and B be a p x p positive definite matrix. Then for an arbitrary nonzero vector x

$$\max_{x \ne 0} \frac{(x'd)^2}{x'Bx} = d'B^{-1}d$$

with the maximum attained when $x = cB^{-1}d$ for any constant c ≠ 0.
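The maximization lemma can be sanity-checked numerically. In the R sketch below, the matrix `B`, the vector `d` and the list of trial vectors are hypothetical; the check confirms that the ratio never exceeds $d'B^{-1}d$ on the trial vectors and reaches it at a multiple of $B^{-1}d$. It is a numerical illustration, not a proof.

```r
B <- matrix(c(2, 1, 1, 3), nrow = 2)   # hypothetical positive definite matrix
d <- c(1, -2)                          # hypothetical fixed vector

# Ratio (x'd)^2 / (x'Bx) from the lemma
ratio <- function(x) sum(x * d)^2 / drop(t(x) %*% B %*% x)

bound  <- drop(t(d) %*% solve(B) %*% d)   # d' B^{-1} d
x_star <- solve(B, d)                     # B^{-1} d, the maximizing direction

trial  <- list(c(1, 0), c(0, 1), c(1, 1), c(-2, 5), 3 * x_star)
ratios <- sapply(trial, ratio)

rbind(ratios, bound)   # every ratio is at most the bound; the last one attains it
```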

Matrix Inequalities

and Maximization

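- Maximization of Quadratic Forms for Points on the Unit Sphere – Let B be a p x p positive definite matrix with eigenvalues $\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_p > 0$ and associated eigenvectors $e_1, e_2, \dots, e_p$. Then

$$\max_{x \ne 0} \frac{x'Bx}{x'x} = \lambda_1 \quad (\text{attained when } x = e_1)$$

$$\min_{x \ne 0} \frac{x'Bx}{x'x} = \lambda_p \quad (\text{attained when } x = e_p)$$

$$\max_{x \perp e_1, \dots, e_k} \frac{x'Bx}{x'x} = \lambda_{k+1} \quad (\text{attained when } x = e_{k+1},\ k = 1, 2, \dots, p-1)$$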
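For p = 2 the result can be seen by scanning the unit circle. The R sketch below uses a hypothetical positive definite matrix `B` and an illustrative helper `rayleigh`; it evaluates $x'Bx/x'x$ on a grid of directions and compares the extremes with the eigenvalues.

```r
B <- matrix(c(2, 1, 1, 3), nrow = 2)   # hypothetical positive definite matrix
e <- eigen(B, symmetric = TRUE)        # eigenvalues in decreasing order

# Quotient x'Bx / x'x
rayleigh <- function(x) drop(t(x) %*% B %*% x) / sum(x^2)

angles <- seq(0, pi, length.out = 181)               # directions on the unit circle
vals   <- sapply(angles, function(a) rayleigh(c(cos(a), sin(a))))

r_max <- rayleigh(e$vectors[, 1])   # equals the largest eigenvalue
r_min <- rayleigh(e$vectors[, 2])   # equals the smallest eigenvalue

c(range(vals), lambda_p = e$values[2], lambda_1 = e$values[1])
```

The grid values stay between the smallest and largest eigenvalue, and the bounds are reached exactly at the eigenvectors.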

Calculation in R

t <- sqrt(2)                     # off-diagonal entry
x <- c(3.0046, t, t, 16.9967)    # entries of A, filled column by column
A <- matrix(x, nrow = 2)         # 2 x 2 symmetric matrix
eigen(A)                         # characteristic roots and vectors

> x
[1]  3.004600  1.414214  1.414214 16.996700
> A <- matrix(x, nrow=2)
> A
         [,1]      [,2]
[1,] 3.004600  1.414214
[2,] 1.414214 16.996700
> eigen(A)
$values
[1] 17.138207  2.863093
$vectors
           [,1]        [,2]
[1,] 0.09956317 -0.99503124
[2,] 0.99503124  0.09956317

Thank you
