PowerPoint on Regression Analysis

advertisement

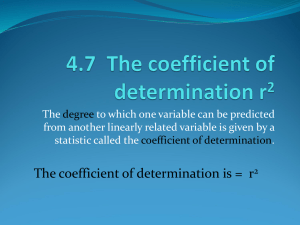

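Equations in Simple Regression Analysis

Throughout, $X$ and $Y$ are raw scores, $x = X - \bar{X}$ and $y = Y - \bar{Y}$ are deviation scores, $N$ is the number of observations, and $k$ is the number of predictors ($k = 1$ in simple regression).

The variance
$$s_x^2 = \frac{\sum x^2}{N - 1}$$

The standard deviation
$$s_x = \sqrt{s_x^2}$$

The covariance
$$s_{xy} = \frac{\sum xy}{N - 1}$$

The Pearson product-moment correlation
$$r_{xy} = \frac{s_{xy}}{s_x s_y}$$

The normal equations (for the regression of Y on X)
$$b_{yx} = \frac{\sum xy}{\sum x^2} = \frac{s_{xy}}{s_x^2}, \qquad a = \bar{Y} - b_{yx}\bar{X}$$

The structural model (for an observation on individual i)
$$Y_i = a + b_{yx}X_i + e_i$$

The regression equation
$$\hat{Y} = a + b_{yx}X = (\bar{Y} - b_{yx}\bar{X}) + b_{yx}X = \bar{Y} + b_{yx}(X - \bar{X}) = \bar{Y} + b_{yx}x$$

Partitioning a deviation score, y
$$y = Y - \bar{Y} = (\hat{Y} - \bar{Y}) + (Y - \hat{Y})$$
$$Y = \bar{Y} + (\hat{Y} - \bar{Y}) + (Y - \hat{Y})$$

Partitioning the sum of squared deviations (sum of squares, $SS_y$)
$$\sum y^2 = \sum (Y - \bar{Y})^2 = \sum\left[(\hat{Y} - \bar{Y}) + (Y - \hat{Y})\right]^2 = \sum (\hat{Y} - \bar{Y})^2 + \sum (Y - \hat{Y})^2 = SS_{reg} + SS_{res}$$
The cross-product term, $2\sum(\hat{Y} - \bar{Y})(Y - \hat{Y})$, is zero for the least-squares line, so it drops out of the expansion.

Calculation of proportions of sums of squares due to regression and due to error (or residual)
$$\frac{\sum y^2}{\sum y^2} = 1 = \frac{SS_{reg}}{\sum y^2} + \frac{SS_{res}}{\sum y^2}$$

Alternative formulas for computing the sum of squares due to regression
$$SS_{reg} = \sum (\hat{Y} - \bar{Y})^2 = \sum (\bar{Y} + b_{yx}x - \bar{Y})^2 = \sum (b_{yx}x)^2 = b_{yx}^2 \sum x^2 = \frac{(\sum xy)^2}{(\sum x^2)^2}\sum x^2 = \frac{(\sum xy)^2}{\sum x^2} = b_{yx}\sum xy$$

Test of the regression coefficient, $b_{yx}$ (i.e., test the null hypothesis that $b_{yx} = 0$)

First compute the variance of estimate:
$$s_{y\cdot x}^2 = \text{est}(\sigma_{y\cdot x}^2) = \frac{\sum (Y - \hat{Y})^2}{N - k - 1} = \frac{SS_{res}}{N - k - 1}$$

Then obtain the standard error of estimate:
$$s_{y\cdot x} = \sqrt{s_{y\cdot x}^2}$$

Then compute the standard error of the regression coefficient, $s_b$:
$$s_b = \sqrt{\frac{s_{y\cdot x}^2}{\sum x^2}} = \frac{s_{y\cdot x}}{\sqrt{\sum x^2}} = \frac{s_{y\cdot x}}{s_x\sqrt{N - 1}}$$

The test of significance of the regression coefficient ($b_{yx}$)

The significance of the regression coefficient is tested using a t test with $N - k - 1$ degrees of freedom:
$$t = \frac{b_{yx}}{s_b} = \frac{b_{yx}}{s_{y\cdot x} / \left(s_x\sqrt{N - 1}\right)}$$

Computing regression using correlations

The correlation in the population is given by
$$\rho_{xy} = \frac{\sum xy}{N\sigma_x\sigma_y}$$

The population correlation coefficient, $\rho_{xy}$, is estimated by the sample correlation coefficient, $r_{xy}$:
$$r_{xy} = \frac{\sum z_x z_y}{N - 1} = \frac{s_{xy}}{s_x s_y} = \frac{\sum xy}{\sqrt{\sum x^2 \sum y^2}}$$
where $z_x = x / s_x$ and $z_y = y / s_y$.

Sums of squares, regression ($SS_{reg}$)

Recalling that $r^2$ gives the proportion of variance of Y accounted for (or explained) by X, we can obtain
$$SS_{reg} = r^2 \sum y^2, \qquad SS_{res} = (1 - r^2)\sum y^2$$
In other words, $SS_{reg}$ is the portion of $SS_y$ predicted, or explained, by the regression of Y on X.

Standard error of estimate

From $SS_{res}$ we can compute the variance of estimate and the standard error of estimate as
$$s_{y\cdot x}^2 = \frac{(1 - r^2)\sum y^2}{N - k - 1}, \qquad s_{y\cdot x} = \sqrt{s_{y\cdot x}^2}$$
(Note that alternative formulas were given earlier.)

Testing the Significance of r

The significance of a correlation coefficient, r, is tested using a t test with $N - 2$ degrees of freedom:
$$t = \frac{r\sqrt{N - 2}}{\sqrt{1 - r^2}}$$

Testing the difference between two correlations

To test the difference between two Pearson correlation coefficients, use the “Comparing two correlation coefficients” calculator on my web site.

Testing the difference between two regression coefficients

This, also, is a t test:
$$t = \frac{b_1 - b_2}{\sqrt{s_{b_1}^2 + s_{b_2}^2}}$$
where $s_b^2$ is the square of the standard error $s_b$ given earlier. When the variances, $s_b^2$, are unequal, use the pooled estimate given on page 258 of our textbook.
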
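
As a minimal sketch, the simple-regression formulas above can be checked numerically in Python with only the standard library. The function names `simple_regression` and `slope_difference_t` and the small data set are hypothetical, chosen for illustration. The slope-difference function uses the separate (unpooled) variances from the formula above, not the textbook's pooled estimate.

```python
import math


def simple_regression(X, Y):
    """Regression of Y on X via the deviation-score formulas (k = 1 predictor)."""
    N = len(X)
    k = 1  # number of predictors in simple regression
    mean_x = sum(X) / N
    mean_y = sum(Y) / N
    x = [xi - mean_x for xi in X]  # deviation scores x = X - X-bar
    y = [yi - mean_y for yi in Y]  # deviation scores y = Y - Y-bar
    sum_x2 = sum(v * v for v in x)
    sum_y2 = sum(v * v for v in y)  # SS_y
    sum_xy = sum(u * v for u, v in zip(x, y))

    # Normal equations
    b = sum_xy / sum_x2        # b_yx = sum(xy) / sum(x^2)
    a = mean_y - b * mean_x    # a = Y-bar - b_yx * X-bar
    y_hat = [a + b * xi for xi in X]

    # Partition of SS_y into regression and residual parts
    ss_reg = sum((yh - mean_y) ** 2 for yh in y_hat)
    ss_res = sum((yi - yh) ** 2 for yi, yh in zip(Y, y_hat))

    # Test of b_yx = 0, with N - k - 1 degrees of freedom
    s2_yx = ss_res / (N - k - 1)     # variance of estimate
    s_yx = math.sqrt(s2_yx)          # standard error of estimate
    s_b = s_yx / math.sqrt(sum_x2)   # standard error of b_yx
    t_b = b / s_b

    # Correlation and its t test, with N - 2 degrees of freedom
    r = sum_xy / math.sqrt(sum_x2 * sum_y2)
    t_r = r * math.sqrt(N - 2) / math.sqrt(1 - r ** 2)

    return {
        "a": a, "b": b, "ss_reg": ss_reg, "ss_res": ss_res,
        "s_yx": s_yx, "s_b": s_b, "t_b": t_b, "df_b": N - k - 1,
        "r": r, "t_r": t_r, "df_r": N - 2,
    }


def slope_difference_t(b1, s_b1, b2, s_b2):
    """t = (b1 - b2) / sqrt(s_b1^2 + s_b2^2), using separate (unpooled) variances."""
    return (b1 - b2) / math.sqrt(s_b1 ** 2 + s_b2 ** 2)


if __name__ == "__main__":
    # Small made-up data set, for illustration only
    res = simple_regression([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
    print(round(res["b"], 3), round(res["a"], 3), round(res["t_b"], 3))  # 0.6 2.2 2.121
```

In simple regression the t test of $b_{yx}$ and the t test of r give the same value, which makes a useful check on hand calculations.
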
Other measures of correlation

Chapter 10 in the text gives several alternative measures of correlation: the point-biserial, phi, biserial, tetrachoric, and Spearman correlations.

Point-biserial and Phi correlation

These are both Pearson product-moment correlations. The point-biserial correlation is used when one variable is a scale variable and the other represents a true dichotomy. For instance, it is the correlation between performance on a test item (the dichotomous variable) and the total score on the test (the scaled variable). The phi correlation is used when both variables represent a true dichotomy, for instance the correlation between two test items.

Biserial and Tetrachoric correlation

These are non-Pearson correlations, and both are rarely used anymore. The biserial correlation is used when one variable is truly a scaled variable and the other represents an artificial dichotomy. The tetrachoric correlation is used when both variables represent an artificial dichotomy.

Spearman’s Rho Coefficient and Kendall’s Tau Coefficient

Spearman’s rho is used to compute the correlation between two ordinal (or ranked) variables; it is the correlation between two sets of ranks. Kendall’s tau (see pages 286-288 in the text) is also a measure of the relationship between two sets of ranked data.
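
Because the point-biserial, phi, and Spearman coefficients are all Pearson correlations (computed on 0/1 codes or on ranks), a single Pearson function covers them. The following is a minimal Python sketch, assuming dichotomies are coded 0/1 and tied values receive the average of their ranks; the helper names and example numbers are hypothetical. The Kendall function computes tau-a, the simplest form, and assumes no ties, so the textbook's version may handle ties differently. The biserial and tetrachoric coefficients are not shown, since they are not Pearson correlations.

```python
import math


def pearson_r(X, Y):
    """Pearson product-moment correlation: sum(xy) / sqrt(sum(x^2) * sum(y^2))."""
    N = len(X)
    mean_x, mean_y = sum(X) / N, sum(Y) / N
    sum_xy = sum((u - mean_x) * (v - mean_y) for u, v in zip(X, Y))
    sum_x2 = sum((u - mean_x) ** 2 for u in X)
    sum_y2 = sum((v - mean_y) ** 2 for v in Y)
    return sum_xy / math.sqrt(sum_x2 * sum_y2)


def ranks(values):
    """Rank values 1..N; tied values share the average of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for m in range(i, j + 1):
            result[order[m]] = average_rank
        i = j + 1
    return result


def spearman_rho(X, Y):
    """Spearman's rho: the Pearson correlation between the two sets of ranks."""
    return pearson_r(ranks(X), ranks(Y))


def kendall_tau_a(X, Y):
    """Kendall's tau-a: (concordant - discordant pairs) / (N(N-1)/2). Assumes no ties."""
    N = len(X)
    s = 0
    for i in range(N):
        for j in range(i + 1, N):
            product = (X[i] - X[j]) * (Y[i] - Y[j])
            s += (product > 0) - (product < 0)  # +1 concordant, -1 discordant
    return s / (N * (N - 1) / 2)


if __name__ == "__main__":
    # Point-biserial: 0/1 item score vs. total test score (made-up numbers)
    item = [1, 1, 0, 1, 0]
    total = [18, 15, 9, 14, 11]
    print(round(pearson_r(item, total), 3))
    # Phi: Pearson correlation between two 0/1 items
    print(round(pearson_r([1, 1, 0, 0], [1, 0, 0, 0]), 3))  # 0.577
    # Ranked data
    X, Y = [1, 2, 3, 4], [1, 3, 2, 4]
    print(round(spearman_rho(X, Y), 3), round(kendall_tau_a(X, Y), 3))  # 0.8 0.667
```

Computing phi through `pearson_r` gives the same value as the familiar 2 x 2 table formula $(ad - bc)/\sqrt{(a+b)(c+d)(a+c)(b+d)}$, which illustrates why phi counts as a Pearson correlation.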