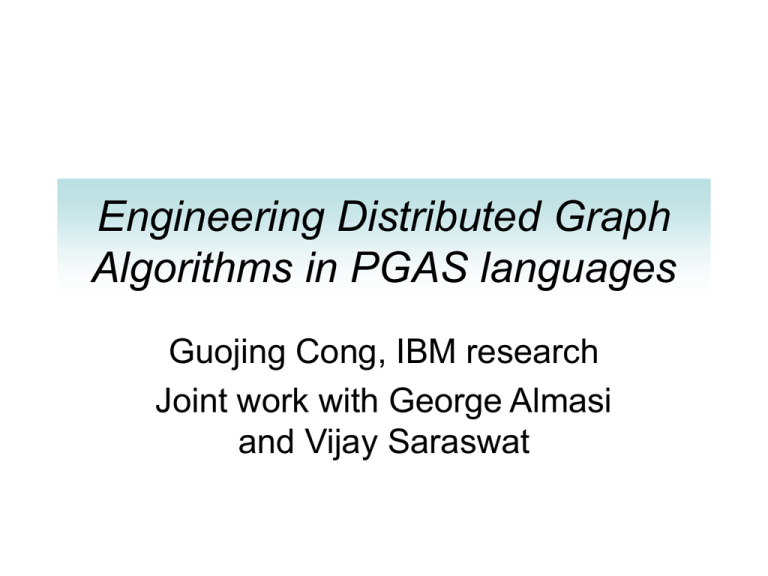

Engineering Distributed Graph Algorithms in PGAS languages


Guojing Cong, IBM Research
Joint work with George Almasi and Vijay Saraswat

Programming language from the perspective of a not-so-distant admirer

Mapping graph algorithms onto distributed-memory machines has been a challenge
• Efficiently mapping a PRAM algorithm onto SMPs is hard
• Mapping onto a cluster of SMPs is even harder
• Optimizations are available and have been shown to improve performance
• Can these be somehow automated with help from language design, compiler, and runtime development?

• Expectations of the languages
  – Expressiveness
    • SPMD, task parallelism (spawn/async), pipeline, future, virtual shared-memory abstraction, work-stealing, data distribution, …
    • Ease of programming
  – Efficiency
    • Mapping high-level constructs to run fast on the target machine
      – SMP
      – Multi-core, multi-threaded
      – MPP
      – Heterogeneous with accelerators
    • Leverage for tuning

A case study with connected components on a cluster of SMPs with UPC
• A connected component of an undirected graph G=(V,E), |V|=n, |E|=m, is a maximal connected subgraph
  – A connected components algorithm finds all such components in G
• Sequential algorithms
  – Breadth-first traversal (BFS)
  – Depth-first traversal (DFS)
• One parallel algorithm: the Shiloach-Vishkin algorithm (SV82)
  – Edge list as input
  – Adopts the graft-and-shortcut approach
    • Start with n isolated vertices
    • Graft vertex v to a neighbor u with (u < v)
    • Shortcut the connected components into super-vertices and continue on the reduced graph

Example: SV
[Figure: SV example showing the input graph and the graft and shortcut steps of the 1st and 2nd iterations]

Simple?
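The graft-and-shortcut steps listed for SV82 above can be sketched as a small sequential simulation. This is an illustrative sketch only, not the authors' UPC or Pthread code: the function name `sv_connected_components`, the array name `D` for the parent pointers, and the rule of grafting a root onto the smaller of the two labels are assumptions made for the example.

```python
def sv_connected_components(n, edges):
    """Label components by a sequential simulation of graft-and-shortcut.

    Returns a list D where D[v] is the smallest vertex id in v's component.
    """
    D = list(range(n))  # start with n isolated vertices, each its own root
    changed = True
    while changed:
        changed = False
        # Graft: hook the root with the larger label onto the smaller label.
        for u, v in edges:
            du, dv = D[u], D[v]
            if du == dv:
                continue
            hi, lo = max(du, dv), min(du, dv)
            if D[hi] == hi:  # only a current root may be grafted
                D[hi] = lo
                changed = True
        # Shortcut: pointer jumping until every vertex points at its root.
        for v in range(n):
            while D[v] != D[D[v]]:
                D[v] = D[D[v]]
    return D


labels = sv_connected_components(6, [(0, 1), (1, 2), (3, 4)])
```

Because pointers only ever move from a larger label to a smaller one, no cycles can form, and each pass that changes anything removes at least one root, so the loop terminates.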
Yes, but performs poorly
[Figure: two plots of running time versus number of processors on a Sun Enterprise E4500. Panel titles: Random Graph, 1M vertices, 10M edges; Random Graph (1M vertices, 20M edges). Axis labels: Time (seconds); Execution Time (400M cycles); Number of Processors. Legend: Bor-AL, Bor-EL, Prim, SV]
• Memory-intensive, irregular accesses, poor temporal locality

Typical behavior of graph algorithms
• CPI construction
• BC: betweenness centrality
• BiCC: biconnected components
• MST: minimum spanning tree
• LRU stack distance plot

On distributed-memory machines
• Random access and indirection make it hard to
  – Implement, e.g., there is no fast MPI implementation
  – Optimize, i.e., random access creates problems for both communication and cache performance
• The partitioned global address space (PGAS) paradigm
  – Presents a shared-memory abstraction to the programmer for distributed-memory machines
  – Has received a fair amount of attention recently
  – Allows the programmer to control the data layout and work assignment
  – Improves ease of programming and also gives the programmer leverage to tune for high performance

Implementation in UPC is straightforward
[Figure: code listings, UPC implementation and Pthread implementation]

Performance is miserable

Communication-efficient algorithms
• Proposed to address the “bottleneck of processor-to-processor communication”
  – Goodrich [96] presented a communication-efficient sorting algorithm on the weak-CREW BSP that runs in O(log n / log(h + 1)) communication rounds and O((n log n)/p) local computation time, for h = Θ(n/p)
  – Adler et al. [98] presented a communication-optimal MST algorithm
  – Dehne et al.
[02] designed an efficient list-ranking algorithm for coarse-grained multicomputers (CGM) and BSP that takes O(log p) communication rounds with O(n/p) local computation
• Common approach
  – Simulate several (e.g., O(log p) or O(log log p)) steps of the PRAM algorithm to reduce the input size so that it fits in the memory of a single node
  – A “sequential” algorithm is then invoked to process the reduced input of size O(n/p)
  – Finally, the result is broadcast to all processors for computing the final solution
• Questions
  – How well do communication-efficient algorithms work in practice?
  – How fast can optimized shared-memory-based algorithms run? Cache performance vs. communication performance
  – Can these optimizations be automated through the necessary language/compiler support?

Locality-central optimization
• Improve the locality behavior of the algorithm
  – The key performance issues are communication and cache performance
  – Both are determined by locality
• Many prior cache-friendly results, but no tangible practical evidence
  – Fine-grain parallelism makes it hard to optimize for temporal locality
  – Focus on spatial locality
    • To take advantage of large cache lines, hardware prefetching, software prefetching

Scheduling of the memory accesses in a parallel loop
[Figure: code listings labeled Typical loop in CC, Generic loop, and An example]

Mapping to the distributed environments
• All remote accesses are consecutive in our scheduling
• If the runtime provides remote prefetching or coalescing, then communication efficiency can be improved
• If not, coalescing can easily be done at the program level, as shown on the right

Performance improvement due to communication efficiency

Applying the approach to a single node for cache-friendly design
• Apply as many levels of recursion as necessary
• Simulate the recursions with virtual threads
• Assuming a large-enough, one-level, fully associative cache
[Figure: CC, normalized execution time (y-axis, 0.40 to 0.75); series labeled (100M, 400M), (100M, 1G), (200M, 800M); legend entry: Original execution time]
[Figure, continued: legend entry: Optimized execution time; x-axis labeled W, ticks 1 to 20]

Graph-specific optimization
• Compact edge list
  – The size of the list determines the number of elements to request from remote nodes
  – Edges within components no longer contribute to the merging of connected components and can be filtered out
• Avoid communication hotspots
  – Grafting in CC shoots a pointer from a vertex with a larger number to one with a smaller number
  – Thread thr0 owns vertex 0 and may quickly become a communication hotspot
  – Avoid querying thr0 about D[0]

UPC-specific optimization
• Avoid runtime cost on local data
  – After optimization, all direct accesses to the shared arrays are local
  – Yet the compiler is not able to recognize this
  – With UPC, we use private pointer arithmetic for
• Avoid intrinsics
  – It is costly to invoke compiler intrinsics to determine the target thread id
  – Computing target thread ids is done in every iteration
  – We compute these ids directly instead of invoking the intrinsics
  – Noticing that the target ids do not change across iterations, we compute them once and store them in a global buffer
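The edge-list compaction, the D[0] shortcut, and the direct computation of target thread ids described above can be sketched roughly as follows. This is a hedged illustration in plain Python, not UPC: the helper names `owner_of`, `compact_edges`, and `requests_by_owner` are hypothetical, and the blocked layout (vertex v lives on thread v // block) is an assumption, since the slides do not state which layout was used.

```python
from collections import defaultdict


def owner_of(v, n, threads):
    """Owner thread of vertex v, assuming a blocked layout of n vertices."""
    block = -(-n // threads)  # ceiling division: vertices per thread
    return v // block


def compact_edges(edges, D):
    """Drop edges whose endpoints already carry the same component label."""
    return [(u, v) for (u, v) in edges if D[u] != D[v]]


def requests_by_owner(edges, n, threads, skip_vertex0=True):
    """Group the vertices whose labels must be fetched by owning thread.

    Grafting only points larger labels at smaller ones, so vertex 0 always
    stays its own root; its label is known without asking its owner.
    """
    buckets = defaultdict(set)
    for u, v in edges:
        for x in (u, v):
            if skip_vertex0 and x == 0:
                continue
            buckets[owner_of(x, n, threads)].add(x)
    # Sorted lists keep each owner's requests consecutive, ready to coalesce.
    return {t: sorted(xs) for t, xs in buckets.items()}


D = [0, 0, 0, 3, 3, 5, 6, 7]
edges = [(0, 1), (1, 2), (2, 5), (3, 4), (6, 7)]
remaining = compact_edges(edges, D)
plan = requests_by_owner(remaining, n=8, threads=4)
```

In this sketch the owner ids depend only on the vertex numbers and the layout, which is why they can be computed once and reused rather than recomputed in every iteration.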
Performance Results
[Figure: two plots of Time (seconds) versus # Threads (16 to 256). Panel titles: Random Graph, 100M vertices, 400M edges; Random Graph, 100M vertices, 1G edges. Legend: Optimized, SMP, BFS]
[Figure: two bar charts of Time (seconds) by implementation (base, compact, offload, circular, localcpy, id). Panel titles: Hybrid Graph, 100M vertices, 400M edges; Random Graph, 100M vertices, 400M edges. Legend: Comm, Sort, Copy, Irregular, Work, Setup]

So, how helpful is UPC?
• Straightforward mapping of shared-memory algorithms is easy
  – Quick prototyping
  – Quick profiling
  – Incremental optimization (10 versions for CC)
• All other optimizations are manual
• Many of them can be automated, though
• UPC is not flexible enough to expose the hierarchy of nodes and processors to the programmer

Conclusion and future work
• We show that with appropriate optimizations, shared-memory graph algorithms can be mapped to the PGAS environment with high performance.
• On inputs that fit in the main memory of one node, our implementation achieves good speedups over the best SMP implementation and the best sequential implementation.
• Our results suggest that effective use of processors and caches can bring better performance than simply reducing the number of communication rounds.
• Automating these optimizations is our future work.