Bayes_2 - Reza Shadmehr


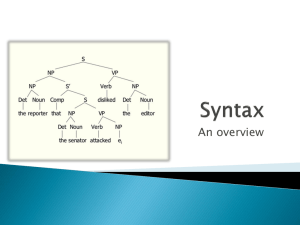

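580.691 Learning Theory

Reza Shadmehr

Bayesian Learning 2: Gaussian distribution & linear regression; Causal inference

For today's lecture we will attack the problem of how to apply Bayes' rule when both our prior $p(w)$ and our conditional distribution $p(y \mid w)$ are Gaussian:

$$
\underbrace{p(w \mid y)}_{\text{posterior distr.}} = \frac{p(w, y)}{p(y)} = \frac{\overbrace{p(w)}^{\text{prior distr.}}\;\overbrace{p(y \mid w)}^{\text{conditional distr.}}}{\int p(w)\, p(y \mid w)\, dw}
$$

The numerator is just the joint distribution of $w$ and $y$, evaluated at a particular $y^{(n)}$. The denominator is the marginal distribution of $y$, evaluated at $y^{(n)}$; that is, it is just a number that makes the numerator integrate to one.

[Figure: joint distribution $p(w, y)$ and the joint distribution evaluated at $y^{(n)}$; prior distribution $p(w)$ along the $w$ axis; marginal distribution $p(y)$ along the $y$ axis.]

Example: Linear regression with a prior

$$
p(w) = N\!\left(\hat{w}^{n|n-1}, P^{n|n-1}\right), \qquad E[w] = \hat{w}^{n|n-1}, \qquad \mathrm{var}[w] = P^{n|n-1}
$$

$$
y = H(x)\, w + \varepsilon, \qquad \varepsilon \sim N(0, R)
$$

$$
E[y] = E[H(x)\, w + \varepsilon] = H(x)\, E[w] = H(x)\, \hat{w}^{n|n-1}
$$

$$
\begin{aligned}
\mathrm{var}[y] &= \mathrm{var}[H(x)\, w + \varepsilon] \\
&= H(x)\, \mathrm{var}[w]\, H(x)^T + 2\,\mathrm{cov}[H(x)\, w, \varepsilon] + \mathrm{var}[\varepsilon] \\
&= H(x)\, P^{n|n-1} H(x)^T + R
\end{aligned}
$$

The cross term vanishes because the noise $\varepsilon$ is independent of $w$.

$$
p\!\left(y^{(n)} \mid w\right) = N\!\left(H(x^{(n)})\, w,\; R\right)
$$

$$
p\!\left(y^{(n)}\right) = N\!\left(H(x^{(n)})\, \hat{w}^{n|n-1},\; H(x^{(n)})\, P^{n|n-1} H(x^{(n)})^T + R\right)
$$

$$
\begin{aligned}
\mathrm{cov}[y, w] &= \mathrm{cov}[H(x)\, w + \varepsilon,\; w] \\
&= E\!\left[\left(H(x)\, w + \varepsilon - H(x)\, E[w]\right)\left(w - E[w]\right)^T\right] \\
&= E\!\left[H(x)\, w w^T - H(x)\, w\, E[w]^T - H(x)\, E[w]\, w^T + H(x)\, E[w] E[w]^T + \varepsilon w^T - \varepsilon E[w]^T\right] \\
&= H(x)\, E[w w^T] - H(x)\, E[w] E[w]^T \\
&= H(x)\, \mathrm{var}(w) = H(x)\, P^{n|n-1}
\end{aligned}
$$

$$
p\!\left(w, y^{(n)}\right) = N\!\left(
\begin{bmatrix} \hat{w}^{n|n-1} \\ H(x^{(n)})\, \hat{w}^{n|n-1} \end{bmatrix},
\begin{bmatrix} P^{n|n-1} & P^{n|n-1} H(x^{(n)})^T \\ H(x^{(n)})\, P^{n|n-1} & H(x^{(n)})\, P^{n|n-1} H(x^{(n)})^T + R \end{bmatrix}
\right)
= N\!\left(
\begin{bmatrix} \mu_1 \\ \mu_2 \end{bmatrix},
\begin{bmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{bmatrix}
\right)
$$

So the joint probability is Normally distributed. What we would like to do now is factor this expression so that we can write it as a conditional probability times a marginal:

$$
p(w, y) = p(w \mid y)\, p(y)
$$

If we can do this, then the conditional probability is the posterior that we are looking for. For the rest of the lecture we will try to solve this problem when our prior and the conditional distribution are both Normally distributed.

The multivariate Normal distribution is:

$$
p(x) = \frac{1}{(2\pi)^{d/2}\, |\Sigma|^{1/2}} \exp\!\left(-\frac{1}{2} (x - \mu)^T \Sigma^{-1} (x - \mu)\right)
$$

where $x$ is a $d \times 1$ vector and $\Sigma$ is a $d \times d$ variance-covariance matrix. The distribution has two parts: The exponential part is a quadratic form that determines the form of the Gaussian curve.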
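The factor before is just a constant factor that makes the exponential part integrate to 1 (it does not depend on $x$).

Now let's start with two variables that have a joint Gaussian distribution: $x_1$ is a $p \times 1$ vector and $x_2$ a $q \times 1$ vector. They have covariance $\Sigma_{12}$:

$$
p(x_1, x_2) = \frac{1}{(2\pi)^{(p+q)/2} \left| \begin{matrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{matrix} \right|^{1/2}}
\exp\!\left(-\frac{1}{2}
\begin{bmatrix} x_1 - \mu_1 \\ x_2 - \mu_2 \end{bmatrix}^T
\begin{bmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{bmatrix}^{-1}
\begin{bmatrix} x_1 - \mu_1 \\ x_2 - \mu_2 \end{bmatrix}\right)
$$

Here $\Sigma_{11}$ is $p \times p$, $\Sigma_{12}$ is $p \times q$, $\Sigma_{21}$ is $q \times p$, and $\Sigma_{22}$ is $q \times q$.

How would we calculate $p(x_1 \mid x_2)$? The following calculation for Gaussians will be a little long, but it is worth it, because the result will be extremely useful. Often we have things that are Gaussian, and often we can use the Gaussian distribution as an approximation.

To calculate the posterior probability, we need to know how to factorize the joint probability into a part that depends on $x_1$ and $x_2$ and one that depends only on $x_2$. So, we need to learn how to block-diagonalize the variance-covariance matrix:

$$
M = \begin{bmatrix} E & F \\ G & H \end{bmatrix}
$$

$$
\begin{aligned}
\begin{bmatrix} I & -F H^{-1} \\ 0 & I \end{bmatrix}
\begin{bmatrix} E & F \\ G & H \end{bmatrix}
\begin{bmatrix} I & 0 \\ -H^{-1} G & I \end{bmatrix}
&= \begin{bmatrix} E - F H^{-1} G & F - F H^{-1} H \\ G & H \end{bmatrix}
\begin{bmatrix} I & 0 \\ -H^{-1} G & I \end{bmatrix} \\
&= \begin{bmatrix} E - F H^{-1} G & 0 \\ 0 & H \end{bmatrix}
= \begin{bmatrix} M/H & 0 \\ 0 & H \end{bmatrix}
\end{aligned}
$$

$M/H = E - F H^{-1} G$ is called the Schur complement of the matrix $M$ with respect to $H$.

Result 1

Now let's take the determinant of the above equation. Remember that for square matrices $A$ and $B$: $\det(AB) = \det(A)\det(B)$. Also remember that the determinant of a block-triangular matrix is just the product of the determinants of the diagonal blocks.

$$
\det\!\left(
\begin{bmatrix} I & -F H^{-1} \\ 0 & I \end{bmatrix}
\begin{bmatrix} E & F \\ G & H \end{bmatrix}
\begin{bmatrix} I & 0 \\ -H^{-1} G & I \end{bmatrix}
\right)
= \det \begin{bmatrix} M/H & 0 \\ 0 & H \end{bmatrix}
$$

$$
\det(I)\, \det(M)\, \det(I) = \det(M/H)\, \det(H)
$$

$$
\det M = \det(M/H)\, \det(H)
$$

Result 2

As a second result, what is $M^{-1}$?

$$
XYZ = W \;\Rightarrow\; Z^{-1} Y^{-1} X^{-1} = W^{-1} \;\Rightarrow\; Y^{-1} = Z W^{-1} X
$$

$$
M^{-1} = \begin{bmatrix} E & F \\ G & H \end{bmatrix}^{-1}
= \begin{bmatrix} I & 0 \\ -H^{-1} G & I \end{bmatrix}
\begin{bmatrix} (M/H)^{-1} & 0 \\ 0 & H^{-1} \end{bmatrix}
\begin{bmatrix} I & -F H^{-1} \\ 0 & I \end{bmatrix}
$$

We use Result 1 to split the constant first factor of the multivariate Gaussian into two factors.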
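$$
\frac{1}{(2\pi)^{(p+q)/2} \left| \begin{matrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{matrix} \right|^{1/2}}
= \underbrace{\frac{1}{(2\pi)^{p/2}\, |\Sigma/\Sigma_{22}|^{1/2}}}_{(A)}
\;\underbrace{\frac{1}{(2\pi)^{q/2}\, |\Sigma_{22}|^{1/2}}}_{(B)}
$$

where $\Sigma/\Sigma_{22} = \Sigma_{11} - \Sigma_{12} \Sigma_{22}^{-1} \Sigma_{21}$.

Now we can factorize the exponential part into two, using Result 2:

$$
\begin{aligned}
&\exp\!\left(-\frac{1}{2}
\begin{bmatrix} x_1 - \mu_1 \\ x_2 - \mu_2 \end{bmatrix}^T
\begin{bmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{bmatrix}^{-1}
\begin{bmatrix} x_1 - \mu_1 \\ x_2 - \mu_2 \end{bmatrix}\right) \\
&\quad= \exp\!\left(-\frac{1}{2}
\begin{bmatrix} x_1 - \mu_1 \\ x_2 - \mu_2 \end{bmatrix}^T
\begin{bmatrix} I & 0 \\ -\Sigma_{22}^{-1} \Sigma_{21} & I \end{bmatrix}
\begin{bmatrix} (\Sigma/\Sigma_{22})^{-1} & 0 \\ 0 & \Sigma_{22}^{-1} \end{bmatrix}
\begin{bmatrix} I & -\Sigma_{12} \Sigma_{22}^{-1} \\ 0 & I \end{bmatrix}
\begin{bmatrix} x_1 - \mu_1 \\ x_2 - \mu_2 \end{bmatrix}\right) \\
&\quad= \exp\!\left(-\frac{1}{2}
\begin{bmatrix} x_1 - \mu_1 - \Sigma_{12} \Sigma_{22}^{-1} (x_2 - \mu_2) \\ x_2 - \mu_2 \end{bmatrix}^T
\begin{bmatrix} (\Sigma/\Sigma_{22})^{-1} & 0 \\ 0 & \Sigma_{22}^{-1} \end{bmatrix}
\begin{bmatrix} x_1 - \mu_1 - \Sigma_{12} \Sigma_{22}^{-1} (x_2 - \mu_2) \\ x_2 - \mu_2 \end{bmatrix}\right) \\
&\quad= \underbrace{\exp\!\left(-\frac{1}{2}
\left(x_1 - \mu_1 - \Sigma_{12} \Sigma_{22}^{-1} (x_2 - \mu_2)\right)^T (\Sigma/\Sigma_{22})^{-1}
\left(x_1 - \mu_1 - \Sigma_{12} \Sigma_{22}^{-1} (x_2 - \mu_2)\right)\right)}_{(C)} \\
&\qquad\times \underbrace{\exp\!\left(-\frac{1}{2} (x_2 - \mu_2)^T \Sigma_{22}^{-1} (x_2 - \mu_2)\right)}_{(D)}
\end{aligned}
$$

Now see that parts A and C, and parts B and D, each combine to a normal distribution. Thus we can write:

$$
\begin{aligned}
p(x_1, x_2) &= N\!\left(\begin{bmatrix} \mu_1 \\ \mu_2 \end{bmatrix}, \begin{bmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{bmatrix}\right) \\
&= N\!\left(\mu_1 + \Sigma_{12} \Sigma_{22}^{-1} (x_2 - \mu_2),\; \Sigma/\Sigma_{22}\right) N\!\left(\mu_2, \Sigma_{22}\right) \\
&= \underbrace{N\!\left(\mu_1 + \Sigma_{12} \Sigma_{22}^{-1} (x_2 - \mu_2),\; \Sigma_{11} - \Sigma_{12} \Sigma_{22}^{-1} \Sigma_{21}\right)}_{\text{conditional: } p(x_1 \mid x_2)}
\;\underbrace{N\!\left(\mu_2, \Sigma_{22}\right)}_{\text{marginal: } \int p(x_1, x_2)\, dx_1}
\end{aligned}
$$

If $x_1$ and $x_2$ are jointly normally distributed, with:

$$
p(x_1, x_2) = N\!\left(\begin{bmatrix} \mu_1 \\ \mu_2 \end{bmatrix}, \begin{bmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{bmatrix}\right)
$$

then $x_1$ given $x_2$ has a normal distribution with:

$$
E[x_1 \mid x_2] = \mu_1 + \Sigma_{12} \Sigma_{22}^{-1} (x_2 - \mu_2)
$$

$$
\mathrm{var}[x_1 \mid x_2] = \Sigma_{11} - \Sigma_{12} \Sigma_{22}^{-1} \Sigma_{21}
$$

Linear regression with a prior and the relationship to Kalman gain

$$
p(w) = N\!\left(\hat{w}^{n|n-1}, P^{n|n-1}\right)
$$

$$
p\!\left(y^{(n)} \mid w\right) = N\!\left(H(x^{(n)})\, w,\; R\right)
$$

$$
p\!\left(y^{(n)}\right) = N\!\left(H(x^{(n)})\, \hat{w}^{n|n-1},\; H(x^{(n)})\, P^{n|n-1} H(x^{(n)})^T + R\right)
$$

$$
p\!\left(w, y^{(n)}\right) = N\!\left(
\begin{bmatrix} \hat{w}^{n|n-1} \\ H(x^{(n)})\, \hat{w}^{n|n-1} \end{bmatrix},
\begin{bmatrix} P^{n|n-1} & P^{n|n-1} H(x^{(n)})^T \\ H(x^{(n)})\, P^{n|n-1} & H(x^{(n)})\, P^{n|n-1} H(x^{(n)})^T + R \end{bmatrix}
\right)
= N\!\left(
\begin{bmatrix} \mu_1 \\ \mu_2 \end{bmatrix},
\begin{bmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{bmatrix}
\right)
$$

The posterior $p\!\left(w \mid y^{(n)}\right)$ is Normal, with

$$
\text{mean} = \hat{w}^{n|n-1} + P^{n|n-1} H(x^{(n)})^T \left(H(x^{(n)})\, P^{n|n-1} H(x^{(n)})^T + R\right)^{-1} \left(y^{(n)} - H(x^{(n)})\, \hat{w}^{n|n-1}\right)
$$

$$
\text{variance} = P^{n|n-1} - P^{n|n-1} H(x^{(n)})^T \left(H(x^{(n)})\, P^{n|n-1} H(x^{(n)})^T + R\right)^{-1} H(x^{(n)})\, P^{n|n-1}
$$

Causal inference

Recall that in the hiking problem we had two GPS devices that measured our position. We combined the readings from the two devices to form an estimate of our location. This approach makes sense if the two readings are close to each other. However, we can hardly be expected to combine the two readings if one of them is telling us that we are on the north bank of the river and the other is telling us that we are on the south bank. We know that we are not in the middle of the river! In this case the idea of combining the two readings makes little sense.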
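The combination of the two GPS readings can be sketched with the posterior formula for linear regression with a prior. This is a minimal illustration rather than code from the lecture: it assumes a scalar position $w$, a hypothetical measurement matrix $H = [1, 1]^T$ (both devices read the same position), unit noise variances, and a broad prior. The function name `gaussian_posterior` and all numbers are made up for the example.

```python
import numpy as np

def gaussian_posterior(w_prior, P_prior, H, R, y):
    """Posterior mean and covariance of w given y = H w + eps, eps ~ N(0, R)."""
    S = H @ P_prior @ H.T + R                  # marginal covariance of y
    K = P_prior @ H.T @ np.linalg.inv(S)       # gain applied to the prediction error
    w_post = w_prior + K @ (y - H @ w_prior)   # posterior mean
    P_post = P_prior - K @ H @ P_prior         # posterior covariance
    return w_post, P_post

# Hypothetical setup: broad prior on position, two devices with equal noise.
w_prior = np.array([0.0])
P_prior = np.array([[100.0]])
H = np.array([[1.0], [1.0]])
R = np.diag([1.0, 1.0])

# Two readings that agree, and two that put us on opposite river banks.
close_mean, close_cov = gaussian_posterior(w_prior, P_prior, H, R, np.array([2.0, 2.4]))
far_mean, far_cov = gaussian_posterior(w_prior, P_prior, H, R, np.array([-5.0, 5.0]))

print("close readings:", close_mean, close_cov)
print("far readings:  ", far_mean, far_cov)
```

With the distant readings the posterior mean lands halfway between them, that is, in the middle of the river, and the posterior variance is identical to the one for the nearby readings. The formula on its own never signals that the two readings disagree.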
Wallace and colleagues (2004) examined this question by placing people in a room where LEDs and small speakers were arranged around a semi-circle (Fig. 1A). A volunteer was placed in the center of the semi-circle and held a pointer in hand. The experiment began with the volunteer fixating a location (fixation LED, Fig. 1A). An auditory stimulus was presented from one of the speakers, and then one of the LEDs was turned on 200, 500, or 800 ms later. The volunteer estimated the location of the sound by pointing (pointer, Fig. 1A). Then the subject pressed a switch with their foot if they thought that the light and the sound came from the same location.

The results of the experiment are plotted in Fig. 1B and C. The perception of unity was highest when the two events occurred in close temporal and spatial proximity. Importantly, when the volunteers perceived a common source, their perception of the location of the sound was strongly affected by the location of the light.

Let the location of the sound be $x_s$, the location of the LED be $x_v$, and the estimate of the location of the sound be $\hat{x}_s$. The estimate of the location of the sound was biased by the location of the LED when the volunteer thought that there was a common source (Fig. 1C). This bias fell to near zero when the volunteer perceived light and sound to originate from different sources.

People were asked to report their perception of unity, i.e., whether the location of the light and the sound were the same (Wallace et al. (2004) Exp Brain Res 158:252-258).

When our various sensory organs produce reports that are temporally and spatially in agreement, we tend to believe that there was a single source that was responsible for both observations. In this case, we combine the readings from the sensors to estimate the state of the source. On the other hand, if our sensory measurements are temporally or spatially inconsistent, then we view the events as having disparate sources, and we do not combine the readings.
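Therefore, the nature of our belief as to whether there was a common source or not is not black or white. Rather, there is some probability that there was a common source. In that case, this probability should have a lot to do with how we combine the information from the various sensors.

If there is a common source:

$$
y = \begin{bmatrix} 1 & 0 \\ 1 & 0 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} + \varepsilon_y
$$

If there are separate sources:

$$
y = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} + \varepsilon_y
$$

$$
\Pr(\text{common source} \mid y) = a, \qquad \Pr(\text{not common source} \mid y) = 1 - a
$$

$$
\begin{bmatrix} y_1 \\ y_2 \end{bmatrix}
= a \begin{bmatrix} 1 & 0 \\ 1 & 0 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}
+ (1 - a) \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}
+ \varepsilon_y
$$

$$
y = a\, C_1 x + (1 - a)\, C_2 x + \varepsilon, \qquad \varepsilon \sim N(0, R), \qquad x \sim N(\mu, P)
$$

Prior belief: $\Pr(z = 1)$, the probability of a common source.

We would like to compute $p(z = 1 \mid y)$ and $p(x \mid y)$.

$$
\text{if common source:} \quad y = C_1 x + \varepsilon, \qquad \hat{x} = \mu + k_1 (y - C_1 \mu)
$$

$$
\text{if separate sources:} \quad y = C_2 x + \varepsilon, \qquad \hat{x} = \mu + k_2 (y - C_2 \mu)
$$

$$
\Pr(z = 1 \mid y) = \frac{p(y \mid z = 1) \Pr(z = 1)}{p(y \mid z = 0) \Pr(z = 0) + p(y \mid z = 1) \Pr(z = 1)} = a
$$

$$
p(y \mid z = 1) = N\!\left(C_1 \mu,\; C_1 P C_1^T + R\right)
$$

$$
p(y \mid z = 0) = N\!\left(C_2 \mu,\; C_2 P C_2^T + R\right)
$$

$$
\begin{aligned}
\hat{x} &= a \left(\mu + k_1 (y - C_1 \mu)\right) + (1 - a) \left(\mu + k_2 (y - C_2 \mu)\right) \\
&= \mu + a\, k_1 (y - C_1 \mu) + (1 - a)\, k_2 (y - C_2 \mu)
\end{aligned}
$$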
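The mixture estimate can be sketched numerically. This is a minimal illustration under stated assumptions, not the lecture's own code: the gains are taken to be $k_i = P C_i^T (C_i P C_i^T + R)^{-1}$, by analogy with the regression posterior, since the notes do not write them out. The function names `gauss_pdf` and `causal_estimate`, the prior $\Pr(z = 1) = 0.5$, and all numbers are hypothetical.

```python
import numpy as np

def gauss_pdf(y, mean, cov):
    """Density of a multivariate Normal N(mean, cov) evaluated at y."""
    d = y - mean
    _, logdet = np.linalg.slogdet(cov)
    quad = d @ np.linalg.solve(cov, d)
    return np.exp(-0.5 * (len(y) * np.log(2.0 * np.pi) + logdet + quad))

def causal_estimate(y, mu, P, R, prior_common):
    """Return a = Pr(z=1 | y) and the mixture estimate of x."""
    C1 = np.array([[1.0, 0.0], [1.0, 0.0]])   # common source: both sensors see x1
    C2 = np.eye(2)                            # separate sources: one sensor each
    S1 = C1 @ P @ C1.T + R                    # covariance of y given z = 1
    S2 = C2 @ P @ C2.T + R                    # covariance of y given z = 0
    like1 = gauss_pdf(y, C1 @ mu, S1)
    like0 = gauss_pdf(y, C2 @ mu, S2)
    a = like1 * prior_common / (like1 * prior_common + like0 * (1.0 - prior_common))
    k1 = P @ C1.T @ np.linalg.inv(S1)         # assumed gain for the common-source model
    k2 = P @ C2.T @ np.linalg.inv(S2)         # assumed gain for the separate-source model
    x_hat = mu + a * (k1 @ (y - C1 @ mu)) + (1.0 - a) * (k2 @ (y - C2 @ mu))
    return a, x_hat

# Hypothetical numbers: broad prior on both sources, unit sensor noise.
mu = np.zeros(2)
P = 100.0 * np.eye(2)
R = np.eye(2)

a_close, x_close = causal_estimate(np.array([2.0, 2.4]), mu, P, R, prior_common=0.5)
a_far, x_far = causal_estimate(np.array([-5.0, 5.0]), mu, P, R, prior_common=0.5)

print("close readings: a =", a_close, "x_hat =", x_close)
print("far readings:   a =", a_far, "x_hat =", x_far)
```

In this sketch, nearby readings yield a high posterior probability of a common source, so the estimate of $x_1$ pools both sensors. Widely separated readings drive $a$ toward zero, and each sensor then informs only its own source.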