Data Cleansing - Northern Kentucky University

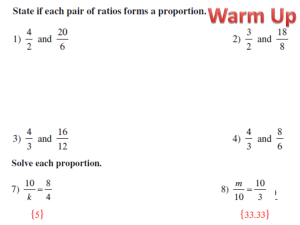

A Privacy Preserving Efficient Protocol for Semantic Similarity Join Using Long String Attributes

Bilal Hawashin, Farshad Fotouhi (Department of Computer Science, Wayne State University)
Traian Marius Truta (Northern Kentucky University)

Outline

- What is Similarity Join
- Long String Values
- Our Contribution
- Privacy Preserving Protocol For Long String Values
- Experiments and Results
- Conclusions/Future Work
- Contact Information

Motivation

Is Natural Join always suitable?

| Name | Address | Major |
| --- | --- | --- |
| John Smith | 4115 Main St. | Biology |
| Mary Jones | 2619 Ford Rd. | Chemical Eng. |
| … | | |

| Name | Address | Monthly Sal. |
| --- | --- | --- |
| Smith, John | 4115 Main Street | 1645 |
| Mary Jons | 2619 Ford Rd. | 2100 |
| … | | |

Similarity Join

- Joining a pair of records if they have SIMILAR values in the join attribute.
- Formally, similarity join consists of grouping pairs of records whose similarity is greater than a threshold, T.
- Studied widely in the literature, and referred to as record linkage, entity matching, duplicate detection, citation resolution, …

Our Previous Contribution: Long String Values (ICDM MMIS10)

- The term long string refers to the data type representing any string value with unlimited length.
- The term long attribute refers to any attribute of long string data type.
- Most tables contain at least one attribute with long string values. Examples are Paper Abstract, Product Description, Movie Summary, User Comment, …
- Most of the previous work studied similarity join on short fields.
- In our previous work, we showed that using long attributes as join attributes under supervised learning can enhance the similarity join performance.

Example

| Title | Kwds | Authrs | Abstract |
| --- | --- | --- | --- |
| P1 Title | P1 Kwds | P1 Authrs | P1 Abstract |
| P2 Title | P2 Kwds | P2 Authrs | P2 Abstract |
| P3 Title | P3 Kwds | P3 Authrs | P3 Abstract |
| … | … | … | … |

| Title | Kwds | Authrs | Abstract |
| --- | --- | --- | --- |
| P10 Title | P10 Kwds | P10 Authrs | P10 Abstract |
| P11 Title | P11 Kwds | P11 Authrs | P11 Abstract |
| … | … | … | … |

Our Paper (Motivation)

- Some sources may not allow sharing their whole data in the similarity join process.
- Solution: Privacy Preserved Similarity Join.
- Using long attributes as join attributes can increase the similarity join accuracy.
- To our knowledge, all the current Privacy Preserved SJ algorithms use short attributes.
- Most of the current privacy preserved SJ algorithms ignore the semantic similarities among the values.

Problem Formulation

Our goal is to find a Privacy Preserved Similarity Join algorithm for the case where the join attribute is a long attribute, one that also considers the semantic similarities among such long values.

Our Work Plan

- Phase1: Compare multiple similarity methods for long attributes when similarity thresholds are used.
- Phase2: Use the best method as part of the privacy preserved SJ protocol.

Phase1: Finding Best SJ Method for Long Strings with Threshold

Candidate Methods:

- Diffusion Maps.
- Latent Semantic Indexing.
- Locality Preserving Projection.

Performance Measurements

- F1 Measurement: the harmonic mean of recall R and precision P, $F_1 = \frac{2PR}{P + R}$. Recall is the fraction of all relevant pairs that are retrieved, and precision is the fraction of retrieved pairs that are relevant.

Performance Measurements (Cont.)

- Preprocessing time is the time needed to read the dataset and generate matrices that could be used later as an input to the semantic operation.
- Operation time is the time needed to apply the semantic method.
- Matching time is the time required by the third party, C, to find the cosine similarity among the records provided by both A and B in the reduced space and compare the similarities with the predefined similarity threshold.

Datasets

- IMDB Internet Movies Dataset: Movie Summary field.
- Amazon Dataset: Product Title, Product Description.

Phase1 Results

Finding best dimensionality reduction method using Movie Summary from IMDB Dataset (Left) and Product Descriptions from Amazon (Right).

Phase2 Results

Preprocessing Time:

- Steps, in the order listed: Read Dataset (1000 Movie Summaries); TF.IDF Weighting; Reduce Dimensionality using Mean TF.IDF; Find Shared Features.
- Times, in the order listed: 12 Sec.; 0.5 Sec.; 1 Sec.; Negligible.

Phase2 Results

Operation Time for the best performing methods from phase 1. Matching Time is negligible.

Our Protocol

Both sources A and B share the threshold value T to decide similar pairs later.

Our Protocol

Source A

| Title | Authors | Abstract | |
| --- | --- | --- | --- |
| P1 Title | P1 Authors | P1 Abstract | … |
| P2 Title | P2 Authors | P2 Abstract | … |
| P3 Title | P3 Authors | P3 Abstract | |

Source B

| Title | Authors | Abstract | |
| --- | --- | --- | --- |
| Px Title | Px Authors | Px Abstract | … |

Find Term_LSV Frequency Matrix for Each Source

Ma

| | LSV1 | LSV2 | LSV3 |
| --- | --- | --- | --- |
| Image | 4 | 0 | 0 |
| Classify | 5 | 0 | 0 |
| Similarity | 0 | 6 | 5 |
| Join | 0 | 6 | 4 |

Find TD_Weighted Matrix Using TF.IDF Weighting

WeightedMa

| | LSV1 | LSV2 | LSV3 |
| --- | --- | --- | --- |
| Image | 0.9 | 0 | 0 |
| Classify | 0.7 | 0 | 0 |
| Similarity | 0 | 0.85 | 0.9 |
| Join | 0 | 0.7 | 0.85 |

TF.IDF Weighting

TF.IDF weighting of a term w in a long string value x is given as: [formula missing in source], where $tf_{w,x}$ is the frequency of the term w in the long string value x, and $idf_w$ is [expression missing in source], where N is the number of long string values in the relation, and $n_w$ is the number of long string values in the relation that contain the term w.

MeanTF.IDF Feature Selection

- MeanTF.IDF is an unsupervised feature selection method.
- Every feature (term) is assigned a value according to its importance.
- The value of a term feature w is given as

$$\mathrm{Value}(w) = \frac{1}{N} \sum_{x=1}^{N} \mathrm{TF.IDF}(w, x)$$

where TF.IDF(w, x) is the weighting of feature w in long string value x, and N is the total number of long string values.

- Apply MeanTF.IDF on WeightedMa and get the important features, Imp_Fea.
- Add random features to Imp_Fea to get Rand_Imp_Fea.
- Rand_Imp_Fea and Rand_Imp_Feb are sent to C.
- C finds the intersection and returns the shared important features SF to both A and B.
- Reduce the WeightedM dimensions in both sources using SF.
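The feature-selection and shared-feature steps above can be sketched in plain Python. This is only an illustration under stated assumptions: the slides' exact TF.IDF formula is not shown in the text, so the sketch assumes the common textbook weighting tf * log(N / n_w); the cut-off `k`, the decoy term pools, Source B's matrix, and all function names are hypothetical.

```python
import math
import random

def tfidf_matrix(freq, n_docs):
    """freq maps term -> list of counts, one per long string value (LSV).
    ASSUMPTION: textbook weighting tf * log(N / n_w), not necessarily the slides' formula."""
    weighted = {}
    for term, counts in freq.items():
        n_w = sum(1 for c in counts if c > 0)  # number of LSVs containing the term
        idf = math.log(n_docs / n_w) if n_w else 0.0
        weighted[term] = [c * idf for c in counts]
    return weighted

def mean_tfidf(weighted, n_docs):
    """MeanTF.IDF: average weight of each term over all N long string values."""
    return {term: sum(row) / n_docs for term, row in weighted.items()}

def important_features(scores, k):
    """Keep the k highest-scoring terms (k is a hypothetical cut-off)."""
    return set(sorted(scores, key=scores.get, reverse=True)[:k])

def add_random_features(features, decoys, n_random, rng):
    """Mix decoy terms into the important features before sending them to C."""
    return features | set(rng.sample(sorted(decoys), n_random))

def shared_features(rand_imp_fe_a, rand_imp_fe_b):
    """Run by the third party C: intersection of the two feature sets."""
    return rand_imp_fe_a & rand_imp_fe_b

def reduce_to_shared(weighted, sf):
    """Each source keeps only the rows of its weighted matrix whose term is in SF."""
    return {term: row for term, row in weighted.items() if term in sf}

# Source A uses the frequency matrix Ma from the slide; Source B is made up.
freq_a = {"image": [4, 0, 0], "classify": [5, 0, 0],
          "similarity": [0, 6, 5], "join": [0, 6, 4]}
freq_b = {"similarity": [3, 0], "join": [2, 1], "privacy": [0, 4]}

rng = random.Random(0)
weighted_a = tfidf_matrix(freq_a, 3)
weighted_b = tfidf_matrix(freq_b, 2)
imp_a = important_features(mean_tfidf(weighted_a, 3), k=2)
imp_b = important_features(mean_tfidf(weighted_b, 2), k=2)
rand_imp_a = add_random_features(imp_a, {"zebra", "quartz"}, 1, rng)
rand_imp_b = add_random_features(imp_b, {"falcon", "marble"}, 1, rng)

sf = shared_features(rand_imp_a, rand_imp_b)  # computed by C
print(sf)                                     # {'similarity'}
print(reduce_to_shared(weighted_a, sf))       # only the "similarity" row remains
```

In this sketch C only ever sees term sets padded with decoys, never the weighted matrices themselves; how many decoys to add is a tuning choice the slides do not specify.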
SFa

| | LSV1 | LSV2 | LSV3 |
| --- | --- | --- | --- |
| Image | 0.9 | 0 | 0 |
| Similarity | 0 | 0.85 | 0.9 |
| … | | | |

Add Random Vectors to SF

Rand_Weighted_a

| | LSV1 | LSV2 | LSV3 | Random Cols |
| --- | --- | --- | --- | --- |
| Image | 0.9 | 0 | 0 | 0.6 |
| Similarity | 0 | 0.85 | 0.9 | 0.2 |
| … | | | | |

Find Wa (The Kernel)

Each entry of Wa is 1-Cos_Sim(LSVi, LSVj):

| | LSV1 | LSV2 | LSV3 | … |
| --- | --- | --- | --- | --- |
| LSV1 | 0 | 0.2 | 0.3 | … |
| LSV2 | 0.2 | 0 | 0.87 | … |
| LSV3 | 0.3 | 0.87 | 0 | … |
| … | … | … | … | |

|Wa| = D x D, where D is the total number of columns in Rand_Weighted_a.

Use Diffusion Maps to Find Red_Rand_Weighted_a

[Red_Rand_Weighted_a, Sa, Va, Aa] = Diffusion_Map(Wa, 10, 1, red_dim), red_dim < D

Red_Rand_Weighted_a =

| | Col1 | Col2 | … | Col_red_dim |
| --- | --- | --- | --- | --- |
| Diffusion Map Representation of first row of Wa | 0.4 | 0.1 | | |
| Diffusion Map Representation of second row of Wa | 0.8 | 0.6 | | |
| Diffusion Map Representation of third row of Wa | 0.75 | 0.5 | | |
| … | … | … | | |

C Finds Pairwise Similarity Between Red_Rand_Weighted_a and Red_Rand_Weighted_b

| Red_Rand_Weighted_a | Red_Rand_Weighted_b | Cos_Sim |
| --- | --- | --- |
| 1 | 1 | 0.77 |
| 1 | 2 | 0.3 |
| … | … | … |
| 2 | 1 | 0.9 |

If Cos_Sim > T, Insert the tuple in Matched

Matched

| Red_Rand_Weighted_a | Red_Rand_Weighted_b | Cos_Sim |
| --- | --- | --- |
| 1 | 1 | 0.77 |
| 2 | 1 | 0.9 |
| 2 | 7 | 0.85 |
| … | … | … |

- Matched is returned to both A and B.
- A and B remove random vectors from Matched and share their matrices.

Our Protocol (Part1)

Our Protocol (Part2)

Phase2 Results

Effect of adding random columns on the accuracy.

Phase2 Results

Effect of adding random columns on the number of suggested matches.

Conclusions

- Efficient secure SJ semantic protocol for long string attributes is proposed.
- Diffusion maps is the best method (among compared) to semantically join long string attributes when threshold values are used.
- Mapping into diffusion maps space and adding random records can hide the original data without affecting the accuracy.

Future Work

Potential further works:

- Compare diffusion maps with more candidate semantic methods for joining long string attributes.
- Study the performance of the protocol on huge databases.

Thank You
…

Dr. Farshad Fotouhi.
Dr. Traian Marius Truta.
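The matching step of the protocol, in which C compares the rows of Red_Rand_Weighted_a and Red_Rand_Weighted_b and keeps pairs with Cos_Sim > T, can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the rows used for Source B, the threshold 0.8, and the choice of which row counts as a random one are all assumptions made for the example.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def match_pairs(reduced_a, reduced_b, threshold):
    """Run by C: return (row in A, row in B, Cos_Sim) for every pair with Cos_Sim > T.
    Row numbers are 1-based, as in the Matched table."""
    matched = []
    for i, row_a in enumerate(reduced_a, start=1):
        for j, row_b in enumerate(reduced_b, start=1):
            sim = cosine_similarity(row_a, row_b)
            if sim > threshold:
                matched.append((i, j, sim))
    return matched

def remove_own_random(matched, own_random_rows, column):
    """Run by A (column=0) or B (column=1): drop pairs that involve one of
    the random rows that this source injected itself."""
    return [pair for pair in matched if pair[column] not in own_random_rows]

# Rows for A reuse the example values of Red_Rand_Weighted_a; rows for B are made up.
reduced_a = [[0.4, 0.1], [0.8, 0.6], [0.75, 0.5]]
reduced_b = [[0.5, 0.1], [0.1, 0.9]]

matched = match_pairs(reduced_a, reduced_b, threshold=0.8)
# Suppose, hypothetically, that row 3 of A was one of A's random rows.
cleaned = remove_own_random(matched, own_random_rows={3}, column=0)
for row_a, row_b, sim in cleaned:
    print(row_a, row_b, round(sim, 2))
```

Only the source that injected a random row knows which row numbers to discard, which is why the clean-up runs at A and B rather than at C.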