
CSC 3315

Lexical and Syntax Analysis

Hamid Harroud

School of Science and Engineering, Akhawayn University

http://www.aui.ma/~H.Harroud/csc3315/

CSC3315 (Spring 2009)

1

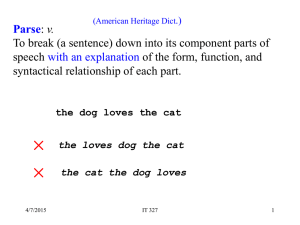

Syntax Description vs. Syntax Analysis

Syntax Description: the set of rules that can be used to generate any sentence in the language

We can construct derivations (or parse trees) to generate (synthesize) arbitrary sentences

Syntax Analysis: the reverse process

Given a sentence, obtain the derivation (or parse tree) that would generate this sentence based on some grammar

The Parsing Problem

Given the sequence of tokens of a source program (a sentence), we ask: is it syntactically valid, i.e. could it be generated from the language's grammar?

If it is not, find all syntax errors (or as many as possible)

» for each error, produce a diagnostic message and recover quickly, i.e. do not “crash”

If it is, construct the corresponding parse tree (i.e. the derivation)

The Parsing Problem

A parser is a program that solves the parsing problem

Parsing is the second phase of a compiler, after lexical analysis

The parse tree constructed by the parser is used in the subsequent compilation phases: semantic analysis and code generation

A recognizer is similar to a parser, except that it does not produce the parse tree; it only gives a yes or no answer

Syntax Description vs. Syntax Analysis

Parser design is nearly always based directly on a grammar description (BNF) of the language syntax

Two categories of parsers:

Top-down: the parse tree is constructed starting at the root, following the order of a leftmost derivation

Bottom-up: the parse tree is constructed starting at the leaves, following the order of the reverse of a rightmost derivation

Top-down Parsing: LL Parsing

Top-down parsers are also called LL-parsers

First L: Scan input sentence from left to right

Second L: Produce a left-most derivation

The parser scans the token stream from left to right:

For each input token, it decides which rule to use to expand the leftmost non-terminal in the current sentential form

This decision is based only on whether the leftmost terminal generated by the current leftmost non-terminal matches the current input token

=> one token of lookahead

=> LL parsers that use one token of lookahead are called LL(1) parsers

LL(1) Parsing

The LL parser must expand <A> using some rule of the form <A> → RHS

This decision must be based on both the grammar and the unparsed portion of the token stream

An LL(1) parser determines the correct rule to use to expand a non-terminal <A> based only on the first token generated by <A>, which must match the next input token

Definition: if X is the RHS of some grammar rule, then $\mathrm{FIRST}(X) = \{\, a \mid X \Rightarrow^{*} aY \,\}$

where a is a terminal symbol, Y is any (possibly empty) string of grammar symbols, and $\Rightarrow^{*}$ denotes zero or more derivation steps
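FIRST sets can be computed mechanically. The C sketch below applies every rule repeatedly until no set grows, which is a fixed-point iteration. To keep it short, it assumes a grammar with no ε-rules and single-letter symbols (uppercase for non-terminals, lowercase for terminals). It uses the hypothetical grammar <A> → <B>c | d, <B> → <C> | e, <C> → f. The names compute_first and in_first are illustrative only.

```c
#include <ctype.h>
#include <stdbool.h>
#include <stddef.h>

/* One grammar rule: LHS non-terminal and RHS string. Sketch conventions
   (assumptions): uppercase = non-terminal, lowercase = terminal, no ε-rules. */
typedef struct { char lhs; const char *rhs; } Rule;

/* Hypothetical grammar: <A> -> <B>c | d,  <B> -> <C> | e,  <C> -> f */
static const Rule grammar[] = {
    {'A', "Bc"}, {'A', "d"},
    {'B', "C"},  {'B', "e"},
    {'C', "f"},
};
#define NRULES (sizeof grammar / sizeof grammar[0])

/* first[N - 'A'][t - 'a'] is true when terminal t is in FIRST(<N>). */
static bool first[26][26];

/* With no ε-rules, FIRST(X1 X2 ... Xn) = FIRST(X1): merge it into
   FIRST(lhs) and report whether anything new was added. */
static bool merge_rhs_first(char lhs, const char *rhs) {
    char x = rhs[0];
    bool changed = false;
    for (int t = 0; t < 26; t++) {
        bool in_x = islower((unsigned char)x) ? (t == x - 'a')
                                               : first[x - 'A'][t];
        if (in_x && !first[lhs - 'A'][t]) {
            first[lhs - 'A'][t] = true;
            changed = true;
        }
    }
    return changed;
}

/* Apply every rule repeatedly until no FIRST set grows (a fixed point). */
void compute_first(void) {
    bool changed = true;
    while (changed) {
        changed = false;
        for (size_t i = 0; i < NRULES; i++)
            if (merge_rhs_first(grammar[i].lhs, grammar[i].rhs))
                changed = true;
    }
}

/* Query helper: is terminal t in FIRST(<n>)? */
bool in_first(char n, char t) {
    return first[n - 'A'][t - 'a'];
}
```

For this grammar the iteration ends with FIRST(<C>) = {f}, FIRST(<B>) = {e, f} and FIRST(<A>) = {d, e, f}. Supporting ε-rules would also require looking past leading symbols that can derive the empty string.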

LL(1) Parsing

Example:

Grammar: <A> → b<B> | c<B>b | a

Suppose the input token currently being processed is c

If the current sentential form is x<A>a, then there are three rules we could apply, and the possible next sentential forms are: xb<B>a, xc<B>ba, and xaa

=> But which rule should be used next?

=> Choose the rule such that the leftmost terminal obtained when <A> is expanded matches the current input token “c”, i.e. <A> → c<B>b
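Here every RHS starts with a distinct terminal, so the LL(1) decision is just a lookup on the current token. A minimal C sketch follows; the function name choose_A_rule and the rule numbering 1 to 3 are illustrative assumptions.

```c
/* Decide which RHS of <A> -> b<B> | c<B>b | a to use, looking only at the
   current input token. Returns the rule number (1, 2 or 3), or 0 when no
   RHS of <A> can start with that token, i.e. a syntax error. */
int choose_A_rule(char token) {
    switch (token) {
    case 'b': return 1;   /* <A> -> b<B>  */
    case 'c': return 2;   /* <A> -> c<B>b */
    case 'a': return 3;   /* <A> -> a     */
    default:  return 0;   /* no RHS of <A> starts with this token */
    }
}
```

With the current token c, choose_A_rule('c') returns 2, i.e. <A> → c<B>b, which matches the reasoning above.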

LL(1) Parsing

Example (cont.)

Consider now a slightly modified version of this grammar: <A> → c<B> | c<B>b | a

=> This grammar cannot be used with an LL(1) parser. When the current token is c, both <A> → c<B> and <A> → c<B>b are candidates, so the next rule cannot be chosen from the current token alone.

Recursive-Descent Parsing

Recursive descent is a recursive implementation of LL(1) parsing

It is based directly on the BNF description of the language: there is a subprogram for each non-terminal in the grammar, which can parse sentences generated by that non-terminal

EBNF is ideally suited as the basis for a recursive-descent parser, because EBNF tends to minimize the number of non-terminals in the grammar

In a way, this is analogous to how the lexical analyzer is implemented directly from the state diagram of the corresponding finite automaton (FA)

Recursive-Descent Parsing

Suppose a non-terminal <A> has only one RHS

The subprogram for <A> is implemented as follows:

Scan the symbols of the RHS one by one from left to right

For each terminal symbol in the RHS, compare it with the next input token; if they match, read the next input token and continue; otherwise report an error

For each non-terminal symbol in the RHS, call the subprogram associated with that non-terminal

Recursive-Descent Parsing

Example: <A> → a<B>cb

The subprogram for non-terminal <A> does the following:

Check that the next token is ‘a’, else error(); then read the next token

Call the subprogram for non-terminal <B>

Check that the next token is ‘c’, else error(); then read the next token

Check that the next token is ‘b’, else error(); then read the next token
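These steps can be turned into a small runnable C sketch. The slide leaves <B> unspecified, so this sketch assumes a hypothetical rule <B> → d. It also assumes that tokens are single characters read from a string and that error() only records the failure instead of stopping the parser. The entry point parse_A is illustrative. None of these details come from the slides.

```c
#include <stdbool.h>

/* Hypothetical single-character token stream; '\0' marks end of input. */
static const char *input;
static char nextToken;
static bool failed;

/* Lex(): put the next token in nextToken (stays '\0' at end of input). */
static void Lex(void) { nextToken = *input; if (*input) input++; }
/* error(): record the failure; this sketch keeps parsing afterwards. */
static void error(void) { failed = true; }

/* Hypothetical rule for illustration only: <B> -> d */
static void BSub(void) {
    if (nextToken != 'd') error();
    Lex();
}

/* <A> -> a<B>cb: match the RHS symbols one by one, left to right. */
static void ASub(void) {
    if (nextToken != 'a') error();
    Lex();
    BSub();                      /* non-terminal: call its subprogram */
    if (nextToken != 'c') error();
    Lex();
    if (nextToken != 'b') error();
    Lex();
}

/* Returns true if the whole string is a sentence derived from <A>. */
bool parse_A(const char *s) {
    input = s;
    failed = false;
    Lex();                       /* load the first token */
    ASub();
    return !failed && nextToken == '\0';
}
```

Here, parse_A("adcb") succeeds. "adc" and "adcbx" are rejected: the first is missing the final b, and the second has input left over.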

Recursive-Descent Parsing

Suppose a non-terminal <A> has more than one RHS

The subprogram for <A> is implemented as follows:

First find the rule <A> → X such that the next token is in FIRST(X)

» If none of the RHSs of <A> satisfies this, report an error

» If more than one RHS of <A> satisfies this, the grammar is not suitable for recursive-descent parsing in the first place!

Then continue as in the single-RHS case
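The selection step, including both error cases, can be sketched in C. The sketch assumes that every RHS begins with a terminal written as a lowercase letter, so FIRST(RHS) is simply that first character. When an RHS starts with a non-terminal, its full FIRST set would be needed instead. The names select_rule, NO_RULE and AMBIGUOUS are illustrative.

```c
#include <stddef.h>

/* Outcomes of the rule-selection step (names are illustrative). */
enum { NO_RULE = -1, AMBIGUOUS = -2 };

/* Pick the RHS of a non-terminal whose FIRST set contains the lookahead.
   Sketch assumption: every RHS begins with a terminal, so FIRST(RHS) is
   just its first character. Returns the index of the single matching RHS,
   NO_RULE if none matches (report a syntax error), or AMBIGUOUS if several
   match (the grammar is not suitable for recursive descent). */
int select_rule(const char *const rhs[], size_t n, char lookahead) {
    int found = NO_RULE;
    for (size_t i = 0; i < n; i++) {
        if (rhs[i][0] == lookahead) {
            if (found != NO_RULE) return AMBIGUOUS;   /* second match */
            found = (int)i;
        }
    }
    return found;
}
```

Consider <A> → a<B>cb | c<B> | ba<C>, encoded as {"aBcb", "cB", "baC"}. The lookahead b selects index 2. For the modified grammar <A> → c<B> | c<B>b | a, the lookahead c yields AMBIGUOUS.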

Recursive-Descent Parsing

Assumptions:

We have a lexical analyzer subprogram Lex() which, whenever called, puts the code of the next token in a global variable nextToken

At the beginning of every parsing subprogram, the next input token is in nextToken

The first subprogram called is the one associated with the start symbol of the grammar

Recursive-Descent Parsing

Example: consider the following grammar

<A> → a<B>cb | c<B> | ba<C>

Let ASub be the subprogram associated with non-terminal <A>

Let BSub be the subprogram associated with non-terminal <B>

Let CSub be the subprogram associated with non-terminal <C>

Recursive-Descent Parsing

void Parser() {  // main function of the parser for this grammar
    Lex();       // get the first token of the source program
    ASub();      // call the subprogram for the start symbol of the grammar
}

void ASub() {
    switch (nextToken) {
    case 'a':    // <A> -> a<B>cb
        Lex();
        BSub();
        if (nextToken != 'c') error();
        Lex();
        if (nextToken != 'b') error();
        Lex();
        break;
    case 'c':    // <A> -> c<B>
        Lex();
        BSub();
        break;
    case 'b':    // <A> -> ba<C>
        Lex();
        if (nextToken != 'a') error();
        Lex();
        CSub();
        break;
    default:     // no RHS of <A> begins with nextToken
        error();
    }
}
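To experiment with this scheme, ASub can be made runnable in C. The slides leave <B> and <C> unspecified, so this sketch assumes the hypothetical rules <B> → d and <C> → e. It also reads one-character tokens from a string and lets error() record a failure instead of stopping. In this version, Parser() takes the input string and checks that the whole input was consumed. None of these details come from the slides. The third alternative, ba<C>, starts with b, so it gets its own case, and any other token is reported as an error.

```c
#include <stdbool.h>

/* Sketch scaffolding (assumptions): one-character tokens read from a
   string, '\0' as end of input, and an error() that records failure
   rather than aborting. <B> -> d and <C> -> e are hypothetical rules. */
static const char *src;
static char nextToken;
static bool failed;

static void Lex(void) { nextToken = *src; if (*src) src++; }
static void error(void) { failed = true; }

static void BSub(void) { if (nextToken != 'd') error(); Lex(); }
static void CSub(void) { if (nextToken != 'e') error(); Lex(); }

/* <A> -> a<B>cb | c<B> | ba<C>: the lookahead selects the RHS. */
static void ASub(void) {
    switch (nextToken) {
    case 'a':                          /* <A> -> a<B>cb */
        Lex(); BSub();
        if (nextToken != 'c') error();
        Lex();
        if (nextToken != 'b') error();
        Lex();
        break;
    case 'c':                          /* <A> -> c<B> */
        Lex(); BSub();
        break;
    case 'b':                          /* <A> -> ba<C> */
        Lex();
        if (nextToken != 'a') error();
        Lex(); CSub();
        break;
    default:                           /* no RHS starts with nextToken */
        error();
    }
}

/* Load the first token, parse <A>, and require the end of input. */
bool Parser(const char *s) {
    src = s;
    failed = false;
    Lex();
    ASub();
    return !failed && nextToken == '\0';
}
```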

Recursive-Descent Parsing

An example in EBNF:

<expr> → <term> {(+ | -) <term>}

<term> → <factor> {(* | /) <factor>}

<factor> → id | ( <expr> )

Let expr() be the subprogram associated with non-terminal <expr>

Let term() be the subprogram associated with non-terminal <term>

Let factor() be the subprogram associated with non-terminal <factor>

Recursive-Descent Parsing

/* Parse strings in the language generated by the rule:
   <expr> -> <term> {(+ | -) <term>} */
void expr() {
    term();      /* parse the first term */
    while (nextToken == PLUS_CODE || nextToken == MINUS_CODE) {
        Lex();   /* consume the + or - */
        term();  /* parse the next term */
    }
}

Recursive-Descent Parsing

/* Parse strings in the language generated by the rule:
   <term> -> <factor> {(* | /) <factor>} */
void term() {
    factor();      /* parse the first factor */
    while (nextToken == MULT_CODE || nextToken == DIV_CODE) {
        Lex();     /* consume the * or / */
        factor();  /* parse the next factor */
    }
}

Recursive-Descent Parsing

/* Parse strings in the language generated by the rule:
   <factor> -> id | ( <expr> ) */
void factor() {
    if (nextToken == ID_CODE)
        Lex();
    else if (nextToken == LEFT_PAREN_CODE) {
        Lex();
        expr();
        if (nextToken == RIGHT_PAREN_CODE)
            Lex();
        else
            error();   /* missing right parenthesis */
    } /* end of else if (nextToken == ... */
    else
        error();       /* neither RHS matches */
}
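Putting the three subprograms together gives a complete recognizer. The C sketch below is one possible scaffold around them. The following are all assumptions added for illustration: the numeric token codes, EOF_CODE, UNKNOWN_CODE, the identifier rule (a letter followed by letters or digits), the error flag, and the recognize() entry point.

```c
#include <ctype.h>
#include <stdbool.h>

/* Token codes; the values and EOF_CODE/UNKNOWN_CODE are assumptions. */
enum { ID_CODE, PLUS_CODE, MINUS_CODE, MULT_CODE, DIV_CODE,
       LEFT_PAREN_CODE, RIGHT_PAREN_CODE, EOF_CODE, UNKNOWN_CODE };

static const char *src;
static int nextToken;
static bool failed;

static void error(void) { failed = true; }   /* record, keep going */

/* Lex(): skip blanks, then store the code of the next token in nextToken.
   An identifier is a letter followed by letters or digits. */
static void Lex(void) {
    while (isspace((unsigned char)*src)) src++;
    if (*src == '\0') { nextToken = EOF_CODE; return; }
    if (isalpha((unsigned char)*src)) {
        while (isalnum((unsigned char)*src)) src++;
        nextToken = ID_CODE;
        return;
    }
    switch (*src++) {
    case '+': nextToken = PLUS_CODE; break;
    case '-': nextToken = MINUS_CODE; break;
    case '*': nextToken = MULT_CODE; break;
    case '/': nextToken = DIV_CODE; break;
    case '(': nextToken = LEFT_PAREN_CODE; break;
    case ')': nextToken = RIGHT_PAREN_CODE; break;
    default:  nextToken = UNKNOWN_CODE; break;
    }
}

static void expr(void);

/* <factor> -> id | ( <expr> ) */
static void factor(void) {
    if (nextToken == ID_CODE) {
        Lex();
    } else if (nextToken == LEFT_PAREN_CODE) {
        Lex();
        expr();
        if (nextToken == RIGHT_PAREN_CODE) Lex();
        else error();
    } else {
        error();
    }
}

/* <term> -> <factor> {(* | /) <factor>} */
static void term(void) {
    factor();
    while (nextToken == MULT_CODE || nextToken == DIV_CODE) {
        Lex();
        factor();
    }
}

/* <expr> -> <term> {(+ | -) <term>} */
static void expr(void) {
    term();
    while (nextToken == PLUS_CODE || nextToken == MINUS_CODE) {
        Lex();
        term();
    }
}

/* Recognizer: a yes/no answer for a whole input string. */
bool recognize(const char *s) {
    src = s;
    failed = false;
    Lex();
    expr();
    return !failed && nextToken == EOF_CODE;
}
```

For example, recognize("a + b * (c - d)") returns true, while "(a + b" and "a +" are rejected. Each loop iteration consumes an operator token, so the sketch terminates even on malformed input.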

Recursive-Descent Parsing

Limitations:

Recursive descent cannot be used with a grammar that:

contains a left-recursive rule => an infinite loop!

OR

is such that we cannot always choose the correct RHS based on a single token of lookahead

These two features are in fact problematic for LL(1) parsers in general

Left Recursion Problem

Examples:

Direct recursion: <A> → <A> + <B>

Indirect recursion:

<A> → <C>a

<C> → <A>b

=> In both cases, the subprogram for parsing <A> calls itself (directly, or through the subprogram for <C>) without consuming any input, hence an infinite loop

What about recursion that is not left recursion: does it always lead to an infinite loop?

Left Recursion Problem

A grammar can always be converted into one without left recursion

EBNF is especially useful for this purpose

Example: the left-recursive rule

<expr> → <term> | <expr> + <term>

can be rewritten in EBNF as

<expr> → <term> {+ <term>}
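The EBNF loop can be seen in a small C sketch that also evaluates the expression. The following are illustrative assumptions only: <term> is a single decimal digit, and eval_expr is a hypothetical entry point.

```c
#include <ctype.h>
#include <stdbool.h>

/* Hypothetical: <term> -> a single decimal digit. */
static const char *p;
static bool failed;

static int term(void) {
    if (!isdigit((unsigned char)*p)) { failed = true; return 0; }
    return *p++ - '0';
}

/* <expr> -> <term> {+ <term>}: the while loop replaces the left-recursive
   rule <expr> -> <expr> + <term>. Each iteration consumes a '+', so the
   loop cannot run forever, and the running total is built left to right,
   as in the left-recursive derivation ((1+2)+3). */
static int expr(void) {
    int value = term();
    while (*p == '+') {
        p++;
        value += term();
    }
    return value;
}

/* Returns true and stores the sum in *out if s is a valid <expr>. */
bool eval_expr(const char *s, int *out) {
    p = s;
    failed = false;
    *out = expr();
    return !failed && *p == '\0';
}
```

For instance, eval_expr("1+2+3", &v) succeeds with v = 6, and "1+" is rejected.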

Pairwise Disjointness

Lack of pairwise disjointness: the inability to determine the correct RHS (to expand a non-terminal) on the basis of a single token of lookahead

To make sure a grammar does not have this problem, the grammar must pass the pairwise disjointness test:

For each non-terminal A in the grammar,

let $\alpha_1, \alpha_2, \ldots, \alpha_m$ be the RHSs of the m rules that have A on the LHS

For every pair $(\alpha_i, \alpha_j)$ with $i \neq j$, we must have

$\mathrm{FIRST}(\alpha_i) \cap \mathrm{FIRST}(\alpha_j) = \emptyset$
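The test itself is a double loop over the RHSs of one non-terminal. In this C sketch, a FIRST set is stored as a bit mask over the terminals a to z. That representation, and the names FirstSet, terminal_set and pairwise_disjoint, are assumptions of the sketch.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* A FIRST set over the terminals 'a'..'z', one bit per terminal. */
typedef uint32_t FirstSet;

/* The singleton set {t} for a lowercase terminal t. */
FirstSet terminal_set(char t) { return (FirstSet)1u << (t - 'a'); }

/* Pairwise disjointness test for one non-terminal: given FIRST(αi) for
   each of its m right-hand sides, check that every pair is disjoint. */
bool pairwise_disjoint(const FirstSet first[], size_t m) {
    for (size_t i = 0; i < m; i++)
        for (size_t j = i + 1; j < m; j++)
            if (first[i] & first[j])   /* non-empty intersection */
                return false;
    return true;
}
```

For <A> → b<B> | c<B>b | a, the sets {b}, {c}, {a} pass. For <A> → c<B> | c<B>b | a, the first two sets are both {c}, so the test fails.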

Pairwise Disjointness

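The pairwise disjointness problem can sometimes be solved via a technique called left factoring

Example:

<variable> → identifier | identifier [<expression>]

can be left-factored into

<variable> → identifier <new>

<new> → [<expression>] | ε

or, in EBNF (the outer brackets mark an optional part; the inner ones are literal brackets):

<variable> → identifier [ [<expression>] ]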
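A recursive-descent subprogram for the left-factored rule consumes the identifier first. Only then does it use one token of lookahead to decide whether a subscript follows. This C sketch assumes, hypothetically, that an identifier is one lowercase letter and that <expression> is one decimal digit. Both simplifications and the name is_variable are illustrative.

```c
#include <ctype.h>
#include <stdbool.h>

/* Sketch assumptions: an identifier is one lowercase letter and
   <expression> is one decimal digit; '[' and ']' are literal tokens. */
static const char *p;

/* <variable> -> identifier [ '[' <expression> ']' ]
   The common prefix (identifier) is consumed once; the next token then
   decides between the subscript and the ε alternative. */
static bool variable(void) {
    if (!islower((unsigned char)*p)) return false;
    p++;                              /* identifier */
    if (*p == '[') {                  /* lookahead selects [<expression>] */
        p++;
        if (!isdigit((unsigned char)*p)) return false;
        p++;                          /* <expression> */
        if (*p != ']') return false;
        p++;
    }                                 /* otherwise: the ε alternative */
    return true;
}

/* True if the whole string is a <variable>. */
bool is_variable(const char *s) {
    p = s;
    return variable() && *p == '\0';
}
```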

Pairwise Disjointness

Exercise:

<S> → <S>a | b

What is the language generated by this grammar?

For which input token streams will the subprogram for <S> run into an infinite loop?

Does this grammar pass the pairwise disjointness test?

Pairwise Disjointness

Exercise:

<A> → a | <B>b | <C><B>

<B> → c<C> | c

<C> → b | a<B>

Does this grammar pass the pairwise disjointness test?

If not, rewrite the grammar to fix the problem.

LR Parsing

LL(k) parsers predict which production to use considering only the first k tokens.

What if we could postpone choosing a production until we have seen all the input tokens corresponding to the entire right-hand side of that production?

LR(k): left-to-right scan of the input, rightmost derivation, lookahead of k tokens

How is a rightmost derivation compatible with left-to-right parsing of the input?

LR Parsing

Top-down parsing

Start at the most abstract level (grammar sentence) and work down to the most concrete level (tokens)

Leftmost derivation

LL(k), recursive-descent or predictive parsing

Bottom-up parsing

Work from tokens up to sentences

Rightmost derivation (in reverse)

LR(k)

LR Parsing

An LR(k) parser uses the contents of its stack and the next k tokens of the input to decide which action to take. (In practice, k = 1.)

The parser knows when to shift and when to reduce by applying a DFA to the stack.

Edges are labeled with terminals and non-terminals.

Transitions indicate actions such as “shift and go to state n”, “reduce by rule k”, and “accept”.
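A table-driven sketch makes the stack-and-DFA description concrete. The C code below hand-codes an LR DFA for a tiny hypothetical grammar (rule 1: E → E + n, rule 2: E → n). The table layout, state numbering and names are assumptions for illustration. In practice, such tables are produced by a parser generator rather than written by hand. Shift entries follow edges labeled with terminals. GOTO_E follows the edge labeled with the non-terminal E after a reduction. Reductions are made on the lookaheads + and end of input, and the parser accepts in state 1 at the end of input.

```c
#include <stdbool.h>
#include <string.h>

/* Hypothetical grammar:  rule 1: E -> E + n    rule 2: E -> n
   Input tokens are the characters 'n' and '+'; end of input acts as '$'. */
enum Kind { ERR, SHIFT, REDUCE, ACCEPT };
typedef struct { enum Kind kind; int arg; } Action;  /* arg: state or rule */

/* Column of a token in the ACTION table; -1 for an unknown token. */
static int column(char t) { return t == 'n' ? 0 : t == '+' ? 1 : -1; }
#define END_COL 2   /* column for the end-of-input marker $ */

/* ACTION[state][column]: edges labeled with terminals, reduce, accept. */
static const Action ACTION[5][3] = {
    /*            n             +             $        */
    /* 0 */ { {SHIFT, 2}, {ERR, 0},    {ERR, 0}    },
    /* 1 */ { {ERR, 0},   {SHIFT, 3},  {ACCEPT, 0} },
    /* 2 */ { {ERR, 0},   {REDUCE, 2}, {REDUCE, 2} },
    /* 3 */ { {SHIFT, 4}, {ERR, 0},    {ERR, 0}    },
    /* 4 */ { {ERR, 0},   {REDUCE, 1}, {REDUCE, 1} },
};
static const int RHS_LEN[3] = {0, 3, 1};           /* index = rule number */
static const int GOTO_E[5] = {1, -1, -1, -1, -1};  /* edges labeled E */

bool lr_parse(const char *input) {
    int stack[64];                  /* stack of DFA states */
    int top = 0;
    stack[0] = 0;                   /* start state */
    size_t i = 0, n = strlen(input);
    for (;;) {
        int c = i < n ? column(input[i]) : END_COL;
        if (c < 0) return false;    /* unknown token */
        Action a = ACTION[stack[top]][c];
        switch (a.kind) {
        case SHIFT:                 /* push the target state, consume token */
            if (top + 1 >= 64) return false;
            stack[++top] = a.arg;
            i++;
            break;
        case REDUCE: {              /* pop one state per RHS symbol */
            top -= RHS_LEN[a.arg];
            int g = GOTO_E[stack[top]];
            if (g < 0) return false;
            stack[++top] = g;       /* follow the E edge */
            break;
        }
        case ACCEPT:
            return true;
        case ERR:
        default:
            return false;
        }
    }
}
```

Here, lr_parse("n+n+n") returns true. "n+" is rejected because state 3 has no action for the end of input.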

LR Parsing