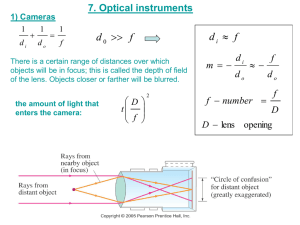

CSE 473/573 Computer Vision and Image Processing (CVIP)
Ifeoma Nwogu
inwogu@buffalo.edu
Lecture 4 – Image formation (part I)

Schedule
• Last class – linear algebra overview
• Today – Image formation and camera properties
• Readings for today: Forsyth and Ponce 1.1, 1.4; Szeliski 2.1 and 2.3.1 (optional)

Physical parameters of image formation
• Optical
  – Sensor’s lens type
  – Focal length, field of view, aperture
• Geometric
  – Type of projection
  – Camera pose
• Photometric
  – Type, direction, intensity of light reaching sensor
  – Surfaces’ reflectance properties

What is an image?
• Till now: a function, a 2D pattern of intensity values
• Today: a 2D projection of 3D points

What is a camera?
• Some device that allows the projection of light from 3D points to some “medium” that will record the light pattern.

1st known photograph
View from the Window at le Gras, Joseph Nicéphore Niépce, 1826 (reproduction, 1952)
Heliograph: a pewter plate coated with bitumen of Judea (an asphalt derivative of petroleum); after at least a day-long exposure of eight hours, the plate was removed and the latent image of the view from the window was rendered visible by washing it with a mixture of oil of lavender and white petroleum, which dissolved away the parts of the bitumen which had not been hardened by light. – Harry Ransom Center, UT Austin

Image formation
• Let’s design a camera:
  – Put a film in front of an object
  – Will we get a reasonable image?
  – Why? Why not?

Turning a room into a camera obscura
[Figures: hotel room, contrast enhanced; view from hotel window]
Accidental pinholes produce images that are unnoticed or misinterpreted as shadows.
A. Torralba and W. Freeman, Accidental Pinhole and Pinspeck Cameras, CVPR 2012

Image formation
• Let’s design a camera:
  – Put a film in front of an object
  – Add a barrier with an opening to block off most of the rays (reduces blurring)
  – The opening is called the aperture

1st known camera
• Known to Aristotle (384-322 B.C.)
• According to Da Vinci: “When images of illuminated objects ... penetrate through a small hole into a very dark room ... you will see [on the opposite wall] these objects in their proper form and color, reduced in size, in a reversed position, owing to the intersection of the rays.”
• Depth of the room is the “focal length”
• How does the aperture size affect the image?

Shrinking the aperture
• Pinhole too big: many directions are averaged, blurring the image
• Pinhole too small: diffraction effects blur the image
• Generally, pinhole cameras are dark, because only a very small set of rays from a particular point hits the screen.
Slide by Steve Seitz

Adding a lens: concept of focus
• A lens focuses light onto the film
  – There is a specific distance at which objects are “in focus”
  – Other points project to a “circle of confusion” in the image
• Changing the shape or relative locations of the lens elements changes this distance

The thin lens
[Figures: thin lens geometry]
• Sign is positive when the incident lens surface is convex, and negative when it is concave

Depth of field
http://www.cambridgeincolour.com/tutorials/depth-of-field.htm
• Depth of field is the range of distances in the scene over which objects appear acceptably sharp.
Slide by A. Efros

How can we control the depth of field?
• Changing the aperture size affects depth of field
  – A smaller aperture increases the range in which the object is approximately in focus
  – But a small aperture reduces the amount of light, so the exposure must be increased
Slide by A. Efros

Field of View (FOV)
• FOV is the extent of the observable world that is seen at any given moment.
• For cameras, it is the solid angle through which the detector is sensitive to light, i.e., the part of the scene captured on the camera’s imager.
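The thin lens, depth of field, and field of view slides can be tied together numerically. The sketch below is a hedged illustration, not the lecture's own method. It assumes the Gaussian thin lens equation 1/f = 1/z_obj + 1/z_img with all distances taken as positive magnitudes (the slides' sign convention may differ), the standard hyperfocal-distance approximation for depth of field, and, for FOV, a rectilinear lens focused at infinity so the sensor sits at distance f. The function names, the 0.03 mm circle of confusion, and the 36 mm sensor width are illustrative assumptions.

```python
import math


def thin_lens_image_distance(f: float, z_obj: float) -> float:
    """Image distance from the Gaussian thin lens equation 1/f = 1/z_obj + 1/z_img.

    Assumed convention: f, z_obj, z_img are positive distances in the same units.
    """
    if z_obj <= f:
        raise ValueError("an object at or inside the focal length forms no real image")
    return 1.0 / (1.0 / f - 1.0 / z_obj)


def depth_of_field(f: float, n_stop: float, coc: float, s: float) -> tuple:
    """Near and far limits of acceptable sharpness when focused at distance s.

    f: focal length, n_stop: f-number N (aperture diameter = f / N),
    coc: largest acceptable circle-of-confusion diameter; same units throughout.
    Uses the standard hyperfocal-distance approximation (an assumption, not from the slides).
    """
    hyperfocal = f * f / (n_stop * coc) + f
    near = s * (hyperfocal - f) / (hyperfocal + s - 2.0 * f)
    # Focusing at or beyond the hyperfocal distance keeps everything out to infinity sharp.
    far = math.inf if s >= hyperfocal else s * (hyperfocal - f) / (hyperfocal - s)
    return near, far


def horizontal_fov_deg(sensor_width: float, f: float) -> float:
    """Horizontal field of view in degrees, assuming the sensor sits at distance f (focus at infinity)."""
    return math.degrees(2.0 * math.atan(sensor_width / (2.0 * f)))


if __name__ == "__main__":
    # A 50 mm lens focused on an object 5 m away: the sensor must sit slightly beyond f.
    print(f"image distance: {thin_lens_image_distance(50.0, 5000.0):.2f} mm")

    # Smaller aperture (larger f-number) gives a wider in-focus range.
    for n in (2.0, 16.0):
        near, far = depth_of_field(50.0, n, 0.03, 5000.0)
        print(f"f/{n:g}: sharp from {near:.0f} mm to {far:.0f} mm")

    # Zooming in (larger f) narrows the field of view on a 36 mm wide sensor.
    for focal in (24.0, 50.0, 200.0):
        print(f"f = {focal:g} mm -> FOV {horizontal_fov_deg(36.0, focal):.1f} deg")
```

Under these assumptions the f/16 case stays sharp over a much deeper range than f/2, in line with the depth-of-field slide, while the FOV shrinks as f grows, which is one way to see why zooming (changing f) and moving the camera produce different images.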
Zooming and moving are not the same…
[Figures: large FOV, small f, camera close to car; small FOV, large f, camera far from the car]

Real lens systems

Lens flaws: chromatic aberration
• A lens can have different refractive indices for different wavelengths, which causes color fringing
[Figures: near lens center; near lens outer edge]

Lens flaws: Spherical aberration
• Spherical lenses don’t focus light perfectly
• Rays farther from the optical axis focus closer to the lens

Lens flaws: Spherical aberration
[Figures: left, image showing a low level of spherical aberration; right, image showing a high level of spherical aberration]
http://www.mto-ophtalmo.ch/intraocular-lenses/neutralasphericity/

Radial distortion
• Caused by imperfect lenses
• Deviations are most noticeable near the edge of the lens
[Figures: no distortion; pincushion; barrel]

Lens flaws: Vignetting

Digital camera
• A digital camera replaces film with a sensor array
  – Each cell in the array is a light-sensitive diode that converts photons to electrons
  – Two common types
    • Charge Coupled Device (CCD)
    • Complementary metal oxide semiconductor (CMOS)
  – http://electronics.howstuffworks.com/digital-camera.htm
Slide by Steve Seitz

CCD vs. CMOS
• CCD: transports the charge across the chip and reads it at one corner of the array. An analog-to-digital converter (ADC) then turns each pixel's value into a digital value by measuring the amount of charge at each photosite and converting that measurement to binary form.
• CMOS: uses several transistors at each pixel to amplify and move the charge using more traditional wires. CMOS sensors typically perform the analog-to-digital conversion on the sensor chip itself, so the chip outputs a digital signal and no separate ADC is needed.
http://electronics.howstuffworks.com/digital-camera.htm
http://www.dalsa.com/shared/content/pdfs/CCD_vs_CMOS_Litwiller_2005.pdf

Geometric projections

Types of 3D projections
• 3D projection is any method of mapping three-dimensional points to a two-dimensional plane.
  – Perspective projections
    • Objects in the distance appear smaller than those close by
    • Parallel lines appear to converge at a single image point (the image of their point at infinity), on the horizon
  – Weak perspective projections
    • Perspective effects, but not over the scale of individual objects
  – Orthographic projections
    • Objects in the distance appear the same size as those close by
    • Parallel lengths at all points have the same scale, regardless of distance from the camera

Distant objects are smaller
Effects of perspective projection:
• The apparent size of an object depends on its distance, e.g., B’ and C’ have the same height, but in reality A and C are half the size of B
• The distance d from the pinhole O to the plane of C is half the distance from O to the plane of A and B.

Parallel lines meet
It is common to draw the image plane (or film) in front of the focal point. Moving the film plane merely scales the image.
Projection of 2 parallel lines lying in the same plane:
• The projections of 2 parallel lines in the same plane F appear to converge on h
• h is a horizontal line formed by the intersection of the image plane P and a plane parallel to F passing through the aperture O.
• The line L in plane F and parallel to the image plane P has no image

Vanishing points
• Each set of parallel lines (= direction) meets at a different point
  – The vanishing point for this direction
• Sets of parallel lines on the same plane lead to collinear vanishing points
  – The line is called the horizon for that plane
• Good ways to spot faked images
  – Scale and perspective don’t work
  – Vanishing points behave badly
  – Supermarket tabloids are a great source
[Figure: example of a scene vanishing point]

Perspective projection
• Consider a coordinate system (O, i, j, k) attached to the camera whose origin O coincides with the camera aperture.
• The image plane P is located at a distance d from O along the vector k.
• The line passing through the aperture and perpendicular to P is the optical axis.
• The point c where this line intersects the plane P is the image center.
  This is often the origin of the image plane coordinate frame.

Perspective projection equations
• In image space, z = d
• Since P, O, and p are collinear, $\vec{Op} = \lambda\,\vec{OP}$ for some $\lambda$:
  $x = \lambda X,\quad y = \lambda Y,\quad d = \lambda Z \quad\Longleftrightarrow\quad \lambda = \frac{x}{X} = \frac{y}{Y} = \frac{d}{Z}$
• Therefore, $x = d\,\frac{X}{Z}$ and $y = d\,\frac{Y}{Z}$

Weak perspective
• An even coarser approximation of image formation
  – Consider the fronto-parallel plane $P_o$ defined by $Z = Z_o$
  – For any point P in $P_o$: $x = -mX,\ y = -mY$, where $m = -\frac{d}{Z_o}$
  – m is the positive magnification associated with plane $P_o$ (positive because the scene lies at $Z_o < 0$ in this camera frame)

Weak perspective
• Issue
  – Perspective effects, but not over the scale of individual objects
  – Collect points into a group at about the same depth, then divide each point by the depth of its group
  – Advantage: easy
  – Disadvantage: wrong

Orthographic projection
• No reversal of image features
• m = -1 (unnatural negative magnification)
• All light rays are parallel to the k-axis and orthogonal to P
• x = X, y = Y
• Useful for creating to-scale drawings for construction and engineering (showing details)

Modeling projection
[Figure: pinhole camera frame with axes x, y, z and image plane at distance d]
• Projection equation: $(x, y, z) \rightarrow \left(d\,\frac{x}{z},\ d\,\frac{y}{z}\right)$
Source: J. Ponce, S. Seitz

Homogeneous coordinates
$(x, y, z) \rightarrow \left(f\,\frac{x}{z},\ f\,\frac{y}{z}\right)$
• Is this a linear transformation?
  – No: division by z is nonlinear
• Trick: add one more coordinate:
  – Homogeneous image coordinates: $(x, y) \Rightarrow \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}$
  – Homogeneous scene coordinates: $(x, y, z) \Rightarrow \begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix}$
• Converting from homogeneous coordinates: $\begin{bmatrix} x \\ y \\ w \end{bmatrix} \Rightarrow \left(\frac{x}{w}, \frac{y}{w}\right)$ and $\begin{bmatrix} x \\ y \\ z \\ w \end{bmatrix} \Rightarrow \left(\frac{x}{w}, \frac{y}{w}, \frac{z}{w}\right)$
Slide by Steve Seitz

Perspective Projection Matrix
• Projection is a matrix multiplication using homogeneous coordinates:
$$\begin{bmatrix} f & 0 & 0 & 0 \\ 0 & f & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix} = \begin{bmatrix} f x \\ f y \\ z \end{bmatrix} \Rightarrow \left(f\,\frac{x}{z},\ f\,\frac{y}{z}\right)$$
  (divide by the third coordinate)

In practice: lots of coordinate transformations…
2D point (3×1) = [camera-to-pixel coord. trans. matrix (3×3)] × [perspective projection matrix (3×4)] × [world-to-camera coord. trans. matrix (4×4)] × 3D point (4×1)

Orthographic projection (sort of…)
[Figure: M.C. Escher’s Waterfall]
http://glasnost.itcarlow.ie/~powerk/GeneralGraphicsNotes/projection/orthographicprojection.html

Orthographic Projection
• Special case of perspective projection
  – Distance from the center of projection to the image plane is infinite
  – Also called “parallel projection”
  – What’s the projection matrix?
Slide by Steve Seitz

Physical parameters of image formation
• Optical
  – Sensor’s lens type
  – Focal length, field of view, aperture
• Geometric
  – Type of projection
  – Camera pose
• Photometric
  – Type, direction, intensity of light reaching sensor
  – Surfaces’ reflectance properties

Slide Credits
• David Forsyth – UIUC, slides accompanying Forsyth and Ponce, Computer Vision book, 2/e
• Rob Fergus – NYU
• Aaron Bobick – GA Tech
• Svetlana Lazebnik – UIUC

Next class
• More on image formation (photometric)
• Readings for next lecture:
  – Forsyth and Ponce 2.1, 2.2.4; Szeliski 2.2 (optional)
• Readings for today:
  – Forsyth and Ponce 1.1, 1.4; Szeliski 2.1 and 2.3.1 (optional)

Questions
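As a numerical companion to the projection slides (perspective, weak perspective, orthographic, homogeneous coordinates), here is a minimal pure-Python sketch. It assumes the (f x/z, f y/z) convention of the homogeneous-coordinate slides with points at positive depth, so weak perspective becomes a single scale f / z_ref (the weak perspective slide writes the same idea as x = -mX in the Forsyth and Ponce frame). The orthographic matrix shown is one common answer to the slide's "What's the projection matrix?" question, and all function names are hypothetical helpers, not a library API.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Point2 = Tuple[float, float]


def perspective_matrix(f: float) -> Matrix:
    """3x4 matrix mapping homogeneous (x, y, z, 1) to (f*x, f*y, z)."""
    return [[f, 0.0, 0.0, 0.0],
            [0.0, f, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0]]


def orthographic_matrix() -> Matrix:
    """3x4 matrix mapping homogeneous (x, y, z, 1) to (x, y, 1): depth is dropped."""
    return [[1.0, 0.0, 0.0, 0.0],
            [0.0, 1.0, 0.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def project(m: Matrix, point: Sequence[float]) -> Point2:
    """Lift a 3D point to homogeneous coordinates, multiply by m, divide by the third coordinate."""
    homog = [point[0], point[1], point[2], 1.0]
    u, v, w = (sum(row[k] * homog[k] for k in range(4)) for row in m)
    return (u / w, v / w)


def weak_perspective(f: float, point: Sequence[float], z_ref: float) -> Point2:
    """Scale x and y by one magnification f / z_ref shared by the whole group of points."""
    scale = f / z_ref
    return (scale * point[0], scale * point[1])


def vanishing_point(f: float, direction: Sequence[float]) -> Point2:
    """Image of the point at infinity along a 3D direction (requires direction[2] != 0)."""
    return (f * direction[0] / direction[2], f * direction[1] / direction[2])


if __name__ == "__main__":
    P = perspective_matrix(1.0)

    # Apparent size: B is 2 units tall at depth 10, C is 1 unit tall at depth 5.
    b_height = project(P, (0.0, 2.0, 10.0))[1] - project(P, (0.0, 0.0, 10.0))[1]
    c_height = project(P, (0.0, 1.0, 5.0))[1] - project(P, (0.0, 0.0, 5.0))[1]
    print("projected heights of B and C:", b_height, c_height)  # both 0.2

    # Two parallel lines with direction (1, 0, 1): far-away points approach one image point.
    direction = (1.0, 0.0, 1.0)
    for start in ((0.0, -1.0, 1.0), (2.0, -1.0, 1.0)):
        far_point = tuple(s + 1e6 * d for s, d in zip(start, direction))
        print("far point of line from", start, "->", project(P, far_point))
    print("predicted vanishing point:", vanishing_point(1.0, direction))

    # Orthographic projection ignores depth entirely.
    print(project(orthographic_matrix(), (3.0, 4.0, 100.0)))  # (3.0, 4.0)
```

The division by the third homogeneous coordinate is the only nonlinear step, which is why the projection itself can be written as a single matrix product.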