- GENI Wiki

Ilya Baldin

RENCI, UNC-CH

Victor Orlikowski

Duke University

First things first

1.

IMPORT THE OVA INTO YOUR VIRTUAL BOX

2.

LOGIN as gec20user/gec20tutorial

3.

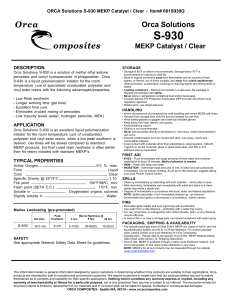

START ORCA ACTORS

sudo /etc/init.d/orca_am+broker-12080 clean-restart

sudo /etc/init.d/orca_sm-14080 clean-restart

sudo /etc/init.d/orca_controller-11080 start

4.

WAIT AND LET IT CHURN – THIS IS ALL OF EXOGENI IN ONE

VIRTUAL MACHINE!

WILL TAKE SEVERAL MINUTES!
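
The three start commands can also be scripted. The sketch below is only an illustration: the script paths and actions are copied from step 3, while the helper names (`build_commands`, `start_orca`) and the dry-run default are assumptions made for this example.

```python
import subprocess

# Init scripts and actions copied from step 3, kept in the order listed there.
ORCA_START_STEPS = [
    ("/etc/init.d/orca_am+broker-12080", "clean-restart"),
    ("/etc/init.d/orca_sm-14080", "clean-restart"),
    ("/etc/init.d/orca_controller-11080", "start"),
]


def build_commands(steps=ORCA_START_STEPS):
    """Return one sudo argv list per step, in the listed order."""
    return [["sudo", script, action] for script, action in steps]


def start_orca(dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for argv in build_commands():
        print(" ".join(argv))
        if not dry_run:
            # check=True stops at the first init script that fails.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    start_orca(dry_run=True)
```

Run it with `dry_run=False` inside the tutorial VM to actually start the actors.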

13 GPO-funded racks built by IBM plus several “opt-in” racks
◦ Partnership between RENCI, Duke and IBM
◦ IBM x3650 M4 servers (X-series 2U)
    ◦ 1x146GB 10K SAS hard drive + 1x500GB secondary drive
    ◦ 48 GB RAM, 1333 MHz
    ◦ Dual-socket 8-core CPU
    ◦ Dual 1Gbps adapter (management network)
    ◦ 10G dual-port Chelsio adapter (dataplane)
◦ BNT 8264 10G/40G OpenFlow switch
◦ DS3512 6TB or server w/ drives totaling 6.5TB sliverable storage
    ◦ iSCSI interface for head node image storage as well as experimenter slivering
Cisco (WVN, NCSU, GWU) and Dell (UvA) configurations also exist
Each rack is a small networked cloud
◦ OpenStack-based with NEuca extensions
◦ xCAT for baremetal node provisioning
http://wiki.exogeni.net

5 upcoming racks at TAMU, UMass Amherst, WSU, UAF and PSC not shown

[Figure: ExoGENI rack layout. Components: VPN appliance (Juniper SSG5), management switch (IBM G8052R), 10 worker nodes (IBM x3650 M4), management node (IBM x3650 M4), sliverable storage (IBM DS3512), OpenFlow-enabled L2 switch (IBM G8264R). Links: to campus Layer 3 network; dataplane to campus OpenFlow network; dataplane to dynamic circuit backbone (10/40/100Gbps). Link speed labels: 10/100, 1 Gbps. Backbone connectivity: Option 1, direct L2 peering w/ the backbone; Option 2, static VLAN segments provisioned to the backbone.]

CentOS 6.X base install
Resource Provisioning
◦ xCAT for bare metal provisioning
◦ OpenStack + NEuca for VMs
◦ FlowVisor
    ◦ Floodlight used internally by ORCA
GENI Software
◦ ORCA for VM, bare metal and OpenFlow
◦ FOAM for OpenFlow experiments
Worker and head nodes can be reinstalled remotely via IPMI + KickStart
Monitoring via Nagios (Check_MK)
◦ ExoGENI ops staff can monitor all racks
◦ Site owners can monitor their own rack
Syslogs collected centrally

OpenStack
◦ Currently Folsom based on early release of RHOS
◦ Patched to support ORCA
    ◦ Additional nova subcommands
    ◦ Quantum plugin to manage Layer 2 networking between VMs
◦ Manages creation of VMs with multiple L2 interfaces attached to high-bandwidth L2 dataplane switch
◦ One “management” interface created by nova attaches to management switch for low-bandwidth experimenter access
Quantum plugin
◦ Creates and manages 802.1q interfaces on worker nodes to attach VMs to VLANs
◦ Creates and manages OVS instances to bridge interfaces to VLANs
◦ DOES NOT MANAGE MANAGEMENT IP ADDRESS SPACE!
◦ DOES NOT MANAGE THE ATTACHED SWITCH!

xCAT manages booting of bare-metal nodes for users and installation of OpenStack workers for sysadmins
Stock software
Upgrading the rack means
◦ Upgrading the head node (painful)
◦ Using xCAT to update worker nodes (easy!)

FlowVisor
◦ Used by ORCA to “slice” the OpenFlow part of the switch for experiments via API
    ◦ Typically along <port><vlan tag> dimensions
◦ For emulating VLAN behavior ORCA starts Floodlight instances attached to slices
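
Slicing along <port><vlan tag> dimensions can be pictured as a lookup table. The sketch below is a toy model, not the FlowVisor API: the `FlowSpace` type, the helper names, and all port, VLAN and slice values are made up for illustration.

```python
from typing import Dict, Optional, Tuple

# (switch port, VLAN tag) -> slice name. All values below are made up.
FlowSpace = Dict[Tuple[int, int], str]


def add_rule(flowspace: FlowSpace, port: int, vlan: int, slice_name: str) -> None:
    """Give slice_name the traffic on (port, vlan); refuse overlaps."""
    key = (port, vlan)
    owner = flowspace.get(key)
    if owner is not None and owner != slice_name:
        raise ValueError(f"{key} already belongs to slice {owner!r}")
    flowspace[key] = slice_name


def slice_for(flowspace: FlowSpace, port: int, vlan: int) -> Optional[str]:
    """Return the slice that owns traffic seen on (port, vlan), if any."""
    return flowspace.get((port, vlan))


flowspace: FlowSpace = {}
add_rule(flowspace, port=1, vlan=1001, slice_name="slice-a")
add_rule(flowspace, port=2, vlan=1001, slice_name="slice-a")
add_rule(flowspace, port=1, vlan=1002, slice_name="slice-b")

print(slice_for(flowspace, 1, 1001))  # slice-a
print(slice_for(flowspace, 3, 1001))  # None
```

Refusing overlapping rules keeps each (port, VLAN) pair in exactly one slice, which is the isolation property the slicing is meant to provide.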

Floodlight
◦ Stock v. 0.9 packaged as a jar
◦ Started with parameters that minimize JVM footprint
◦ Uses ‘forwarding’ module to emulate learning switch behavior on a VLAN

FOAM
◦ Translator from GENI AM API + RSpec to FlowVisor
    ◦ Does more, but not in ExoGENI

Control framework
◦ Orchestrates resources at user requests
◦ Provides operator visibility and control
Presents multiple APIs
◦ GENI API
    ◦ Used by GENI experimenter tools (Flack, omni)
◦ ORCA API
    ◦ Used by Flukes experimenter tool
◦ Management API
    ◦ Used by Pequod administrator tool

[Figure: a slice as an OpenFlow-enabled L2 topology with its computed embedding. Labels: virtual network exchange; cloud hosts with network control; virtual colo (campus net to circuit fabric); brokering services; sites; user-facing ORCA agents; user.]
Slice owner may deploy an IP network into a slice (OSPF).
1. Provision a dynamic slice of networked virtual infrastructure from multiple providers, built to order for a guest application.
2. Stitch slice into an end-to-end execution environment.
Topology requests specified in NDL

[Figure: a user interacting with brokers and sites. Labels: USER, REQUEST, REDEEM, BROKER (x3), SITE (x4), NETWORK/EDGE, NETWORK/TRANSIT, STORAGE, COMPUTE.]

An actor encapsulates a piece of logic
◦ Aggregate Manager (AM): owner of the resources
◦ Broker: partitions and redistributes resources
◦ Service Manager (SM): interacts with the user
A Controller is a separable piece of logic encapsulating topology embedding and presenting remote APIs to users
A container stores some number of actors and connects them to
◦ The outside world using remote API endpoints
◦ A database for storing their state
Any number of actors can share a container
A controller is *always* by itself

Tickets, leases and reservations are used somewhat interchangeably
◦ Tickets and leases are kinds of reservation
    ◦ A ticket indicates the right to instantiate a resource
    ◦ A lease indicates ownership of instantiated resources
AM gives tickets to brokers to indicate delegation of resources
Brokers subdivide the given tickets into other smaller tickets and give them to SMs upon request
SMs redeem tickets with AMs and receive leases which indicate which resources have been instantiated for them
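
The delegate, subdivide and redeem steps can be traced in a toy model. This is a sketch under stated assumptions, not ORCA code: the class and method names, the unit counts, and the "vm" resource type are all invented for illustration.

```python
import uuid
from dataclasses import dataclass


@dataclass(frozen=True)
class Ticket:
    """Right to instantiate `units` of a resource type."""
    guid: str
    resource_type: str
    units: int


@dataclass(frozen=True)
class Lease:
    """Ownership of resources that have been instantiated."""
    guid: str
    resource_type: str
    units: int


class AggregateManager:
    def __init__(self, resource_type, units):
        self.resource_type = resource_type
        self.free = units

    def delegate(self, units):
        """Hand a ticket for part of the inventory to a broker."""
        if units > self.free:
            raise RuntimeError("cannot delegate more than the AM owns")
        return Ticket(str(uuid.uuid4()), self.resource_type, units)

    def redeem(self, ticket):
        """Instantiate the ticketed resources and return a lease."""
        if ticket.units > self.free:
            raise RuntimeError("not enough free resources to redeem ticket")
        self.free -= ticket.units
        # In this sketch the lease reuses the ticket's GUID.
        return Lease(ticket.guid, ticket.resource_type, ticket.units)


class Broker:
    def __init__(self, delegated):
        self.resource_type = delegated.resource_type
        self.available = delegated.units

    def issue(self, units):
        """Carve a smaller ticket out of the delegated pool for an SM."""
        if units > self.available:
            raise RuntimeError("broker has too few delegated units")
        self.available -= units
        return Ticket(str(uuid.uuid4()), self.resource_type, units)


am = AggregateManager("vm", units=10)
broker = Broker(am.delegate(8))   # AM -> broker: delegation
ticket = broker.issue(3)          # broker -> SM: smaller ticket
lease = am.redeem(ticket)         # SM -> AM: redeem, receive a lease

print(broker.available)           # 5
print(am.free)                    # 7
print(lease.guid == ticket.guid)  # True
```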

Slices consist of reservations
Slices are identified by GUID
◦ They do have user-given names as an attribute
Reservations are identified by GUIDs
◦ They have additional properties that describe
    ◦ Constraints
    ◦ Details of resources
Each reservation belongs to a slice
Slice and reservation GUIDs are the same across all actors
◦ Ticket issued by broker to a slice
◦ Ticket seen on SM in a slice, becomes a lease with the same GUID
◦ Lease issued by AM for a ticket to a slice
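
Because GUIDs are shared, one reservation can be followed from actor to actor. The sketch below illustrates the idea with invented names (`ActorView`, `trace`) and randomly generated GUIDs; it does not reflect how ORCA stores its state.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Reservation:
    guid: str
    slice_guid: str
    kind: str  # "ticket" or "lease"


@dataclass
class ActorView:
    """What one actor (SM, broker or AM) records, keyed by reservation GUID."""
    name: str
    reservations: Dict[str, Reservation] = field(default_factory=dict)

    def record(self, guid, slice_guid, kind):
        self.reservations[guid] = Reservation(guid, slice_guid, kind)


def trace(guid, actors):
    """Return {actor name: kind} for every actor that knows this GUID."""
    return {a.name: a.reservations[guid].kind
            for a in actors if guid in a.reservations}


slice_guid = str(uuid.uuid4())
res_guid = str(uuid.uuid4())

sm, broker, am = ActorView("sm"), ActorView("broker"), ActorView("am")
broker.record(res_guid, slice_guid, "ticket")  # ticket issued by broker
am.record(res_guid, slice_guid, "lease")       # lease issued by AM
sm.record(res_guid, slice_guid, "lease")       # SM's ticket became a lease

print(trace(res_guid, [sm, broker, am]))
# {'sm': 'lease', 'broker': 'ticket', 'am': 'lease'}
```

The same lookup key works on every actor, which is what makes it practical to inspect one slice on the SM, the broker and the AMs in turn.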

[Figure: slice provisioning sequence among Controller, SM, Broker and AMs. Step labels: 2. Request slice; 3. Request resources; 4. Request resources; 5. Provide tickets; 6. Redeem tickets; 7. Redeem tickets; 8. Return leases.]

ORCA actor configuration
◦ ORCA looks for configuration files relative to $ORCA_HOME environment variable
◦ /etc/orca/am+broker-12080
◦ /etc/orca/sm-14080
ORCA controller configuration
◦ Similar, except everything is in reference to $CONTROLLER_HOME
◦ /etc/orca/controller-11080

Actor configuration
◦ config/orca.properties: describes the container
◦ config/config.xml: describes the actors in the container
◦ config/runtime/: contains keys of actors
◦ config/ndl/: contains NDL topology descriptions of actor topologies (AMs only)
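
A quick way to sanity-check an actor directory is to look for the entries listed above. The sketch below is illustrative only: the relative paths come from this slide, while the helper names and the `is_am` flag are assumptions made for the example.

```python
import os
from pathlib import Path

# Relative paths taken from the actor configuration list.
COMMON_ENTRIES = ["config/orca.properties", "config/config.xml", "config/runtime"]
AM_ONLY_ENTRIES = ["config/ndl"]  # NDL topology descriptions, AMs only


def expected_entries(is_am):
    """Entries expected under the actor home; AMs also carry config/ndl."""
    return COMMON_ENTRIES + (AM_ONLY_ENTRIES if is_am else [])


def missing_entries(orca_home, is_am=False):
    """List expected entries that do not exist under orca_home."""
    home = Path(orca_home)
    return [entry for entry in expected_entries(is_am)
            if not (home / entry).exists()]


if __name__ == "__main__":
    home = os.environ.get("ORCA_HOME")
    if home is None:
        print("ORCA_HOME is not set")
    else:
        for entry in missing_entries(home):
            print(f"missing: {Path(home) / entry}")
```

For example, `missing_entries("/etc/orca/am+broker-12080", is_am=True)` would also check for `config/ndl`.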

Controller configuration
◦ config/controller.properties: similar to orca.properties, describes the container for controller
◦ geni-trusted.jks: Java truststore with trust roots for XMLRPC interface to users
◦ xmlrpc.jks: Java keystore with the keypair for this controller to use for SSL
◦ xmlrpc.user.whitelist, xmlrpc.user.blacklist: lists of user urn regex patterns that should be allowed/denied
    ◦ Blacklist parsed first
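
One plausible reading of "blacklist parsed first" is sketched below. It is not the controller's actual code: full-string matching, deny-by-default when nothing matches, and every pattern and URN shown are assumptions made for illustration.

```python
import re


def compile_patterns(lines):
    """Compile each non-empty line as a regular expression."""
    return [re.compile(line.strip()) for line in lines if line.strip()]


def is_allowed(urn, whitelist, blacklist):
    """Check the blacklist first, then the whitelist; deny by default."""
    if any(p.fullmatch(urn) for p in blacklist):
        return False
    return any(p.fullmatch(urn) for p in whitelist)


# Hypothetical patterns and URNs, for illustration only.
whitelist = compile_patterns([r"urn:publicid:IDN\+example\.net\+user\+.*"])
blacklist = compile_patterns([r"urn:publicid:IDN\+example\.net\+user\+mallory"])

print(is_allowed("urn:publicid:IDN+example.net+user+alice", whitelist, blacklist))    # True
print(is_allowed("urn:publicid:IDN+example.net+user+mallory", whitelist, blacklist))  # False
print(is_allowed("urn:publicid:IDN+other.org+user+bob", whitelist, blacklist))        # False
```

Checking the blacklist first means a denied user stays denied even when a broad whitelist pattern would also match.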

[Figure: ExoGENI actor deployment. Global ExoGENI actors: ExoSM and ExoBroker, shown with DD AM, BEN AM, OSCARS AM, LEARN AM and SL AM. Rack head nodes (rci-hn.exogeni.net for RCI XO, bbn-hn.exogeni.net for BBN XO, StarLight headnode, other XO headnode) each run their own Broker, SM and AM (labeled A, B, C). Other labels: LEARN, BEN, I2 AL2S, DOE ESnet, Departure Drive, 2601-2610, Campus OF.]

AMs and brokers have ‘special’ inventory slices
◦ AM inventory slice describes the resources it owns
◦ Broker inventory slice describes resources it was given
AMs also have slices named after the broker they delegated resources to
◦ Describe resources delegated to that broker

Inspect existing slices on different actors using Pequod
Create an inter-rack slice in Flukes
Inspect slice in Flukes
Inspect slice in
◦ SM
◦ Broker
◦ AMs
Close reservations/slice on various actors

http://www.exogeni.net

http://wiki.exogeni.net