Approximate Bayesian Inference I


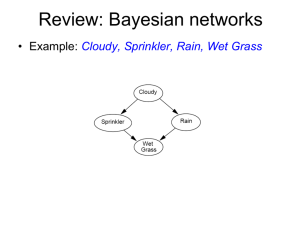

Pattern Recognition and Machine Learning, Chapter 10
Approximate Bayesian Inference I: Structural Approximations
Falk Lieder, December 2, 2010

Outline: Introduction, Variational Inference, Variational Bayes, Applications

Statistical Inference
From observations $X$, infer the hidden states $Z$. The result is the posterior belief $P(Z\mid X)$ and posterior expectations $\mathbb{E}[f(Z)\mid X]$.

When Do You Need Approximations?
The problem with Bayes' theorem is that it often leads to integrals that you don't know how to solve:
$$p(z\mid X) = \frac{p(X\mid z)\,p(z)}{p(X)}, \qquad p(X) = \int p(X\mid z)\,p(z)\,dz$$
1. There is no analytic solution for the evidence $p(X) = \int p(X\mid z)\,p(z)\,dz$.
2. There is no analytic solution for posterior expectations $\mathbb{E}[f(Z)\mid X] = \int f(z)\,p(z\mid X)\,dz$.
3. In the discrete case, computing $p(X)$ has complexity $O(\exp(\dim Z))$, i.e. exponential in the number of dimensions of $Z$.
4. Sequential learning for non-conjugate priors: the posterior no longer has the form of the prior.

How to Approximate?
• Samples: approximate the density by a histogram and expectations by averages.
• Numerical integration: approximate a) the evidence $p(X)$ and b) expectations numerically. This is infeasible if $Z$ is high-dimensional.
• Structural approximation: approximate the posterior by a density of a given form, whose evidence and expectations are easy to compute.

How to Approximate? Structural vs. Stochastic
Structural approximations (variational inference):
+ Fast to compute
+ Efficient representation
+ Learning rules give insight
- Systematic error
- Application often requires mathematical derivations
Stochastic approximations (Monte Carlo methods, sampling):
+ Asymptotically exact
+ Easily applicable, general-purpose algorithms
- Time-intensive
- Storage-intensive

Variational Inference: An Intuition
[Figure: in the space of probability distributions, the VB approximation is the member of the target family that is closest to the true posterior in KL divergence.]

What Does "Closest" Mean?
Intuition: "closest" means minimal additional surprise on average.
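The intuition of "additional surprise" can be checked numerically for discrete beliefs. The following is a minimal Python sketch. It assumes surprise is measured in nats as $-\ln$ of the probability a belief assigns to the outcome that occurs. The function names and the two three-outcome distributions are hypothetical choices for illustration.

```python
import math


def surprise(prob):
    """Surprise (in nats) of an outcome to which a belief assigned probability prob."""
    return -math.log(prob)


def average_additional_surprise(p, q):
    """Average extra surprise from predicting with belief q when outcomes follow p.

    Computes E_p[-ln q(Z)] - E_p[-ln p(Z)] for discrete distributions given as
    equal-length lists of probabilities.
    """
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i == 0.0:
            continue  # outcomes that never occur under p add nothing
        if q_i == 0.0:
            return math.inf  # q rules out an outcome that can occur
        total += p_i * (surprise(q_i) - surprise(p_i))
    return total


# Hypothetical three-outcome example: p is the true belief, q a rival belief.
p = [0.5, 0.3, 0.2]
q = [0.4, 0.4, 0.2]

print(average_additional_surprise(p, p))  # 0.0: identical beliefs
print(average_additional_surprise(p, q))  # about 0.0253
print(average_additional_surprise(q, p))  # about 0.0258: swapping the arguments changes the value
```

Identical beliefs give zero additional surprise and different beliefs give a positive value. Swapping the two arguments changes the value, which is why this quantity cannot serve as a distance metric.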
Kullback-Leibler (KL) divergence measures average additional surprise. With $\mathrm{Surprise}_q(z) = -\ln q(z)$:
$$\mathrm{KL}[p\,\|\,q] = \mathbb{E}_p[\mathrm{Surprise}_q(Z)] - \mathbb{E}_p[\mathrm{Surprise}_p(Z)]$$
• KL[p||q] measures how much less accurate the belief q is than p, if p is the true belief.
• KL[p||q] is the largest reduction in average surprise that you can achieve by revising the belief q, if p is the true belief.

KL-Divergence Illustration
$$\mathrm{KL}[p(\cdot\mid X)\,\|\,q] := \int p(Z\mid X)\,\ln\frac{p(Z\mid X)}{q(Z)}\,dZ$$

Properties of the KL-Divergence
$$\mathrm{KL}[q\,\|\,p(\cdot\mid X)] := \int q(Z)\,\ln\frac{q(Z)}{p(Z\mid X)}\,dZ$$
1. It is zero iff both arguments are identical: $\mathrm{KL}[q\,\|\,p(\cdot\mid X)] = 0 \Leftrightarrow q = p(\cdot\mid X)$.
2. It is greater than zero if they are different: $\mathrm{KL}[q\,\|\,p(\cdot\mid X)] > 0 \Leftrightarrow q \neq p(\cdot\mid X)$.
Disadvantage: The KL divergence is not a metric (distance function), because
a) it is not symmetric, and
b) it does not satisfy the triangle inequality.

How to Find the Closest Target Density?
• Intuition: minimize the distance.
• Implementations:
  – Variational Bayes: minimize KL[q||p]
  – Expectation Propagation: minimize KL[p||q]
• Arbitrariness:
  – Different measures ⇒ different algorithms and different results
  – Alternative schemes are being developed, e.g. the Jaakkola-Jordan variational method and Kikuchi approximations.

Minimizing Functionals
The KL divergence is a functional, so minimizing it requires variational calculus.
Calculus:
• Functions map vectors to real numbers: $f: \mathbb{R}^n \to \mathbb{R}$.
• Derivative: change of $f(x_1,\dots,x_n)$ for infinitesimal changes in $x_1,\dots,x_n$.
• Minimizing functions: find the root of the derivative.
Variational calculus:
• Functionals map functions to real numbers.
• Functional derivative: change of $F[f]$, which depends on all values $f(-\infty),\dots,f(+\infty)$, for infinitesimal changes in $f(-\infty),\dots,f(0),\dots,f(+\infty)$.
• Minimizing functionals: find the root of the functional derivative.

VB and the Free Energy $\mathcal{F}(q)$
Variational Bayes: the approximate posterior is
$$q = \arg\min_{q\in T}\mathrm{KL}[q\,\|\,p(z\mid X)]$$
Problem: You can't evaluate the KL divergence, because you can't evaluate the posterior.
Solution:
$$\mathrm{KL}[q\,\|\,p(z\mid X)] = \int q(z)\ln\frac{q(z)}{p(z\mid X)}\,dz = \int q(z)\ln\frac{q(z)\,p(X)}{p(z,X)}\,dz = \underbrace{\int q(z)\ln\frac{q(z)}{p(z,X)}\,dz}_{-\mathcal{F}(q)\,=\,-\mathcal{L}(q)} + \underbrace{\ln p(X)}_{\text{const}}$$
Conclusion: You can maximize the free energy instead.

VB: Minimizing the KL Divergence Is Equivalent to Maximizing the Free Energy $\mathcal{F}(q)$
$$\ln p(X) = \mathcal{F}(q) + \mathrm{KL}[q\,\|\,p(\cdot\mid X)]$$
Because $\ln p(X)$ does not depend on $q$, every increase in $\mathcal{F}(q)$ is an equal decrease in the KL divergence.

Constrained Free-Energy Maximization
$$q = \arg\max_{q\in T}\mathcal{F}(q)$$
Intuition:
• Maximize a lower bound on the log model evidence.
• Maximization is restricted to tractable target densities $T$.
Definition:
$$\mathcal{F}(q) := \int q(z)\,\ln\frac{p(X,z)}{q(z)}\,dz$$
Properties:
• $\mathcal{F}(q) \le \ln p(X)$
• The free energy is maximal for the true posterior, where it equals $\ln p(X)$.

Variational Approximations
1. Factorial approximations (meanfield)
  – Independence assumption: $q(z) = \prod_{i=1}^K q_i(z_i)$
  – Optimization with respect to the factor densities $q_i$
  – No restriction on the functional form of the factors
2. Approximation by parametric distributions
  – Optimization with respect to the parameters
3. Variational approximations for model comparison
  – Variational approximation of the log model evidence

Meanfield Approximation
Goal:
1. Rewrite $\mathcal{F}(q)$ as a function of a single factor $q_j$.
2. Optimize $\mathcal{F}(q_j)$ separately for each factor $q_j$, cycling through the factors until convergence.
Step 1: Split the free energy into the expected log joint and the entropy term:
$$\mathcal{F}(q) = \int \prod_{i=1}^K q_i(z_i)\,\ln p(X,Z)\,dz_1\cdots dz_K - \int \prod_{i=1}^K q_i(z_i)\sum_{k=1}^K \ln q_k(z_k)\,dz_1\cdots dz_K$$
The first term, viewed as a function of $q_j$, is
$$\int q_j(z_j)\left[\int \ln p(X,Z)\prod_{i\neq j} q_i(z_i)\,dz_1\cdots dz_{j-1}\,dz_{j+1}\cdots dz_K\right]dz_j = \int q_j(z_j)\,\ln\tilde p(X,z_j)\,dz_j + \mathrm{const},$$
where $\ln\tilde p(X,z_j) := \mathbb{E}_{i\neq j}[\ln p(X,Z)] + \mathrm{const}$.
In the second term every factor integrates to one, so only the $k=j$ summand depends on $q_j$:
$$\int \prod_{i=1}^K q_i(z_i)\sum_{k=1}^K \ln q_k(z_k)\,dz_1\cdots dz_K = \int q_j(z_j)\,\ln q_j(z_j)\,dz_j + \mathrm{const}$$
Hence
$$\mathcal{F}(q_j) = \int q_j(z_j)\,\ln\tilde p(X,z_j)\,dz_j - \int q_j(z_j)\,\ln q_j(z_j)\,dz_j + \mathrm{const}$$

Meanfield Approximation, Step 2
Notice that $\mathcal{F}(q_j) = -\mathrm{KL}[q_j\,\|\,\tilde p(X,\cdot)] + \mathrm{const}$ when the constant in $\ln\tilde p$ is chosen so that $\tilde p(X,\cdot)$ is normalized. The KL divergence is zero only for identical arguments, so
$$q_j^\star = \arg\max_{q_j}\mathcal{F}(q_j) = \tilde p(X,z_j), \qquad \ln q_j^\star(z_j) = \mathbb{E}_{i\neq j}[\ln p(X,Z)] + \mathrm{const}$$
The constant is fixed by normalization, because $q_j$ has to integrate to one. Hence
$$q_j^\star(z_j) = \frac{\exp\big(\mathbb{E}_{i\neq j}[\ln p(X,Z)]\big)}{\int \exp\big(\mathbb{E}_{i\neq j}[\ln p(X,Z)]\big)\,dz_j}$$

Meanfield Example
True distribution: $p(z_1,z_2) = \mathcal{N}(\mu,\Lambda^{-1})$ with $\mu = (\mu_1,\mu_2)^\top$ and $\Lambda = \begin{pmatrix}\lambda_{11} & \lambda_{12}\\ \lambda_{12} & \lambda_{22}\end{pmatrix}$
Target family: $q(z_1,z_2) = q_1(z_1)\,q_2(z_2)$
VB meanfield solution:
1. $\ln q_1(z_1) = \mathbb{E}_{z_2}[\ln p(z_1,z_2)] + \mathrm{const}$
2. $\mathbb{E}_{z_2}[\ln p(z_1,z_2)] = -\tfrac{1}{2}\left[\lambda_{11}(z_1-\mu_1)^2 + 2\lambda_{12}(z_1-\mu_1)(\mathbb{E}[z_2]-\mu_2)\right] + \mathrm{const}$
3. Completing the square gives $q_1(z_1) = \mathcal{N}(z_1\mid m_1,\lambda_{11}^{-1})$ with $m_1 = \mu_1 - \lambda_{11}^{-1}\lambda_{12}(\mathbb{E}[z_2]-\mu_2)$, and analogously for $q_2$.

Meanfield Example: True Density vs. Approximation
[Figure: true density and its VB approximation.]
Observation: The VB approximation is more compact than the true density.
Reason: KL[q||p] does not penalize deviations where q is close to 0:
$$\mathrm{KL}[q\,\|\,p(\cdot\mid X)] := \int q(Z)\,\ln\frac{q(Z)}{p(Z\mid X)}\,dZ$$
Unreasonable assumptions ⇒ poor approximation.

KL[q||p] vs. KL[p||q]
Variational Bayes (KL[q||p]):
• Analytically easier
• The approximation is more compact
Expectation Propagation (KL[p||q]):
• More involved
• The approximation is wider

2. Parametric Approximations
• Problem:
  – You don't know how to integrate prior times likelihood.
• Solution:
  – Approximate $p(z\mid X)$ by $q \in \{q(\cdot\,;\theta) : \theta\in\Theta\}$.
  – The KL divergence and the free energy become functions of the parameters.
  – Apply standard optimization techniques.
  – Setting the derivatives to zero gives one equation per parameter.
  – Solve the system of equations by iterative updating.

Parametric Approximation Example
Goal: learn the reward probability $p$.
[Figure: graphical model with hidden state $Z \in \mathbb{R}$ and observation $X \in \{0,1\}$.]
• Likelihood: $X \sim B(n, p)$ with $p = 1/(1+\exp(-20z-4))$
• Prior: $Z \sim \mathcal{N}(0,1)$
• Posterior: $p(z\mid X=1) \propto \exp(-z^2/2)\cdot\dfrac{1}{1+\exp(-20z-4)}$
Problem: You cannot derive a learning rule for the expected reward probability and its variance, because
a) there is no analytic formula for the expected reward probability, and
b) the form of the prior changes with every observation.
Solution: Approximate the posterior by a Gaussian.

Solution
$$(\mu,\sigma) = \arg\min_{\mu,\sigma}\mathrm{KL}[q(\cdot\,;\mu,\sigma)\,\|\,p(z\mid X)] = \arg\max_{\mu,\sigma}\mathcal{F}(q(\cdot\,;\mu,\sigma))$$
Solve
$$\text{I:}\quad \frac{\partial\,\mathrm{KL}[q(\cdot\,;\mu,\sigma)\,\|\,p]}{\partial\mu} = -\frac{\partial\,\mathcal{F}(q(\cdot\,;\mu,\sigma))}{\partial\mu} = 0$$
$$\text{II:}\quad \frac{\partial\,\mathrm{KL}[q(\cdot\,;\mu,\sigma)\,\|\,p]}{\partial\sigma} = -\frac{\partial\,\mathcal{F}(q(\cdot\,;\mu,\sigma))}{\partial\sigma} = 0$$

Result: A Global Approximation
• Learning rules for the expected reward probability and the uncertainty about it
• Sequential learning algorithm
[Figure: true posterior compared with the Laplace approximation and the variational Bayes approximation.]

VB for Bayesian Model Selection
• $p(m\mid X) = \dfrac{p(X\mid m)\,p(m)}{p(X)} \propto p(X\mid m)\,p(m)$
• Hence, if $p(m)$ is uniform, $p(m\mid X) \propto p(X\mid m)$.
• Problem:
  – $p(X\mid m) = \int p(\theta\mid m)\,p(X\mid\theta,m)\,d\theta$ is intractable.
• Solution:
  – $\ln p(X\mid m) = \mathcal{F}(q) + \mathrm{KL}[q\,\|\,p(\theta\mid X,m)]$
  – $\ln p(X\mid m) \approx \max_{q\in T}\mathcal{F}(q)$, a lower bound because the KL divergence is non-negative
• Justification:
  – If $p(\theta\mid X,m) \in T$, then $q_{\max}(\theta) = p(\theta\mid X,m)$
  – ⇒ $\mathrm{KL}[q_{\max}\,\|\,p(\theta\mid X,m)] = 0$ ⇒ $\mathcal{F}(q_{\max}) = \ln p(X\mid m)$

Summary
Approximate Bayesian inference → structural approximations → variational Bayes (ensemble learning) → meanfield and parametric approximations → learning rules and model selection
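The meanfield example for the bivariate Gaussian can be run as coordinate ascent. This is a minimal pure-Python sketch. It assumes the completed-square updates $q_1 = \mathcal{N}(m_1, \lambda_{11}^{-1})$ with $m_1 = \mu_1 - \lambda_{11}^{-1}\lambda_{12}(\mathbb{E}[z_2]-\mu_2)$, and symmetrically for $q_2$. The function names, the starting point, and the numbers chosen for $\mu$ and $\Lambda$ are hypothetical.

```python
def meanfield_gaussian(mu, lam, n_sweeps=50):
    """Coordinate-ascent meanfield VB for p(z1, z2) = N(mu, lam^{-1}).

    mu:  (mu1, mu2), the true mean.
    lam: ((l11, l12), (l12, l22)), a symmetric positive-definite precision matrix.
    Returns ((m1, var1), (m2, var2)), the means and variances of q1 and q2.
    """
    mu1, mu2 = mu
    (l11, l12), (_, l22) = lam
    if l11 <= 0 or l11 * l22 - l12 * l12 <= 0:
        raise ValueError("lam must be positive definite")
    m1, m2 = 0.0, 0.0  # arbitrary starting means for the two factors
    for _ in range(n_sweeps):
        # Update q1 using the current mean E[z2] = m2 of q2.
        m1 = mu1 - (l12 / l11) * (m2 - mu2)
        # Update q2 using the freshly updated mean E[z1] = m1 of q1.
        m2 = mu2 - (l12 / l22) * (m1 - mu1)
    # The factor variances are fixed by the diagonal of the precision matrix.
    return (m1, 1.0 / l11), (m2, 1.0 / l22)


def true_marginal_variances(lam):
    """Diagonal of lam^{-1} for a 2x2 precision matrix."""
    (l11, l12), (_, l22) = lam
    det = l11 * l22 - l12 * l12
    return l22 / det, l11 / det


mu = (1.0, -1.0)
lam = ((2.0, 1.5), (1.5, 2.0))  # hypothetical, strongly correlated example
(m1, v1), (m2, v2) = meanfield_gaussian(mu, lam)
print(m1, m2)                               # close to 1.0 and -1.0
print(v1, true_marginal_variances(lam)[0])  # 0.5 vs. about 1.143
```

The factor means converge to the true means. Each factor's variance $1/\lambda_{jj}$ is smaller than the true marginal variance whenever $\lambda_{12} \neq 0$. This is the compactness of the VB approximation noted in the meanfield example.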